Implementation of the paper YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

MS COCO

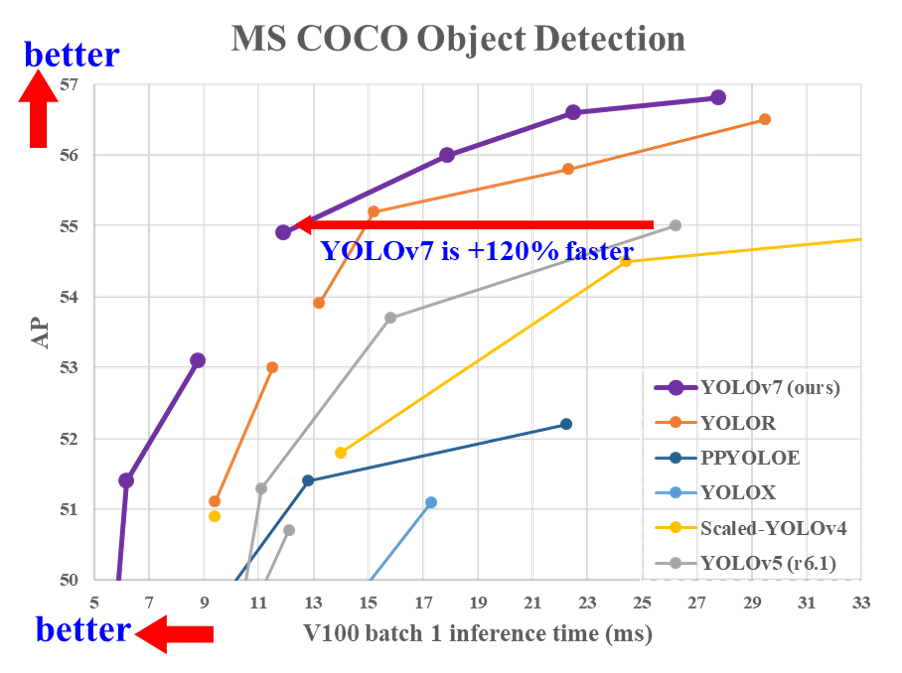

| Model | Test size | AP test | AP50 test | AP75 test | Batch 1 fps | Batch 32 average time |
|---|---|---|---|---|---|---|
| YOLOv7 | 640 | 51.4% | 69.7% | 55.9% | 161 fps | 2.8 ms |
| YOLOv7-X | 640 | 53.1% | 71.2% | 57.8% | 114 fps | 4.3 ms |
| YOLOv7-W6 | 1280 | 54.9% | 72.6% | 60.1% | 84 fps | 7.6 ms |
| YOLOv7-E6 | 1280 | 56.0% | 73.5% | 61.2% | 56 fps | 12.3 ms |
| YOLOv7-D6 | 1280 | 56.6% | 74.0% | 61.8% | 44 fps | 15.0 ms |
| YOLOv7-E6E | 1280 | 56.8% | 74.4% | 62.1% | 36 fps | 18.7 ms |
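The two speed columns in the table above use different units, so they are easier to compare after conversion. The sketch below converts batch-1 fps to milliseconds per image and the batch-32 time to images per second. It assumes the batch-32 figure is an average time per image, which the table does not state explicitly; the helper names are illustrative only.

```python
# Speed figures copied from the table above: (model, batch-1 fps, batch-32 average time in ms).
ROWS = [
    ("YOLOv7", 161, 2.8),
    ("YOLOv7-X", 114, 4.3),
    ("YOLOv7-W6", 84, 7.6),
    ("YOLOv7-E6", 56, 12.3),
    ("YOLOv7-D6", 44, 15.0),
    ("YOLOv7-E6E", 36, 18.7),
]


def ms_per_image(fps: float) -> float:
    """Latency in milliseconds for one image at the given frames per second."""
    return 1000.0 / fps


def images_per_second(ms: float) -> float:
    """Throughput, assuming `ms` is the average time spent per image."""
    return 1000.0 / ms


if __name__ == "__main__":
    for model, fps, batch32_ms in ROWS:
        print(
            f"{model:11s} batch 1: {ms_per_image(fps):5.2f} ms/img   "
            f"batch 32: {images_per_second(batch32_ms):6.1f} img/s"
        )
```

Under that assumption, batching roughly doubles throughput for every model in the table.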

Docker environment (recommended)

# create the docker container; you can increase the shared memory size if you have more.

nvidia-docker run --name yolov7 -it -v your_coco_path/:/coco/ -v your_code_path/:/yolov7 --shm-size=64g nvcr.io/nvidia/pytorch:21.08-py3

# apt install required packages

apt update

apt install -y zip htop screen libgl1-mesa-glx

# pip install required packages

pip install seaborn thop

# go to code folder

cd /yolov7

yolov7.pt yolov7x.pt yolov7-w6.pt yolov7-e6.pt yolov7-d6.pt yolov7-e6e.pt

python test.py --data data/coco.yaml --img 640 --batch 32 --conf 0.001 --iou 0.65 --device 0 --weights yolov7.pt --name yolov7_640_val

You will get the results:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.51206

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.69730

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.55521

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.35247

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.55937

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.66693

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.38453

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.63765

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.68772

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.53766

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.73549

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.83868
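When comparing runs it can help to turn this summary into a dictionary instead of reading it by eye. The following is a minimal sketch that parses lines of the form shown above with a regular expression; the function name and the key layout are illustrative choices, not part of the repository. Note that this run evaluates on the validation split (the run name is `yolov7_640_val`), so its AP of 0.51206 is not expected to match the test-set AP in the table exactly.

```python
import re
from typing import Dict, Tuple

# Matches one summary line, tolerating the variable spacing inside the brackets.
LINE_RE = re.compile(
    r"Average (?:Precision|Recall)\s+\((AP|AR)\)\s+@\[\s*IoU=([\d.:]+)\s*\|"
    r"\s*area=\s*(\w+)\s*\|\s*maxDets=\s*(\d+)\s*\]\s*=\s*(-?[\d.]+)"
)

MetricKey = Tuple[str, str, str, int]  # (AP or AR, IoU range, area, maxDets)


def parse_coco_summary(text: str) -> Dict[MetricKey, float]:
    """Return {(kind, iou, area, max_dets): value} for every summary line in `text`."""
    metrics: Dict[MetricKey, float] = {}
    for match in LINE_RE.finditer(text):
        kind, iou, area, max_dets, value = match.groups()
        metrics[(kind, iou, area, int(max_dets))] = float(value)
    return metrics


if __name__ == "__main__":
    sample = (
        "Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.51206\n"
        "Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.83868\n"
    )
    for key, value in parse_coco_summary(sample).items():
        print(key, value)
```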

To measure accuracy, download the COCO annotations for pycocotools to ./coco/annotations/instances_val2017.json.
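A missing or truncated annotation file usually only shows up at the end of a long evaluation run, so a quick check beforehand can save time. The sketch below uses only the standard library and verifies that the file exists and contains the three top-level keys of the COCO detection format (`images`, `annotations`, `categories`). The function name is hypothetical and the check is deliberately shallow.

```python
import json
from pathlib import Path
from typing import Dict

REQUIRED_KEYS = ("images", "annotations", "categories")


def check_coco_annotations(path: str) -> Dict[str, int]:
    """Load a COCO-style annotation file and return the entry count per top-level key."""
    file_path = Path(path)
    if not file_path.is_file():
        raise FileNotFoundError(f"annotation file not found: {file_path}")
    with file_path.open("r", encoding="utf-8") as handle:
        data = json.load(handle)
    missing = [key for key in REQUIRED_KEYS if key not in data]
    if missing:
        raise ValueError(f"{file_path} is missing keys: {missing}")
    return {key: len(data[key]) for key in REQUIRED_KEYS}
```

For example, `check_coco_annotations("./coco/annotations/instances_val2017.json")` returns the number of images, annotations, and categories, or raises if the download is incomplete.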

Data preparation

bash scripts/get_coco.sh

It is recommended to delete train2017.cache and val2017.cache and to re-download the labels.

Single GPU training

# train p5 models

python train.py --workers 8 --device 0 --batch-size 32 --data data/coco.yaml --img 640 640 --cfg cfg/training/yolov7.yaml --weights '' --name yolov7 --hyp data/hyp.scratch.p5.yaml

# train p6 models

python train_aux.py --workers 8 --device 0 --batch-size 16 --data data/coco.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6.yaml --weights '' --name yolov7-w6 --hyp data/hyp.scratch.p6.yaml

Multiple GPU training

# train p5 models

python -m torch.distributed.launch --nproc_per_node 4 --master_port 9527 train.py --workers 8 --device 0,1,2,3 --sync-bn --batch-size 128 --data data/coco.yaml --img 640 640 --cfg cfg/training/yolov7.yaml --weights '' --name yolov7 --hyp data/hyp.scratch.p5.yaml

# train p6 models

python -m torch.distributed.launch --nproc_per_node 8 --master_port 9527 train_aux.py --workers 8 --device 0,1,2,3,4,5,6,7 --sync-bn --batch-size 128 --data data/coco.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6.yaml --weights '' --name yolov7-w6 --hyp data/hyp.scratch.p6.yaml

yolov7_training.pt yolov7x_training.pt yolov7-w6_training.pt yolov7-e6_training.pt yolov7-d6_training.pt yolov7-e6e_training.pt
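The training commands in this README follow one pattern: p5 models use `train.py` at 640 with `hyp.scratch.p5.yaml`, while p6 models use `train_aux.py` at 1280 with `hyp.scratch.p6.yaml`. The sketch below captures that pairing as a lookup. Only `yolov7` (p5) and `yolov7-w6` (p6) appear in the commands; the grouping of the other models is an assumption based on their test sizes in the table, and the `cfg/training/<model>.yaml` path is likewise assumed to follow the same naming for every model. The `_training.pt` names match the weight list above.

```python
from typing import Dict, Union

# Assumed grouping: 640-pixel models are p5, 1280-pixel models are p6.
P5_MODELS = ("yolov7", "yolov7x")
P6_MODELS = ("yolov7-w6", "yolov7-e6", "yolov7-d6", "yolov7-e6e")


def training_recipe(model: str) -> Dict[str, Union[str, int]]:
    """Return the script, image size, and file names that go with a model name."""
    if model in P5_MODELS:
        script, img_size, hyp = "train.py", 640, "data/hyp.scratch.p5.yaml"
    elif model in P6_MODELS:
        script, img_size, hyp = "train_aux.py", 1280, "data/hyp.scratch.p6.yaml"
    else:
        raise ValueError(f"unknown model: {model!r}")
    return {
        "script": script,
        "img_size": img_size,
        "hyp": hyp,
        "cfg": f"cfg/training/{model}.yaml",  # assumed naming pattern
        "finetune_weights": f"{model}_training.pt",
    }


if __name__ == "__main__":
    for name in P5_MODELS + P6_MODELS:
        print(name, training_recipe(name))
```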

Single GPU finetuning for custom dataset

# finetune p5 models

python train.py --workers 8 --device 0 --batch-size 32 --data data/custom.yaml --img 640 640 --cfg cfg/training/yolov7-custom.yaml --weights 'yolov7_training.pt' --name yolov7-custom --hyp data/hyp.scratch.custom.yaml

# finetune p6 models

python train_aux.py --workers 8 --device 0 --batch-size 16 --data data/custom.yaml --img 1280 1280 --cfg cfg/training/yolov7-w6-custom.yaml --weights 'yolov7-w6_training.pt' --name yolov7-w6-custom --hyp data/hyp.scratch.custom.yaml

See reparameterization.ipynb.

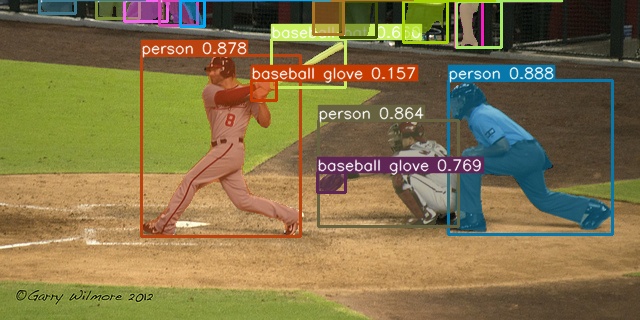

On video:

python detect.py --weights yolov7.pt --conf 0.25 --img-size 640 --source yourvideo.mp4

On image:

python detect.py --weights yolov7.pt --conf 0.25 --img-size 640 --source inference/images/horses.jpg
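The inference commands use `--conf 0.25`, whereas the evaluation command uses `--conf 0.001`: evaluation keeps almost every candidate box so that the precision-recall curve can be computed, while inference shows only confident detections. The sketch below illustrates how a confidence threshold and an IoU threshold interact in greedy non-maximum suppression. It is a plain-Python, single-class illustration with hypothetical names, not the repository's implementation.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]            # (box, confidence)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_and_nms(dets: List[Detection], iou_thres: float, conf_thres: float = 0.25) -> List[Detection]:
    """Drop boxes below conf_thres, then greedily keep the highest-scoring non-overlapping boxes."""
    candidates = sorted((d for d in dets if d[1] >= conf_thres), key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for box, score in candidates:
        # Keep the box only if it does not overlap too much with any box already kept.
        if all(iou(box, kept_box) <= iou_thres for kept_box, _ in kept):
            kept.append((box, score))
    return kept
```

Lowering `conf_thres` lets more low-score boxes survive, and raising `iou_thres` tolerates more overlap between the boxes that are kept.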

PyTorch to CoreML (and inference on macOS/iOS)

PyTorch to ONNX with NMS (and inference)

python export.py --weights yolov7-tiny.pt --grid --end2end --simplify --topk-all 100 --iou-thres 0.65 --conf-thres 0.35 --img-size 640 640 --max-wh 640

PyTorch to TensorRT with NMS (and inference)

wget https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-tiny.pt

python export.py --weights ./yolov7-tiny.pt --grid --end2end --simplify --topk-all 100 --iou-thres 0.65 --conf-thres 0.35 --img-size 640 640

git clone https://github.com/Linaom1214/tensorrt-python.git

python ./tensorrt-python/export.py -o yolov7-tiny.onnx -e yolov7-tiny-nms.trt -p fp16

PyTorch to TensorRT another way

wget https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-tiny.pt

python export.py --weights yolov7-tiny.pt --grid --include-nms

git clone https://github.com/Linaom1214/tensorrt-python.git

python ./tensorrt-python/export.py -o yolov7-tiny.onnx -e yolov7-tiny-nms.trt -p fp16

# Or use trtexec to convert ONNX to TensorRT engine

/usr/src/tensorrt/bin/trtexec --onnx=yolov7-tiny.onnx --saveEngine=yolov7-tiny-nms.trt --fp16

Tested with: Python 3.7.13, PyTorch 1.12.0+cu113

code yolov7-w6-pose.pt

See keypoint.ipynb.

code yolov7-mask.pt

See instance.ipynb.

code yolov7-seg.pt

YOLOv7 for instance segmentation (YOLOR + YOLOv5 + YOLACT)

| Model | Test size | AP box | AP50 box | AP75 box | AP mask | AP50 mask | AP75 mask |
|---|---|---|---|---|---|---|---|
| YOLOv7-seg | 640 | 51.4% | 69.4% | 55.8% | 41.5% | 65.5% | 43.7% |

code yolov7-u6.pt

YOLOv7 with decoupled TAL head (YOLOR + YOLOv5 + YOLOv6)

| Model | Test size | AP val | AP50 val | AP75 val |
|---|---|---|---|---|
| YOLOv7-u6 | 640 | 52.6% | 69.7% | 57.3% |

@inproceedings{wang2023yolov7,

title={{YOLOv7}: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors},

author={Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2023}

}

@article{wang2023designing,

title={Designing Network Design Strategies Through Gradient Path Analysis},

author={Wang, Chien-Yao and Liao, Hong-Yuan Mark and Yeh, I-Hau},

journal={Journal of Information Science and Engineering},

year={2023}

}

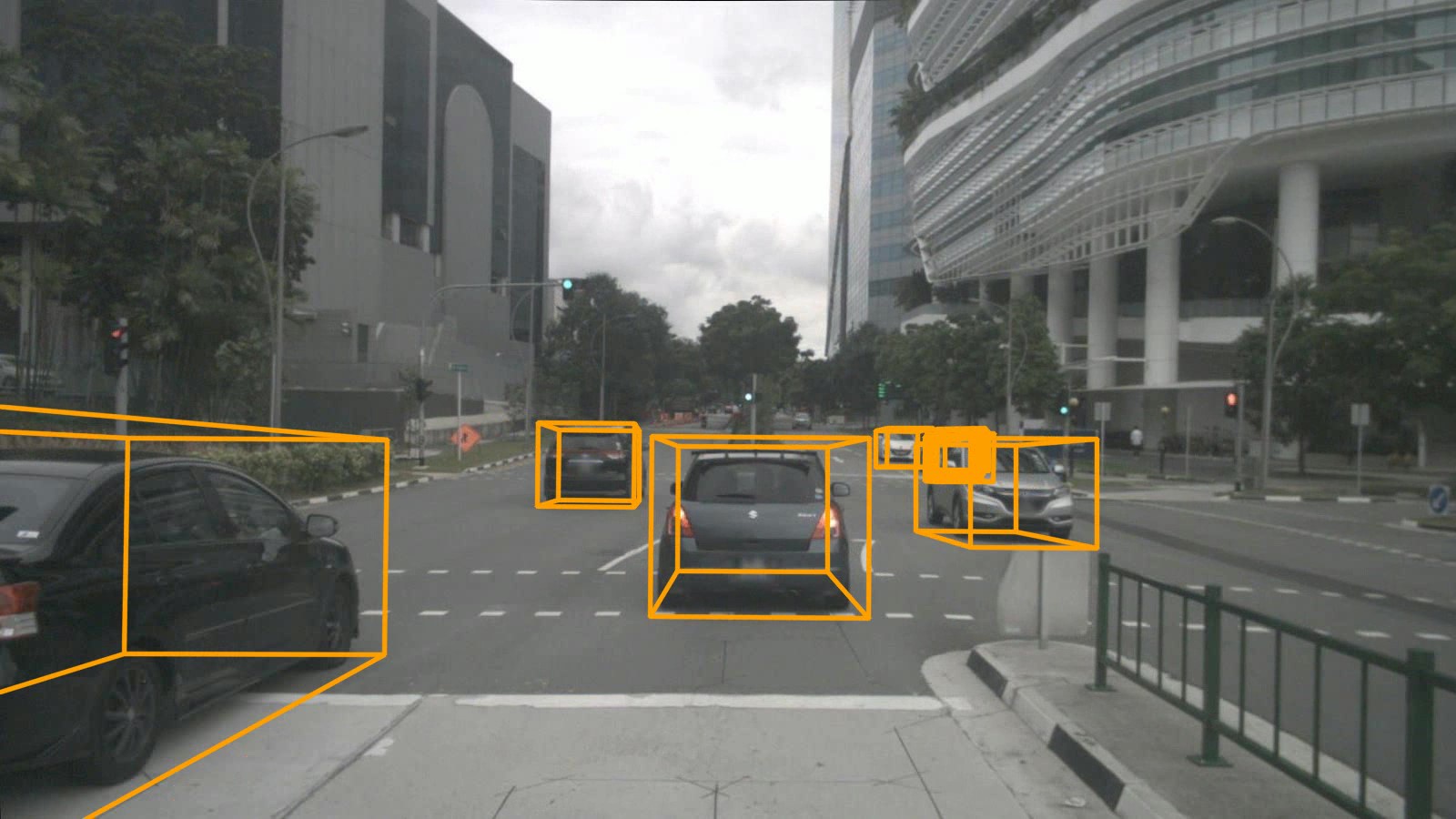

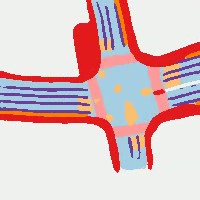

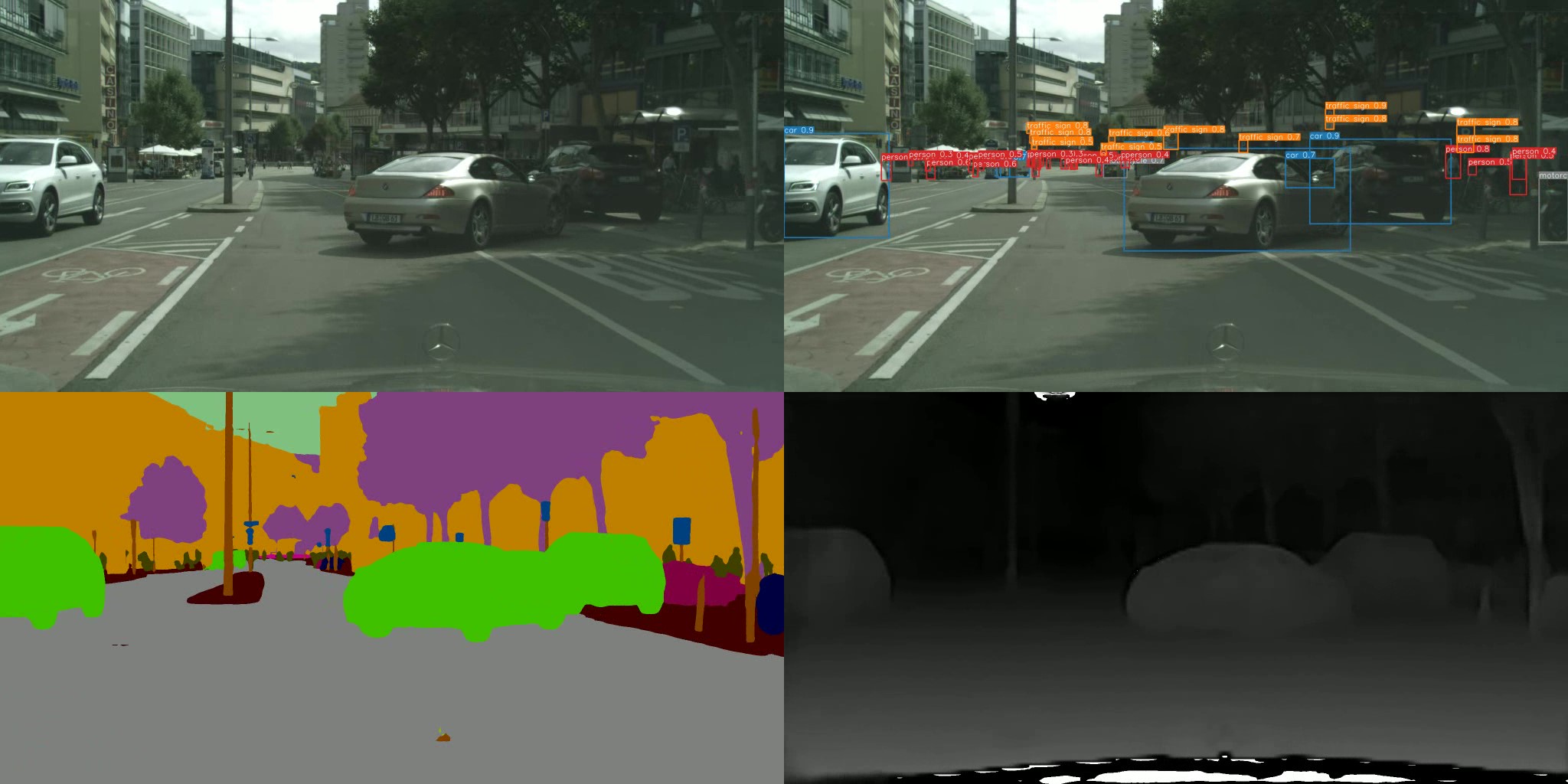

YOLOv7-semantic & YOLOv7-panoptic & YOLOv7-caption

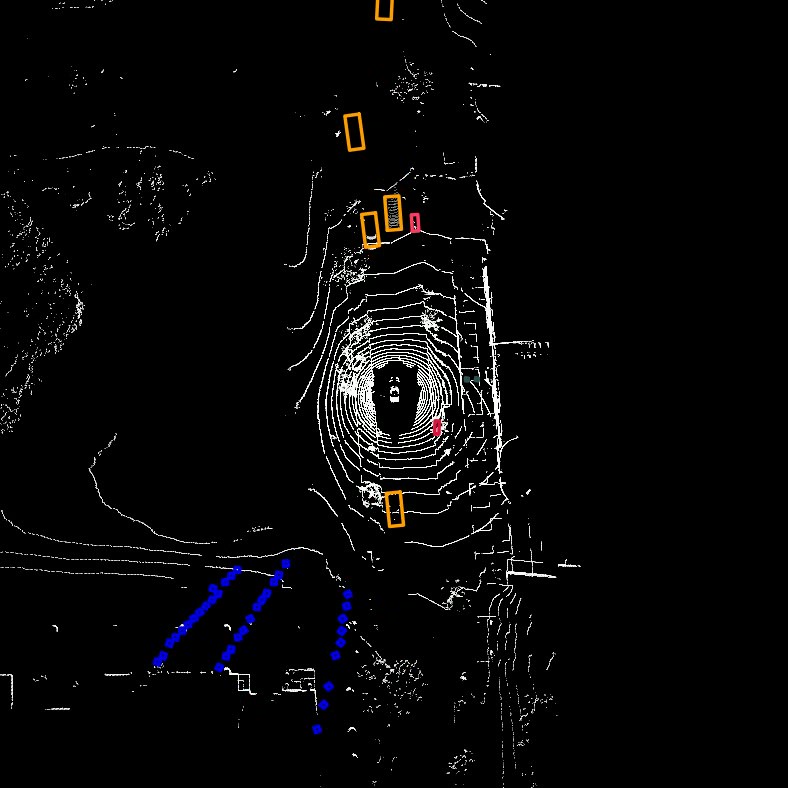

YOLOv7-semantic & YOLOv7-detection & YOLOv7-depth (with NTUT)

YOLOv7-3D-detection & YOLOv7-lidar & YOLOv7-road (with NTUT)