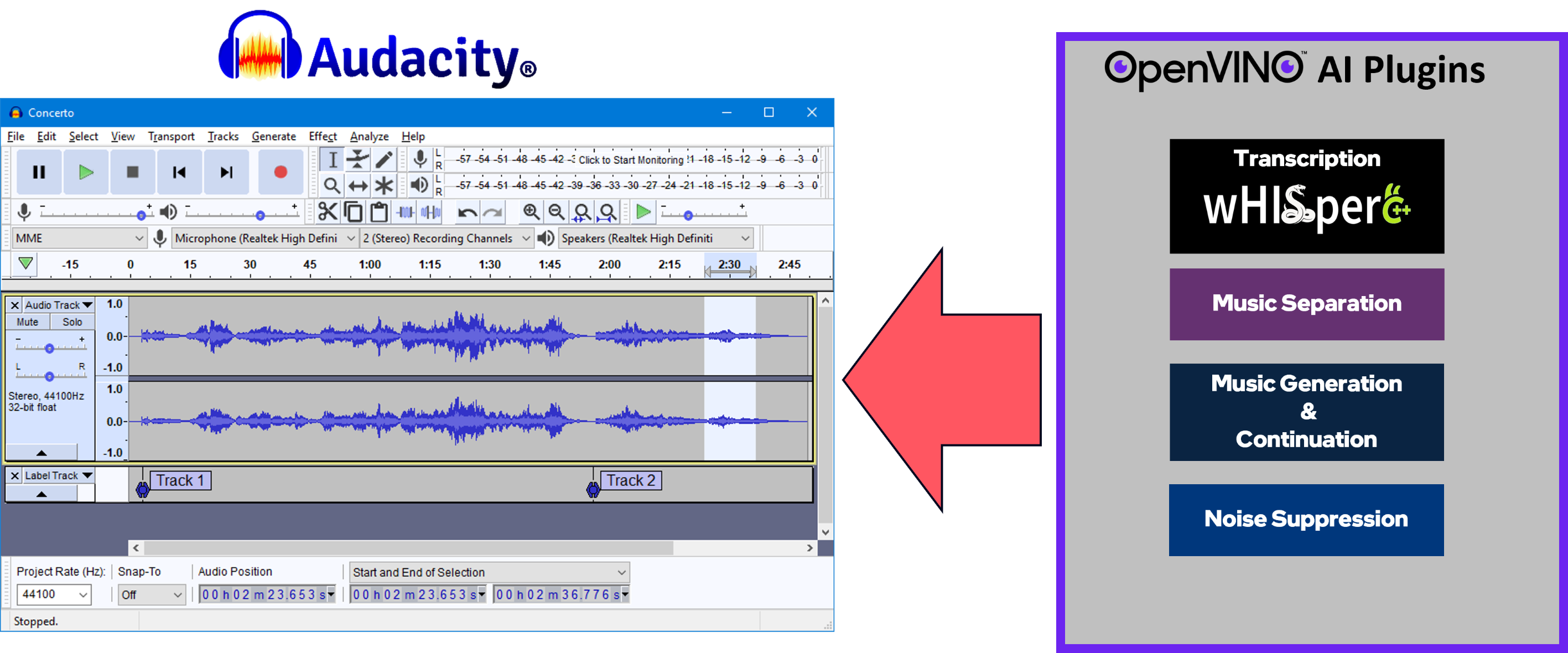

A set of AI-enabled effects, generators, and analyzers for Audacity®. These AI features run 100% locally on your PC -- no internet connection necessary! OpenVINO™ is used to run AI models on supported accelerators found on the user's system, such as CPU, GPU, and NPU.

Music Separation -- Separates a mono or stereo track into individual stems: Drums, Bass, Vocals, & Other Instruments.

Noise Suppression -- Removes background noise from an audio sample.

Music Generation & Continuation -- Uses the MusicGen LLM to generate snippets of music, or to generate a continuation of an existing snippet of music.

Whisper Transcription -- Uses whisper.cpp to generate a label track containing the transcription or translation for a given selection of spoken audio or vocals.

Go here to find installation packages & instructions for the latest Windows release.

Windows Build Instructions

Linux Build Instructions

We welcome you to submit an issue here for questions, bug reports, feature requests, or feedback of any kind -- how can we improve this project?

Your contributions are welcome and valued, no matter how big or small. Feel free to submit a pull request!

Audacity® development team & Muse Group -- Thank you for your support!

Audacity® GitHub -- https://github.com/audacity/audacity

Whisper transcription & translation analyzer uses whisper.cpp (with OpenVINO™ backend): https://github.com/ggerganov/whisper.cpp

Music Generation & Continuation use the MusicGen model from Meta.

We currently support MusicGen-Small and MusicGen-Small-Stereo.

The text-to-music pipelines were ported from Python to C++, referencing logic from the Hugging Face transformers project: https://github.com/huggingface/transformers

The Music Separation effect uses Meta's Demucs v4 model (https://github.com/facebookresearch/demucs), which has been ported to work with OpenVINO™.

Noise Suppression:

noise-suppression-denseunet-ll: from OpenVINO™'s Open Model Zoo: https://github.com/openvinotoolkit/open_model_zoo

DeepFilterNet2 & DeepFilterNet3:

Ported the models & pipeline from here: https://github.com/Rikorose/DeepFilterNet

We also made use of @grazder's fork / branch (https://github.com/grazder/DeepFilterNet/tree/torchDF-changes) to better understand the Rust implementation, and we based parts of our C++ implementation on the torch_df_offline.py script found in that branch.

Citations:

@inproceedings{schroeter2022deepfilternet2,
  title = {{DeepFilterNet2}: Towards Real-Time Speech Enhancement on Embedded Devices for Full-Band Audio},
  author = {Schröter, Hendrik and Escalante-B., Alberto N. and Rosenkranz, Tobias and Maier, Andreas},
  booktitle = {17th International Workshop on Acoustic Signal Enhancement (IWAENC 2022)},
  year = {2022},
}

@inproceedings{schroeter2023deepfilternet3,
  title = {{DeepFilterNet}: Perceptually Motivated Real-Time Speech Enhancement},
  author = {Schröter, Hendrik and Rosenkranz, Tobias and Escalante-B., Alberto N. and Maier, Andreas},
  booktitle = {INTERSPEECH},
  year = {2023},
}

OpenVINO™ Notebooks -- We have learned a lot from this awesome set of Python notebooks, and we still use them to learn the latest best practices for implementing AI pipelines with OpenVINO™!