After a year's relentless efforts, today we are thrilled to release Qwen2-VL! Qwen2-VL is the latest version of the vision language models in the Qwen model families.

SoTA understanding of images of various resolution & ratio: Qwen2-VL achieves state-of-the-art performance on visual understanding benchmarks, including MathVista, DocVQA, RealWorldQA, MTVQA, etc.

Understanding videos of 20min+: with its online streaming capabilities, Qwen2-VL can understand videos over 20 minutes long, enabling high-quality video-based question answering, dialog, content creation, etc.

Agent that can operate your mobiles, robots, etc.: with the abilities of complex reasoning and decision making, Qwen2-VL can be integrated with devices like mobile phones, robots, etc., for automatic operation based on visual environment and text instructions.

Multilingual Support: to serve global users, besides English and Chinese, Qwen2-VL now supports the understanding of texts in different languages inside images, including most European languages, Japanese, Korean, Arabic, Vietnamese, etc.

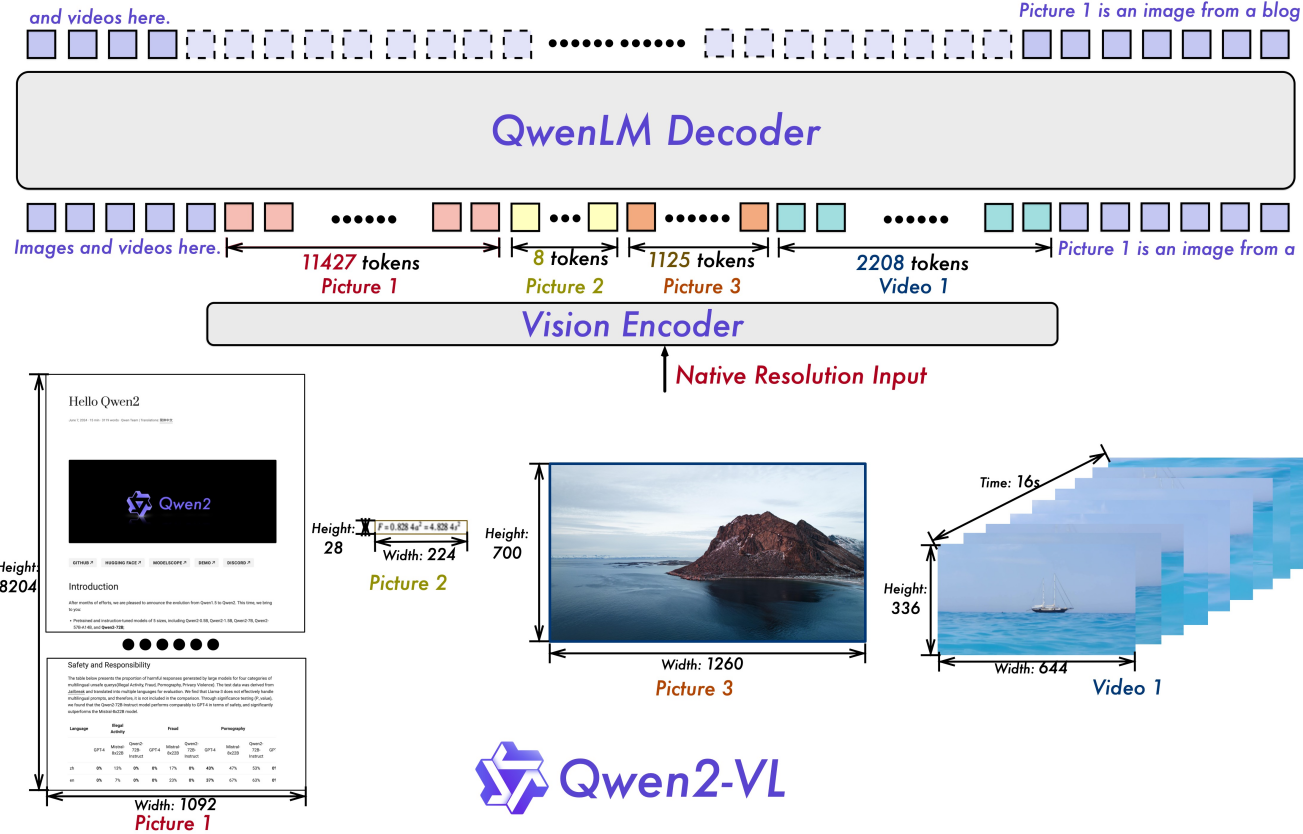

Naive Dynamic Resolution: Unlike before, Qwen2-VL can handle arbitrary image resolutions, mapping them into a dynamic number of visual tokens, offering a more human-like visual processing experience.
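As a rough illustration of how a resolution maps to a token count: the usage notes later in this README equate a budget of 256 visual tokens with `256 * 28 * 28` pixels, which suggests that each visual token covers roughly a 28x28-pixel area. The sketch below estimates a token count under that assumption. `estimate_visual_tokens` is a hypothetical helper, not part of any Qwen library, and it ignores the minimum and maximum pixel limits.

```python
PIXELS_PER_TOKEN_SIDE = 28  # assumption inferred from the 256 * 28 * 28 pixel budget


def estimate_visual_tokens(height: int, width: int, side: int = PIXELS_PER_TOKEN_SIDE) -> int:
    """Estimate the visual token count after rounding each side to a multiple of `side`."""
    rows = max(1, round(height / side))
    cols = max(1, round(width / side))
    return rows * cols


if __name__ == "__main__":
    for h, w in [(224, 224), (448, 448), (280, 420)]:
        print(f"{h}x{w} -> ~{estimate_visual_tokens(h, w)} visual tokens")
```

Under this assumption, larger or differently shaped images simply yield more or fewer tokens rather than being forced into one fixed grid.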

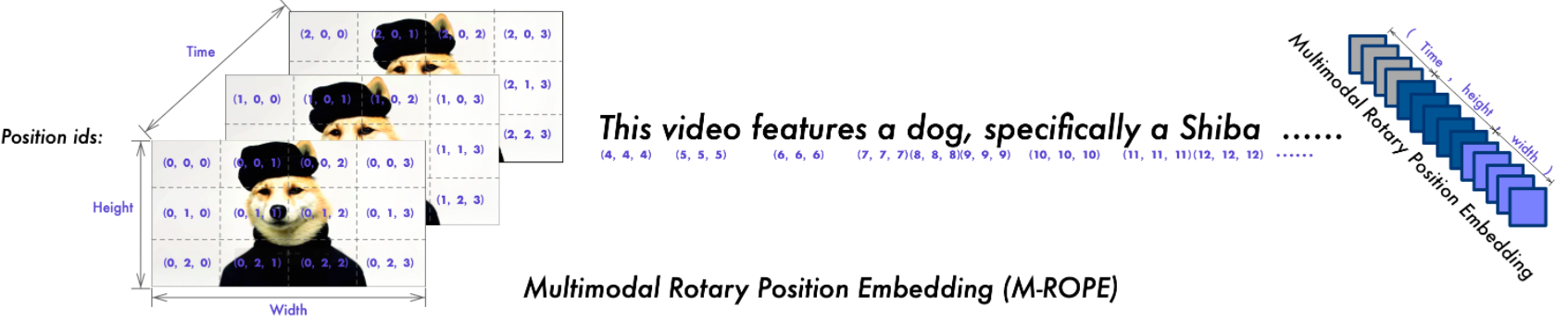

Multimodal Rotary Position Embedding (M-ROPE): Decomposes positional embedding into parts to capture 1D textual, 2D visual, and 3D video positional information, enhancing its multimodal processing capabilities.
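To make the decomposition concrete, here is a toy sketch of how three-part position IDs (temporal, height, width) could be assigned. It assumes, following the description in the Qwen2-VL paper, that text tokens share one index across all three parts, that an image keeps a constant temporal index while height and width indices follow the patch grid, that a video advances the temporal index per frame, and that each new segment starts one past the largest index used so far. All function names are hypothetical and this is not the model's implementation.

```python
from typing import List, Tuple

PositionId = Tuple[int, int, int]  # (temporal, height, width)


def text_position_ids(start: int, length: int) -> List[PositionId]:
    """Text tokens: all three components carry the same 1D index."""
    return [(start + i, start + i, start + i) for i in range(length)]


def visual_position_ids(start: int, frames: int, rows: int, cols: int) -> List[PositionId]:
    """Image (frames=1) or video (frames>1): one ID triple per grid cell."""
    return [
        (start + t, start + r, start + c)
        for t in range(frames)
        for r in range(rows)
        for c in range(cols)
    ]


def next_start(ids: List[PositionId]) -> int:
    """The next segment begins one past the largest index used so far."""
    return max(max(p) for p in ids) + 1


if __name__ == "__main__":
    prefix = text_position_ids(0, 3)
    image = visual_position_ids(next_start(prefix), frames=1, rows=2, cols=2)
    suffix = text_position_ids(next_start(image), 2)
    for name, ids in [("text", prefix), ("image", image), ("text", suffix)]:
        print(name, ids)
```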

We have open-sourced Qwen2-VL models, including Qwen2-VL-2B and Qwen2-VL-7B under the Apache 2.0 license, as well as Qwen2-VL-72B under the Qwen license. These models are now integrated with Hugging Face Transformers, vLLM, and other third-party frameworks. We hope you enjoy using them!

2024.09.19: The instruction-tuned Qwen2-VL-72B model and its quantized versions (AWQ, GPTQ-Int4, GPTQ-Int8) are now available. We have also released the Qwen2-VL paper.

2024.08.30: We have released the Qwen2-VL series. The 2B and 7B models are now available, and the open-source 72B model is coming soon. For more details, please check our blog!

| Benchmark | Previous SoTA (Open-source LVLM) | Claude-3.5 Sonnet | GPT-4o | Qwen2-VL-72B | Qwen2-VL-7B | Qwen2-VL-2B |
|---|---|---|---|---|---|---|
| MMMU (val) | 58.3 | 68.3 | 69.1 | 64.5 | 54.1 | 41.1 |
| MMMU-Pro | 46.9 | 51.5 | 51.9 | 46.2 | 43.5 | 37.6 |
| DocVQA (test) | 94.1 | 95.2 | 92.8 | 96.5 | 94.5 | 90.1 |
| InfoVQA (test) | 82.0 | - | - | 84.5 | 76.5 | 65.5 |
| ChartQA (test) | 88.4 | 90.8 | 85.7 | 88.3 | 83.0 | 73.5 |
| TextVQA (val) | 84.4 | - | - | 85.5 | 84.3 | 79.7 |
| OCRBench | 852 | 788 | 736 | 877 | 845 | 794 |
| MTVQA | 17.3 | 25.7 | 27.8 | 30.9 | 25.6 | 18.1 |
| VCR (en, easy) | 84.67 | 63.85 | 91.55 | 91.93 | 89.70 | 81.45 |
| VCR (zh, easy) | 22.09 | 1.0 | 14.87 | 65.37 | 59.94 | 46.16 |
| RealWorldQA | 72.2 | 60.1 | 75.4 | 77.8 | 70.1 | 62.9 |
| MME (sum) | 2414.7 | 1920.0 | 2328.7 | 2482.7 | 2326.8 | 1872.0 |
| MMBench-EN (test) | 86.5 | 79.7 | 83.4 | 86.5 | 83.0 | 74.9 |
| MMBench-CN (test) | 86.3 | 80.7 | 82.1 | 86.6 | 80.5 | 73.5 |
| MMBench-V1.1 (test) | 85.5 | 78.5 | 82.2 | 85.9 | 80.7 | 72.2 |
| MMT-Bench (test) | 63.4 | - | 65.5 | 71.7 | 63.7 | 54.5 |
| MMStar | 67.1 | 62.2 | 63.9 | 68.3 | 60.7 | 48.0 |
| MMVet (GPT-4-Turbo) | 65.7 | 66.0 | 69.1 | 74.0 | 62.0 | 49.5 |
| HallBench (avg) | 55.2 | 49.9 | 55.0 | 58.1 | 50.6 | 41.7 |
| MathVista (testmini) | 67.5 | 67.7 | 63.8 | 70.5 | 58.2 | 43.0 |
| MathVision | 16.97 | - | 30.4 | 25.9 | 16.3 | 12.4 |

| Benchmark | Previous SoTA (Open-source LVLM) | Gemini 1.5-Pro | GPT-4o | Qwen2-VL-72B | Qwen2-VL-7B | Qwen2-VL-2B |
|---|---|---|---|---|---|---|
| MVBench | 69.6 | - | - | 73.6 | 67.0 | 63.2 |
| PerceptionTest (test) | 66.9 | - | - | 68.0 | 62.3 | 53.9 |
| EgoSchema (test) | 62.0 | 63.2 | 72.2 | 77.9 | 66.7 | 54.9 |
| Video-MME (wo/w subs) | 66.3/69.6 | 75.0/81.3 | 71.9/77.2 | 71.2/77.8 | 63.3/69.0 | 55.6/60.4 |

| | Benchmark | Metric | Previous SoTA | GPT-4o | Qwen2-VL-72B |
|---|---|---|---|---|---|
| General | FnCall[1] | TM | - | 90.2 | 93.1 |
| General | FnCall[1] | EM | - | 50.0 | 53.2 |
| Game | Number Line | SR | 89.4[2] | 91.5 | 100.0 |
| Game | BlackJack | SR | 40.2[2] | 34.5 | 42.6 |
| Game | EZPoint | SR | 50.0[2] | 85.5 | 100.0 |
| Game | Point24 | SR | 2.6[2] | 3.0 | 4.5 |
| Android | AITZ | TM | 83.0[3] | 70.0 | 89.6 |
| Android | AITZ | EM | 47.7[3] | 35.3 | 72.1 |
| AI2THOR | ALFRED (valid-unseen) | SR | 67.7[4] | - | 67.8 |
| AI2THOR | ALFRED (valid-unseen) | GC | 75.3[4] | - | 75.8 |
| VLN | R2R (valid-unseen) | SR | 79.0 | 43.7[5] | 51.7 |
| VLN | REVERIE (valid-unseen) | SR | 61.0 | 31.6[5] | 31.0 |

SR, GC, TM and EM are short for success rate, goal-condition success, type match and exact match. ALFRED is supported by SAM[6].

[1] Self-Curated Function Call Benchmark by Qwen Team

[2] Fine-Tuning Large Vision-Language Models as Decision-Making Agents via Reinforcement Learning

[3] Android in the Zoo: Chain-of-Action-Thought for GUI Agents

[4] ThinkBot: Embodied Instruction Following with Thought Chain Reasoning

[5] MapGPT: Map-Guided Prompting with Adaptive Path Planning for Vision-and-Language Navigation

[6] Segment Anything

| Models | AR | DE | FR | IT | JA | KO | RU | TH | VI | AVG |
|---|---|---|---|---|---|---|---|---|---|---|
| Qwen2-VL-72B | 20.7 | 36.5 | 44.1 | 42.8 | 21.6 | 37.4 | 15.6 | 17.7 | 41.6 | 30.9 |
| GPT-4o | 20.2 | 34.2 | 41.2 | 32.7 | 20.0 | 33.9 | 11.5 | 22.5 | 34.2 | 27.8 |
| Claude3 Opus | 15.1 | 33.4 | 40.6 | 34.4 | 19.4 | 27.2 | 13.0 | 19.5 | 29.1 | 25.7 |
| Gemini Ultra | 14.7 | 32.3 | 40.0 | 31.8 | 12.3 | 17.2 | 11.8 | 20.3 | 28.6 | 23.2 |

These results are from the MTVQA benchmark.
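The AVG column appears to be the unweighted mean of the nine per-language scores, rounded to one decimal place. The short sketch below recomputes it from the table values under that assumption; the variable and function names are ours, not part of the benchmark.

```python
from statistics import mean

LANGUAGES = ["AR", "DE", "FR", "IT", "JA", "KO", "RU", "TH", "VI"]

# Per-language MTVQA scores copied from the table above.
SCORES = {
    "Qwen2-VL-72B": [20.7, 36.5, 44.1, 42.8, 21.6, 37.4, 15.6, 17.7, 41.6],
    "GPT-4o": [20.2, 34.2, 41.2, 32.7, 20.0, 33.9, 11.5, 22.5, 34.2],
    "Claude3 Opus": [15.1, 33.4, 40.6, 34.4, 19.4, 27.2, 13.0, 19.5, 29.1],
    "Gemini Ultra": [14.7, 32.3, 40.0, 31.8, 12.3, 17.2, 11.8, 20.3, 28.6],
}


def average_scores(scores: dict) -> dict:
    """Return the unweighted mean per model, rounded to one decimal place."""
    return {model: round(mean(values), 1) for model, values in scores.items()}


if __name__ == "__main__":
    for model, avg in average_scores(SCORES).items():
        print(f"{model}: {avg}")
```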

Below, we provide simple examples to show how to use Qwen2-VL with ModelScope and Transformers.

The Qwen2-VL code has been merged into the latest Hugging Face Transformers. We advise you to build from source with the following command:

```bash
pip install git+https://github.com/huggingface/transformers@21fac7abba2a37fae86106f87fcf9974fd1e3830 accelerate
```

Otherwise, you might encounter the following error:

```
KeyError: 'qwen2_vl'
```

NOTE: The current latest version of Transformers has a bug when loading the Qwen2-VL config, so you need to install the specific version of Transformers shown above.
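Before loading a checkpoint, it can help to confirm that the installed Transformers build actually exposes the Qwen2-VL model class. The following is a minimal sketch of such a check; `has_qwen2_vl_support` is a hypothetical helper name, and it only tests whether `Qwen2VLForConditionalGeneration` (the class used in the examples in this README) can be resolved, not whether the config-loading bug mentioned above is present.

```python
import importlib


def has_qwen2_vl_support() -> bool:
    """Return True if the installed transformers package exposes the Qwen2-VL class."""
    try:
        transformers = importlib.import_module("transformers")
        return hasattr(transformers, "Qwen2VLForConditionalGeneration")
    except Exception:
        # transformers is missing, or resolving the class failed during import.
        return False


if __name__ == "__main__":
    if has_qwen2_vl_support():
        print("Qwen2-VL classes are available.")
    else:
        print("Qwen2-VL is not available; install transformers as described above.")
```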

We offer a toolkit to help you handle various types of visual input more conveniently, as if you were using an API. This includes base64, URLs, and interleaved images and videos. You can install it using the following command:

```bash
# It's highly recommended to use the `[decord]` feature for faster video loading.
pip install qwen-vl-utils[decord]
```

If you are not using Linux, you might not be able to install `decord` from PyPI. In that case, you can use `pip install qwen-vl-utils`, which falls back to torchvision for video processing. You can still install decord from source so that it is used when loading video.

The following code snippet shows how to use the chat model with `transformers` and `qwen_vl_utils`:

```python
import torch  # only needed for the optional flash_attention_2 variant below
from transformers import Qwen2VLForConditionalGeneration, AutoTokenizer, AutoProcessor
from qwen_vl_utils import process_vision_info

# default: Load the model on the available device(s)
model = Qwen2VLForConditionalGeneration.from_pretrained(
    "Qwen/Qwen2-VL-7B-Instruct", torch_dtype="auto", device_map="auto"
)

# We recommend enabling flash_attention_2 for better acceleration and memory saving,
# especially in multi-image and video scenarios.
# model = Qwen2VLForConditionalGeneration.from_pretrained(
#     "Qwen/Qwen2-VL-7B-Instruct",
#     torch_dtype=torch.bfloat16,
#     attn_implementation="flash_attention_2",
#     device_map="auto",
# )

# default processor
processor = AutoProcessor.from_pretrained("Qwen/Qwen2-VL-7B-Instruct")

# The default range for the number of visual tokens per image in the model is 4-16384.
# You can set min_pixels and max_pixels according to your needs, such as a token range
# of 256-1280, to balance performance and cost.
# min_pixels = 256*28*28
# max_pixels = 1280*28*28
# processor = AutoProcessor.from_pretrained("Qwen/Qwen2-VL-7B-Instruct", min_pixels=min_pixels, max_pixels=max_pixels)

messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "image",
                "image": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-VL/assets/demo.jpeg",
            },
            {"type": "text", "text": "Describe this image."},
        ],
    }
]

# Preparation for inference
text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
image_inputs, video_inputs = process_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    videos=video_inputs,
    padding=True,
    return_tensors="pt",
)
inputs = inputs.to("cuda")

# Inference: Generation of the output
generated_ids = model.generate(**inputs, max_new_tokens=128)
# Strip the prompt tokens so that only the newly generated tokens are decoded
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)
print(output_text)
```

```python
# Messages containing multiple images and a text query
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image", "image": "file:///path/to/image1.jpg"},
            {"type": "image", "image": "file:///path/to/image2.jpg"},
            {"type": "text", "text": "Identify the similarities between these images."},
        ],
    }
]

# Preparation for inference
text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
image_inputs, video_inputs = process_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    videos=video_inputs,
    padding=True,
    return_tensors="pt",
)
inputs = inputs.to("cuda")

# Inference
generated_ids = model.generate(**inputs, max_new_tokens=128)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)
print(output_text)
```

```python
# Messages containing a list of images as a video and a text query
messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "video",
                "video": [
                    "file:///path/to/frame1.jpg",
                    "file:///path/to/frame2.jpg",
                    "file:///path/to/frame3.jpg",
                    "file:///path/to/frame4.jpg",
                ],
            },
            {"type": "text", "text": "Describe this video."},
        ],
    }
]

# Messages containing a local video path and a text query
messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "video",
                "video": "file:///path/to/video1.mp4",
                "max_pixels": 360 * 420,
                "fps": 1.0,
            },
            {"type": "text", "text": "Describe this video."},
        ],
    }
]

# Messages containing a video url and a text query
messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "video",
                "video": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-VL/space_woaudio.mp4",
            },
            {"type": "text", "text": "Describe this video."},
        ],
    }
]

# Preparation for inference
text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
image_inputs, video_inputs = process_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    videos=video_inputs,
    padding=True,
    return_tensors="pt",
)
inputs = inputs.to("cuda")

# Inference
generated_ids = model.generate(**inputs, max_new_tokens=128)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)
print(output_text)
```

Video URL compatibility largely depends on the third-party library version. The details are in the table below. If you prefer not to use the default backend, change it by setting `FORCE_QWENVL_VIDEO_READER=torchvision` or `FORCE_QWENVL_VIDEO_READER=decord`.

| Backend | HTTP | HTTPS |
|---|---|---|
| torchvision >= 0.19.0 | ✅ | ✅ |
| torchvision < 0.19.0 | ❌ | ❌ |
| decord | ✅ | ❌ |
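The compatibility table can be turned into a small selection routine. The sketch below is one possible way to pick a backend for a given video source and export it through `FORCE_QWENVL_VIDEO_READER`. The function name, its arguments, and the preference for decord on local files are our own assumptions for illustration, and we assume the variable must be set before the video is loaded.

```python
import os
from typing import Optional, Tuple
from urllib.parse import urlparse


def choose_video_reader(
    video: str, torchvision_version: Tuple[int, int], has_decord: bool
) -> Optional[str]:
    """Pick a backend that can read `video` according to the table above.

    Returns None when no listed backend supports the URL scheme.
    """
    scheme = urlparse(video).scheme
    new_torchvision = torchvision_version >= (0, 19)
    if scheme == "https":
        return "torchvision" if new_torchvision else None
    if scheme == "http":
        if new_torchvision:
            return "torchvision"
        return "decord" if has_decord else None
    # Local paths: prefer decord when available (assumption: it loads faster).
    return "decord" if has_decord else "torchvision"


if __name__ == "__main__":
    backend = choose_video_reader("https://example.com/clip.mp4", (0, 19), has_decord=True)
    if backend is not None:
        os.environ["FORCE_QWENVL_VIDEO_READER"] = backend
    print(backend)
```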

```python
# Sample messages for batch inference
messages1 = [
    {
        "role": "user",
        "content": [
            {"type": "image", "image": "file:///path/to/image1.jpg"},
            {"type": "image", "image": "file:///path/to/image2.jpg"},
            {"type": "text", "text": "What are the common elements in these pictures?"},
        ],
    }
]
messages2 = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Who are you?"},
]

# Combine messages for batch processing
messages = [messages1, messages2]

# Preparation for batch inference
texts = [
    processor.apply_chat_template(msg, tokenize=False, add_generation_prompt=True)
    for msg in messages
]
image_inputs, video_inputs = process_vision_info(messages)
inputs = processor(
    text=texts,
    images=image_inputs,
    videos=video_inputs,
    padding=True,
    return_tensors="pt",
)
inputs = inputs.to("cuda")

# Batch Inference
generated_ids = model.generate(**inputs, max_new_tokens=128)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_texts = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)
print(output_texts)
```

We strongly advise users, especially those in mainland China, to use ModelScope. `snapshot_download` can help you solve issues concerning downloading checkpoints.

For input images, we support local files, base64, and URLs. For videos, we currently only support local files.
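For the base64 case, a local image can be converted into the `data:image;base64,...` form used in the message examples in this README. The helpers below are a minimal sketch using only the standard library. Their names are hypothetical and they are not part of `qwen_vl_utils`.

```python
import base64
from pathlib import Path


def image_bytes_to_data_uri(data: bytes) -> str:
    """Encode raw image bytes as a `data:image;base64,...` string."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:image;base64,{encoded}"


def image_file_to_data_uri(path: str) -> str:
    """Read an image file from disk and encode it as a data URI."""
    return image_bytes_to_data_uri(Path(path).read_bytes())


def build_image_message(image: str, prompt: str) -> list:
    """Build a single-turn message list in the format used by the examples."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image},
                {"type": "text", "text": prompt},
            ],
        }
    ]


if __name__ == "__main__":
    # The first three bytes of a JPEG file encode to "/9j/".
    uri = image_bytes_to_data_uri(b"\xff\xd8\xff")
    print(build_image_message(uri, "Describe this image."))
```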

```python
# You can directly insert a local file path, a URL, or a base64-encoded image into the position where you want in the text.

## Local file path
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image", "image": "file:///path/to/your/image.jpg"},
            {"type": "text", "text": "Describe this image."},
        ],
    }
]

## Image URL
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image", "image": "http://path/to/your/image.jpg"},
            {"type": "text", "text": "Describe this image."},
        ],
    }
]

## Base64 encoded image
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image", "image": "data:image;base64,/9j/..."},
            {"type": "text", "text": "Describe this image."},
        ],
    }
]
```

The model supports a wide range of resolution inputs. By default, it uses the native resolution for input, but higher resolutions can enhance performance at the cost of more computation. Users can set the minimum and maximum number of pixels to achieve an optimal configuration for their needs, such as a token count range of 256-1280, to balance speed and memory usage.

```python
min_pixels = 256 * 28 * 28
max_pixels = 1280 * 28 * 28
processor = AutoProcessor.from_pretrained(
    "Qwen/Qwen2-VL-7B-Instruct", min_pixels=min_pixels, max_pixels=max_pixels
)
```

In addition, we provide two methods for fine-grained control over the image size input to the model:

1. Specify exact dimensions: Directly set `resized_height` and `resized_width`. These values will be rounded to the nearest multiple of 28.

2. Define `min_pixels` and `max_pixels`: Images will be resized to maintain their aspect ratio within the range of `min_pixels` and `max_pixels`.
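To illustrate the second method, here is a sketch of how an image size could be fitted into a pixel budget while roughly keeping its aspect ratio and snapping both sides to multiples of 28. This is our own approximation for illustration, with a hypothetical function name. The actual resizing logic in `qwen_vl_utils` and the processor may differ, and extreme aspect ratios or very tight budgets are not handled carefully here.

```python
import math
from typing import Tuple


def resize_to_pixel_budget(
    height: int, width: int, min_pixels: int, max_pixels: int, factor: int = 28
) -> Tuple[int, int]:
    """Return (height, width) snapped to multiples of `factor` within the pixel budget."""
    if min_pixels > max_pixels:
        raise ValueError("min_pixels must not exceed max_pixels")
    # Start by rounding each side to the nearest multiple of `factor`.
    new_h = max(factor, round(height / factor) * factor)
    new_w = max(factor, round(width / factor) * factor)
    if new_h * new_w > max_pixels:
        # Too large: shrink both sides by the same ratio, rounding down.
        scale = math.sqrt((height * width) / max_pixels)
        new_h = max(factor, math.floor(height / scale / factor) * factor)
        new_w = max(factor, math.floor(width / scale / factor) * factor)
    elif new_h * new_w < min_pixels:
        # Too small: enlarge both sides by the same ratio, rounding up.
        scale = math.sqrt(min_pixels / (height * width))
        new_h = math.ceil(height * scale / factor) * factor
        new_w = math.ceil(width * scale / factor) * factor
    return new_h, new_w


if __name__ == "__main__":
    budget = (256 * 28 * 28, 1280 * 28 * 28)
    for size in [(1000, 2000), (100, 100), (224, 224)]:
        print(size, "->", resize_to_pixel_budget(*size, *budget))
```

Setting `min_pixels` and `max_pixels` to the same value, as in the `50176` example in this section, narrows the budget to a single target area (50176 = 224 * 224).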

```python
# resized_height and resized_width
messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "image",
                "image": "file:///path/to/your/image.jpg",
                "resized_height": 280,
                "resized_width": 420,
            },
            {"type": "text", "text": "Describe this image."},
        ],
    }
]

# min_pixels and max_pixels
messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "image",
                "image": "file:///path/to/your/image.jpg",
                "min_pixels": 50176,
                "max_pixels": 50176,
            },
            {"type": "text", "text": "Describe this image."},
        ],
    }
]
```

By default, images and video content are directly included in the conversation. When handling multiple images, it's helpful to add labels to the images and videos for better reference. Users can control this behavior with the following settings:

```python
conversation = [
    {
        "role": "user",
        "content": [{"type": "image"}, {"type": "text", "text": "Hello, how are you?"}],
    },
    {
        "role": "assistant",
        "content": "I'm doing well, thank you for asking. How can I assist you today?",
    },
    {
        "role": "user",
        "content": [
            {"type": "text", "text": "Can you describe these images and video?"},
            {"type": "image"},
            {"type": "image"},
            {"type": "video"},
            {"type": "text", "text": "These are from my vacation."},
        ],
    },
    {
        "role": "assistant",
        "content": "I'd be happy to describe the images and video for you. Could you please provide more context about your vacation?",
    },
    {
        "role": "user",
        "content": "It was a trip to the mountains. Can you see the details in the images and video?",
    },
]

# default:
prompt_without_id = processor.apply_chat_template(conversation, add_generation_prompt=True)
# Expected output: '<|im_start|>system\nYou are a helpful assistant.<|im_end|>\n<|im_start|>user\n<|vision_start|><|image_pad|><|vision_end|>Hello, how are you?<|im_end|>\n<|im_start|>assistant\nI'm doing well, thank you for asking. How can I assist you today?<|im_end|>\n<|im_start|>user\nCan you describe these images and video?<|vision_start|><|image_pad|><|vision_end|><|vision_start|><|image_pad|><|vision_end|><|vision_start|><|video_pad|><|vision_end|>These are from my vacation.<|im_end|>\n<|im_start|>assistant\nI'd be happy to describe the images and video for you. Could you please provide more context about your vacation?<|im_end|>\n<|im_start|>user\nIt was a trip to the mountains. Can you see the details in the images and video?<|im_end|>\n<|im_start|>assistant\n'

# add ids
prompt_with_id = processor.apply_chat_template(conversation, add_generation_prompt=True, add_vision_id=True)
# Expected output: '<|im_start|>system\nYou are a helpful assistant.<|im_end|>\n<|im_start|>user\nPicture 1: <|vision_start|><|image_pad|><|vision_end|>Hello, how are you?<|im_end|>\n<|im_start|>assistant\nI'm doing well, thank you for asking. How can I assist you today?<|im_end|>\n<|im_start|>user\nCan you describe these images and video?Picture 2: <|vision_start|><|image_pad|><|vision_end|>Picture 3: <|vision_start|><|image_pad|><|vision_end|>Video 1: <|vision_start|><|video_pad|><|vision_end|>These are from my vacation.<|im_end|>\n<|im_start|>assistant\nI'd be happy to describe the images and video for you. Could you please provide more context about your vacation?<|im_end|>\n<|im_start|>user\nIt was a trip to the mountains. Can you see the details in the images and video?<|im_end|>\n<|im_start|>assistant\n'
```

First, make sure to install the latest version of FlashAttention-2:

```bash
pip install -U flash-attn --no-build-isolation
```

You also need hardware that is compatible with FlashAttention-2. Read more about it in the official documentation of the flash-attention repository. FlashAttention-2 can only be used when a model is loaded in `torch.float16` or `torch.bfloat16`.
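The two conditions above (the package is installed and the model uses a half-precision dtype) can be checked before requesting FlashAttention-2. The sketch below is one hedged way to do that. `attention_kwargs` is a hypothetical helper that returns extra keyword arguments for `from_pretrained`, and it does not verify GPU compatibility.

```python
import importlib.util

HALF_PRECISION_DTYPES = {"float16", "bfloat16"}


def attention_kwargs(dtype_name: str) -> dict:
    """Return {'attn_implementation': 'flash_attention_2'} only when it looks usable.

    `dtype_name` is the torch dtype name without the `torch.` prefix.
    """
    has_flash_attn = importlib.util.find_spec("flash_attn") is not None
    if has_flash_attn and dtype_name in HALF_PRECISION_DTYPES:
        return {"attn_implementation": "flash_attention_2"}
    # Fall back to the library's default attention implementation.
    return {}


if __name__ == "__main__":
    print(attention_kwargs("bfloat16"))
    print(attention_kwargs("float32"))
```

The returned dictionary can be unpacked into the model-loading call, so the same script runs whether or not `flash-attn` is installed.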

To load and run a model using FlashAttention-2, simply add `attn_implementation="flash_attention_2"` when loading the model as follows:

from transformers import Qwen2VLForConditionalGenerationmodel = <spa