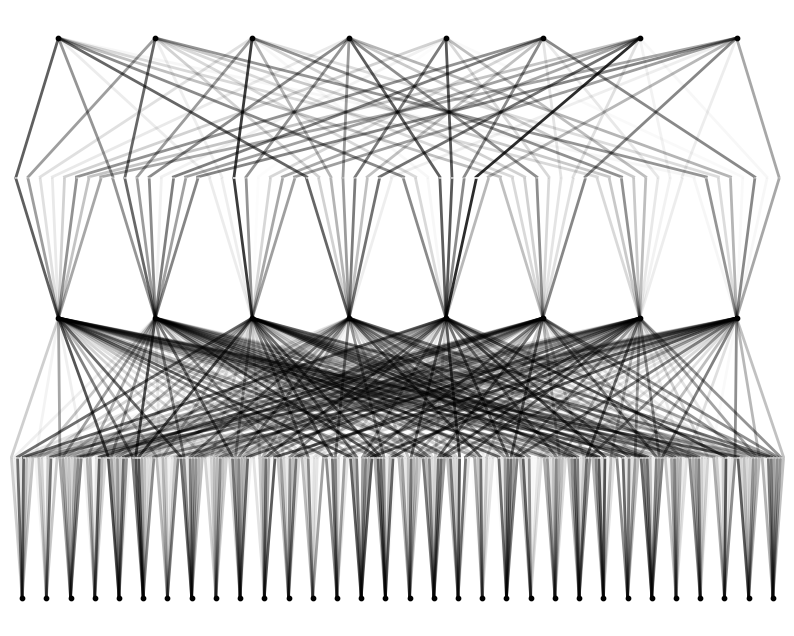

Kolmogorov-Arnold Networks (KAN) are a promising challenger to traditional MLPs, and we're thrilled about integrating KAN into NeRF! Is KAN suited to view synthesis tasks? What challenges will we face, and how will we tackle them? Here we share our initial observations and topics for future discussion.

KANeRF is built on nerfstudio and Efficient-KAN. If you encounter any problems, please refer to their websites for detailed installation instructions.

```bash
# create python env
conda create --name nerfstudio -y python=3.8
conda activate nerfstudio
python -m pip install --upgrade pip

# install torch
pip install torch==2.1.2+cu118 torchvision==0.16.2+cu118 --extra-index-url https://download.pytorch.org/whl/cu118
conda install -c "nvidia/label/cuda-11.8.0" cuda-toolkit

# install tinycudann
pip install ninja git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch

# install nerfstudio
pip install nerfstudio

# install efficient-kan
pip install git+https://github.com/Blealtan/efficient-kan.git
```

We integrate KAN into NeRFacto and compare KANeRF with NeRFacto on the Blender dataset in terms of model parameters, training time, novel view synthesis performance, etc. Under the same network settings (NeRFacto Tiny), KAN slightly outperforms the MLP in novel view synthesis, suggesting that KAN possesses a more powerful fitting capability. However, KAN's inference and training are significantly slower than the MLP's. Furthermore, with a comparable number of parameters (NeRFacto), KAN underperforms the MLP.
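To see why a KAN with small hidden widths can carry about as many parameters as a much wider MLP, here is a minimal counting sketch. It assumes each KAN layer stores, per input-output edge, one base weight, `grid_size + spline_order` spline coefficients, and one spline scaler. This is our understanding of Efficient-KAN's `KANLinear` with its default settings and should be checked against the installed version. The layer widths are hypothetical and are not the ones used in KANeRF, so the output is not expected to reproduce the reported parameter counts.

```python
def mlp_param_count(dims, bias=True):
    """Trainable parameters of fully connected layers with widths `dims`."""
    total = 0
    for d_in, d_out in zip(dims[:-1], dims[1:]):
        total += d_in * d_out + (d_out if bias else 0)
    return total


def kan_param_count(dims, grid_size=5, spline_order=3, standalone_scale=True):
    """Trainable parameters of stacked KAN layers (assumed layout, see above)."""
    # Per edge: 1 base weight + spline coefficients + optional spline scaler.
    per_edge = 1 + (grid_size + spline_order) + (1 if standalone_scale else 0)
    total = 0
    for d_in, d_out in zip(dims[:-1], dims[1:]):
        total += d_in * d_out * per_edge
    return total


if __name__ == "__main__":
    dims = [16, 8, 4]  # hypothetical widths, for illustration only
    print("MLP:", mlp_param_count(dims))
    print("KAN:", kan_param_count(dims))
```

Under these assumptions a KAN layer has roughly ten times as many parameters as an MLP layer of the same width, which is why the comparison is made both at equal settings and at comparable parameter counts.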

| Model | NeRFacto | NeRFacto Tiny | KANeRF |
|---|---|---|---|
| Trainable Network Parameters | 8192 | 2176 | 7131 |
| Total Network Parameters | 8192 | 2176 | 10683 |
| hidden_dim | 64 | 8 | 8 |
| hidden_dim_color | 64 | 8 | 8 |
| num_layers | 2 | 1 | 1 |
| num_layers_color | 2 | 1 | 1 |
| geo_feat_dim | 15 | 7 | 7 |
| appearance_embed_dim | 32 | 8 | 8 |
| Training Time | 14m 13s | 13m 47s | 37m 20s |
| FPS | 2.5 | ~2.5 | 0.95 |
| LPIPS | 0.0132 | 0.0186 | 0.0154 |
| PSNR | 33.69 | 32.67 | 33.10 |
| SSIM | 0.973 | 0.962 | 0.966 |
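The trade-off in the table can be made concrete with a little arithmetic. The sketch below copies the training time, FPS, and PSNR values from the table and computes how KANeRF compares to each baseline. The helper names are ours, and the NeRFacto Tiny FPS is only approximate in the table.

```python
import re


def to_seconds(text):
    """Convert a duration such as '14m 13s' to seconds."""
    match = re.fullmatch(r"(\d+)m\s*(\d+)s", text.strip())
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    return int(match.group(1)) * 60 + int(match.group(2))


# Values copied from the comparison table (FPS of NeRFacto Tiny is "~2.5").
RESULTS = {
    "NeRFacto": {"time": "14m 13s", "fps": 2.5, "psnr": 33.69},
    "NeRFacto Tiny": {"time": "13m 47s", "fps": 2.5, "psnr": 32.67},
    "KANeRF": {"time": "37m 20s", "fps": 0.95, "psnr": 33.10},
}


def compare(baseline, candidate="KANeRF"):
    """Return training slowdown, rendering slowdown, and PSNR gain of candidate."""
    base, cand = RESULTS[baseline], RESULTS[candidate]
    return {
        "train_slowdown": to_seconds(cand["time"]) / to_seconds(base["time"]),
        "render_slowdown": base["fps"] / cand["fps"],
        "psnr_gain_db": cand["psnr"] - base["psnr"],
    }


if __name__ == "__main__":
    for name in ("NeRFacto", "NeRFacto Tiny"):
        stats = compare(name)
        print(name, {key: round(value, 2) for key, value in stats.items()})
```

With these numbers, KANeRF trains and renders roughly 2.6 to 2.7 times slower than either baseline, gains about 0.43 dB PSNR over NeRFacto Tiny, and loses about 0.59 dB to the full NeRFacto.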

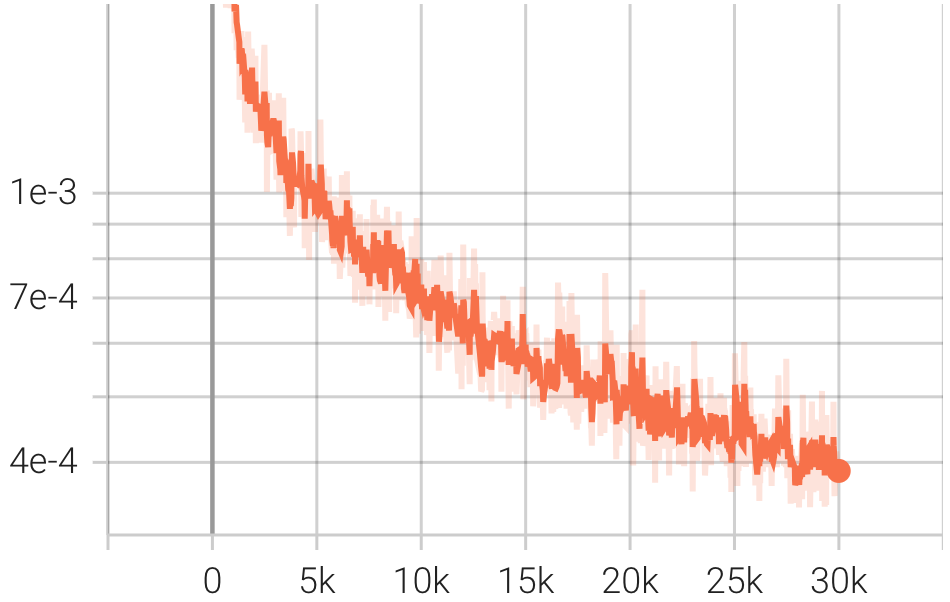

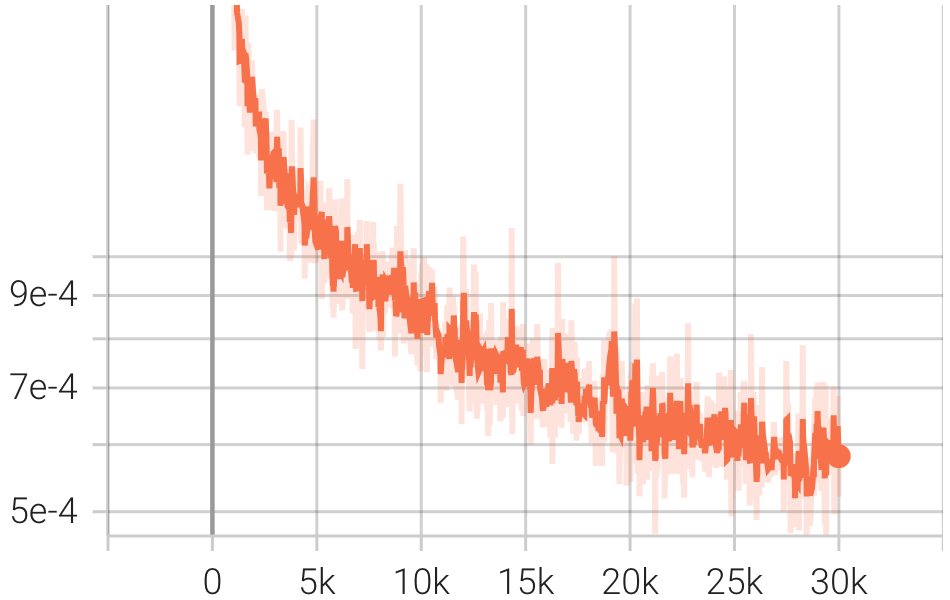

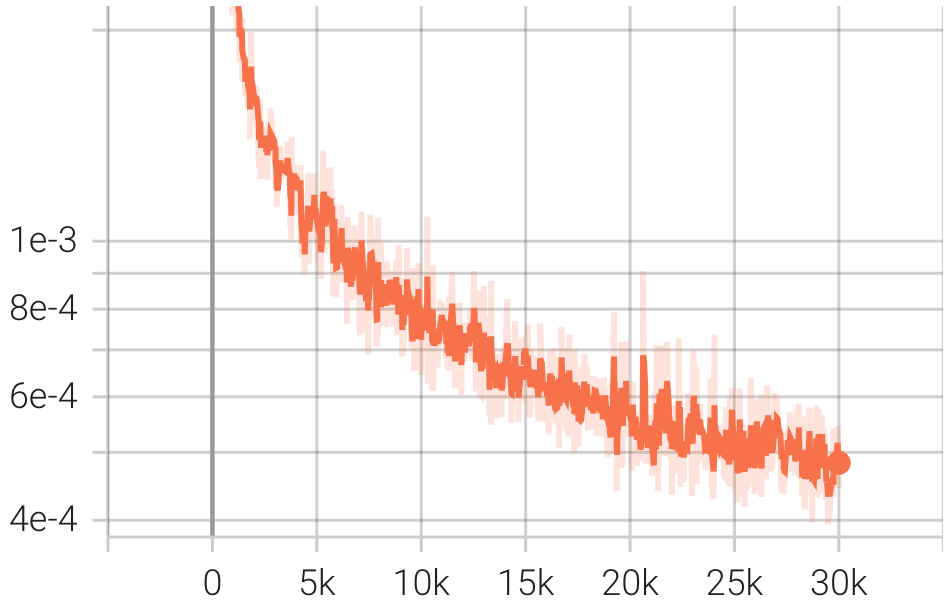

| Model | NeRFacto | NeRFacto Tiny | KANeRF |
|---|---|---|---|
| Loss | | | |
| result (rgb) | nerfacto_rgb.mp4 | nerfacto_tiny_rgb.mp4 | kanerf_rgb.mp4 |
| result (depth) | nerfacto_depth.mp4 | nerfacto_tiny_depth.mp4 | kanerf_depth.mp4 |

KAN still has room for optimization, particularly in inference speed. We plan to develop a CUDA-accelerated version of KAN to further improve its performance :D
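To illustrate where the extra cost comes from, here is a plain-Python sketch of a single KAN edge function in the common formulation: a SiLU base term plus a weighted sum of B-spline basis functions on a uniform grid. It is an illustration under stated assumptions, not Efficient-KAN's vectorized implementation. The function names, the grid range of [-1, 1], and the defaults `grid_size=5` and `spline_order=3` are assumptions chosen for the example.

```python
import math


def make_knots(grid_size=5, spline_order=3, lo=-1.0, hi=1.0):
    """Uniform knot vector extended by `spline_order` knots on each side."""
    step = (hi - lo) / grid_size
    return [lo + i * step for i in range(-spline_order, grid_size + spline_order + 1)]


def bspline_basis(x, knots, spline_order=3):
    """Evaluate all B-spline basis functions at x with the Cox-de Boor recursion."""
    # Order 0: indicator of the half-open knot interval containing x.
    basis = [1.0 if knots[i] <= x < knots[i + 1] else 0.0
             for i in range(len(knots) - 1)]
    for p in range(1, spline_order + 1):
        basis = [
            (x - knots[i]) / (knots[i + p] - knots[i]) * basis[i]
            + (knots[i + p + 1] - x) / (knots[i + p + 1] - knots[i + 1]) * basis[i + 1]
            for i in range(len(basis) - 1)
        ]
    return basis  # grid_size + spline_order values


def kan_edge(x, coeffs, base_weight, knots, spline_order=3):
    """One learnable edge: base_weight * silu(x) + sum_i coeffs[i] * B_i(x)."""
    silu = x / (1.0 + math.exp(-x))
    spline = sum(c * b for c, b in zip(coeffs, bspline_basis(x, knots, spline_order)))
    return base_weight * silu + spline


if __name__ == "__main__":
    knots = make_knots()
    coeffs = [0.1 * i for i in range(8)]  # hypothetical spline coefficients
    print(kan_edge(0.3, coeffs, base_weight=1.0, knots=knots))
```

An MLP edge is a single multiply-add, whereas each edge here evaluates a recursion over several basis functions. That gap is consistent with the slowdown we measured and is the kind of work a fused CUDA kernel could target.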

```bibtex
@Manual{,
  title  = {Hands-On NeRF with KAN},
  author = {Delin Qu and Qizhi Chen},
  year   = {2024},
  url    = {https://github.com/Tavish9/KANeRF},
}
```