This project aims to build a large Chinese language model with a small number of parameters, which can be used to quickly start learning knowledge about large models. If this project is useful to you, you can click start. Thank you!

Model architecture: The overall model architecture adopts open source general architecture, including: RMSNorm, RoPE, MHA, etc.

Implementation details: Implement two-stage training of large models and subsequent human alignment, namely: word segmentation (Tokenizer) -> pre-training (PTM) -> instruction fine-tuning (SFT) -> human alignment (RLHF, DPO) -> evaluation -> quantification- > Deployment.

The project has been deployed and can be experienced on the following website.

Project features:

Bash script, supporting models of different sizes, such as 16m, 42m, 92m, 210m, 440m, etc.;This project mainly has three branches. It is recommended to study the main branch. The specific differences are as follows:

main tiny_llm : Align the open source community model, use the Transformers library to build the underlying model, and also use the Transformers library for multi-card and multi-machine training;tiny_llm , modify MLP layer to a MoE model, and use the Transformers library for multi-card and multi-machine training.Notice:

doc folder (being sorted...) The model is hosted in Huggingface and ModeScope and can be downloaded automatically by running code.

It is recommended to use Huggingface to load the model online. If it cannot run, try ModeScope. If you need to run it locally, modify the path in model_id to the local directory and you can run it.

pip install -r requirements.txt from transformers import AutoTokenizer , AutoModelForCausalLM

from transformers . generation import GenerationConfig

model_id = "wdndev/tiny_llm_sft_92m"

tokenizer = AutoTokenizer . from_pretrained ( model_id , trust_remote_code = True )

model = AutoModelForCausalLM . from_pretrained ( model_id , device_map = "auto" , trust_remote_code = True )

generation_config = GenerationConfig . from_pretrained ( model_id , trust_remote_code = True )

sys_text = "你是由wdndev开发的个人助手。"

# user_text = "世界上最大的动物是什么?"

# user_text = "介绍一下刘德华。"

user_text = "介绍一下中国。"

input_txt = " n " . join ([ "<|system|>" , sys_text . strip (),

"<|user|>" , user_text . strip (),

"<|assistant|>" ]). strip () + " n "

generation_config . max_new_tokens = 200

model_inputs = tokenizer ( input_txt , return_tensors = "pt" ). to ( model . device )

generated_ids = model . generate ( model_inputs . input_ids , generation_config = generation_config )

generated_ids = [

output_ids [ len ( input_ids ):] for input_ids , output_ids in zip ( model_inputs . input_ids , generated_ids )

]

response = tokenizer . batch_decode ( generated_ids , skip_special_tokens = True )[ 0 ]

print ( response ) from modelscope import AutoModelForCausalLM , AutoTokenizer

model_id = "wdndev/tiny_llm_sft_92m"

tokenizer = AutoTokenizer . from_pretrained ( model_id , trust_remote_code = True )

model = AutoModelForCausalLM . from_pretrained ( model_id , device_map = "auto" , trust_remote_code = True )

sys_text = "你是由wdndev开发的个人助手。"

# user_text = "世界上最大的动物是什么?"

# user_text = "介绍一下刘德华。"

user_text = "介绍一下中国。"

input_txt = " n " . join ([ "<|system|>" , sys_text . strip (),

"<|user|>" , user_text . strip (),

"<|assistant|>" ]). strip () + " n "

model_inputs = tokenizer ( input_txt , return_tensors = "pt" ). to ( model . device )

generated_ids = model . generate ( model_inputs . input_ids , max_new_tokens = 200 )

generated_ids = [

output_ids [ len ( input_ids ):] for input_ids , output_ids in zip ( model_inputs . input_ids , generated_ids )

]

response = tokenizer . batch_decode ( generated_ids , skip_special_tokens = True )[ 0 ]

print ( response )Generate effects

问:世界上最大的动物是什么?

答:目前已知最大的动物是蓝鲸(Balaenoptera musculus),这是一个庞大的哺乳动物,属于须鲸亚目、须鲸科中的最大物种。蓝鲸的身长可达30米以上,体重可达175吨。它们在海洋中生活,主要以浮游生物为食,如甲壳类动物和小型鱼类等。由于其巨大的体型和复杂的生态群落,蓝鲸成为海洋旅游的热门景点之一。

问:介绍一下刘德华。

答:刘德华是一位香港流行歌手、演员和导演,他在音乐界的贡献非常巨大。他是华语乐坛历史上最伟大的艺人之一,代表作品包括《爱我身体》和《肥皂泡》。他也经常参演电影和电视剧,并在电视上受到好评。

问:介绍一下中国。

答:中国是位于东亚的大陆,被欧洲以及亚洲和其他大陆所包围。它是中国第二大文明和世界上最大的经济体之一。中国的历史可以追溯到公元前5000年左右,从古至今都有其独特的文化和语言传承者。

There are two ways to build an LLM word segmenter: one is to construct a word list yourself and train a word segmenter; the other is to select a word segmenter trained by an open source model.

For the sake of convenience, this project selects vocabulary from excellent open source projects. Considering that the trained model is small and the size of the vocabulary affects the size of the model, open source projects with smaller vocabulary are preferred. After comparison, the vocabulary of ChatGLM3 is finally selected. table, the size of the word table is 64798.

For the method of constructing a vocabulary yourself, see tokenizer. The 32K vocabulary of LLaMA2 is expanded to 50K, and a 20K Chinese vocabulary is added. For detailed expansion methods, see the documentation or tokenizer/README.md.

Note: This project uses the vocabulary of ChatGLM3.

The model structure adopts a Llama2-like structure, including: RMSNorm, RoPE, MHA, etc.;

The specific parameter details are as follows:

| model | hidden size | intermediate size | n_layers | n_heads | max context length | params | vocab size |

|---|---|---|---|---|---|---|---|

| tiny-llm-16m | 120 | 384 | 6 | 6 | 512 | 16M | 64798 |

| tiny-llm-42m | 288 | 768 | 6 | 6 | 512 | 42M | 64798 |

| tiny-llm-92m | 512 | 1024 | 8 | 8 | 1024 | 92M | 64798 |

| tiny-llm-210m | 768 | 2048 | 16 | 12 | 1024 | 210M | 64798 |

| tiny-llm-440m | 1024 | 2816 | twenty four | 16 | 1024 | 440M | 64798 |

| tiny-llm-1_5b | 2048 | 5504 | twenty four | 16 | 1024 | 1.5B | 64798 |

Since most of the training data and fine-tuning data are Chinese data, the model is evaluated on the two data sets of C-Eval and CMMLU . The OpenCompass tool is used to evaluate the model. The evaluation scores are as follows:

| model | Type | C-Eval | CMMLU |

|---|---|---|---|

| tiny-llm-92m | Base | 23.48 | 25.02 |

| tiny-llm-92m | Chat | 26.79 | 26.59 |

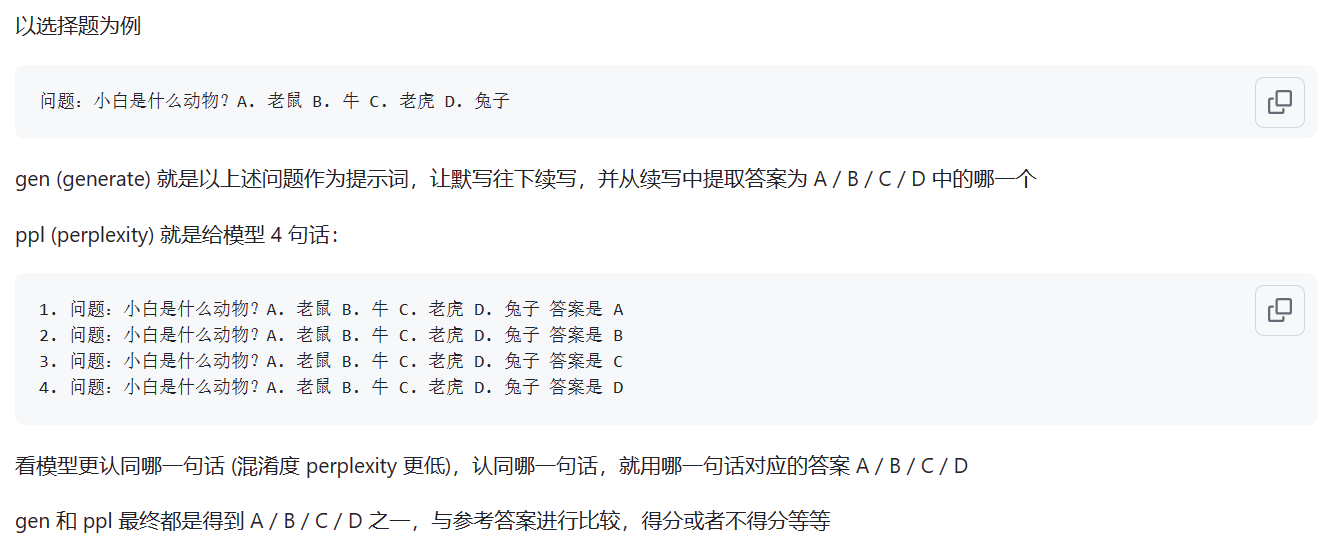

The Base model is evaluated using the ppl evaluation method; the Chat model is evaluated using the gen method. The specific differences are shown in the figure below:

Source: What is the difference between ppl and gen mode

Note: Only two commonly used models have been evaluated, and the scores are low. The evaluation of the remaining models is of little significance.

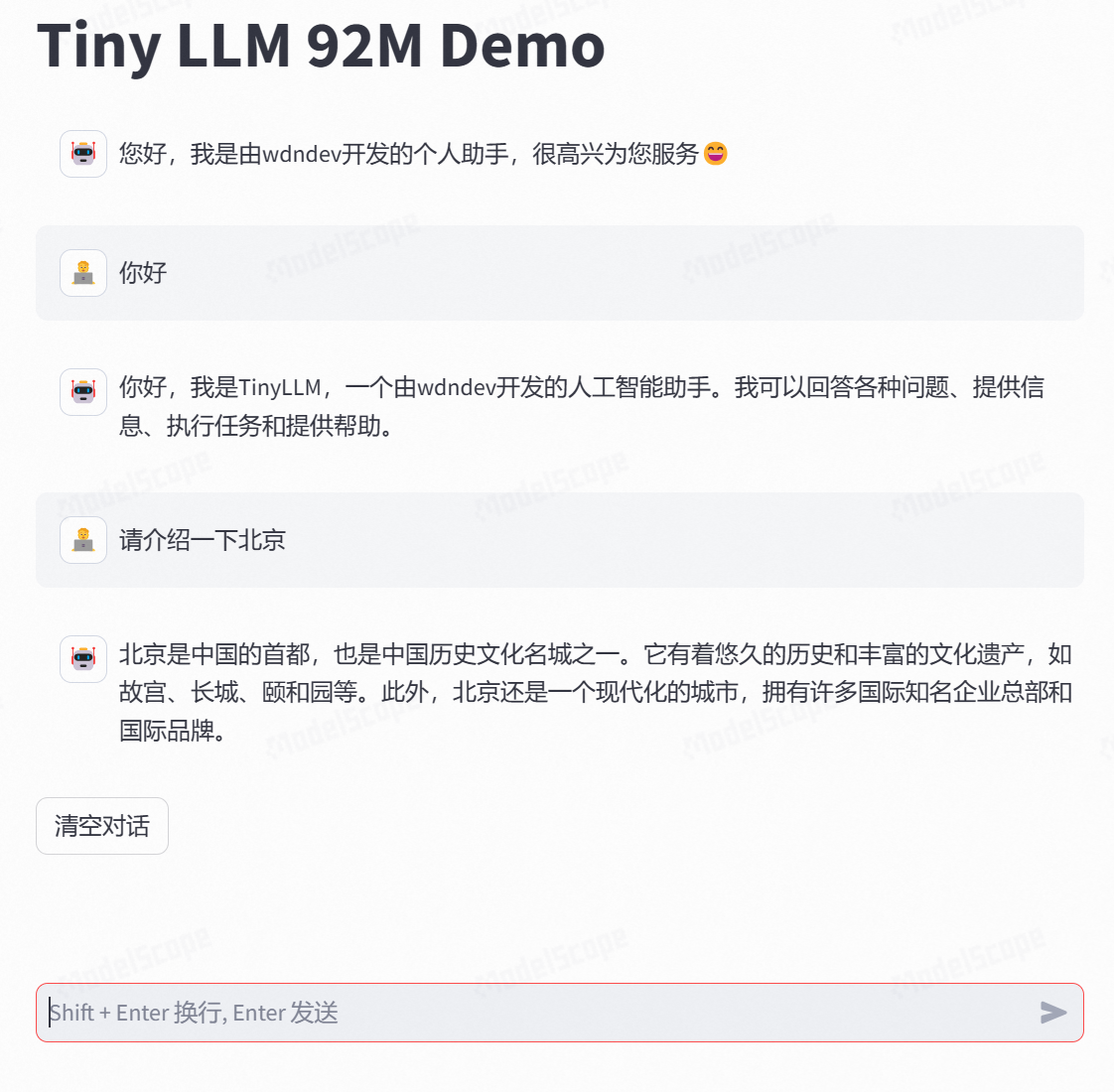

The webpage Demo has been deployed and can be experienced on the following website: ModeScope Tiny LLM

If you want to run the web page Demo locally, pay attention to modifying the model path model_id in the web_demo.py file and enter the following command to run:

streamlit run web_demo.py

Transfomers framework deployment is located in the demo/infer_chat.py and demo/infer_func.py files. It is not much different from other LLM operations. Just pay attention to the splicing of input.

For detailed vllm deployment, see vllm

If you use CUDA 12 or above and PyTorch 2.1 or above , you can directly use the following command to install vLLM.

pip install vllm==0.4.0Otherwise, please refer to vLLM official installation instructions.

After the installation is complete, the following operations are required~

vllm/tinyllm.py file to vllm/model_executor/models directory corresponding to the env environment. " TinyllmForCausalLM " : ( " tinyllm " , " TinyllmForCausalLM " ),Since the model structure is defined by yourself, vllm is not officially implemented and you need to add it manually.

For detailed llama.cpp deployment, see llama.cpp

The Tiny LLM 92M model already supports the llama.cpp C++ inference framework. It is recommended to test in the Linux environment. The effect is not good on Windows;

The supported version of llama.cpp is my own modified version, and the warehouse link is: llama.cpp.tinyllm