Awesome AGI Survey

Must-read papers on Artificial General Intelligence

News

- [2024-10] Our paper was accepted by TMLR 2024.

- [2024-05] We are organizing an AGI Workshop at ICLR 2024 and have released our position paper, "How Far Are We From AGI?"

Our project is an ongoing, open initiative that will evolve in parallel with advancements in AGI. We warmly welcome feedback and pull requests from the community and plan to update our paper annually. Contributors on the project website will be gratefully acknowledged in future revisions.

If you find our work or resources useful, please cite it with the following BibTeX entry:

@article{feng2024far,
  title={How Far Are We From AGI},
  author={Feng, Tao and Jin, Chuanyang and Liu, Jingyu and Zhu, Kunlun and Tu, Haoqin and Cheng, Zirui and Lin, Guanyu and You, Jiaxuan},
  journal={arXiv preprint arXiv:2405.10313},
  year={2024}
}

Content


- 1. Introduction

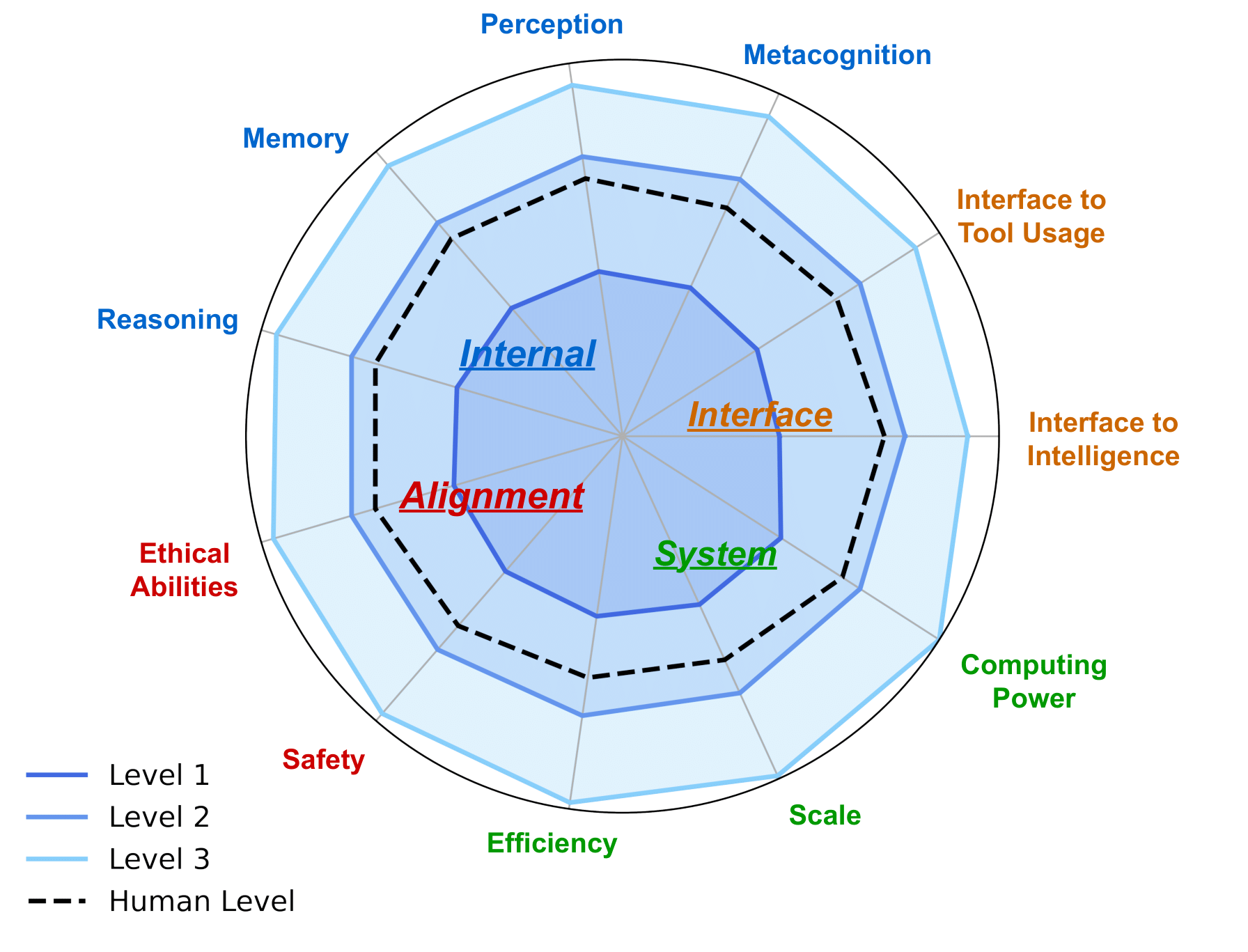

- 2. AGI Internal: Unveiling the Mind of AGI

- 2.1 AI Perception

- 2.2 AI Reasoning

- 2.3 AI Memory

- 2.4 AI Metacognition

- 3. AGI Interface: Connecting the World with AGI

- 3.1 AI Interfaces to Digital World

- 3.2 AI Interfaces to Physical World

- 3.3 AI Interfaces to Intelligence

- 3.3.1 AI Interfaces to AI Agents

- 3.3.2 AI Interfaces to Human

- 4. AGI Systems: Implementing the Mechanism of AGI

- 4.2 Scalable Model Architectures

- 4.3 Large-scale Training

- 4.4 Inference Techniques

- 4.5 Cost and Efficiency

- 4.6 Computing Platforms

- 5. AGI Alignment: Ensuring AGI Meets Various Needs

- 5.1 Expectations of AGI Alignment

- 5.2 Current Alignment Techniques

- 5.3 How to approach AGI Alignments

- 6. AGI Roadmap: Responsibly Approaching AGI

- 6.1 AI Levels: Charting the Evolution of Artificial Intelligence

- 6.2 AGI Evaluation

- 6.2.1 Expectations for AGI Evaluation

- 6.2.2 Current Evaluations and Their Limitations

- 6.5 Further Considerations during AGI Development

- 7. Case Studies

- 7.1 AI for Science Discovery and Research

- 7.2 Generative Visual Intelligence

- 7.3 World Models

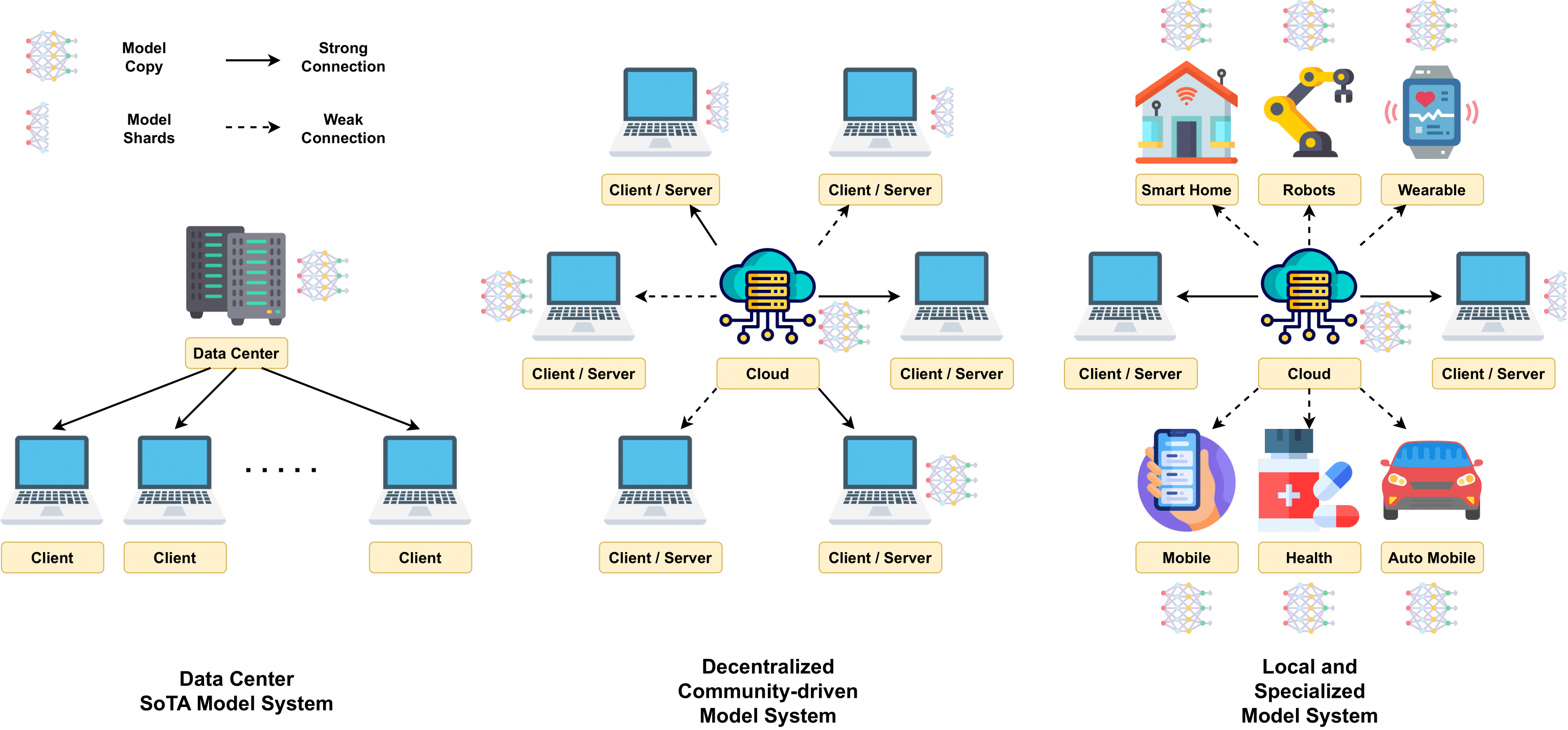

- 7.4 Decentralized LLM

- 7.5 AI for Coding

- 7.6 AI for Robotics in Real World Applications

- 7.7 Human-AI Collaboration

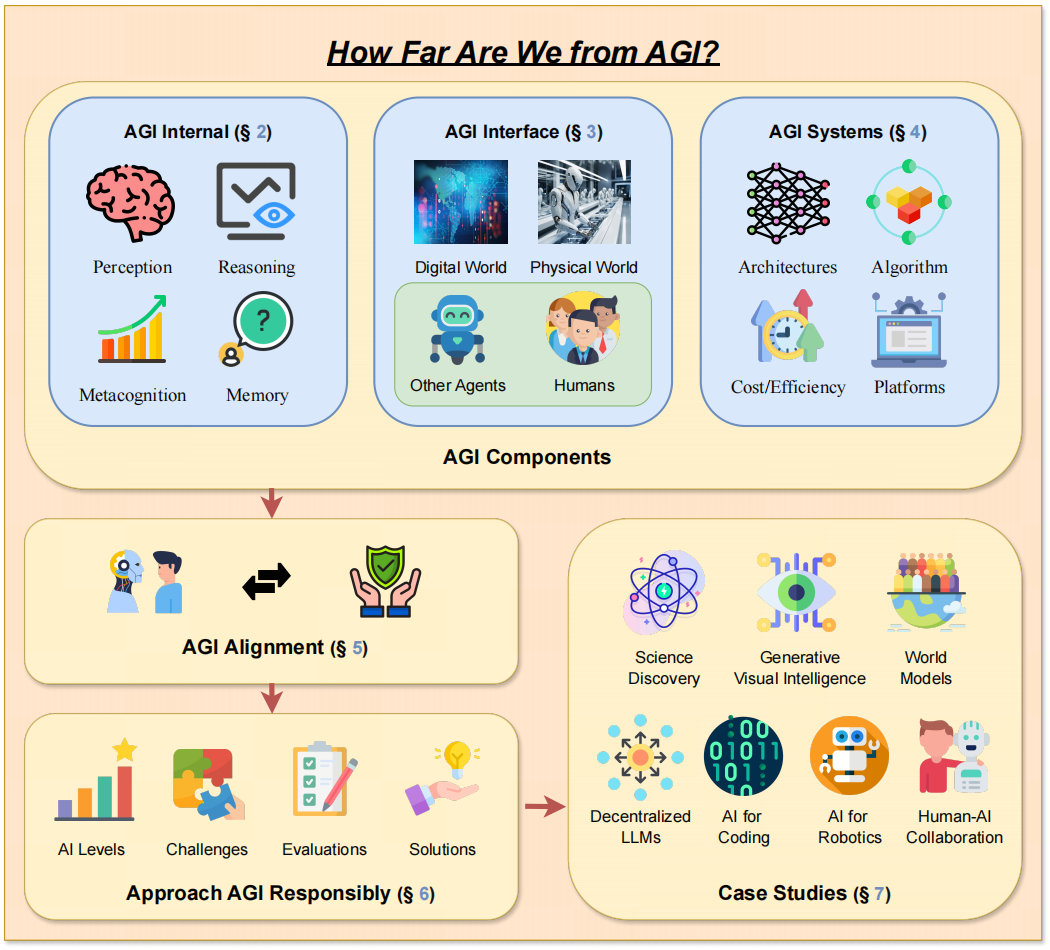

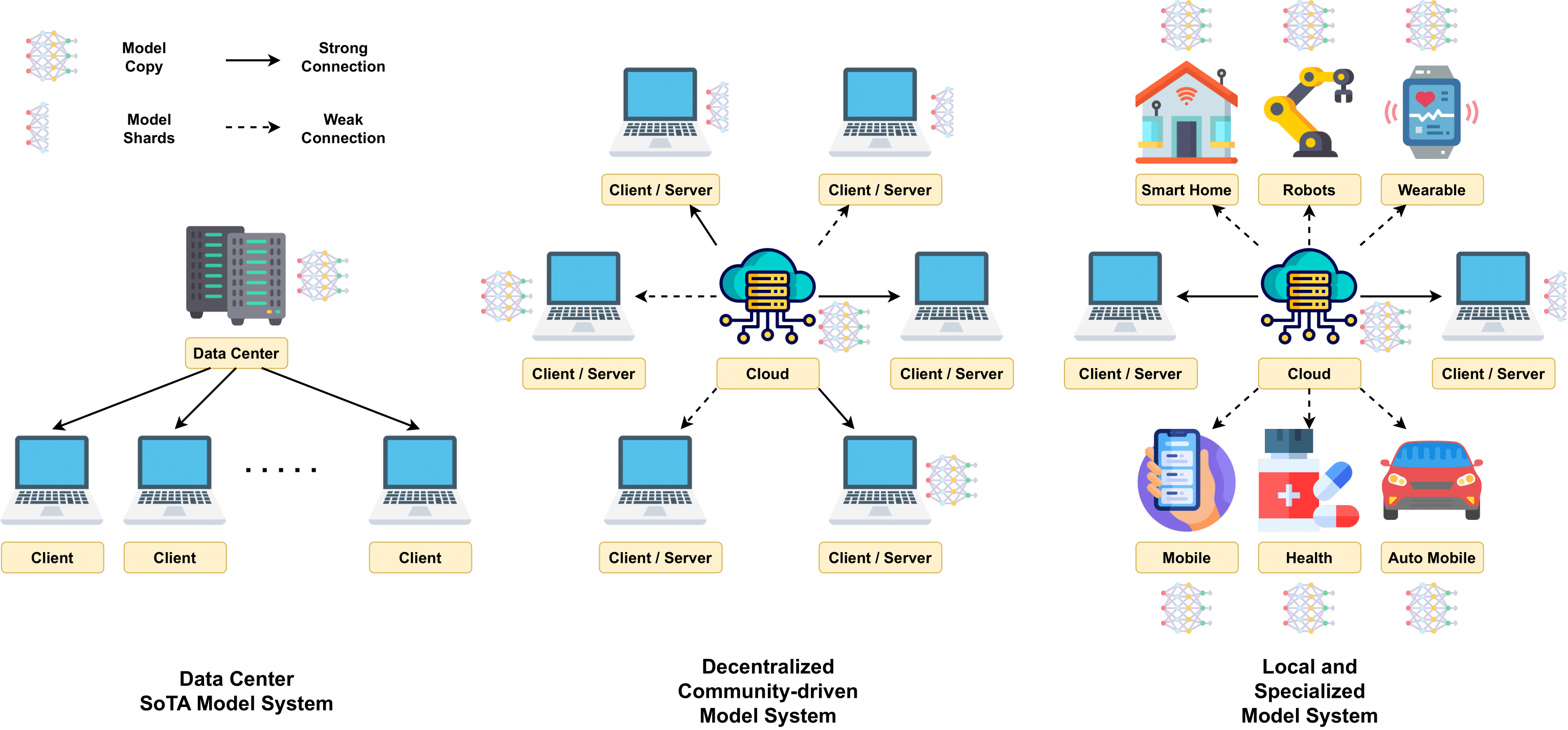

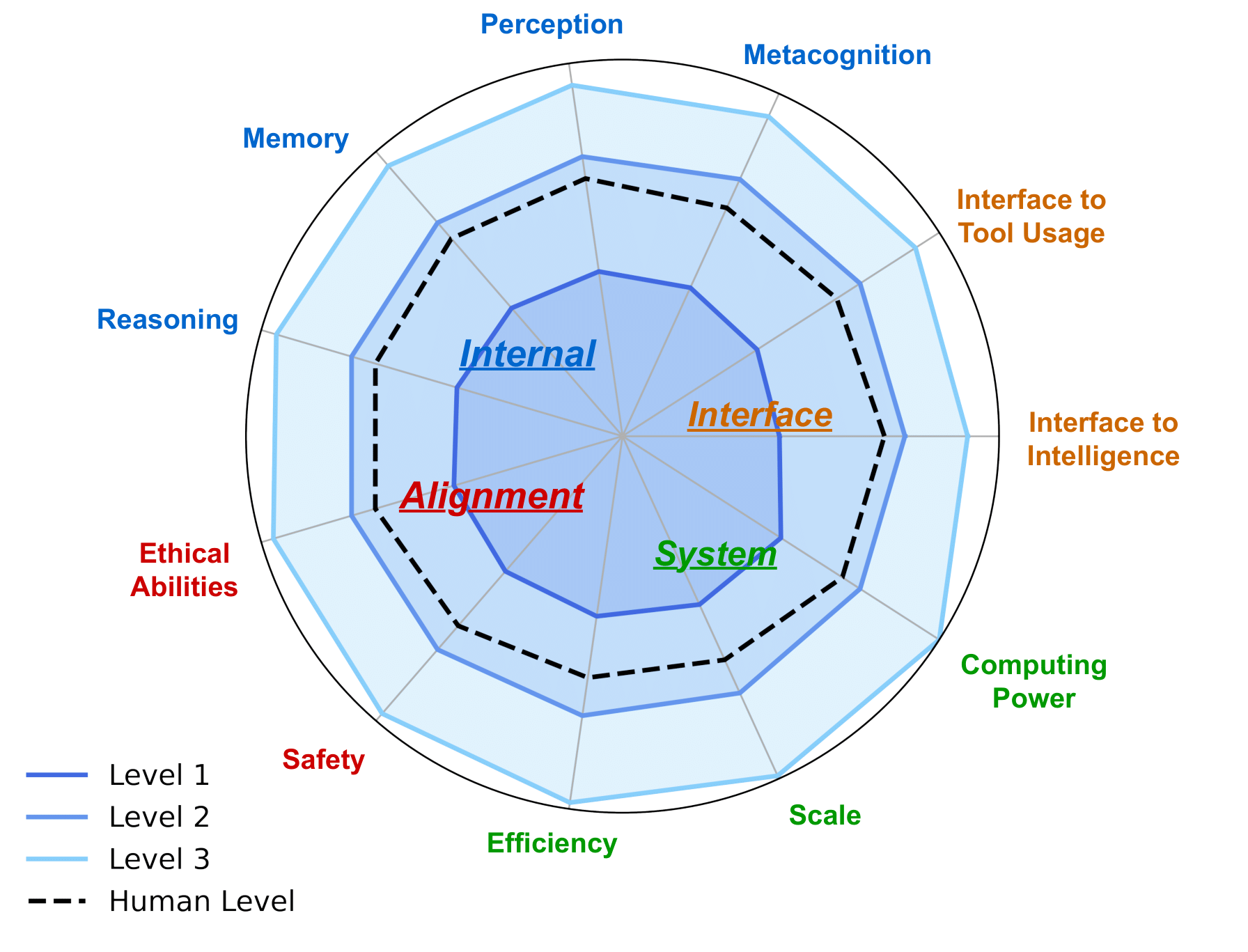

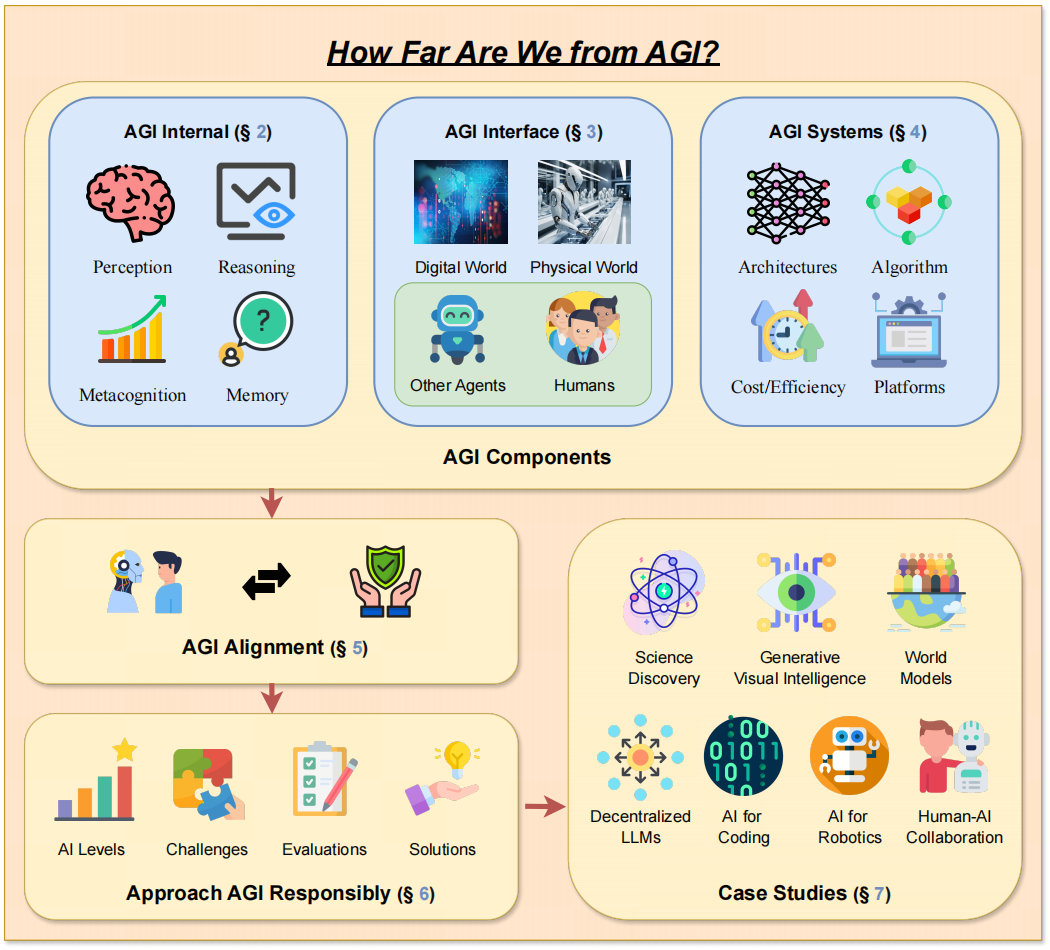

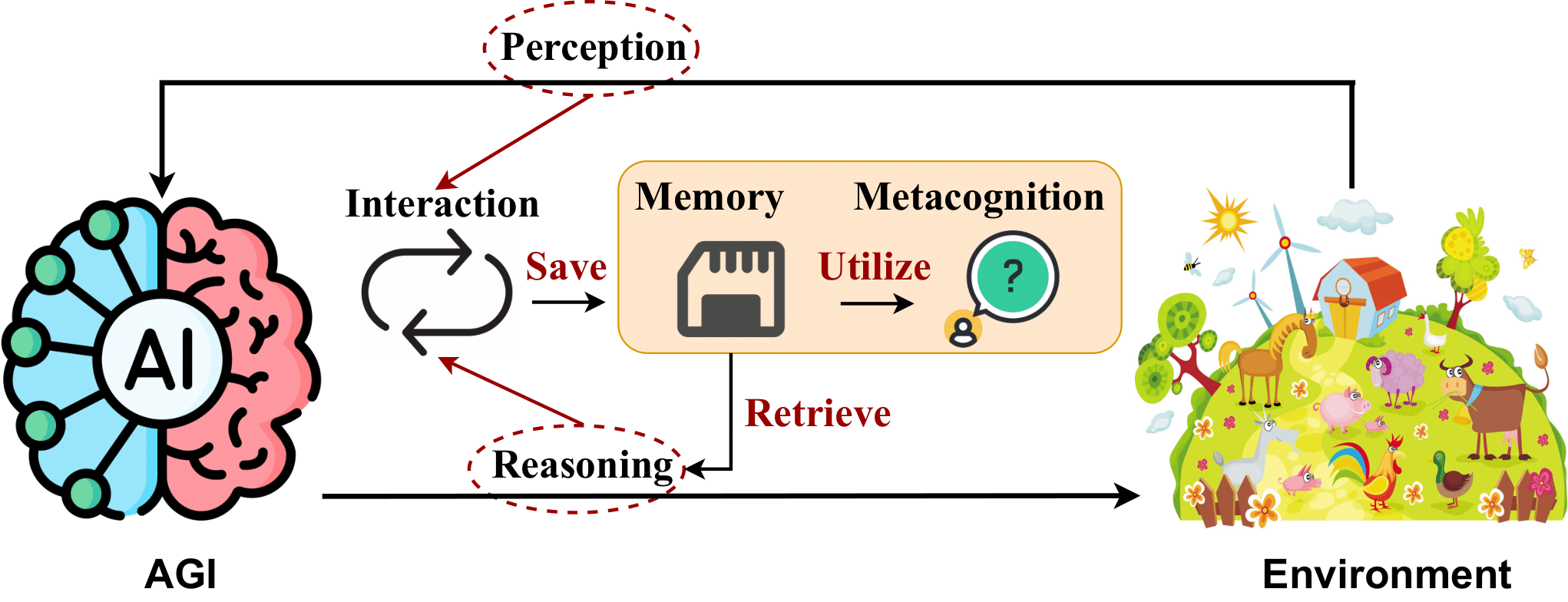

The framework design of our paper.

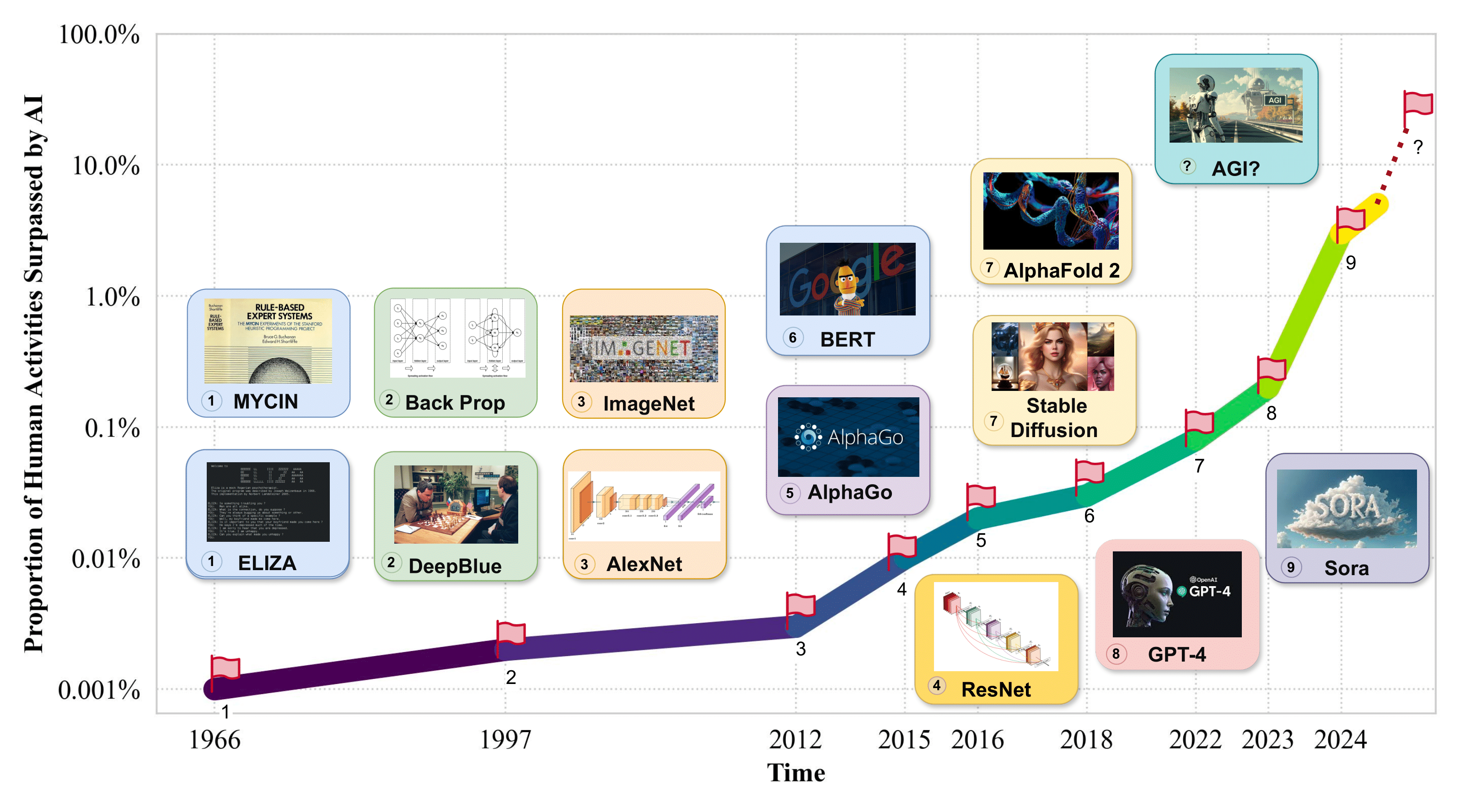

1. Introduction

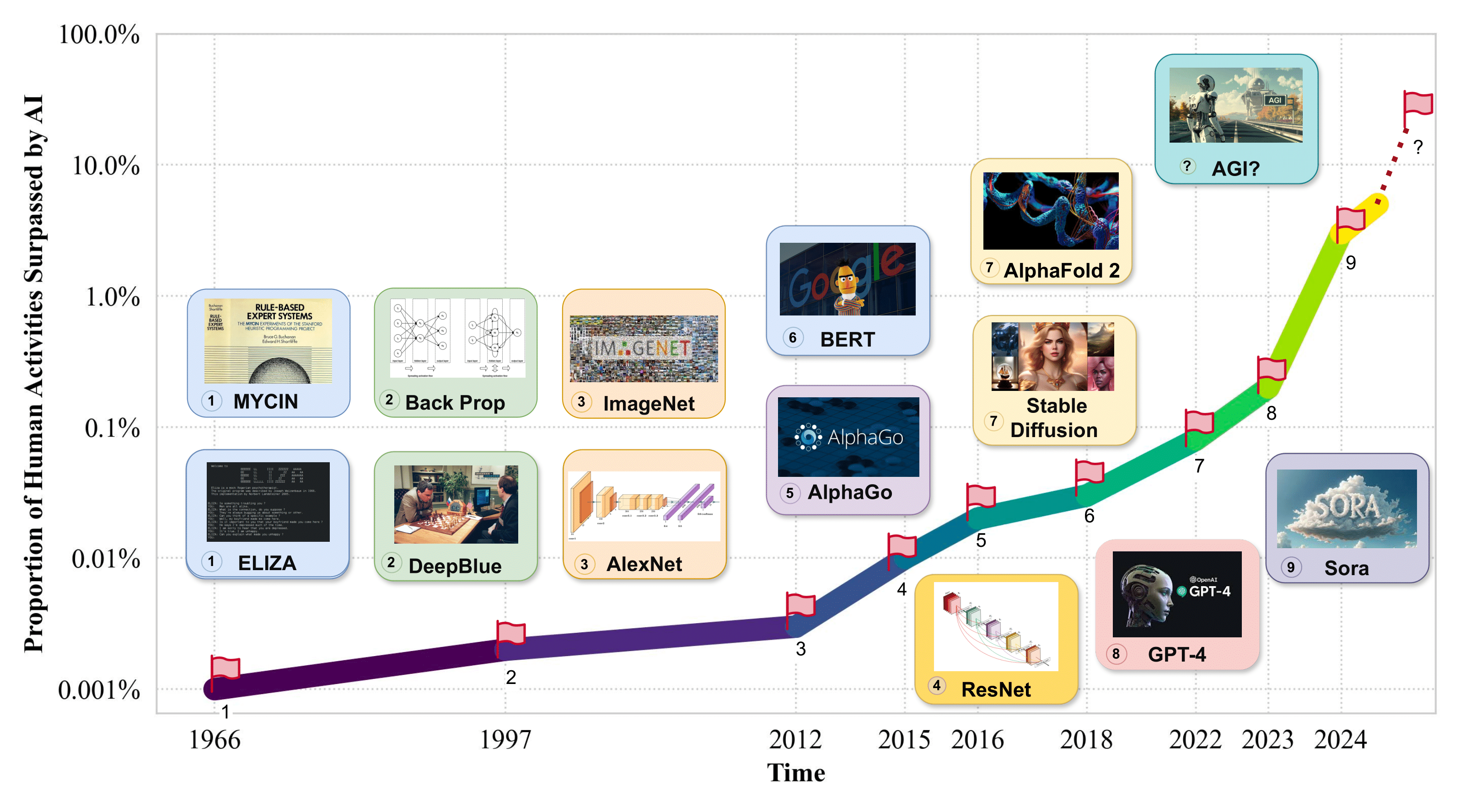

Proportion of Human Activities Surpassed by AI.

2. AGI Internal: Unveiling the Mind of AGI

2.1 AI Perception

- Flamingo: a Visual Language Model for Few-Shot Learning. Jean-Baptiste Alayrac et al. NeurIPS 2022. [paper]

- BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. Junnan Li et al. ICML 2023. [paper]

- SPHINX: The Joint Mixing of Weights, Tasks, and Visual Embeddings for Multi-modal Large Language Models. Ziyi Lin et al. EMNLP 2023. [paper]

- Visual Instruction Tuning. Haotian Liu et al. NeurIPS 2023. [paper]

- GPT4Tools: Teaching Large Language Model to Use Tools via Self-instruction. Rui Yang et al. NeurIPS 2023. [paper]

- Otter: A Multi-Modal Model with In-Context Instruction Tuning. Bo Li et al. arXiv 2023. [paper]

- VideoChat: Chat-Centric Video Understanding. KunChang Li et al. arXiv 2023. [paper]

- mPLUG-Owl: Modularization Empowers Large Language Models with Multimodality. Qinghao Ye et al. arXiv 2023. [paper]

- A Survey on Multimodal Large Language Models. Shukang Yin et al. arXiv 2023. [paper]

- PandaGPT: One Model To Instruction-Follow Them All. Yixuan Su et al. arXiv 2023. [paper]

- LLaMA-Adapter: Efficient Fine-tuning of Language Models with Zero-init Attention. Renrui Zhang et al. arXiv 2023. [paper]

- Gemini: A Family of Highly Capable Multimodal Models. Rohan Anil et al. arXiv 2023. [paper]

- Shikra: Unleashing Multimodal LLM's Referential Dialogue Magic. Keqin Chen et al. arXiv 2023. [paper]

- ImageBind: One Embedding Space To Bind Them All. Rohit Girdhar et al. CVPR 2023. [paper]

- MobileVLM: A Fast, Strong and Open Vision Language Assistant for Mobile Devices. Xiangxiang Chu et al. arXiv 2023. [paper]

- What Makes for Good Visual Tokenizers for Large Language Models? Guangzhi Wang et al. arXiv 2023. [paper]

- MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models. Deyao Zhu et al. ICLR 2024. [paper]

- LanguageBind: Extending Video-Language Pretraining to N-modality by Language-based Semantic Alignment. Bin Zhu et al. ICLR 2024. [paper]

2.2 AI Reasoning

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. Jason Wei et al. NeurIPS 2022. [paper]

- Neural Theory-of-Mind? On the Limits of Social Intelligence in Large LMs. Maarten Sap et al. EMNLP 2022. [paper]

- Inner Monologue: Embodied Reasoning through Planning with Language Models. Wenlong Huang et al. CoRL 2022. [paper]

- Survey of Hallucination in Natural Language Generation. Ziwei Ji et al. ACM Computing Surveys 2022. [paper]

- ReAct: Synergizing Reasoning and Acting in Language Models. Shunyu Yao et al. ICLR 2023. [paper]

- Decomposed Prompting: A Modular Approach for Solving Complex Tasks. Tushar Khot et al. ICLR 2023. [paper]

- Complexity-Based Prompting for Multi-Step Reasoning. Yao Fu et al. ICLR 2023. [paper]

- Least-to-Most Prompting Enables Complex Reasoning in Large Language Models. Denny Zhou et al. ICLR 2023. [paper]

- Towards Reasoning in Large Language Models: A Survey. Jie Huang et al. ACL Findings 2023. [paper]

- ProgPrompt: Generating Situated Robot Task Plans using Large Language Models. Ishika Singh et al. ICRA 2023. [paper]

- Reasoning with Language Model is Planning with World Model. Shibo Hao et al. EMNLP 2023. [paper]

- Evaluating Object Hallucination in Large Vision-Language Models. Yifan Li et al. EMNLP 2023. [paper]

- Tree of Thoughts: Deliberate Problem Solving with Large Language Models. Shunyu Yao et al. NeurIPS 2023. [paper]

- Self-Refine: Iterative Refinement with Self-Feedback. Aman Madaan et al. NeurIPS 2023. [paper]

- Reflexion: Language Agents with Verbal Reinforcement Learning. Noah Shinn et al. NeurIPS 2023. [paper]

- Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents. Zihao Wang et al. NeurIPS 2023. [paper]

- LLM+P: Empowering Large Language Models with Optimal Planning Proficiency. Bo Liu et al. arXiv 2023. [paper]

- Language Models, Agent Models, and World Models: The LAW for Machine Reasoning and Planning. Zhiting Hu et al. arXiv 2023. [paper]

- MMToM-QA: Multimodal Theory of Mind Question Answering. Chuanyang Jin et al. arXiv 2024. [paper]

- Graph of Thoughts: Solving Elaborate Problems with Large Language Models. Maciej Besta et al. AAAI 2024. [paper]

- Achieving >97% on GSM8K: Deeply Understanding the Problems Makes LLMs Perfect Reasoners. Qihuang Zhong et al. arXiv 2024. [paper] pending

2.3 AI Memory

- Dense Passage Retrieval for Open-Domain Question Answering. Vladimir Karpukhin et al. EMNLP 2020. [paper]

- Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Patrick Lewis et al. NeurIPS 2020. [paper]

- REALM: Retrieval-Augmented Language Model Pre-Training. Kelvin Guu et al. ICML 2020. [paper]

- Retrieval Augmentation Reduces Hallucination in Conversation. Kurt Shuster et al. EMNLP Findings 2021. [paper]

- Improving Language Models by Retrieving from Trillions of Tokens. Sebastian Borgeaud et al. ICML 2022. [paper]

- Generative Agents: Interactive Simulacra of Human Behavior. Joon Sung Park et al. UIST 2023. [paper]

- Cognitive Architectures for Language Agents. Theodore R. Sumers et al. TMLR 2024. [paper]

- Voyager: An Open-Ended Embodied Agent with Large Language Models. Guanzhi Wang et al. arXiv 2023. [paper]

- A Survey on the Memory Mechanism of Large Language Model based Agents. Zeyu Zhang et al. arXiv 2024. [paper]

- Recursively Summarizing Enables Long-Term Dialogue Memory in Large Language Models. Qingyue Wang et al. arXiv 2023. [paper] pending

2.4 AI Metacognition

- The Consideration of Meta-Abilities in Tacit Knowledge Externalization and Organizational Learning. Jyoti Choudrie et al. HICSS 2006. [paper]

- Evolving Self-supervised Neural Networks: Autonomous Intelligence from Evolved Self-teaching. Nam Le. arXiv 2019. [paper]

- Making pre-trained language models better few-shot learners. Tianyu Gao et al. ACL 2021. [paper]

- Identifying and Manipulating the Personality Traits of Language Models. Graham Caron et al. arXiv 2022. [paper]

- Brainish: Formalizing A Multimodal Language for Intelligence and Consciousness. Paul Liang et al. arXiv 2022. [paper]

- A theory of consciousness from a theoretical computer science perspective: Insights from the Conscious Turing Machine. Lenore Blum et al. PNAS 2022. [paper]

- WizardLM: Empowering Large Language Models to Follow Complex Instructions. Can Xu et al. arXiv 2023. [paper]

- Self-Instruct: Aligning Language Models with Self-Generated Instructions. Yizhong Wang et al. ACL 2023. [paper]

- ReST meets ReAct: Self-Improvement for Multi-Step Reasoning LLM Agent. Renat Aksitov et al. arXiv 2023. [paper]

- The Cultural Psychology of Large Language Models: Is ChatGPT a Holistic or Analytic Thinker? Chuanyang Jin et al. arXiv 2023. [paper]

- Consciousness in Artificial Intelligence: Insights from the Science of Consciousness. Patrick Butlin et al. arXiv 2023. [paper]

- Revisiting the Reliability of Psychological Scales on Large Language Models. Jen-tse Huang et al. arXiv 2023. [paper]

- Evaluating and Inducing Personality in Pre-trained Language Models. Guangyuan Jiang et al. NeurIPS 2023. [paper]

- Levels of AGI: Operationalizing Progress on the Path to AGI. Meredith Ringel Morris et al. arXiv 2024. [paper] pending

- Investigate-Consolidate-Exploit: A General Strategy for Inter-Task Agent Self-Evolution. Cheng Qian et al. arXiv 2024. [paper] pending

3. AGI Interface: Connecting the World with AGI

3.1 AI Interfaces to Digital World

- Principles of mixed-initiative user interfaces. Eric Horvitz. SIGCHI 1999. [paper]

- The rise and potential of large language model based agents: A survey. Zhiheng Xi et al. arXiv 2023. [paper]

- Tool learning with foundation models. Yujia Qin et al. arXiv 2023. [paper]

- CREATOR: Disentangling abstract and concrete reasonings of large language models through tool creation. Cheng Qian et al. arXiv 2023. [paper]

- AppAgent: Multimodal Agents as Smartphone Users. Zhao Yang et al. arXiv 2023. [paper]

- Mind2Web: Towards a Generalist Agent for the Web. Xiang Deng et al. NeurIPS Datasets and Benchmarks 2023. [paper]

- ToolQA: A Dataset for LLM Question Answering with External Tools. Yuchen Zhuang et al. arXiv 2023. [paper]

- Generative agents: Interactive simulacra of human behavior. Joon Sung Park et al. arXiv 2023. [paper]

- Toolformer: Language models can teach themselves to use tools. Timo Schick et al. arXiv 2023. [paper]

- Gorilla: Large Language Model Connected with Massive APIs. Shishir G Patil et al. arXiv 2023. [paper]

- Voyager: An open-ended embodied agent with large language models. Guanzhi Wang et al. arXiv 2023. [paper]

- OS-Copilot: Towards Generalist Computer Agents with Self-Improvement. Zhiyong Wu et al. arXiv 2024. [paper]

- WebArena: A Realistic Web Environment for Building Autonomous Agents. Shuyan Zhou et al. ICLR 2024. [paper]

- Large Language Models as Tool Makers. Tianle Cai et al. ICLR 2024. [paper]

3.2 AI Interfaces to Physical World

- Lessons from the Amazon Picking Challenge: Four aspects of building robotic systems. Clemens Eppner et al. RSS 2016. [paper]

- Perceiver-Actor: A Multi-Task Transformer for Robotic Manipulation. Mohit Shridhar et al. arXiv 2022. [paper]

- VIMA: General robot manipulation with multimodal prompts. Yunfan Jiang et al. arXiv 2022. [paper]

- Do as I can, not as I say: Grounding language in robotic affordances. Michael Ahn et al. arXiv 2022. [paper]

- VoxPoser: Composable 3D value maps for robotic manipulation with language models. Wenlong Huang et al. arXiv 2023. [paper]

- MotionGPT: Human Motion as a Foreign Language. Biao Jiang et al. arXiv 2023. [paper]

- RT-2: Vision-language-action models transfer web knowledge to robotic control. Anthony Brohan et al. arXiv 2023. [paper]

- Navigating to objects in the real world. Theophile Gervet et al. Science Robotics 2023. [paper]

- LM-Nav: Robotic navigation with large pre-trained models of language, vision, and action. Dhruv Shah et al. CoRL 2022. [paper]

- PaLM-E: An embodied multimodal language model. Danny Driess et al. arXiv 2023. [paper]

- LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with Large Language Models. Chan Hee Song et al. ICCV 2023. [paper]

- Instruct2Act: Mapping Multi-modality Instructions to Robotic Actions with Large Language Model. Siyuan Huang et al. arXiv 2023. [paper]

- DROID: A Large-Scale In-The-Wild Robot Manipulation Dataset. Alexander Khazatsky et al. arXiv 2024. [paper]

- BEHAVIOR-1K: A Human-Centered, Embodied AI Benchmark with 1,000 Everyday Activities and Realistic Simulation. Chengshu Li et al. arXiv 2024. [paper]

3.3 AI Interfaces to Intelligence

3.3.1 AI Interfaces to AI Agents

- Counterfactual multi-agent policy gradients. Jakob Foerster et al. AAAI 2018. [paper]

- Explanations from large language models make small reasoners better. Shiyang Li et al. arXiv 2022. [paper]

- In-context Learning Distillation: Transferring Few-shot Learning Ability of Pre-trained Language Models. Yukun Huang et al. arXiv 2022. [paper]

- AutoAgents: A framework for automatic agent generation. Guangyao Chen et al. arXiv 2023. [paper]

- Neural amortized inference for nested multi-agent reasoning. Kunal Jha et al. arXiv 2023. [paper]

- Knowledge Distillation of Large Language Models. Yuxian Gu et al. arXiv 2023. [paper]

- MetaGPT: Meta programming for multi-agent collaborative framework. Sirui Hong et al. arXiv 2023. [paper]

- Describe, explain, plan and select: Interactive planning with large language models enables open-world multi-task agents. Zihao Wang et al. arXiv 2023. [paper]

- AgentVerse: Facilitating multi-agent collaboration and exploring emergent behaviors in agents. Weize Chen et al. arXiv 2023. [paper]

- Mindstorms in natural language-based societies of mind. Mingchen Zhuge et al. arXiv 2023. [paper]

- JARVIS-1: Open-world multi-task agents with memory-augmented multimodal language models. Zihao Wang et al. arXiv 2023. [paper]

- Symbolic Chain-of-Thought Distillation: Small Models Can Also "Think" Step-by-Step. Liunian Harold Li et al. ACL 2023. [paper]

- Distilling step-by-step! Outperforming larger language models with less training data and smaller model sizes. Cheng-Yu Hsieh et al. arXiv 2023. [paper]

- Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision. Collin Burns et al. arXiv 2023. [paper]

- Enhancing chat language models by scaling high-quality instructional conversations. Ning Ding et al. arXiv 2023. [paper]

- GAIA: a benchmark for General AI Assistants. Grégoire Mialon et al. ICLR 2024. [paper]

- Voyager: An open-ended embodied agent with large language models. Guanzhi Wang et al. arXiv 2023. [paper]

- CAMEL: Communicative agents for "mind" exploration of large scale language model society. Guohao Li et al. arXiv 2023. [paper]

- Tailoring self-rationalizers with multi-reward distillation. Sahana Ramnath et al. arXiv 2023. [paper]

- Vision Superalignment: Weak-to-Strong Generalization for Vision Foundation Models. Jianyuan Guo et al. arXiv 2024. [paper]

- WebArena: A Realistic Web Environment for Building Autonomous Agents. Shuyan Zhou et al. ICLR 2024. [paper]

- Principle-driven self-alignment of language models from scratch with minimal human supervision. Zhiqing Sun et al. NeurIPS 2023. [paper]

- Mind2Web: Towards a generalist agent for the web. Xiang Deng et al. NeurIPS Datasets and Benchmarks 2023. [paper]

- Towards general computer control: A multimodal agent for Red Dead Redemption II as a case study. Weihao Tan et al. arXiv 2024. [paper]

3.3.2 AI Interfaces to Human

- Guidelines for Human-AI Interaction. Saleema Amershi et al. CHI 2019. [paper]

- Design Principles for Generative AI Applications. Justin D. Weisz et al. CHI 2024. [paper]

- Graphologue: Exploring Large Language Model Responses with Interactive Diagrams. Peiling Jiang et al. UIST 2023. [paper]

- Sensecape: Enabling Multilevel Exploration and Sensemaking with Large Language Models. Sangho Suh et al. UIST 2023. [paper]

- Supporting Sensemaking of Large Language Model Outputs at Scale. Katy Ilonka Gero et al. CHI 2024. [paper]

- Luminate: Structured Generation and Exploration of Design Space with Large Language Models for Human-AI Co-Creation. Sangho Suh et al. CHI 2024. [paper]

- AI Chains: Transparent and Controllable Human-AI Interaction by Chaining Large Language Model Prompts. Tongshuang Wu et al. CHI 2022. [paper]

- Promptify: Text-to-Image Generation through Interactive Prompt Exploration with Large Language Models. Stephen Brade et al. CHI 2023. [paper]

- ChainForge: A Visual Toolkit for Prompt Engineering and LLM Hypothesis Testing. Ian Arawjo et al. CHI 2024. [paper]

- CoPrompt: Supporting Prompt Sharing and Referring in Collaborative Natural Language Programming. Li Feng et al. CHI 2024. [paper]

- Generating Automatic Feedback on UI Mockups with Large Language Models. Peitong Duan et al. CHI 2024. [paper]

- Rambler: Supporting Writing With Speech via LLM-Assisted Gist Manipulation. Susan Lin et al. CHI 2024. [paper]

- Embedding Large Language Models into Extended Reality: Opportunities and Challenges for Inclusion, Engagement, and Privacy. Efe Bozkir et al. arXiv 2024. [paper]

- GenAssist: Making Image Generation Accessible. Mina Huh et al. UIST 2023. [paper]

- “The less I type, the better”: How AI Language Models can Enhance or Impede Communication for AAC Users. Stephanie Valencia et al. CHI 2023. [paper]

- Re-examining Whether, Why, and How Human-AI Interaction Is Uniquely Difficult to Design. Qian Yang et al. CHI 2020. [paper]

4. AGI Systems: Implementing the Mechanism of AGI

4.2 Scalable Model Architectures

- Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. Noam Shazeer et al. arXiv 2017. [paper]

- Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention. Angelos Katharopoulos et al. arXiv 2020. [paper]

- Longformer: The Long-Document Transformer. Iz Beltagy et al. arXiv 2020. [paper]

- LightSeq: A High Performance Inference Library for Transformers. Xiaohui Wang et al. arXiv 2021. [paper]

- Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity. William Fedus et al. arXiv 2022. [paper]

- Efficiently Modeling Long Sequences with Structured State Spaces. Albert Gu et al. arXiv 2022. [paper]

- MegaBlocks: Efficient Sparse Training with Mixture-of-Experts. Trevor Gale et al. arXiv 2022. [paper]

- Training Compute-Optimal Large Language Models. Jordan Hoffmann et al. arXiv 2022. [paper]

- Effective Long-Context Scaling of Foundation Models. Wenhan Xiong et al. arXiv 2023. [paper]

- Hyena Hierarchy: Towards Larger Convolutional Language Models. Michael Poli et al. arXiv 2023. [paper]

- Stanford Alpaca: An Instruction-following LLaMA model. Rohan Taori et al. GitHub 2023. [code]

- RWKV: Reinventing RNNs for the transformer era. Bo Peng et al. arXiv 2023. [paper]

- Deja Vu: Contextual Sparsity for Efficient LLMs at Inference Time. Zichang Liu et al. arXiv 2023. [paper]

- Flash-LLM: Enabling Cost-Effective and Highly-Efficient Large Generative Model Inference with Unstructured Sparsity. Haojun Xia et al. arXiv 2023. [paper]

- ByteTransformer: A High-Performance Transformer Boosted for Variable-Length Inputs. Yujia Zhai et al. arXiv 2023. [paper]

- Tutel: Adaptive Mixture-of-Experts at Scale. Changho Hwang et al. arXiv 2023. [paper]

- Mamba: Linear-Time Sequence Modeling with Selective State Spaces. Albert Gu, Tri Dao. arXiv 2023. [paper]

- Hungry Hungry Hippos: Towards Language Modeling with State Space Models. Daniel Y. Fu et al. arXiv 2023. [paper]

- Retentive Network: A Successor to Transformer for Large Language Models. Yutao Sun et al. arXiv 2023.

- Mechanistic Design and Scaling of Hybrid Architectures. Michael Poli et al. arXiv 2024. [paper]

- Revisiting Knowledge Distillation for Autoregressive Language Models. Qihuang Zhong et al. arXiv 2024. [paper]

- DB-LLM: Accurate Dual-Binarization for Efficient LLMs. Hong Chen et al. arXiv 2024. [paper]

- Reducing Transformer Key-Value Cache Size with Cross-Layer Attention. William Brandon et al. arXiv 2024. [paper]

- You Only Cache Once: Decoder-Decoder Architectures for Language Models. Yutao Sun et al. arXiv 2024. [paper]

4.3 Large-scale Training

- Training Deep Nets with Sublinear Memory Cost. Tianqi Chen et al. arXiv 2016. [paper]

- Beyond Data and Model Parallelism for Deep Neural Networks. Zhihao Jia et al. arXiv 2018. [paper]

- GPipe: Efficient Training of Giant Neural Networks using Pipeline Parallelism. Yanping Huang et al. arXiv 2019. [paper]

- Parameter-Efficient Transfer Learning for NLP. Neil Houlsby et al. ICML 2019. [paper]

- Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism. Mohammad Shoeybi et al. arXiv 2020. [paper]

- Alpa: Automating Inter- and Intra-Operator Parallelism for Distributed Deep Learning. Lianmin Zheng et al. arXiv 2022. [paper]

- DeepSpeed Inference: Enabling Efficient Inference of Transformer Models at Unprecedented Scale. Reza Yazdani Aminabadi et al. arXiv 2022. [paper]

- Memorization Without Overfitting: Analyzing the Training Dynamics of Large Language Models. Kushal Tirumala et al. arXiv 2022. [paper]

- SWARM Parallelism: Training Large Models Can Be Surprisingly Communication-Efficient. Max Ryabinin et al. arXiv 2023. [paper]

- Training Trajectories of Language Models Across Scales. Mengzhou Xia et al. arXiv 2023. [paper]

- HexGen: Generative Inference of Foundation Model over Heterogeneous Decentralized Environment. Youhe Jiang et al. arXiv 2023. [paper]

- FusionAI: Decentralized Training and Deploying LLMs with Massive Consumer-Level GPUs. Zhenheng Tang et al. arXiv 2023. [paper]

- Ring Attention with Blockwise Transformers for Near-Infinite Context. Hao Liu et al. arXiv 2023. [paper]

- Pythia: A Suite for Analyzing Large Language Models Across Training and Scaling. Stella Biderman et al. arXiv 2023. [paper]

- Fine-tuning Language Models over Slow Networks using Activation Compression with Guarantees. Jue Wang et al. arXiv 2023. [paper]

- LLaMA-Adapter: Efficient Fine-tuning of Language Models with Zero-init Attention. Renrui Zhang et al. arXiv 2023. [paper]

- QLoRA: Efficient Finetuning of Quantized LLMs. Tim Dettmers et al. arXiv 2023. [paper]

- Efficient Memory Management for Large Language Model Serving with PagedAttention. Woosuk Kwon et al. arXiv 2023. [paper]

- Infinite-LLM: Efficient LLM Service for Long Context with DistAttention and Distributed KVCache. Bin Lin et al. arXiv 2024. [paper]

- OLMo: Accelerating the Science of Language Models. Dirk Groeneveld et al. arXiv 2024. [paper]

- On Efficient Training of Large-Scale Deep Learning Models: A Literature Review. Li Shen et al. arXiv 2023. [paper] pending

4.4 Inference Techniques

- FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness. Tri Dao et al. NeurIPS 2022. [paper]

- Draft & Verify: Lossless Large Language Model Acceleration via Self-Speculative Decoding. Jun Zhang et al. arXiv 2023. [paper]

- Towards Efficient Generative Large Language Model Serving: A Survey from Algorithms to Systems. Xupeng Miao et al. arXiv 2023. [paper]

- FlashDecoding++: Faster Large Language Model Inference on GPUs. Ke Hong et al. arXiv 2023. [paper]

- Fast Inference from Transformers via Speculative Decoding. Yaniv Leviathan et al. arXiv 2023. [paper]

- Fast Distributed Inference Serving for Large Language Models. Bingyang Wu et al. arXiv 2023. [paper]

- S-LoRA: Serving Thousands of Concurrent LoRA Adapters. Ying Sheng et al. arXiv 2023. [paper]

- TensorRT-LLM: A TensorRT Toolbox for Optimized Large Language Model Inference. NVIDIA. GitHub 2023. [code]

- Punica: Multi-Tenant LoRA Serving. Lequn Chen et al. arXiv 2023. [paper]

- S^3: Increasing GPU Utilization during Generative Inference for Higher Throughput. Yunho Jin et al. arXiv 2023. [paper]

- Multi-LoRA inference server that scales to 1000s of fine-tuned LLMs. Predibase. GitHub 2023. [code]

- Prompt Lookup Decoding. Apoorv Saxena. GitHub 2023. [code]

- FasterTransformer. NVIDIA. GitHub 2021. [code]

- DeepSpeed-FastGen: High-throughput Text Generation for LLMs via MII and DeepSpeed-Inference. Connor Holmes et al. arXiv 2024. [paper]

- SpecInfer: Accelerating Generative Large Language Model Serving with Tree-based Speculative Inference and Verification. Xupeng Miao et al. arXiv 2024. [paper]

- Medusa: Simple LLM Inference Acceleration Framework with Multiple Decoding Heads. Tianle Cai et al. arXiv 2024. [paper]

- Model Tells You What to Discard: Adaptive KV Cache Compression for LLMs. Suyu Ge et al. ICLR 2024. [paper]

- Efficient Streaming Language Models with Attention Sinks. Guangxuan Xiao et al. ICLR 2024. [paper]

- DeFT: Flash Tree-attention with IO-Awareness for Efficient Tree-search-based LLM Inference. Jinwei Yao et al. arXiv 2024. [paper]

- Efficiently Programming Large Language Models using SGLang. Lianmin Zheng et al. arXiv 2023. [paper]

- SPEED: Speculative Pipelined Execution for Efficient Decoding. Coleman Hooper et al. arXiv 2023. [paper]

- Sequoia: Scalable, Robust, and Hardware-aware Speculative Decoding. Zhuoming Chen et al. arXiv 2024. [paper] pending

4.5 Cost and Efficiency

- Demonstrate-Search-Predict: Composing Retrieval and Language Models for Knowledge-Intensive NLP. Omar Khattab et al. arXiv 2023. [paper]

- Automated Machine Learning: Methods, Systems, Challenges. Frank Hutter et al. Springer 2019.

- Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time. Mitchell Wortsman et al. arXiv 2022. [paper]

- Data Debugging with Shapley Importance over End-to-End Machine Learning Pipelines. Bojan Karlaš et al. arXiv 2022. [paper]

- Cost-Effective Hyperparameter Optimization for Large Language Model Generation Inference. Chi Wang et al. arXiv 2023. [paper]

- Large Language Models Are Human-Level Prompt Engineers. Yongchao Zhou et al. arXiv 2023. [paper]

- Merging by Matching Models in Task Subspaces. Derek Tam et al. arXiv 2023. [paper]

- Editing Models with Task Arithmetic. Gabriel Ilharco et al. arXiv 2023. [paper]

- PriorBand: Practical Hyperparameter Optimization in the Age of Deep Learning. Neeratyoy Mallik et al. arXiv 2023. [paper]

- An Empirical Study of Multimodal Model Merging. Yi-Lin Sung et al. arXiv 2023. [paper]

- DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines. Omar Khattab et al. arXiv 2023. [paper]

- FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance. Lingjiao Chen et al. arXiv 2023. [paper]

- Tandem Transformers for Inference Efficient LLMs. Aishwarya P S et al. arXiv 2024. [paper]

- AIOS: LLM Agent Operating System. Kai Mei et al. arXiv 2024. [paper]

- LoraHub: Efficient Cross-Task Generalization via Dynamic LoRA Composition. Chengsong Huang et al. arXiv 2024. [paper]

- AutoML in the Age of Large Language Models: Current Challenges, Future Opportunities and Risks. Alexander Tornede et al. arXiv 2024. [paper]

- Merging Experts into One: Improving Computational Efficiency of Mixture of Experts. Shwai He et al. EMNLP 2023. [paper] pending

4.6 Computing Platforms

- TVM: An Automated End-to-End Optimizing Compiler for Deep Learning. Tianqi Chen et al. arXiv 2018. [paper]

- TPU v4: An Optically Reconfigurable Supercomputer for Machine Learning with Hardware Support for Embeddings. Norman P. Jouppi et al. arXiv 2023. [paper]

5. AGI Alignment: Ensuring AGI Meets Various Needs

5.1 Expectations of AGI Alignment

- Human Compatible: Artificial Intelligence and the Problem of Control. Stuart Russell. Viking 2019.

- Artificial Intelligence, Values and Alignment. Iason Gabriel. Minds and Machines 2020. [paper]

- Alignment of Language Agents. Zachary Kenton et al. arXiv 2021. [paper]

- The Value Learning Problem. Nate Soares. Machine Intelligence Research Institute Technical Report. [paper]

- Concrete Problems in AI Safety. Dario Amodei et al. arXiv 2016. [paper]

- Ethical and social risks of harm from Language Models. Laura Weidinger et al. arXiv 2021. [paper]

- On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? Emily M. Bender et al. FAccT 2021. [paper]

- The global landscape of AI ethics guidelines. Anna Jobin et al. Nature Machine Intelligence 2019. [paper]

- Persistent Anti-Muslim Bias in Large Language Models. Abubakar Abid et al. AIES 2021. [paper]

- Toward Gender-Inclusive Coreference Resolution. Yang Trista Cao et al. ACL 2020. [paper]

- The Social Impact of Natural Language Processing. Dirk Hovy et al. ACL 2016. [paper]

- TruthfulQA: Measuring How Models Mimic Human Falsehoods. Stephanie Lin et al. ACL 2022. [paper]

- The Radicalization Risks of GPT-3 and Advanced Neural Language Models. Kris McGuffie et al. arXiv 2020. [paper]

- AI Transparency in the Age of LLMs: A Human-Centered Research Roadmap. Q. Vera Liao et al. arXiv 2023. [paper]

- Beyond Expertise and Roles: A Framework to Characterize the Stakeholders of Interpretable Machine Learning and their Needs. Harini Suresh et al. CHI 2021. [paper]

- Identifying and Mitigating the Security Risks of Generative AI. Clark Barrett et al. arXiv 2023. [paper]

- LLM Agents can Autonomously Hack Websites. Richard Fang et al. arXiv 2024. [paper]

- Deepfakes, Phrenology, Surveillance, and More! A Taxonomy of AI Privacy Risks. Hao-Ping Lee et al. CHI 2024. [paper]

- Privacy in the Age of AI. Sauvik Das et al. Communications of the ACM 2023. [paper]

5.2 Current Alignment Techniques

-

Learning to summarize with human feedback. Nisan Stiennon et al. NeurIPS 2020. [paper]

-

Second thoughts are best: Learning to re-align with human values from text edits. Ruibo Liu et al. NeurIPS 2022. [paper]

-

Training language models to follow instructions with human feedback. Long Ouyang et al. NeurIPS 2022. [paper]

-

Leashing the Inner Demons: Self-Detoxification for Language Models. Canwen Xu et al. AAAI 2022. paper

-

Aligning generative language models with human values. Ruibo Liu et al. NAACL 2022. [paper]

-

Training a helpful and harmless assistant with reinforcement learning from human feedback. Yuntao Bai et al. arXiv 2022. [paper]

-

Constitutional AI: Harmlessness from AI Feedback. Yuntao Bai et al. arXiv 2022. [paper]

-

Raft: Reward ranked finetuning for generative foundation model alignment. Hanze Dong et al. arXiv 2023. [paper]

-

Improving Language Models with Advantage-based Offline Policy Gradients. Ashutosh Baheti et al. arXiv 2023. [paper]

-

Training language models with language feedback at scale. Jérémy Scheurer et al. arXiv 2023. [paper]

-

A general theoretical paradigm to understand learning from human preferences. Mohammad Gheshlaghi Azar et al. arXiv 2023. [paper]

-

Let's Verify Step by Step. Hunter Lightman et al. arXiv 2023. [paper]

-

Open problems and fundamental limitations of reinforcement learning from human feedback. Stephen Casper et al. arXiv 2023. [paper]

-

Aligning Large Language Models through Synthetic Feedback. Sungdong Kim et al. arXiv 2023. [paper]

-

RLAIF: Scaling Reinforcement Learning from Human Feedback with AI Feedback. Harrison Lee et al. arXiv 2023. [paper]

-

Preference ranking optimization for human alignment. Feifan Song et al. arXiv 2023. [paper]

-

Improving Factuality and Reasoning in Language Models through Multiagent Debate. Yilun Du et al. arXiv 2023. [paper]

-

Large language model alignment: A survey. Tianhao Shen et al. arXiv 2023. [paper]

-

Direct preference optimization: Your language model is secretly a reward model. Rafael Rafailov et al. NeurIPS 2023. [paper]

-

LIMA: Less is more for alignment. Chunting Zhou et al. NeurIPS 2023. [paper]

5.3 How to approach AGI Alignments

-

Ethical and social risks of harm from Language Models. Laura Weidinger et al. arXiv 2021. [paper]

-

Beijing AI Safety International Consensus. Beijing Academy of Artificial Intelligence. 2024. [paper]

-

Counterfactual Explanations without Opening the Black Box: Automated Decisions and the GDPR. Sandra Wachter et al. Harvard Journal of Law & Technology, 2017. [paper]

-

Scalable agent alignment via reward modeling: a research direction. Jan Leike et al. arXiv 2018. [paper]

-

Building Ethics into Artificial Intelligence. Han Yu et al. IJCAI 2018. [paper]

-

Human Compatible: Artificial Intelligence and the Problem of Control. Stuart Russell. Viking, 2019. [paper]

-

Responsible artificial intelligence: How to develop and use AI in a responsible way. Virginia Dignum. Springer Nature, 2019. [paper]

-

Machine Ethics: The Design and Governance of Ethical AI and Autonomous Systems. Alan F. Winfield et al. Proceedings of the IEEE, 2019. [paper]

-

Open problems in cooperative AI. Allan Dafoe et al. arXiv 2020. [paper]

-

Artificial intelligence, values, and alignment. Iason Gabriel. Minds and Machines, 2020. [paper]

-

Cooperative AI: machines must learn to find common ground. Allan Dafoe et al. Nature 2021. [paper]

-

Machine morality, moral progress, and the looming environmental disaster. Ben Kenward et al. arXiv 2021. [paper]

-

X-Risk Analysis for AI Research. Dan Hendrycks et al. arXiv 2022. [paper]

-

Task decomposition for scalable oversight (AGISF Distillation). Charbel-Raphaël Segerie. blog 2023. [blog]

-

Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision. Collin Burns et al. arXiv 2023. [paper]

-

Whose opinions do language models reflect? Shibani Santurkar et al. ICML 2023. [paper]

-

AI Alignment: A Comprehensive Survey. Jiaming Ji et al. arXiv 2023. [paper]

-

Open problems and fundamental limitations of reinforcement learning from human feedback. Stephen Casper et al. arXiv 2023. [paper]

-

The Unlocking Spell on Base LLMs: Rethinking Alignment via In-Context Learning. Bill Yuchen Lin et al. arXiv 2023. [paper]

-

Large Language Model Alignment: A Survey. Tianhao Shen et al. arXiv 2023. [paper]

6. AGI Roadmap: Responsibly Approaching AGI

6.1 AI Levels: Charting the Evolution of Artificial Intelligence

-

Sparks of Artificial General Intelligence: Early experiments with GPT-4. Sébastien Bubeck et al. arXiv 2023. [paper]

-

Levels of AGI: Operationalizing Progress on the Path to AGI. Meredith Ringel Morris et al. arXiv 2024. [paper]

6.2 AGI Evaluation

6.2.1 Expectations for AGI Evaluation

-

Towards the systematic reporting of the energy and carbon footprints of machine learning. Peter Henderson et al. Journal of Machine Learning Research, 2020.

-

Green AI. Roy Schwartz et al. Communications of the ACM, 2020.

-

Evaluating Large Language Models Trained on Code. Mark Chen et al. arXiv 2021.

-

Documenting large webtext corpora: A case study on the colossal clean crawled corpus. Jesse Dodge et al. arXiv 2021. [paper]

-

On the opportunities and risks of foundation models. Rishi Bommasani et al. arXiv 2021. [paper]

-

Human-like systematic generalization through a meta-learning neural network. Brenden M Lake et al. Nature, 2023. [paper]

-

SuperBench is Measuring LLMs in The Open: A Critical Analysis. SuperBench Team. arXiv 2023.

-

Holistic Evaluation of Language Models. Percy Liang et al. arXiv 2023. [paper]

6.2.2 Current Evaluations and Their Limitations

-

SQuAD: 100,000+ Questions for Machine Comprehension of Text. Pranav Rajpurkar et al. arXiv 2016. [paper]

-

TriviaQA: A Large Scale Distantly Supervised Challenge Dataset for Reading Comprehension. Mandar Joshi et al. arXiv 2017. [paper]

-

CoQA: A conversational question answering challenge. Siva Reddy et al. Transactions of the Association for Computational Linguistics, 2019.

-

Accurate, reliable and fast robustness evaluation. Wieland Brendel et al. NeurIPS 2019. [paper]

-

Measuring massive multitask language understanding. Dan Hendrycks et al. arXiv 2020. [paper]

-

Evaluating model robustness and stability to dataset shift. Adarsh Subbaswamy et al. International Conference on Artificial Intelligence and Statistics (AISTATS) 2021. [paper]

-

MMDialog: A large-scale multi-turn dialogue dataset towards multi-modal open-domain conversation. Jiazhan Feng et al. arXiv 2022. [paper]

-

Self-Instruct: Aligning language models with self-generated instructions. Yizhong Wang et al. arXiv 2022. [paper]

-

Super-NaturalInstructions: Generalization via declarative instructions on 1600+ NLP tasks. Yizhong Wang et al. arXiv 2022. [paper]

-

Holistic analysis of hallucination in GPT-4V(ision): Bias and interference challenges. Chenhang Cui et al. arXiv 2023. [paper]

-

Evaluating the Robustness to Instructions of Large Language Models. Yuansheng Ni et al. arXiv 2023. [paper]

-

GAIA: a benchmark for General AI Assistants. Grégoire Mialon et al. arXiv 2023. [paper]

-

A Comprehensive Evaluation Framework for Deep Model Robustness. Jun Guo et al. Pattern Recognition, 2023. [paper]

-

AGIEval: A human-centric benchmark for evaluating foundation models. Wanjun Zhong et al. arXiv 2023. [paper]

-

MMMU: A massive multi-discipline multimodal understanding and reasoning benchmark for expert AGI. Xiang Yue et al. arXiv 2023. [paper]

-

Evaluating Large Language Model Creativity from a Literary Perspective. Murray Shanahan et al. arXiv 2023. [paper]

-

AgentBench: Evaluating LLMs as Agents. Xiao Liu et al. ICLR 2024. [paper]

-

Assessing and Understanding Creativity in Large Language Models. Yunpu Zhao et al. arXiv 2024. [paper]

-

Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena. Lianmin Zheng et al. NeurIPS 2023. [paper]

6.5 Further Considerations during AGI Development

-

Foundational Challenges in Assuring Alignment and Safety of Large Language Models. Usman Anwar et al. arXiv 2024. [paper]

-

Best Practices and Lessons Learned on Synthetic Data for Language Models. Ruibo Liu et al. arXiv 2024. [paper]

-

Advancing Social Intelligence in AI Agents: Technical Challenges and Open Questions. Leena Mathur et al. arXiv 2024. [paper]

7. Case Studies

7.1 AI for Science Discovery and Research

-

Highly accurate protein structure prediction with AlphaFold. John Jumper et al. Nature, 2021. [paper]

-

Automated Scientific Discovery: From Equation Discovery to Autonomous Discovery Systems. Stefan Kramer et al. arXiv 2023. [paper]

-

Predicting effects of noncoding variants with deep learning-based sequence model. Jian Zhou et al. Nature Methods, 2015. [paper](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4768299/)

-

Learning to See Physics via Visual De-animation. Jiajun Wu et al. NeurIPS 2017. [paper]

-

Deep learning for real-time gravitational wave detection and parameter estimation: Results with Advanced LIGO data. Daniel George et al. Physics Letters B, 2018. [paper]

-

Identifying quantum phase transitions with adversarial neural networks. Bart-Jan Rem et al. Nature Physics, 2019. [paper]

-

OpenAGI: When LLM Meets Domain Experts. Yingqiang Ge et al. NeurIPS 2023. [paper]

-

From dark matter to galaxies with convolutional networks. Xinyue Zhang et al. arXiv 2019. [paper]

-

Global optimization of quantum dynamics with AlphaZero deep exploration. Mogens Dalgaard et al. npj Quantum Information, 2020. [paper]

-

Learning to Exploit Temporal Structure for Biomedical Vision-Language Processing. Shruthi Bannur et al. CVPR, 2023. [paper]

-

MathBERT: A pre-trained language model for general NLP tasks in mathematics education. Jia Tracy Shen et al. arXiv 2021. [paper]

-

Molecular Optimization using Language Models. Krzysztof Maziarz et al. arXiv 2022. [paper]

-

RetroTRAE: retrosynthetic translation of atomic environments with Transformer. Umit Volkan Ucak et al. 2022. [paper]

-

ScholarBERT: bigger is not always better. Zhi Hong et al. arXiv 2022. [paper]

-

Galactica: A large language model for science. Ross Taylor et al. arXiv 2022. [paper]

-

Formal mathematics statement curriculum learning. Stanislas Polu et al. arXiv 2022. [paper]

-

Proof Artifact Co-Training for Theorem Proving with Language Models. Jesse Michael Han et al. ICLR 2022. [paper]

-

Solving quantitative reasoning problems with language models. Aitor Lewkowycz et al. arXiv 2022. [paper]

-

BioGPT: generative pre-trained transformer for biomedical text generation and mining. Renqian Luo et al. Briefings in Bioinformatics, 2022.

-

ChemCrow: Augmenting large-language models with chemistry tools. Andres M. Bran et al. arXiv 2023. [paper]

-

Autonomous chemical research with large language models. Daniil A. Boiko et al. Nature, 2023. [paper]

-

Emergent autonomous scientific research capabilities of large language models. Daniil A. Boiko et al. arXiv 2023. [paper]

-

MathPrompter: Mathematical Reasoning using Large Language Models. Shima Imani et al. ACL 2023 (Industry Track). [paper]

-

LLMs for Science: Usage for Code Generation and Data Analysis. Mohamed Nejjar et al. arXiv 2023. [paper]

-

MedAgents: Large Language Models as Collaborators for Zero-shot Medical Reasoning. Xiangru Tang et al. arXiv 2024. [paper]

7.2 Generative Visual Intelligence

-

Deep Unsupervised Learning using Nonequilibrium Thermodynamics. Jascha Sohl-Dickstein et al. ICML 2015. [paper]

-

Generative Modeling by Estimating Gradients of the Data Distribution. Yang Song et al. NeurIPS 2019. [paper]

-

Denoising Diffusion Probabilistic Models. Jonathan Ho et al. NeurIPS 2020. [paper]

-

Score-Based Generative Modeling through Stochastic Differential Equations. Yang Song et al. ICLR 2021. [paper]

-

GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models. Alex Nichol et al. ICML 2022. [paper]

-

SDEdit: Guided Image Synthesis and Editing with Stochastic Differential Equations. Chenlin Meng et al. ICLR 2022. [paper]

-

Video Diffusion Models. Jonathan Ho et al. NeurIPS 2022. [paper]

-

Hierarchical Text-Conditional Image Generation with CLIP Latents. Aditya Ramesh et al. arXiv 2022. [paper]

-

Classifier-Free Diffusion Guidance. Jonathan Ho et al. arXiv 2022. [paper]

-

Palette: Image-to-Image Diffusion Models. Chitwan Saharia et al. SIGGRAPH 2022. [paper]

-

High-Resolution Image Synthesis with Latent Diffusion Models. Robin Rombach et al. CVPR 2022. [paper]

-

Adding Conditional Control to Text-to-Image Diffusion Models. Lvmin Zhang et al. ICCV 2023. [paper]

-

Scalable Diffusion Models with Transformers. William Peebles et al. ICCV 2023. [paper]

-

Sequential Modeling Enables Scalable Learning for Large Vision Models. Yutong Bai et al. arXiv 2023. [paper]

-

Video Generation Models as World Simulators. Tim Brooks et al. OpenAI 2024. [paper]

7.3 World Models

-

Learning to See Physics via Visual De-animation. Jiajun Wu et al. NeurIPS 2017. [paper]

-

Safe model-based reinforcement learning with stability guarantees. Felix Berkenkamp et al. NeurIPS 2017. [paper]

-

SimNet: Learning Simulation-Based World Models for Physical Reasoning. Vicol, Paul, Menapace et al. ICLR 2022. [paper]

-

DreamIX: DreamFusion via Iterative Spatiotemporal Mixing. Anji Khalifa et al. arXiv 2022. [paper]

-

General-Purpose Embodied AI Agent via Reinforcement Learning with Internet-Scale Knowledge. Xiaoxiao Guo et al. arXiv 2022. [paper]

-

VQGAN-CLIP: Open Domain Image Generation and Editing with Natural Language Guidance. Katherine Crowson et al. arXiv 2022. [paper]

-

A Path Towards Autonomous Machine Intelligence. Yann LeCun. OpenReview, 2022. [paper]

-

Language Models Meet World Models: Embodied Experiences Enhance Language Models. Jiannan Xiang et al. NeurIPS 2023. [paper]

-

Mastering Diverse Domains through World Models. Danijar Hafner et al. arXiv 2023. [paper]

-

Acquisition of Multimodal Models via Retrieval. Scott Reed et al. arXiv 2023. [paper]

-

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents. Wenlong Huang et al. ICML 2022. [paper]

-

Language Models, Agent Models, and World Models: The LAW for Machine Reasoning and Planning. Zhiting Hu et al. arXiv 2023. [paper]

-

Reasoning with language model is planning with world model. Shibo Hao et al. arXiv 2023. [paper]

-

MetaSim: Learning to Generate Synthetic Datasets. Yuxuan Zhang et al. arXiv 2023. [paper]

-

World Model on Million-Length Video And Language With RingAttention. Hao Liu et al. arXiv 2024. [paper]

-

Genie: Generative Interactive Environments. Jake Bruce et al. arXiv 2024. [paper]

7.4 Decentralized LLM

-

Petals: Collaborative Inference and Fine-tuning of Large Models. Alexander Borzunov et al. arXiv 2022. [paper]

-

Blockchain for Deep Learning: Review and Open Challenges. Cluster Computing 2021. [paper]

-

FlexGen: High-Throughput Generative Inference of Large Language Models with a Single GPU. Ying Sheng et al. arXiv 2023. [paper]

-

Decentralized Training of Foundation Models in Heterogeneous Environments. Binhang Yuan et al. arXiv 2023. [paper]

7.5 AI for Coding

-

A framework for the evaluation of code generation models. Loubna Ben Allal et al. GitHub, 2023. [code]

-

Evaluating Large Language Models Trained on Code. Mark Chen et al. arXiv 2021. [paper]

-

Program Synthesis with Large Language Models. Jacob Austin et al. arXiv 2021. [paper]

-

Competition-level code generation with AlphaCode. Yujia Li et al. Science, 2022. [paper]

-

Efficient Training of Language Models to Fill in the Middle. Mohammad Bavarian et al. arXiv 2022. [paper]

-

SantaCoder: don't reach for the stars! Loubna Ben Allal et al. arXiv 2023. [paper]

-

StarCoder: may the source be with you! Raymond Li et al. arXiv 2023. [paper]

-

Large Language Models for Compiler Optimization. Chris Cummins et al. arXiv 2023. [paper]

-

Textbooks Are All You Need. Suriya Gunasekar et al. arXiv 2023. [paper]

-

InterCode: Standardizing and Benchmarking Interactive Coding with Execution Feedback. John Yang et al. arXiv 2023. [paper]

-

Reinforcement Learning from Automatic Feedback for High-Quality Unit Test Generation. Benjamin Steenhoek et al. arXiv 2023. [paper]

-

InCoder: A Generative Model for Code Infilling and Synthesis. Daniel Fried et al. arXiv 2023. [paper]

-

Refining Decompiled C Code with Large Language Models. Wai Kin Wong et al. arXiv 2023. [paper]

-

SWE-bench: Can Language Models Resolve Real-World