Supports high-speed streaming output, multi-turn conversations, zero-configuration deployment, and multiple tokens.

Fully compatible with the ChatGPT interface.

Eight related free-api projects are also available:

Moonshot AI (Kimi.ai) interface to API kimi-free-api

Zhipu AI (Zhipu Qingyan) interface to API glm-free-api

StepChat interface to API step-free-api

Alibaba Tongyi (Qwen) interface to API qwen-free-api

Metaso AI (Metaso) interface to API metaso-free-api

Iflytek Spark interface to API spark-free-api

MiniMax (Conch AI) interface to API hailuo-free-api

Lingxin Intelligence (Emohaa) interface to API emohaa-free-api

This reverse-engineered API is unstable. To avoid the risk of being banned, it is recommended to use the paid official DeepSeek API at https://platform.deepseek.com/.

This organization and its members do not accept any financial donations or transactions. This project is purely for research, exchange, and learning!

It is for personal use only. Providing external services or using it commercially is prohibited, to avoid putting pressure on the official service. Otherwise, you do so at your own risk!

https://udify.app/chat/IWOnEupdZcfCN0y7

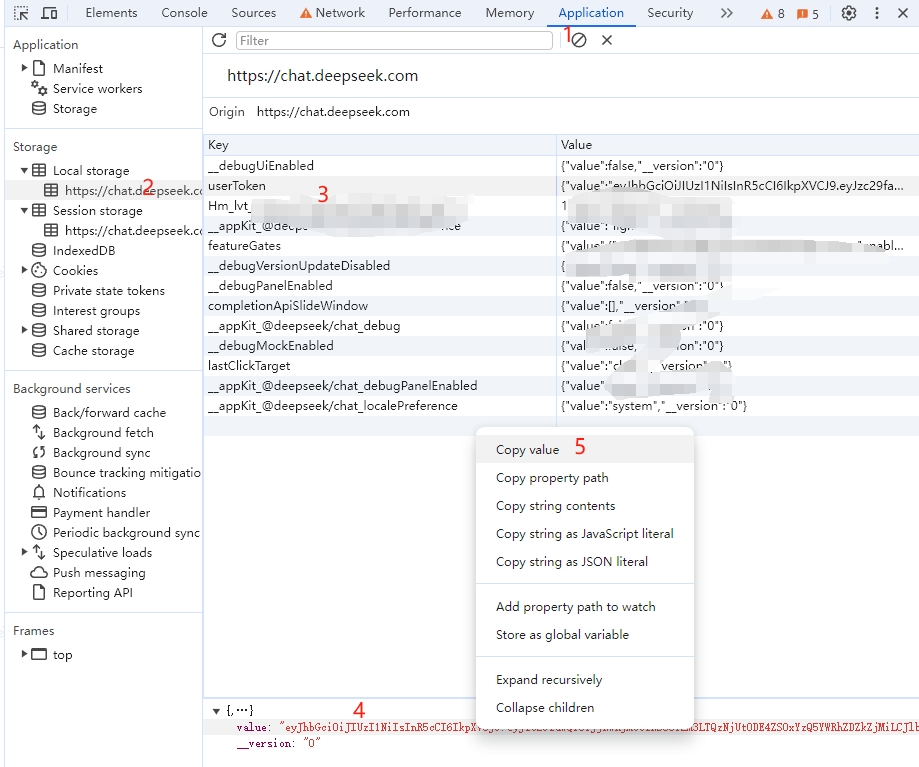

Get userToken value from DeepSeek

Open DeepSeek and start a conversation, then press F12 to open the developer tools and find the userToken value under Application > LocalStorage. Use it as the Bearer token in the Authorization header: Authorization: Bearer TOKEN

Currently, a single account can only produce one output at a time. To work around this, you can supply the userToken values of multiple accounts, separated by commas:

Authorization: Bearer TOKEN1,TOKEN2,TOKEN3

One of these will be selected each time the service is requested.
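To make the multi-token header concrete, here is a minimal Python sketch of how a comma-separated Authorization value could be split and one token picked per request. The function names are hypothetical, and the uniform random choice is an assumption: the text above only says that one token is selected each time.

```python
import random
from typing import List, Optional


def split_tokens(authorization: str) -> List[str]:
    """Extract the comma-separated userToken values from an Authorization header value."""
    scheme, _, credentials = authorization.strip().partition(" ")
    if scheme.lower() != "bearer":
        raise ValueError("expected a Bearer authorization header")
    tokens = [t.strip() for t in credentials.split(",") if t.strip()]
    if not tokens:
        raise ValueError("no token supplied")
    return tokens


def pick_token(authorization: str, rng: Optional[random.Random] = None) -> str:
    """Pick one token for this request.

    Assumption: a uniform random choice; the service's real strategy may differ.
    """
    return (rng or random).choice(split_tokens(authorization))


if __name__ == "__main__":
    print(pick_token("Bearer TOKEN1,TOKEN2,TOKEN3"))
```

Passing a seeded random.Random instance as rng makes the choice reproducible, which is handy when debugging.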

Please prepare a server with a public IP and open port 8000.

Pull the image and start the service

docker run -it -d --init --name deepseek-free-api -p 8000:8000 -e TZ=Asia/Shanghai vinlic/deepseek-free-api:latest

View real-time service logs

docker logs -f deepseek-free-api

Restart service

docker restart deepseek-free-api

Stop service

docker stop deepseek-free-api

version: '3'

services:
  deepseek-free-api:
    container_name: deepseek-free-api
    image: vinlic/deepseek-free-api:latest
    restart: always
    ports:
      - "8000:8000"
    environment:
      - TZ=Asia/Shanghai

Note: Some deployment regions may be unable to connect to DeepSeek. If the container log shows request timeouts or connection failures, please switch to another region for deployment!

Note: The container instance of a free account stops automatically after a period of inactivity, which causes a delay of 50 seconds or more on the next request. It is recommended to look into Render container keep-alive.

Fork this project to your GitHub account.

Visit Render and log in with your GitHub account.

Build your Web Service (New+ -> Build and deploy from a Git repository -> Connect your forked project -> Select the deployment area -> Select the instance type as Free -> Create Web Service).

Once the build completes, copy the assigned domain name and append the interface path to it to form the access URL.
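The note above recommends keeping the Render container alive. One possible approach, sketched here in Python under stated assumptions, is to send a periodic request from another machine. The 5-minute interval, the choice of URL to ping, and the function names are all assumptions rather than anything Render or this project prescribes.

```python
import time
import urllib.error
import urllib.request


def ping(url, timeout=60.0):
    """Send one GET request; return the HTTP status, or None if the host is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, so the request still counts as activity
    except (urllib.error.URLError, OSError):
        return None


def keep_alive(url, interval_seconds=300.0, rounds=None, fetch=ping, sleep=time.sleep):
    """Ping url every interval_seconds; rounds=None loops forever.

    fetch and sleep are injectable so the loop can be exercised without network access.
    """
    results = []
    done = 0
    while rounds is None or done < rounds:
        results.append(fetch(url))
        done += 1
        if rounds is None or done < rounds:
            sleep(interval_seconds)
    return results


if __name__ == "__main__":
    # Hypothetical domain: replace with the one Render assigned to your service.
    keep_alive("https://your-service.onrender.com/")
```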

Note: The request response timeout for Vercel free accounts is 10 seconds, but the interface response usually takes longer, and you may encounter a 504 timeout error returned by Vercel!

Please make sure you have installed the Node.js environment first.

npm i -g vercel --registry http://registry.npmmirror.com

vercel login

git clone https://github.com/LLM-Red-Team/deepseek-free-api

cd deepseek-free-api

vercel --prod

Please prepare a server with a public IP and open port 8000.

Please install the Node.js environment and configure the environment variables first, and confirm that the node command is available.

Install dependencies

npm i

Install PM2 to supervise the process

npm i -g pm2

Compile and build. When you see the dist directory, the build is complete.

npm run build

Start service

pm2 start dist/index.js --name "deepseek-free-api"

View real-time service logs

pm2 logs deepseek-free-api

Restart service

pm2 reload deepseek-free-api

Stop service

pm2 stop deepseek-free-api

The following customized clients make it faster and easier to access the free-api series projects, and they support document/image upload!

LobeChat developed by Clivia https://github.com/Yanyutin753/lobe-chat

ChatGPT Web developed by Guangguang@ https://github.com/SuYxh/chatgpt-web-sea

Currently, the OpenAI-compatible /v1/chat/completions interface is supported. You can access it with any OpenAI-compatible client, or through online services such as dify.
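As an illustration, a request to this interface might look like the Python sketch below, which uses only the standard library. It assumes the service is reachable at http://localhost:8000 (the port comes from the deployment steps, the host is an assumption), and the helper names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # assumption: adjust to wherever the service runs


def build_chat_request(tokens, content, model="deepseek_chat", stream=False, base_url=BASE_URL):
    """Build a POST request for /v1/chat/completions.

    tokens is a list of userToken values; several are joined with commas.
    """
    body = {
        "model": model,  # deepseek_chat or deepseek_code
        "messages": [{"role": "user", "content": content}],
        "stream": stream,
    }
    return urllib.request.Request(
        url=base_url + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + ",".join(tokens),
        },
        method="POST",
    )


def chat(tokens, content, **kwargs):
    """Send a non-streaming request and return the assistant's reply text."""
    request = build_chat_request(tokens, content, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(chat(["TOKEN"], "Who are you?"))
```

Keeping request construction separate from sending makes it easy to inspect the headers and body before anything goes over the network.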

Chat completion interface, compatible with OpenAI's chat-completions-api.

POST /v1/chat/completions

The request must set the Authorization header:

Authorization: Bearer [userToken value]

Request data:

{

// model必须为deepseek_chat或deepseek_code

"model" : " deepseek_chat " ,

"messages" : [

{

"role" : " user " ,

"content" : "你是谁? "

}

],

// 如果使用SSE流请设置为true,默认false

"stream" : false

}Response data:

{

"id" : " " ,

"model" : " deepseek_chat " ,

"object" : " chat.completion " ,

"choices" : [

{

"index" : 0 ,

"message" : {

"role" : " assistant " ,

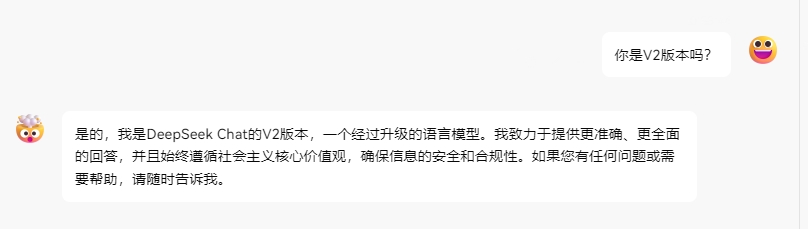

"content" : " 我是DeepSeek Chat,一个由中国深度求索公司开发的智能助手,基于人工智能技术构建,旨在通过自然语言处理和机器学习算法来提供信息查询、对话交流和解答问题等服务。我的设计理念是遵循社会主义核心价值观,致力于为用户提供准确、安全、有益的信息和帮助。 "

},

"finish_reason" : " stop "

}

],

"usage" : {

"prompt_tokens" : 1 ,

"completion_tokens" : 1 ,

"total_tokens" : 2

},

"created" : 1715061432

}Check whether the userToken is alive. If alive is not true, otherwise it is false. Please do not call this interface frequently (less than 10 minutes).

POST /token/check

Request data:

{
    "token": "eyJhbGciOiJIUzUxMiIsInR5cCI6IkpXVCJ9..."
}

Response data:

{
    "live": true
}

If you use Nginx as a reverse proxy for deepseek-free-api, please add the following configuration items to improve streaming output and the overall experience.

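# Disable proxy buffering. When set to off, Nginx passes each response to the client as soon as it is received from the backend server.
proxy_buffering off;

# Enable chunked transfer encoding, which lets the server send dynamically generated content in chunks without knowing the total size in advance.
chunked_transfer_encoding on;

# Enable TCP_NOPUSH, which tells Nginx to fill packets with as much data as possible before sending them to the client. It is normally used together with sendfile and can improve network efficiency.
tcp_nopush on;

# Enable TCP_NODELAY, which tells Nginx to send small packets immediately instead of delaying them. In some cases this reduces network latency.
tcp_nodelay on;

# Set the keep-alive timeout, here 120 seconds. If the client and server exchange no further data within this time, the connection is closed.
keepalive_timeout 120;

Since the inference side is not part of deepseek-free-api, tokens cannot be counted, so the usage figures are returned as fixed numbers.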
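When stream is set to true, the reply arrives as server-sent events rather than as a single JSON body, which is why the proxy settings above matter. The Python sketch below shows one way a client could reassemble the text. It assumes OpenAI-style chunks (lines of the form data: {...} carrying choices[].delta.content, ending with data: [DONE]); that chunk format is not spelled out in this document, so treat it as an assumption, and the function name is hypothetical.

```python
import json


def iter_sse_content(lines):
    """Yield text fragments from an iterable of SSE lines (str or bytes).

    Assumes OpenAI-style chat.completion.chunk payloads.
    """
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:
                yield text


if __name__ == "__main__":
    sample = [
        b'data: {"choices":[{"index":0,"delta":{"role":"assistant","content":"Hel"}}]}\n',
        b"\n",
        b'data: {"choices":[{"index":0,"delta":{"content":"lo"}}]}\n',
        b"data: [DONE]\n",
    ]
    print("".join(iter_sse_content(sample)))
```

An HTTP response object opened with urllib.request.urlopen can be passed directly as lines, since iterating over it yields one line of bytes at a time.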