Do you have a large folder of memes you want to search semantically? Do you have a Linux server with an Nvidia GPU? You do; this is now mandatory.

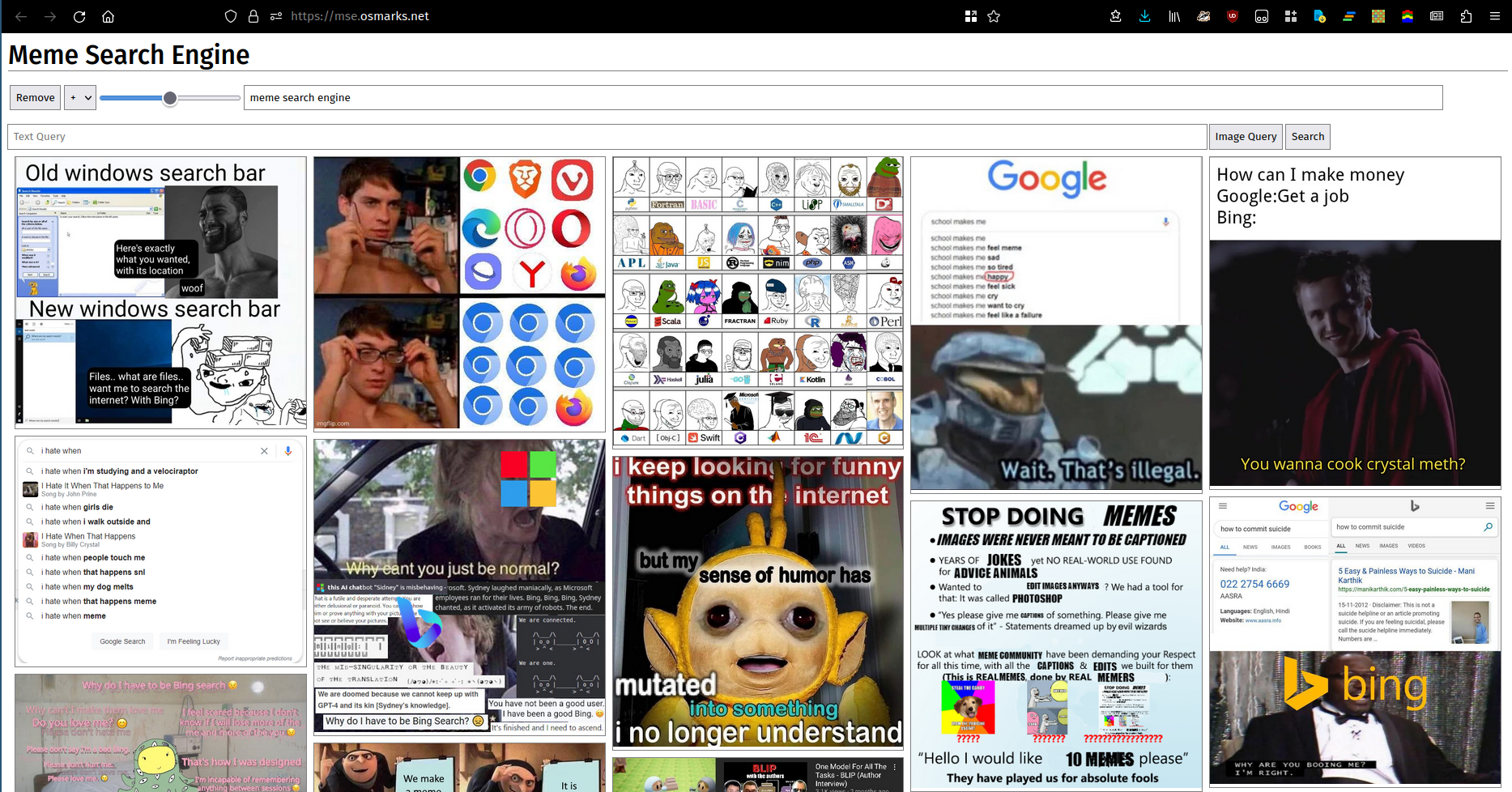

They say a picture is worth a thousand words. Unfortunately, many (most?) sets of words cannot be adequately described by pictures. Regardless, here is a picture. You can use a running instance here.

This is untested. It might work. The new Rust version simplifies some steps (it integrates its own thumbnailing).

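Serve your meme files from a static webserver (for a quick test, `python -m http.server` works). Install the Python dependencies with `pip` from `requirements.txt` (the versions probably shouldn't need to match exactly if you need to change them; I just put in what I currently have installed).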
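The pins in `requirements.txt` are only what happened to be installed, so it can help to see where your environment differs before changing anything. Below is a minimal standard-library sketch using `importlib.metadata`. It assumes pins are written as `name==version` (optionally with extras or an environment marker) and silently skips other specifier styles such as `>=`.

```python
"""Compare installed package versions with `name==version` pins (sketch)."""
import re
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path

# Package name, optional [extras], then ==version (stops at whitespace or ';').
PIN_RE = re.compile(r"([A-Za-z0-9_.\-]+)(?:\[[^\]]*\])?==([^\s;]+)")


def parse_pins(text: str) -> dict[str, str]:
    """Return {package: pinned_version} for every exactly pinned line."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        match = PIN_RE.match(line)
        if match:
            pins[match.group(1)] = match.group(2)
    return pins


def report(pins: dict[str, str]) -> list[str]:
    """List packages that are missing or installed at a different version."""
    lines = []
    for name, pinned in sorted(pins.items()):
        try:
            installed = version(name)
        except PackageNotFoundError:
            lines.append(f"{name}: MISSING (pinned {pinned})")
            continue
        if installed != pinned:
            lines.append(f"{name}: {installed} installed, {pinned} pinned")
    return lines


if __name__ == "__main__":
    for entry in report(parse_pins(Path("requirements.txt").read_text())):
        print(entry)
```

A mismatch reported here is informational, not necessarily a problem.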

`transformers` is required for SigLIP support. Run `thumbnailer.py` periodically (ideally at the same time as index reloads), and run `clip_server.py` as a background service.

An example configuration for `clip_server.py` is in `clip_server_config.json`.

The keys in `clip_server_config.json`:

* `device` should probably be `cuda` or `cpu`; the model will run on this device.
* `model` selects the model to load.
* `model_name` is the name of the model for metrics purposes.
* `max_batch_size` controls the maximum allowed batch size. Higher values generally give somewhat better performance (though in most cases the bottleneck is currently elsewhere) at the cost of higher VRAM use.
* `port` is the port to run the HTTP server on.

Then run `meme-search-engine` (Rust), also as a background service.
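For the `clip_server_config.json` keys listed above, here is a minimal sketch that writes the file and sanity-checks it. Every value is a placeholder (the `models/siglip` path, `siglip` label and port 8081 are hypothetical), and the type checks are this sketch's own assumptions, not rules enforced by `clip_server.py`, which may accept or require other keys.

```python
"""Write and sanity-check a clip_server_config.json (illustrative sketch)."""
import json
from pathlib import Path

# Placeholder values only; adjust for your machine.
EXAMPLE_CONFIG = {
    "device": "cuda",          # or "cpu"
    "model": "models/siglip",  # hypothetical model location
    "model_name": "siglip",    # label used for metrics only
    "max_batch_size": 128,     # higher: somewhat faster, more VRAM
    "port": 8081,              # hypothetical HTTP port for clip_server.py
}


def check_config(cfg: dict) -> None:
    """Raise ValueError if a documented key is missing or obviously wrong."""
    for key in ("device", "model", "model_name", "max_batch_size", "port"):
        if key not in cfg:
            raise ValueError(f"missing key: {key}")
    for key in ("device", "model", "model_name"):
        if not isinstance(cfg[key], str) or not cfg[key]:
            raise ValueError(f"{key} must be a non-empty string")
    batch = cfg["max_batch_size"]
    if isinstance(batch, bool) or not isinstance(batch, int) or batch < 1:
        raise ValueError("max_batch_size must be a positive integer")
    port = cfg["port"]
    if isinstance(port, bool) or not isinstance(port, int) or not 1 <= port <= 65535:
        raise ValueError("port must be an integer in 1..65535")


def write_config(cfg: dict, path: Path) -> None:
    """Validate, then write the config as indented JSON."""
    check_config(cfg)
    path.write_text(json.dumps(cfg, indent=4) + "\n")


if __name__ == "__main__":
    write_config(EXAMPLE_CONFIG, Path("clip_server_config.json"))
```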

The `meme-search-engine` configuration keys:

* `clip_server` is the full URL for the backend server.
* `db_path` is the path for the SQLite database of images and embedding vectors.
* `files` is where meme files will be read from. Subdirectories are indexed.
* `port` is the port to serve HTTP on.
* Set `enable_thumbs` to `true` to serve compressed images.

Build the frontend with `npm install`, then `node src/build.js`; it is configured through `frontend_config.json`.

The `frontend_config.json` keys:

* `image_path` is the base URL of your meme webserver (with trailing slash).
* `backend_url` is the URL `mse.py` is exposed on (trailing slash probably optional).

`clip_server.py`.

See here for information on MemeThresher, the new automatic meme acquisition/rating system (under `meme-rater`). Deploying it yourself is anticipated to be somewhat tricky but should be roughly doable:

1. Edit `crawler.py` with your own source and run it to collect an initial dataset.
2. Run `mse.py` with a config file like the provided one to index it.
3. Use `rater_server.py` to collect an initial dataset of pairs.
4. Use `train.py` to train a model. You might need to adjust hyperparameters, since I have no idea which ones are good.
5. Run `active_learning.py` on the best available checkpoint to get new pairs to rate.
6. Use `copy_into_queue.py` to copy the new pairs into the `rater_server.py` queue.
7. Adapt `library_processing_server.py` and schedule `meme_pipeline.py` to run periodically.

Meme Search Engine uses an in-memory FAISS index to hold its embedding vectors, because I was lazy and it works fine (~100MB total RAM used for my 8000 memes). If you want to store significantly more than that, you will have to switch to a more efficient/compact index (see here). Because the vector indices are held exclusively in memory, you will need to either persist them to disk or use indices that are fast to build, add to and remove from (presumably PCA/PQ indices). With more traffic, the CLIP model may also eventually become a bottleneck, as I also have no batching strategy. Indexing is currently GPU-bound, since the new model appears somewhat slower at high batch sizes and I improved the image loading pipeline. You may also want to scale down displayed memes to cut bandwidth needs.
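To see why a flat in-memory index grows linearly with the library, here is a NumPy sketch of brute-force cosine search. It is not FAISS and not this project's code; it computes the same thing as an exact inner-product index (such as FAISS's `IndexFlatIP`) over L2-normalized vectors. Raw float32 vector storage is N × d × 4 bytes; the embedding dimension of 1152 used below is an assumption, so substitute your model's actual output size.

```python
"""Brute-force cosine-similarity search over an in-memory embedding matrix (sketch)."""
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """L2-normalize along the last axis so dot products equal cosine similarities."""
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norms, 1e-12)


def search(index: np.ndarray, query: np.ndarray, k: int) -> tuple[np.ndarray, np.ndarray]:
    """Return (scores, ids) of the k rows of `index` most similar to `query`.

    `index` is an (N, d) matrix of normalized rows; `query` is a (d,) vector.
    Every search touches all N rows, which is what makes a flat index exact but costly.
    """
    scores = index @ normalize(query)          # (N,) cosine similarities
    k = min(k, len(scores))
    top = np.argpartition(-scores, k - 1)[:k]  # unordered top-k in O(N)
    top = top[np.argsort(-scores[top])]        # sort only those k, best first
    return scores[top], top


def flat_index_bytes(n_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw storage for a flat float32 index: N * d * 4 bytes (ignores overhead)."""
    return n_vectors * dim * bytes_per_value


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dim = 1152  # assumed embedding size; use your model's real output dimension
    index = normalize(rng.standard_normal((8000, dim)).astype(np.float32))
    scores, ids = search(index, index[42], k=5)
    print(ids, scores)  # row 42 (the query itself) ranks first
    print(flat_index_bytes(8000, dim) / 1e6, "MB of raw vectors")
```

Compressed indices (for example PQ) trade some accuracy for storing far fewer bytes per vector than this flat layout.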