The repository contains code for generating short poems using generative language models with a GPT architecture.

The generator is a transformer language model based on the GPT architecture. It accounts both for Russian grammar (as general-purpose models such as ruGPT do) and for Russian phonetics, including the rules of rhyme and poetic meter. Details are in the presentation.

The model binaries are packaged in the inkoziev/verslibre:latest Docker image.

Download and run the image:

sudo docker pull inkoziev/verslibre:latest

sudo docker run -it inkoziev/verslibre:latest

After launch, the program will ask you for a Telegram bot token.

Once all the models have loaded, start the bot by sending it the /start command in its chat. The bot will offer three random topics for a poem, or you can enter your own. A topic can be any phrase headed by a noun, for example “poem generator”.

This bot is available in Telegram as @verslibre_bot.

Generation examples:

* * *

Любовь - источник вдохновения,

Души непризнанных людей.

И день весеннего цветения,

Омытый зеленью дождей…

* * *

Душа, гонимая страстями,

Тревожит, веет теплотой.

Любовь, хранимая стихами,

И примиренье, и покой.
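Both quatrains above follow an ABAB rhyme scheme. The real system relies on the poetic transcriptor's phonetic markup for rhyme and meter; the sketch below is only a crude, hypothetical illustration (the names `ending` and `rhyme_scheme` are ours) that compares the last two letters of each line's final word and ignores stress entirely, so it will misjudge many real Russian rhymes.

```python
import re
import string
from typing import List

# Lowercase Cyrillic words; ё (U+0451) lies outside the а-я range, so list it explicitly.
WORD_RE = re.compile(r"[а-яё]+")


def ending(line: str, size: int = 2) -> str:
    """Return the last `size` letters of the line's final word (crude, stress-blind)."""
    words = WORD_RE.findall(line.lower())
    return words[-1][-size:] if words else ""


def rhyme_scheme(lines: List[str]) -> str:
    """Label lines with letters; lines sharing the same crude ending get the same letter."""
    seen: List[str] = []
    labels = []
    for line in lines:
        key = ending(line)
        if key not in seen:
            seen.append(key)
        labels.append(string.ascii_uppercase[seen.index(key)])
    return "".join(labels)
```

For example, `rhyme_scheme` labels each of the two quatrains above as "ABAB".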

Besides the generative model itself, correct operation depends heavily on the poetic transcriptor, which annotates the source poems used to train the models. You can read more about how the transcriptor works here.

The inkoziev/haiku:latest Docker image runs the haiku generator as a Telegram bot.

Download the image and run:

sudo docker pull inkoziev/haiku:latest

sudo docker run -it inkoziev/haiku

The program will ask for a Telegram bot token. The models then load (about a minute), after which you can chat with the bot. Enter a seed: a noun or a phrase. Generating several candidate poems on a CPU takes about 30 seconds. The bot then shows the first candidate and offers either to rate it or to show the next one.
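The show-rate-next flow described above can be modelled as a small state machine. This is a hypothetical sketch, not the bot's actual code: the class `CandidateBrowser` and its methods are our own names, and we assume all candidates for a seed are generated up front as a list of strings.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class CandidateBrowser:
    """Hypothetical holder for the poems generated for one seed."""
    candidates: List[str]
    index: int = 0
    ratings: Dict[int, int] = field(default_factory=dict)

    def current(self) -> Optional[str]:
        """The candidate currently shown, or None once all have been shown."""
        if self.index < len(self.candidates):
            return self.candidates[self.index]
        return None

    def next(self) -> Optional[str]:
        """Advance to the next candidate; stays exhausted after the last one."""
        if self.index < len(self.candidates):
            self.index += 1
        return self.current()

    def rate(self, score: int) -> None:
        """Record the user's rating for the candidate currently shown."""
        if self.current() is None:
            raise IndexError("no candidate to rate")
        self.ratings[self.index] = score
```

Keeping ratings keyed by candidate position lets a rating be attached to whichever poem was on screen when the user pressed the rating button.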

This bot is available in Telegram as @haiku_guru_bot.

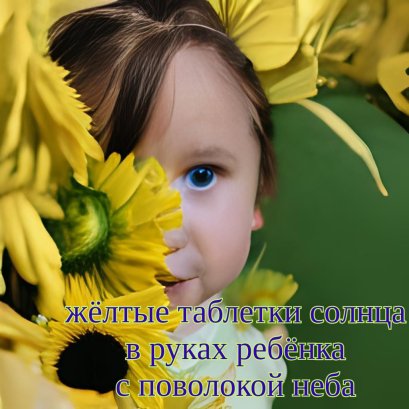

Because this is a randomized generative model, entering the same seed again usually will not reproduce a result. Save the good results, illustrate them with an image-generation model such as ruDALLE, and you get completely unique content.
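Here "seed" means the topic phrase, not a random-number seed. The same topic most likely yields different poems because decoding samples from the model's next-token distribution. The toy sketch below (plain Python with made-up logits, not the project's decoding code) shows temperature sampling: with an unseeded generator the output changes from run to run, while a fixed `random.Random` seed makes it repeatable.

```python
import math
import random
from typing import List, Optional, Sequence


def sample_index(logits: Sequence[float], temperature: float, rng: random.Random) -> int:
    """Draw one index from softmax(logits / temperature)."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(x - top) for x in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(steps: List[Sequence[float]], temperature: float = 1.0,
             rng: Optional[random.Random] = None) -> List[int]:
    """Sample one token index per step from toy, fixed logits (no real model involved)."""
    rng = rng if rng is not None else random.Random()  # unseeded: varies per run
    return [sample_index(logits, temperature, rng) for logits in steps]
```

Lowering `temperature` concentrates probability on the most likely tokens, which makes output more predictable but less varied.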

More examples of haiku can be seen on my blog.

The tmp subdirectory contains files with part of the training data:

poetry_corpus.txt - a corpus of filtered quatrains, with the | character as the line separator; used to further train (fine-tune) the ruGPT model.

poem_generator_dataset.dat - a dataset for training the ruGPT model that generates the text of a poem from a topic (key phrase).

captions_generator_rugpt.dat - a dataset for training the ruGPT model that generates a poem's title from its text.
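A minimal reader for poetry_corpus.txt might look like the sketch below. It assumes (this is not stated above) that the file is UTF-8 text with one quatrain per physical line and its verse lines joined by |; the function name `read_quatrains` is hypothetical.

```python
from typing import Iterable, Iterator, List


def read_quatrains(lines: Iterable[str]) -> Iterator[List[str]]:
    """Yield each poem as a list of verse lines.

    Assumes one poem per input line, with verse lines separated by '|'.
    Accepts any iterable of strings, e.g. an open file object:

        with open("tmp/poetry_corpus.txt", encoding="utf-8") as f:
            for quatrain in read_quatrains(f):
                ...
    """
    for raw in lines:
        raw = raw.strip()
        if not raw:  # skip blank lines
            continue
        yield [verse.strip() for verse in raw.split("|")]
```

Accepting an iterable rather than a path keeps the function easy to test and lets large files be streamed line by line.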

A description of how the training corpus was prepared can be found here.