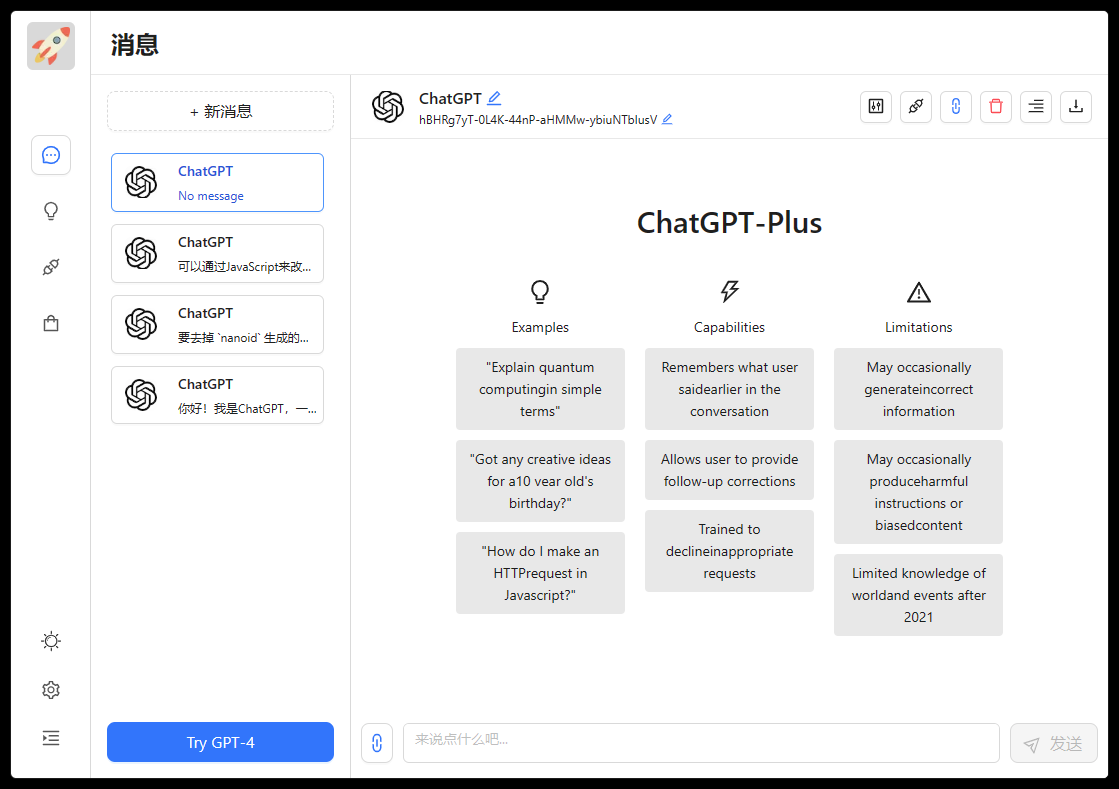

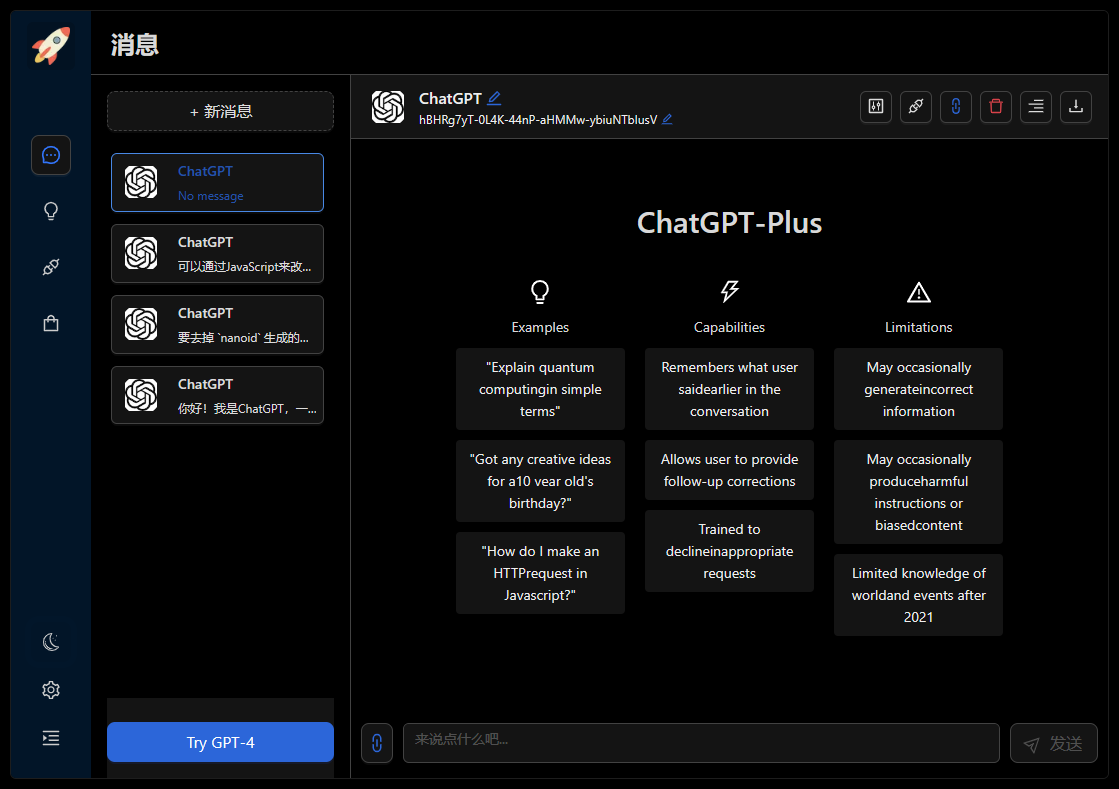

ChatGPT-Plus is an application that uses the official ChatGPT API.

Demo / Issues (feedback) / Gitpod (development) / Vercel (deployment)


Like this project? Please give it a Star!

Or share it with your friends to help it improve!

The ChatGPT-Plus client is a wrapper application around OpenAI's official ChatGPT API.

To use this module in Node.js, choose between two access methods:

| Method | Free? | Robust? | Quality? |
|---|---|---|---|
| `ChatGPTAPI` | No | ✅ Yes | ✅ Real ChatGPT model |
| `ChatGPTUnofficialProxyAPI` | ✅ Yes | ☑️ Possibly | ✅ Real ChatGPT |

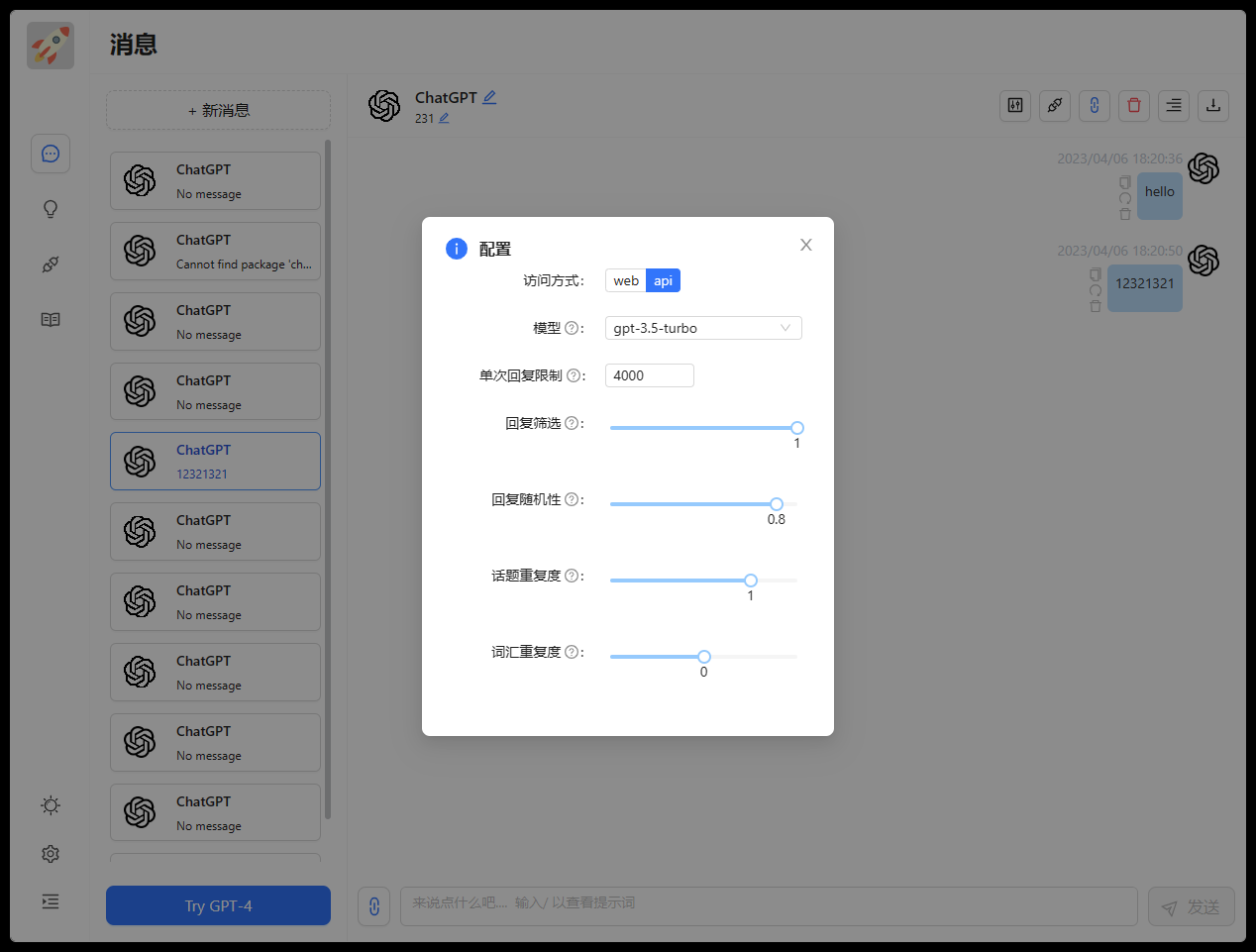

`ChatGPTAPI` - Uses the `gpt-3.5-turbo-0301` model with the official OpenAI chat completions API (official and robust, but not free). You can override the model, completion parameters, and system message to fully customize your assistant.

`ChatGPTUnofficialProxyAPI` - Uses an unofficial proxy server to access ChatGPT's backend API in a way that bypasses Cloudflare (uses the real ChatGPT and is lightweight, but relies on a third-party server and is rate-limited).

These two methods have very similar APIs, so switching between them should be simple.

Note: We strongly recommend using `ChatGPTAPI` because it uses OpenAI's officially supported API. In a future release, we may discontinue support for `ChatGPTUnofficialProxyAPI`.
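Because the two methods take similar options, the choice between them can be driven by which credential is configured. The following is a minimal sketch, not this project's actual code: `chooseClient` is a hypothetical helper, and the option names `apiKey`, `accessToken`, and `apiReverseProxyUrl` are assumed to match the constructor options of the `chatgpt` package, so check them against the version you install.

```javascript
// Hypothetical helper: decide which chatgpt-api client to construct
// from the credentials found in an env-like object.
function chooseClient(env) {
  const apiKey = (env.OPENAI_API_KEY || '').trim();
  const accessToken = (env.OPENAI_ACCESS_TOKEN || '').trim();

  if (apiKey) {
    // Prefer the official method whenever an API key is present.
    return { method: 'ChatGPTAPI', options: { apiKey } };
  }

  if (accessToken) {
    const options = { accessToken };
    const proxy = (env.API_REVERSE_PROXY || '').trim();
    // Only override the proxy URL when one is explicitly configured.
    if (proxy) options.apiReverseProxyUrl = proxy;
    return { method: 'ChatGPTUnofficialProxyAPI', options };
  }

  throw new Error('Set OPENAI_API_KEY or OPENAI_ACCESS_TOKEN');
}

// Example: chooseClient(process.env) returns the method name and the
// options object to pass to the matching constructor.
```

The sketch prefers the API key when both credentials are set, in line with the recommendation above.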

Requests are made through the function module provided by chatgpt-api.

You can use Gitpod for online development:

Or clone the project for local development and follow these steps:

```bash
# clone the project
git clone https://github.com/zhpd/chatgpt-plus.git
```

If you don't have a git environment, you can download the zip package directly, unzip it, and enter the project directory.

This project is built on Node.js and requires Node.js 14.0 or later. Make sure you're using `node >= 18` so `fetch` is available (or `node >= 14` if you install a fetch polyfill).
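To confirm that a given runtime meets this requirement, a check along these lines may help. It is a minimal sketch: `checkRuntime` and its return values are hypothetical names chosen for illustration.

```javascript
// Classify a runtime by Node.js major version and fetch availability.
function checkRuntime(nodeVersion, hasFetch) {
  // process.version looks like "v18.16.0"; keep only the major number.
  const major = Number(nodeVersion.replace(/^v/, '').split('.')[0]);
  if (major < 14) return 'unsupported';
  return hasFetch ? 'ok' : 'needs-fetch-polyfill';
}

// Report the status of the runtime this script is executed with.
console.log(checkRuntime(process.version, typeof globalThis.fetch === 'function'));
```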

This project uses the API officially provided by OpenAI, so you need to apply for an API key and access token.

- Register on the official OpenAI platform: https://platform.openai.com/ (a proxy or VPN may be required to access it from some regions)

- Obtain an `ApiKey` or `AccessToken` through other methods. Click to view

Once you have them, add the API key and access token to the `chatgpt-plus/service/.env` file.

We recommend developing in the VSCode editor: install the `ESLint` and `Prettier` plug-ins and turn on `Format On Save` in the settings.

Configure the port and the API request address in the `.env` file in the root directory.

| Environment variable | Default value | Description |
|---|---|---|
| `PORT` | 3000 | Port |
| `NEXT_PUBLIC_API_URL` | http://localhost:3002 | API address |

You can copy the `.env.example` file in the root directory, rename the copy to `.env`, and modify it:

```bash
# port
PORT=3000

# api url
NEXT_PUBLIC_API_URL=http://localhost:3002
```

```bash
# enter the project directory
cd chatgpt-plus

# install dependencies
npm install

# develop
npm run dev
```

Once it has started successfully, open http://localhost:3000 in your browser to see the result.

Configure the port, ApiKey, and AccessToken in .env in the service directory.

| Environment variable | Default value | Description |
|---|---|---|
| `PORT` | 3002 | Port |
| `OPENAI_API_KEY` | - | API key |
| `OPENAI_ACCESS_TOKEN` | - | Access token |
| `API_REVERSE_PROXY` | https://api.pawan.krd/backend-api/conversation | Reverse proxy |
| `TIMEOUT_MS` | 60000 | Timeout in milliseconds |

You can directly copy the .env.example file in the service directory for modification, and change the file name to .env

```bash
# service/.env

# OpenAI API Key - https://platform.openai.com/overview
OPENAI_API_KEY=

# change this to an `accessToken` extracted from the ChatGPT site's `https://chat.openai.com/api/auth/session` response
OPENAI_ACCESS_TOKEN=

# Reverse Proxy default 'https://bypass.churchless.tech/api/conversation'
API_REVERSE_PROXY=

# timeout
TIMEOUT_MS=100000
```
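A service might read these variables as shown below. This is a sketch rather than the project's actual loader: `loadServiceConfig` and the returned field names are hypothetical, the `PORT` and `TIMEOUT_MS` defaults are taken from the table above, and the reverse proxy is left empty when unset because the default is left to the service.

```javascript
// Hypothetical loader for the service settings described above.
function loadServiceConfig(env) {
  // Fall back to the documented defaults when a variable is unset or empty.
  const port = Number(env.PORT || 3002);
  const timeoutMs = Number(env.TIMEOUT_MS || 60000);

  if (!Number.isInteger(port) || port <= 0) {
    throw new Error('PORT must be a positive integer');
  }
  if (!Number.isFinite(timeoutMs) || timeoutMs <= 0) {
    throw new Error('TIMEOUT_MS must be a positive number of milliseconds');
  }

  return {
    port,
    timeoutMs,
    apiKey: env.OPENAI_API_KEY || '',
    accessToken: env.OPENAI_ACCESS_TOKEN || '',
    reverseProxy: env.API_REVERSE_PROXY || '',
  };
}

// Example: const config = loadServiceConfig(process.env);
```

Validating the numeric values at startup surfaces a mistyped `.env` entry immediately instead of as a confusing timeout later.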

```bash
# enter the project directory
cd chatgpt-plus

# enter the service directory
cd service

# install dependencies
npm install

# develop
npm run dev
```

Once it has started successfully, the back-end service is up and running.

To deploy with Docker, you first need to install Docker.

Use the configuration file in the `docker-compose` folder to pull and run the image.

Use Vercel for one-click deployment.

Front end:

- Set `API_URL` in the `.env` file in the root directory to the public address of your actual back-end interface.
- Run `npm install` to install dependencies.
- Run `npm run build` to package the code.
- Copy the `dist` folder to the directory of your website's front-end service.
- Enter the `dist` folder.
- Run `npm run start` to start the service.

Back end:

- Enter the `service` folder.
- Run `npm install` to install dependencies.
- Run `npm run build` to package the code.
- Copy the `service/dist` folder to the directory of your website's back-end service.
- Enter the `service/dist` folder.
- Run `npm run start` to start the service.

Note: If you do not want to package the code, you can copy the `service` folder directly to the server and run `npm install` and `npm run start` to start the service.

To access ChatGPT through the official API, set `OPENAI_API_KEY` in the back-end `service/.env` file:

```bash
# OpenAI API Key
OPENAI_API_KEY=
```

This project uses the API officially provided by OpenAI. You need to register an OpenAI account first.

To access ChatGPT through the unofficial proxy, set the `OPENAI_ACCESS_TOKEN` access token in the back-end `service/.env` file:

```bash
# change this to an `accessToken` extracted from the ChatGPT
OPENAI_ACCESS_TOKEN=
```

You need to obtain an OpenAI access token from the ChatGPT web application. You can use one of the following methods, which require an email and password and return an access token:

These libraries work with accounts that authenticate with email and password (for example, they do not support accounts authenticated with Microsoft/Google).

Alternatively, you can obtain an `accessToken` manually by logging into the ChatGPT web application and opening https://chat.openai.com/api/auth/session, which returns a JSON object containing your `accessToken` string.

Access tokens are valid for a number of days.
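If you save the JSON returned by the session URL, the token can be pulled out with a few lines of code. This is a minimal sketch: `extractAccessToken` is a hypothetical helper, and it assumes only that the response is a JSON object with a top-level `accessToken` string, as described above.

```javascript
// Hypothetical helper: read the accessToken field from the session JSON text.
function extractAccessToken(sessionJson) {
  const session = JSON.parse(sessionJson);
  const token = session && session.accessToken;
  if (typeof token !== 'string' || token.length === 0) {
    // An empty or missing field usually means the session is not logged in.
    throw new Error('No accessToken field found in the session response');
  }
  return token;
}

// Example: paste the returned value into OPENAI_ACCESS_TOKEN in service/.env.
// const token = extractAccessToken(savedResponseText);
```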

Note: Using a reverse proxy exposes your access token to a third party. This should not have adverse effects, but consider the risks before using this method.

You can override the reverse proxy by setting the `API_REVERSE_PROXY` proxy address in the back-end `service/.env` file:

```bash
# Reverse Proxy
API_REVERSE_PROXY=
```

Known reverse proxies run by community members include:

| Reverse proxy URL | Author | Rate limit | Last checked |
|---|---|---|---|
| https://bypass.churchless.tech/api/conversation | @acheong08 | 5 req / 10 seconds by IP | 3/24/2023 |
| https://api.pawan.krd/backend-api/conversation | @PawanOsman | 50 req / 15 seconds (~3 r/s) | 3/23/2023 |

Note: Information on how the reverse proxy works is currently not released to prevent OpenAI from disabling access.

Q: If I only use the front-end page, where do I change the request interface?

A: The API_URL field in the .env file in the root directory.

Q: Why is there no typewriter effect on the front end?

A: One possible cause is that Nginx is acting as a reverse proxy with buffering enabled, so it tries to buffer a certain amount of data from the back end before sending it to the browser. Try adding `proxy_buffering off;` after the reverse proxy parameters and then reload Nginx. Other web servers can be configured in a similar way.

Thanks to everyone who contributed!

If you find this project helpful, you can give it a Star or share it with your friends. Your support is my biggest motivation!

MIT © zhpd