minichat

1.0.0

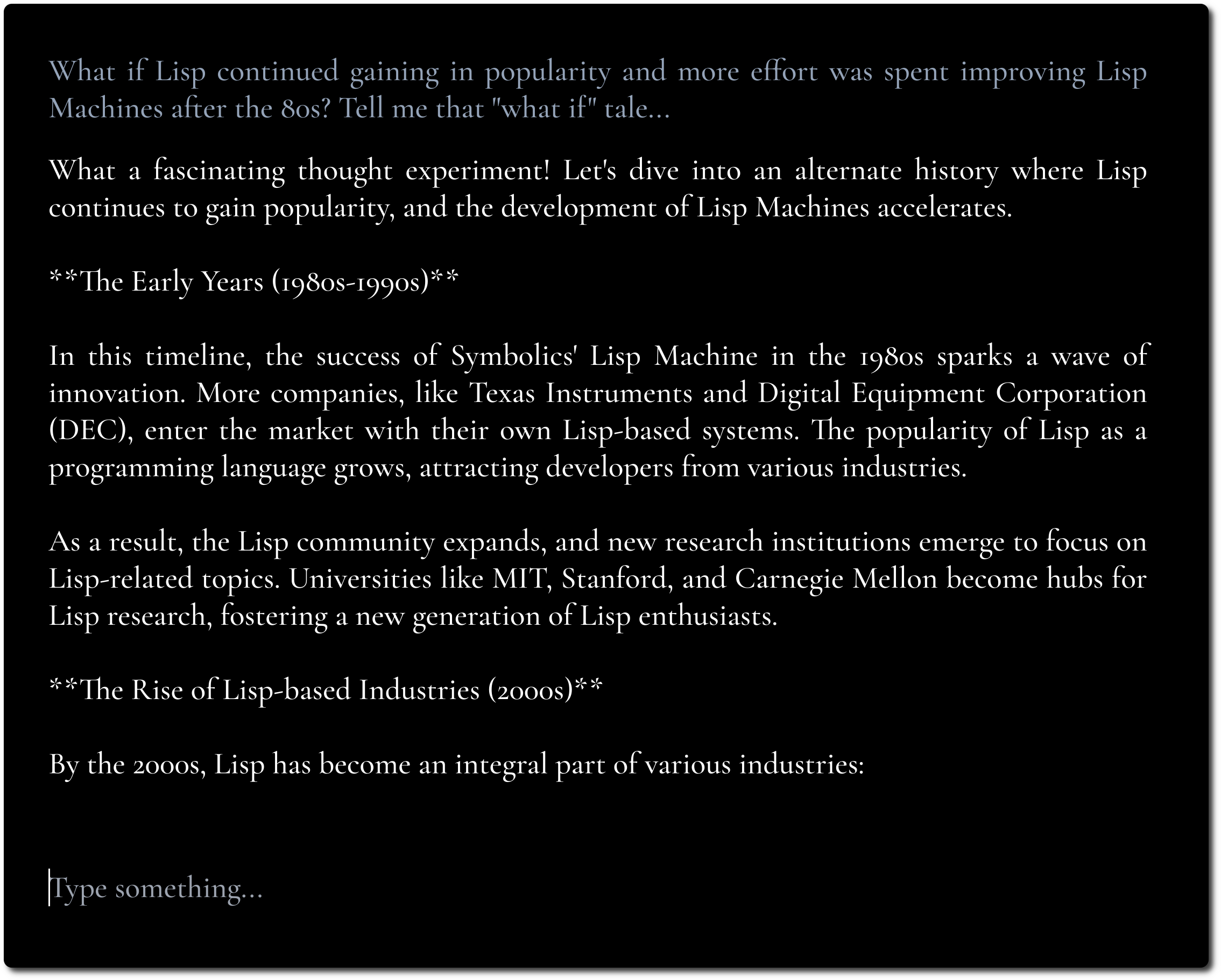

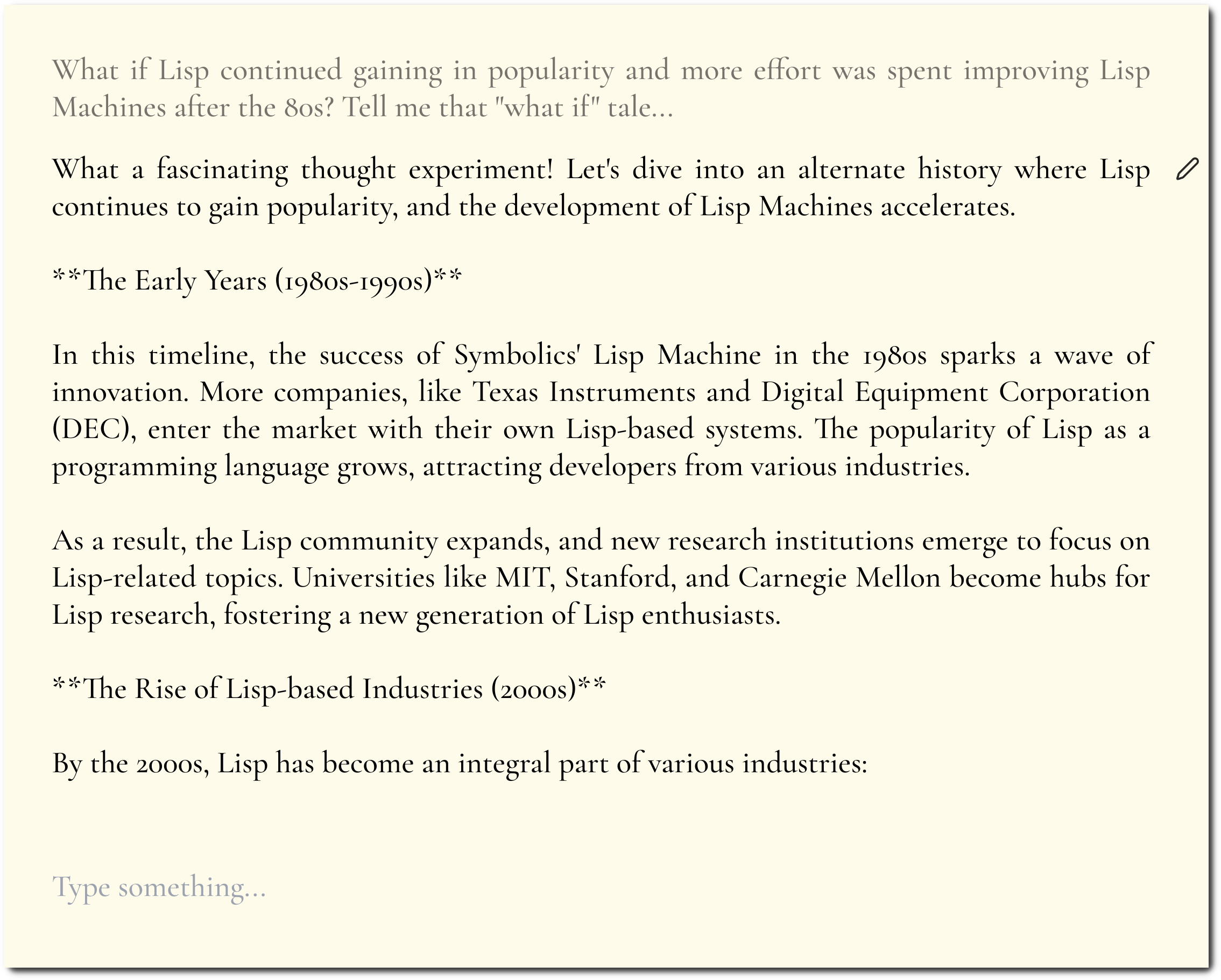

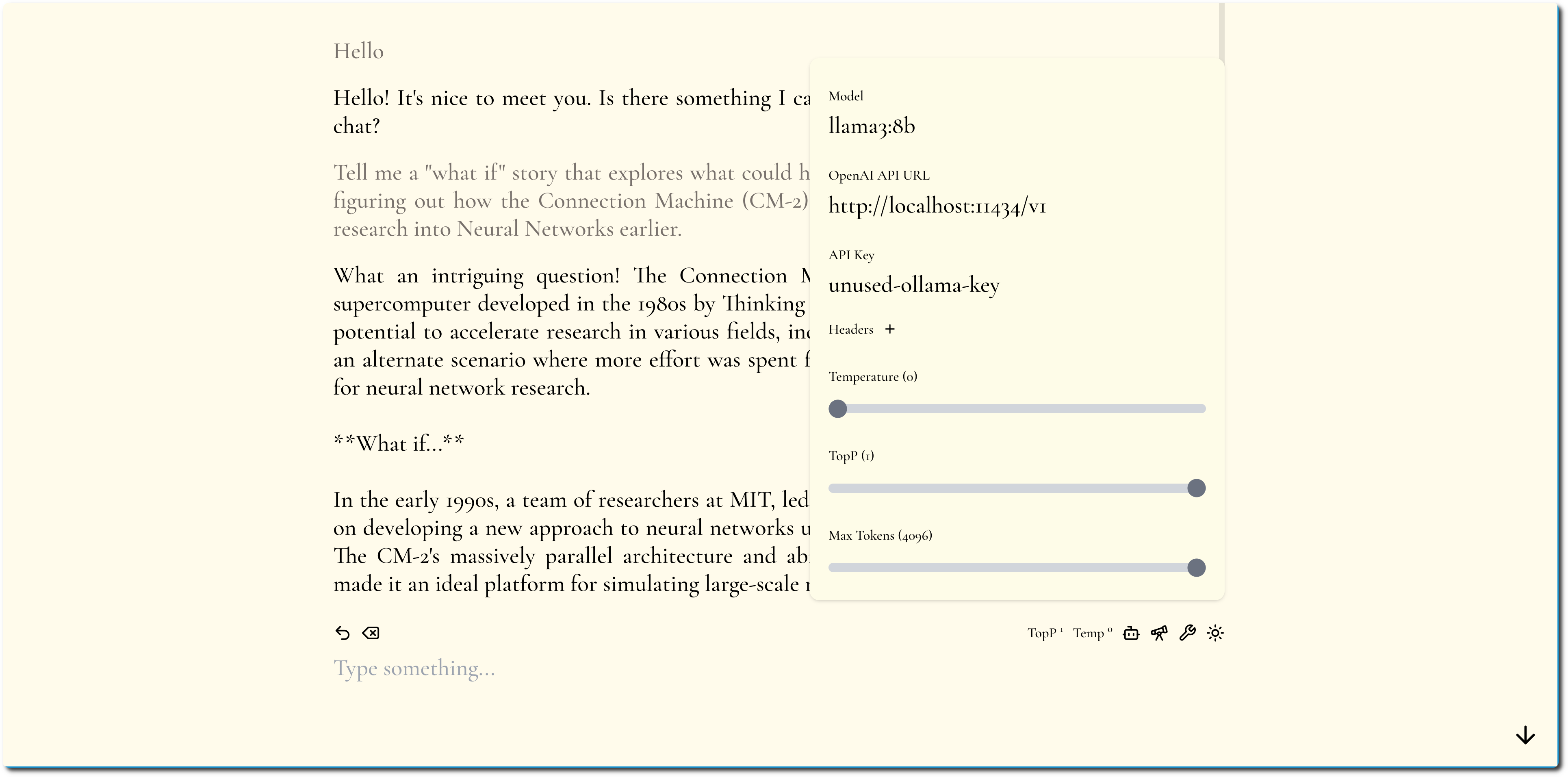

A minimalistic LLM chat UI, inspired by Fooocus and Pi.

If you have a CORS-enabled API endpoint, you can try it here.
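Because the browser calls your endpoint directly, the endpoint has to answer CORS requests. The following is a rough sketch of one way to check this. It sends a preflight `OPTIONS` request with `curl` and looks for an `Access-Control-Allow-Origin` header. The helper name `has_cors_header` and the example URL are hypothetical. This is only a heuristic, because a real browser also checks headers such as `Access-Control-Allow-Headers`.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the HTTP response headers on stdin include
# an Access-Control-Allow-Origin header (header names are case-insensitive).
has_cors_header() {
  grep -qi '^access-control-allow-origin:'
}

# Example (placeholder URL; the Origin assumes MiniChat is served locally
# on port 3216). -D - prints response headers to stdout, -o discards the body.
# curl -s -o /dev/null -D - -X OPTIONS \
#   -H 'Origin: http://localhost:3216' \
#   -H 'Access-Control-Request-Method: POST' \
#   -H 'Access-Control-Request-Headers: content-type' \
#   https://api.example.com/v1/chat/completions | has_cors_header && echo "CORS enabled"
```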

The goal is to focus on the content and hide away as much tinkering and tweaking as possible.

We emphasize the content by using bold typography and minimal design.

We try to keep configuration minimal yet flexible.

MiniChat supports OpenAI-compatible APIs. See the Docker Compose section for running MiniChat with various model providers through gateways such as Portkey.
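An OpenAI-compatible chat endpoint takes a JSON body containing a `model` and a list of `messages`. The sketch below builds such a body in plain shell. The helpers `json_escape` and `chat_body` are hypothetical. The escaping is deliberately minimal: it handles only backslashes and double quotes, so it suits single-line messages only.

```shell
#!/bin/sh
# Hypothetical helper: escape backslashes first, then double quotes,
# so the value can be embedded in a JSON string (no newline handling).
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Hypothetical helper: print a single-turn chat completion request body.
# Usage: chat_body MODEL MESSAGE
chat_body() {
  model=$(json_escape "$1")
  content=$(json_escape "$2")
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' "$model" "$content"
}

# Example against Ollama's OpenAI-compatible endpoint (assumes Ollama's
# default port 11434 is reachable from the host):
# curl -s http://localhost:11434/v1/chat/completions \
#   -H 'Content-Type: application/json' \
#   -d "$(chat_body llama3:8b 'Why is the sky blue?')"
```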

You can run MiniChat with Docker; the image is less than 2 MB.

```shell
docker run -p 3216:3216 ghcr.io/functorism/minichat:master
```
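The container may take a moment before it starts serving. A small, hypothetical `retry` helper can re-run a command until it succeeds, for example to wait until the UI answers on port 3216. It assumes `curl` is installed on the host. The helper is an illustration and not part of MiniChat.

```shell
#!/bin/sh
# Hypothetical helper: run "$@" up to $1 times, sleeping 1 second between
# failed attempts. Returns 0 on the first success, 1 if every attempt fails.
retry() {
  attempts=$1
  shift
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Only sleep when another attempt remains.
    if [ "$i" -lt "$attempts" ]; then
      sleep 1
    fi
    i=$((i + 1))
  done
  return 1
}

# Example (assumes the container above is running and curl is installed):
# retry 10 curl -sf -o /dev/null http://localhost:3216/ && echo "MiniChat is up"
```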

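To run MiniChat together with Ollama, start the Compose stack. Then, from a second terminal, pull a model into the Ollama container:

```shell
docker compose -f docker-compose-ollama.yml up
docker exec minichat-ollama-1 ollama pull llama3:8b
```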
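Before selecting the model in the UI, you can confirm that the pull finished. `ollama list` prints a header row followed by one row per model, with the tag in the first column. The hypothetical `has_model` helper below checks that output for an exact tag match.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the `ollama list` output on stdin has a row
# (after the header) whose first column exactly equals the given model tag.
has_model() {
  awk -v m="$1" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}

# Example, using the container name from the commands above:
# docker exec minichat-ollama-1 ollama list | has_model llama3:8b && echo "model ready"
```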

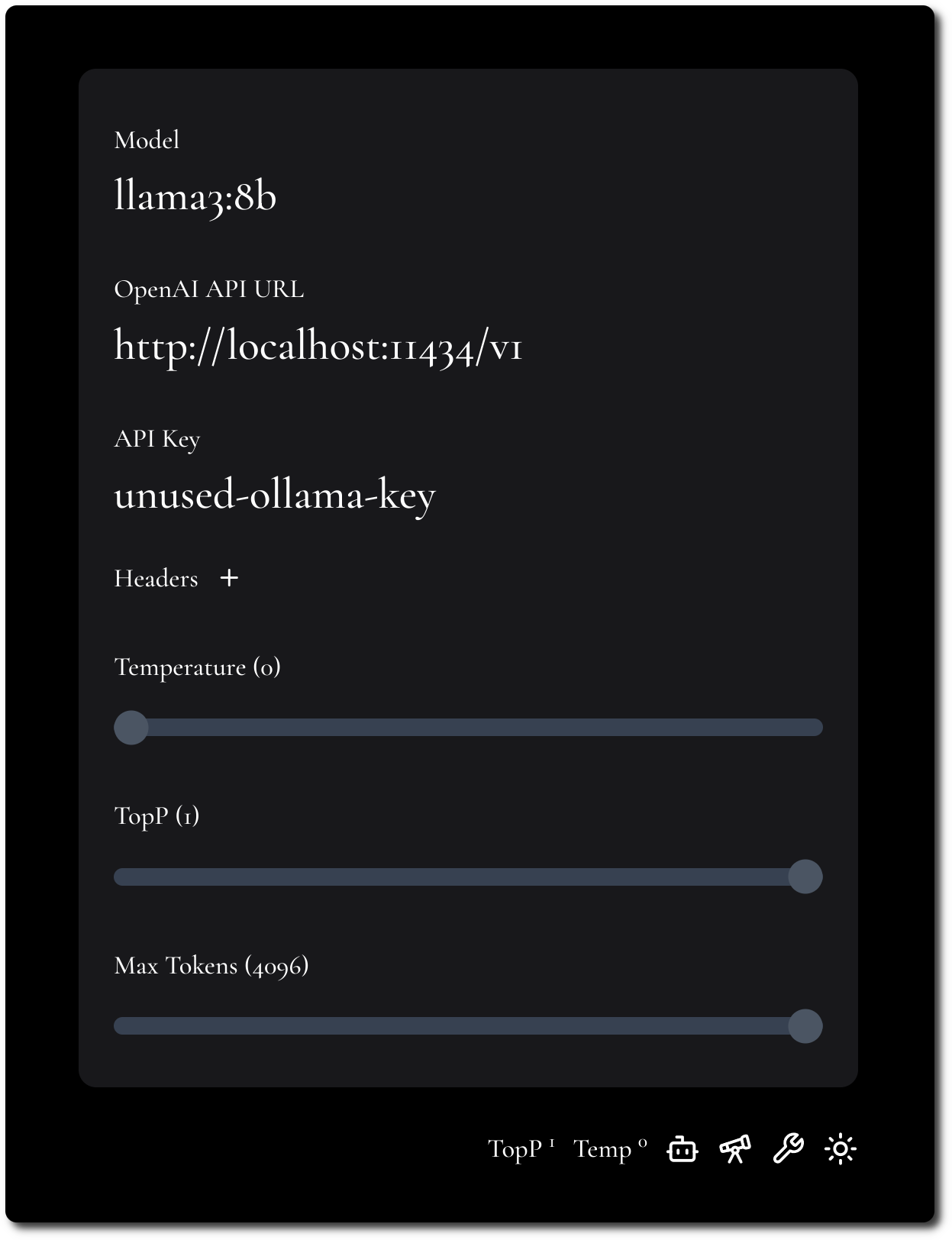

UI Settings:

To run MiniChat with the Portkey gateway:

```shell
docker compose -f docker-compose-portkey.yml up
```

UI Settings:

To run MiniChat with Text Generation Inference (TGI):

```shell
docker compose -f docker-compose-tf-tgi.yml up
```

UI Settings: