AI generates novels with one click

About the project • Change log • Novel generation prompt • Quick start • Demo usage guide • Contribution

The project includes a novel generator based on large language models such as GPT, along with a collection of novel generation prompts and tutorials. We welcome community contributions and keep updating the project to provide the best possible novel writing experience.

Long-Novel-GPT is a novel generator based on large language models such as GPT. It uses a hierarchical outline/chapter/text structure to keep the plot of the novel coherent; it retrieves context through a precise outline->chapter->text mapping, which keeps API call costs down; and it keeps refining the result based on its own feedback or the user's, until you have the novel you had in mind.

Structured writing: The hierarchical structure keeps track of how the novel's plot develops.

Reflective cycle: Continuously refines the generated outline, chapters, and text.

Cost optimization: Intelligent context management keeps API call costs fixed.

Community-driven: Contributions of prompts and improvement suggestions are welcome, so that we can move the project forward together.
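To make the outline->chapter->text mapping more concrete, here is a minimal sketch of how such a hierarchy could supply context at a fixed cost. It is an illustration under stated assumptions, not the project's actual code: the `Node` class and the `build_context` function are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One unit at any level: an outline line or a chapter summary (hypothetical type)."""
    content: str
    children: list["Node"] = field(default_factory=list)


def build_context(outline: list[Node], path: tuple[int, int]) -> str:
    """Collect only the ancestors of the passage being written.

    `path` is (outline_index, chapter_index). The context holds the matching
    outline line and chapter summary rather than the whole book, so its size
    does not grow with the length of the novel.
    """
    outline_idx, chapter_idx = path
    outline_node = outline[outline_idx]
    chapter_node = outline_node.children[chapter_idx]
    return f"Outline: {outline_node.content}\nChapter: {chapter_node.content}"


# Example: one outline line that has been expanded into two chapter summaries.
outline = [
    Node("The hero leaves home", [Node("A farewell at dawn"), Node("The road north")]),
]
print(build_context(outline, (0, 1)))
```

Because each request carries only the ancestors of the passage being written, the prompt size per call stays roughly constant no matter how long the book becomes.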

Online demo: Long-Novel-GPT Demo

Supports individually re-creating selected paragraphs while authoring (by quoting text)

The generation of prompts for outlines, chapters, and main text has been optimized.

There are three built-in prompts to choose from for outline, chapters, and text: New, Expanded, and Polished

Supports entering your own Prompt

Improved the interaction logic of the prompt preview

Supports one-click generation, which automatically generates the complete outline, chapters, and text

Added support for the GLM models

Added support for multiple large language models:

OpenAI series: o1-preview, o1-mini, gpt4o, etc.

Claude series: Claude-3.5-Sonnet, etc.

Wenxin Yiyan: ERNIE-4.0, ERNIE-3.5, ERNIE-Novel

Doubao: doubao-lite/pro series

Supports any custom model compatible with the OpenAI interface

Optimized the generation interface and user experience

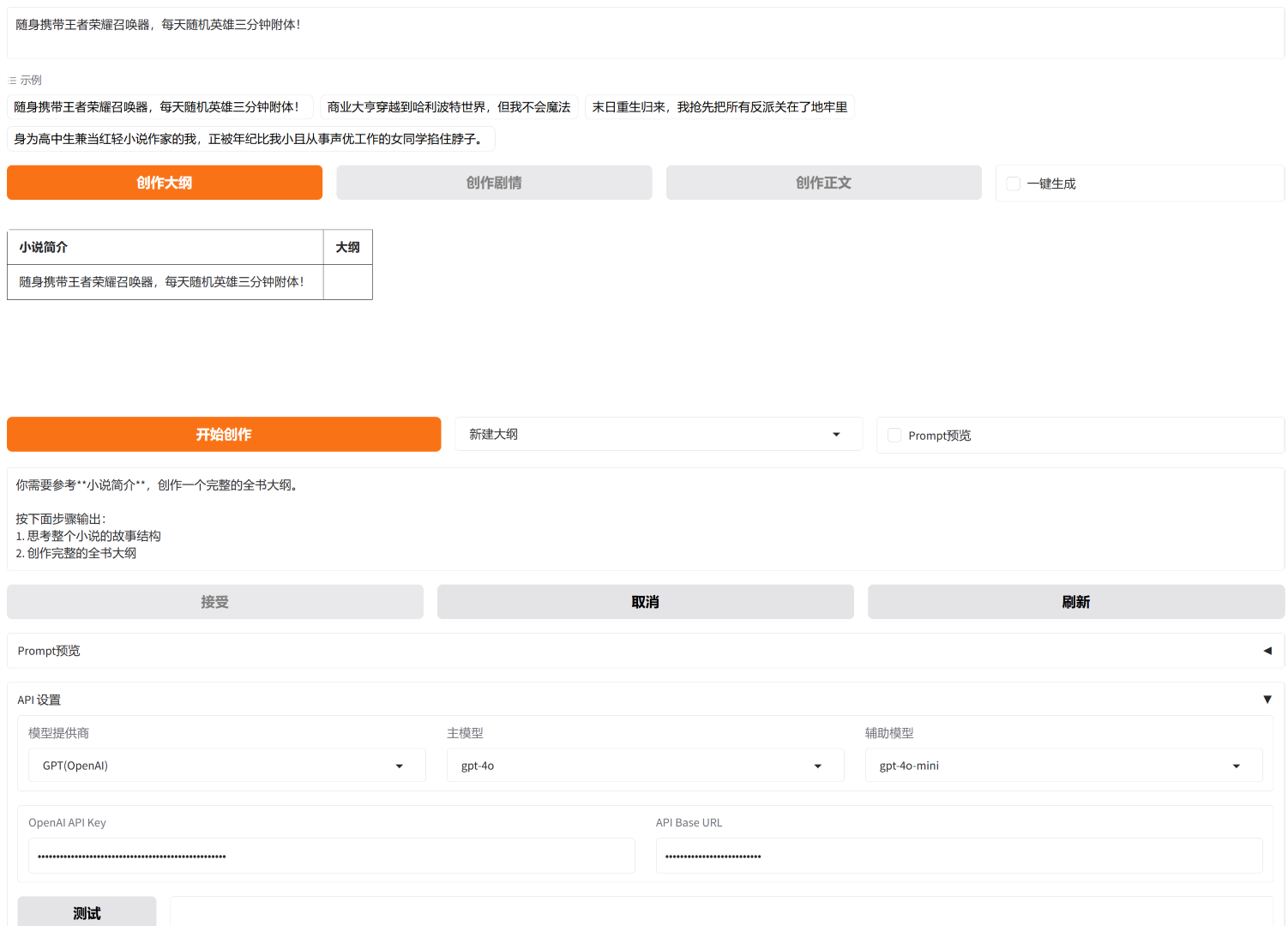

The demo supports multiple large language models (the picture shows the API settings interface)

An online demo is provided that can generate an entire book directly from a one-sentence idea.

Design a more attractive and practical editing interface (completed)

Support the Wenxin Novel model (completed)

Support the Doubao models (completed)

Generate a complete novel directly from an idea with one click (in progress)

Support generating outlines and chapters (in progress)

Long-Novel-GPT 1.5 and earlier versions provided a complete novel generation app, but the user experience was imperfect. Starting with version 1.6, we focus more on user experience: the interface has been rewritten and the project files have been moved to the core directory. The previous demo is no longer supported. If you want to try it, check out an earlier commit.

| Prompt | Description |
|---|---|
| Tiancan Tudou style | Creates the main text from the outline, imitating the writing style of Tiancan Tudou |
| Polish the draft | Polishes and improves the first draft of your web novel |

Submit your prompt

No installation required, experience our online demo now: Long-Novel-GPT Demo

Multi-threaded parallel creation (the picture shows the main text being created)

Supports viewing the prompt (the picture shows a response from the o1-preview model)

If you wish to run Long-Novel-GPT locally:

    conda create -n lngpt python
    conda activate lngpt
    pip install -r requirements.txt

    cd Long-Novel-GPT
    python core/frontend.py

Once it has started, open this link in your browser: http://localhost:7860/

Yes. Long-Novel-GPT 1.9 generates with multiple threads and manages context automatically to keep the generated plot coherent. In version 1.7, you need to deploy it locally, use your own API key, and set the maximum number of threads used during generation in config.py.

MAX_THREAD_NUM = 5 # Maximum number of threads used during generation
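As a rough illustration of what a thread cap like this does, the sketch below runs several generation calls in parallel while never exceeding `MAX_THREAD_NUM` worker threads. It uses Python's standard `concurrent.futures` module; `generate_chunk` and `generate_all` are hypothetical names, and `generate_chunk` is a stand-in for a real model API call. The project's own threading code may look quite different.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_THREAD_NUM = 5  # Maximum number of threads used during generation


def generate_chunk(prompt: str) -> str:
    """Hypothetical stand-in for one model API call."""
    return f"[generated from: {prompt}]"


def generate_all(prompts: list[str]) -> list[str]:
    """Run the calls in parallel with at most MAX_THREAD_NUM worker threads."""
    with ThreadPoolExecutor(max_workers=MAX_THREAD_NUM) as pool:
        # map() yields results in the same order as the input prompts,
        # so chapters stay in sequence even if they finish out of order.
        return list(pool.map(generate_chunk, prompts))


results = generate_all([f"chapter {i}" for i in range(1, 13)])
print(len(results))
```

With a cap of 5, twelve prompts are still all processed, but only five are in flight at any moment, so raising the cap mainly shortens the wall-clock time.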

This is not possible in the online demo, because its maximum thread count is 5.

First, deploy the project locally, configure your API key, and lift the thread limit.

Next, in the outline creation stage, generate about 40 lines of plot at roughly 50 words per line, for about 2,000 words in total. (Click repeatedly to expand the entire outline.)

Then, in the plot creation stage, expand the outline from 2,000 words to 20,000 words. (10+ threads in parallel)

Finally, in the text creation stage, expand the 20,000 words to 100,000 words. (50+ threads in parallel)
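The arithmetic behind these stages can be checked with a few lines of Python. The word counts and thread counts are taken from the steps above (using the lower bounds 10 and 50 for "10+" and "50+"); the variable names are made up for this sketch.

```python
OUTLINE_LINES = 40
WORDS_PER_LINE = 50

# Outline stage: 40 lines of about 50 words each.
outline_words = OUTLINE_LINES * WORDS_PER_LINE

# (stage name, target word count, number of parallel threads)
stages = [("plot", 20_000, 10), ("text", 100_000, 50)]

summary = []
previous_words = outline_words
for name, target_words, threads in stages:
    expansion_factor = target_words // previous_words
    words_per_thread = target_words // threads
    summary.append((name, expansion_factor, words_per_thread))
    previous_words = target_words

for name, factor, per_thread in summary:
    print(f"{name}: x{factor} expansion, about {per_thread} words per thread")
```

Under these numbers the plot stage is a tenfold expansion and the text stage a fivefold one, and in both stages each thread produces at most about 2,000 words.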

Version 1.7 was the first version to support generating novels at the million-word scale. It focuses on multi-threaded processing and generation window management, and provides a complete interface.

Version 1.9 substantially improves the prompts: users can choose among three built-in types (New, Expanded, and Polished) or enter their own prompt.

Overall, version 1.9 can, under user supervision, generate web novels that meet the threshold for signing a contract.

Our ultimate goal remains generating an entire book with one click, which will be officially launched after 2-3 more version iterations.

Currently, the demo supports GPT, Claude, Wenxin, Doubao, GLM, and other models, and the API key is already configured. The default model is GPT4o, and the maximum number of threads is 5.

You can select any idea from the examples and click Create Outline to initialize the outline.

After initialization, click the Start Creation button to keep refining the outline until you are satisfied.

After creating the outline, click the Create Plot button and then repeat the process above.

After selecting one-click generation, click the button on the left again to start generating.

If you run into a problem you cannot resolve, click the refresh button.

If the problem persists, refresh the browser page. This discards all data, so manually back up important text first.

We welcome all forms of contributions, whether suggestions for new features, code improvements, or bug reports. Please contact us via GitHub issues or pull requests.

You can also join the group chat to discuss the project.