
CodeFuse-ChatBot: Development by Private Knowledge Augmentation

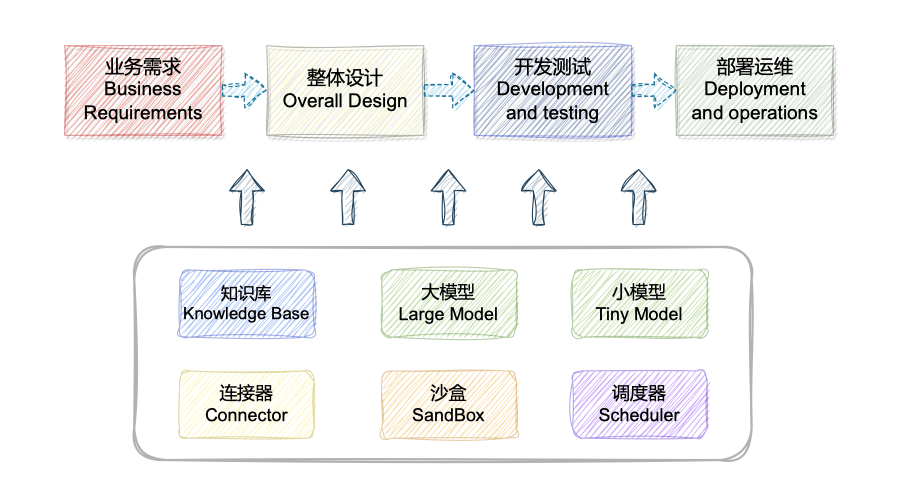

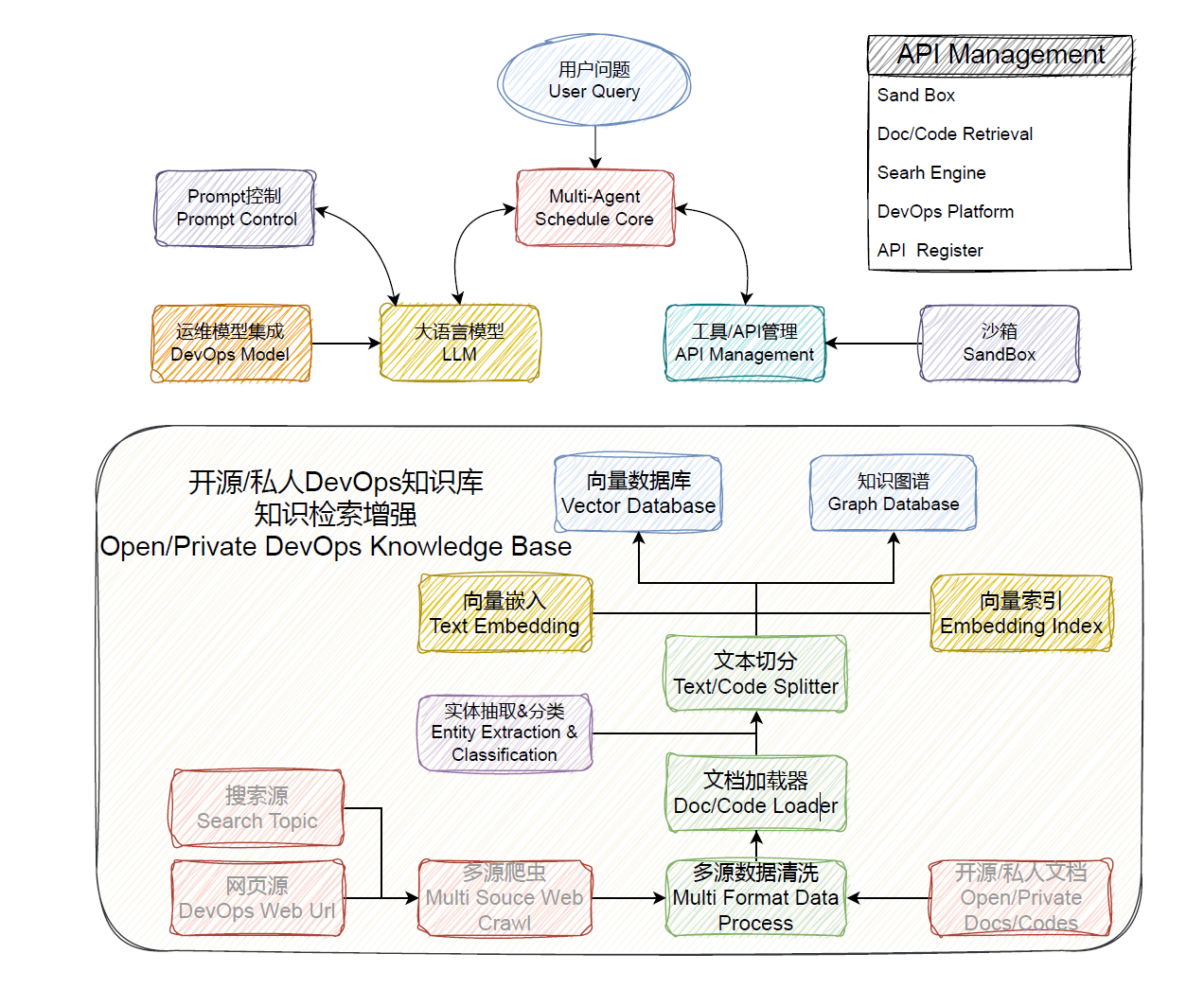

CodeFuse-ChatBot is an open source AI assistant developed by the Ant CodeFuse team, dedicated to simplifying and optimizing every stage of the software development life cycle. The project combines a Multi-Agent collaborative scheduling mechanism with a rich tool library, code library, knowledge base, and sandbox environment, enabling the LLM to execute and handle complex tasks in the DevOps field effectively.

This project aims to build an AI assistant for the full software development life cycle through Retrieval Augmented Generation (RAG), Tool Learning, and a sandbox environment, covering design, coding, testing, deployment, and operation and maintenance. The goal is to move gradually from the traditional development and operation model, in which people query data everywhere and operate independent, decentralized platforms, to an intelligent model based on large-model question answering, changing people's development and operation habits.
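As a rough illustration of the Retrieval Augmented Generation idea mentioned above, the sketch below retrieves the most relevant passage from a small knowledge base and places it in the prompt that would be sent to an LLM. It is a toy example and not the project's implementation: the function names (`tokenize`, `retrieve`, `build_prompt`), the sample knowledge base, and the word-overlap scoring (a stand-in for embedding search) are all assumptions made for illustration.

```python
# Toy RAG flow: retrieve relevant text, then build a grounded prompt.
# All names and data here are hypothetical, not CodeFuse-ChatBot's actual code.
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words representation of a text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query.

    Word overlap stands in for the embedding similarity search
    that a real RAG system would use.
    """
    query_tokens = tokenize(query)
    scored = [
        (sum((tokenize(doc) & query_tokens).values()), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine the retrieved passages and the question into one prompt."""
    context_block = (
        "\n".join(f"- {passage}" for passage in context)
        or "- (no relevant passage found)"
    )
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


knowledge_base = [
    "To restart the payment service, run the deploy pipeline with the restart flag.",
    "Disk usage alerts fire when a volume exceeds 90 percent capacity.",
]
query = "How do I restart the payment service?"
prompt = build_prompt(query, retrieve(query, knowledge_base))
print(prompt)
```

In a real deployment the prompt would be passed to the configured LLM, and the knowledge base would be the private documents indexed by the project.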

The core differentiating technologies and functional points of this project are:

Relying on open source LLM and Embedding models, this project supports offline private deployment. It also supports OpenAI API calls. Access Demo

The core R&D team has long focused on research in the field of AIOps + NLP. We launched the Codefuse-ai project and hope that everyone will contribute high-quality development and operation and maintenance documents to jointly improve this solution and achieve the goal of "making development easy in the world."

To help you understand the functions and usage of Codefuse-ChatBot more intuitively, we have recorded a series of demonstration videos. Watching them is a quick way to learn the main features and operating procedures of this project.

For specific implementation details, see: Technical route details

To follow the project plan, see: Projects

If you need to integrate a specific model, please let us know your needs by submitting an issue.

| model_name | model_size | gpu_memory | quantization | HFhub | ModelScope |
|---|---|---|---|---|---|
| chatgpt | - | - | - | - | - |
| codellama-34b-int4 | 34b | 20g | int4 | coming soon | link |
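As a back-of-the-envelope check on the `gpu_memory` column, the sketch below estimates the memory taken by model weights alone from the parameter count and the bits per weight. The helper name `estimate_weight_memory_gb` is hypothetical, and the source does not say how the 20g figure was derived; reading it as weights plus runtime overhead (activations, KV cache) is an assumption.

```python
# Rough estimate of memory used by model weights only.
# Assumption: real GPU usage is higher because of activations and KV cache.
def estimate_weight_memory_gb(num_parameters: float, bits_per_weight: int) -> float:
    """Approximate size of the weights in decimal gigabytes."""
    total_bytes = num_parameters * bits_per_weight / 8
    return total_bytes / 1e9


int4_gb = estimate_weight_memory_gb(34e9, 4)    # 34b parameters at int4
fp16_gb = estimate_weight_memory_gb(34e9, 16)   # same model at 16-bit precision
print(f"int4 weights: {int4_gb:.1f} GB")  # prints "int4 weights: 17.0 GB"
print(f"fp16 weights: {fp16_gb:.1f} GB")  # prints "fp16 weights: 68.0 GB"
```

Under these assumptions, a 34b model quantized to int4 needs about 17 GB for its weights, which is in line with the 20g listed in the table once some headroom is added.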

For complete documentation, see: CodeFuse-muAgent

```bash
pip install codefuse-muagent
```

Please install the NVIDIA driver yourself. This project has been tested with Python 3.9.18 and CUDA 11.7, on Windows and on x86 macOS systems.
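Before installing, it can help to confirm that the local interpreter matches the tested configuration. The following is a minimal sketch using only the standard library; the helper names are hypothetical, and comparing only the major and minor version against 3.9 is an assumption about what counts as a match.

```python
# Minimal environment check against the tested Python version.
# Helper names are hypothetical; only the standard library is used.
import platform
import sys

TESTED_PYTHON = (3, 9)  # the project reports testing with Python 3.9.18


def matches_tested_python(version_info=sys.version_info) -> bool:
    """Return True if the major.minor version equals the tested one."""
    return tuple(version_info[:2]) == TESTED_PYTHON


def describe_environment() -> str:
    """Summarize interpreter version, operating system, and CPU architecture."""
    return (
        f"Python {platform.python_version()} "
        f"on {platform.system()} ({platform.machine()})"
    )


print(describe_environment())
if not matches_tested_python():
    print("Note: this interpreter differs from the tested Python 3.9 series.")
```

A mismatch does not necessarily mean the project will fail; it only signals that the setup is outside the tested configuration.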

For Docker installation, private LLM access, and related startup issues, see: Quick usage details

For Apple Silicon, you may need to run `brew install qpdf` first.

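1. Prepare the Python environment

```bash
# Prepare the conda environment
conda create --name devopsgpt python=3.9
conda activate devopsgpt

cd codefuse-chatbot
# With python=3.9, the latest notebook works; with python=3.8, use notebook=6.5.6
pip install -r requirements.txt
```

2. Start the service

```bash
# Once server_config.py is configured, everything can be started with one command
cd examples
bash start.sh
# Configure the settings on the page, then turn on `启动对话服务` (Start dialogue service)
```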

Alternatively, start the old version through `start.py`. For more LLM access methods, see more details...

Thank you very much for your interest in the Codefuse project. We welcome your suggestions, opinions (including criticism), comments, and contributions.

Suggestions, opinions, and comments about Codefuse can be submitted directly through Issues on GitHub.

There are many ways to participate in and contribute to the Codefuse project: code implementation, test writing, process tool improvements, documentation improvements, and more. All contributions are welcome, and contributors are added to the contributors list. See Contribution Guide for details...

This project is based on langchain-chatchat and codebox-api, and we are deeply grateful for their open source contributions!