
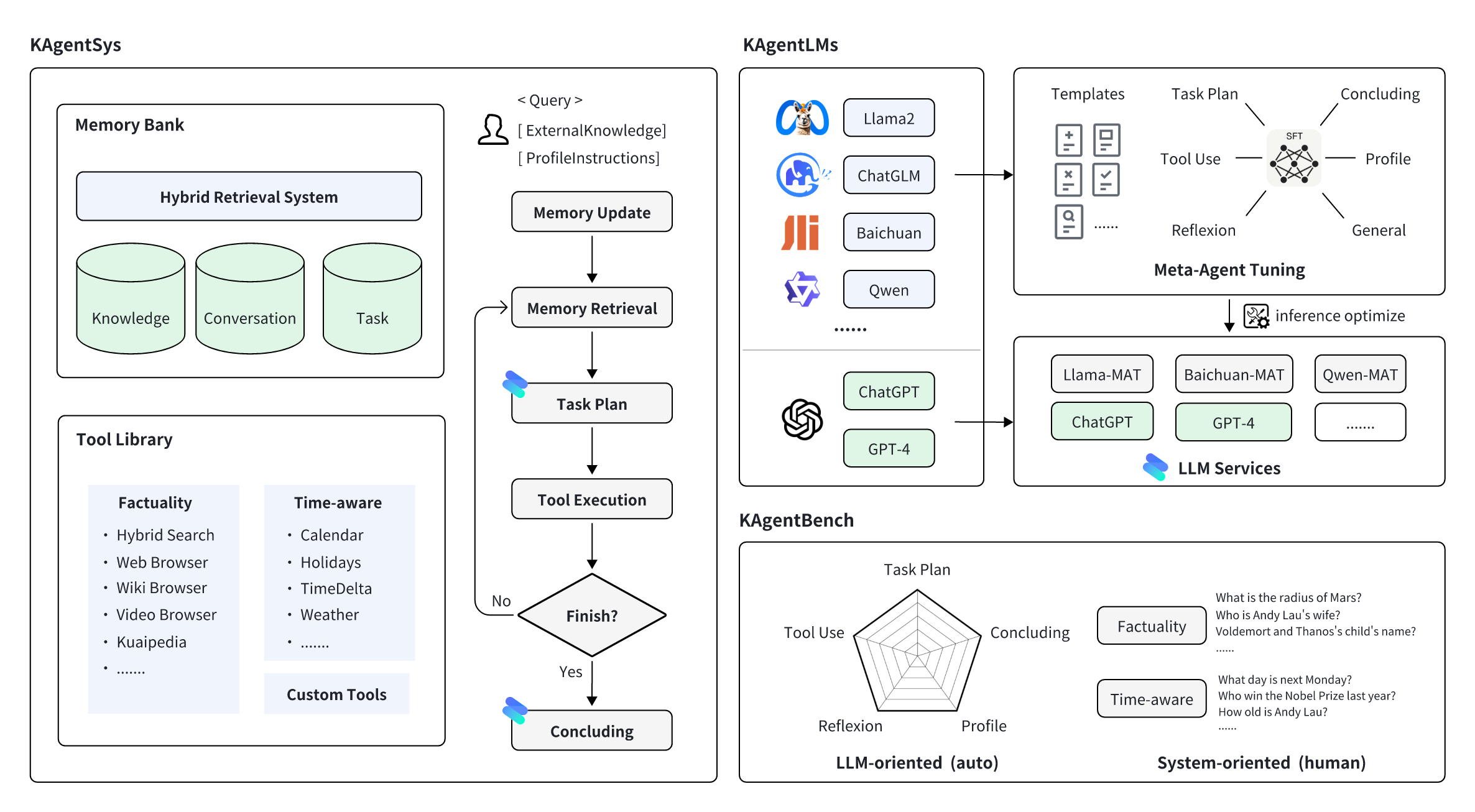

KwaiAgents is a series of agent-related works open-sourced by KwaiKEG at Kuaishou Technology. The open-sourced content includes:

| Type | Models | Training Data | Benchmark Data |
|---|---|---|---|
| Qwen | Qwen-7B-MAT, Qwen-14B-MAT, Qwen-7B-MAT-cpp, Qwen1.5-14B-MAT | KAgentInstruct | KAgentBench |
| Baichuan | Baichuan2-13B-MAT | KAgentInstruct | KAgentBench |

| Model | Scale | Planning | Tool-use | Reflection | Concluding | Profile | Overall Score |
|---|---|---|---|---|---|---|---|
| GPT-3.5-turbo | - | 18.55 | 26.26 | 8.06 | 37.26 | 35.42 | 25.63 |
| Llama2 | 13B | 0.15 | 0.44 | 0.14 | 16.60 | 17.73 | 5.30 |
| ChatGLM3 | 6B | 7.87 | 11.84 | 7.52 | 30.01 | 30.14 | 15.88 |
| Qwen | 7B | 13.34 | 18.00 | 7.91 | 36.24 | 34.99 | 21.17 |
| Baichuan2 | 13B | 6.70 | 16.10 | 6.76 | 24.97 | 19.08 | 14.89 |
| ToolLlama | 7B | 0.20 | 4.83 | 1.06 | 15.62 | 10.66 | 6.04 |
| AgentLM | 13B | 0.17 | 0.15 | 0.05 | 16.30 | 15.22 | 4.88 |
| Qwen-MAT | 7B | 31.64 | 43.30 | 33.34 | 44.85 | 44.78 | 39.85 |
| Baichuan2-MAT | 13B | 37.27 | 52.97 | 37.00 | 48.01 | 41.83 | 45.34 |
| Qwen-MAT | 14B | 43.17 | 63.78 | 32.14 | 45.47 | 45.22 | 49.94 |
| Qwen1.5-MAT | 14B | 42.42 | 64.62 | 30.58 | 46.51 | 45.95 | 50.18 |

| Model | Scale | NoAgent | ReACT | Auto-GPT | KAgentSys |
|---|---|---|---|---|---|
| GPT-4 | - | 57.21% (3.42) | 68.66% (3.88) | 79.60% (4.27) | 83.58% (4.47) |
| GPT-3.5-turbo | - | 47.26% (3.08) | 54.23% (3.33) | 61.74% (3.53) | 64.18% (3.69) |
| Qwen | 7B | 52.74% (3.23) | 51.74% (3.20) | 50.25% (3.11) | 54.23% (3.27) |
| Baichuan2 | 13B | 54.23% (3.31) | 55.72% (3.36) | 57.21% (3.37) | 58.71% (3.54) |
| Qwen-MAT | 7B | - | 58.71% (3.53) | 65.67% (3.77) | 67.66% (3.87) |
| Baichuan2-MAT | 13B | - | 61.19% (3.60) | 66.67% (3.86) | 74.13% (4.11) |

First install Miniconda, then create and activate the build environment:

    conda create -n kagent python=3.10
    conda activate kagent
    pip install -r requirements.txt

We recommend using vLLM and FastChat to deploy the model inference service. First, install the corresponding packages (for detailed usage, refer to the documentation of the two projects):

    pip install vllm
    pip install "fschat[model_worker,webui]"

A second install variant pins vLLM and transformers to specific versions:

    pip install "fschat[model_worker,webui]"
    pip install vllm==0.2.0
    pip install transformers==4.33.2

To deploy KAgentLMs, first start the controller in one terminal:

    python -m fastchat.serve.controller

Second, in another terminal, start the single-GPU inference service:

    python -m fastchat.serve.vllm_worker --model-path $model_path --trust-remote-code

Here, `$model_path` is the local path of the downloaded model. If the GPU does not support bfloat16, add `--dtype half` to the command line.
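The bfloat16 fallback described above can be automated. The following is a minimal sketch, not part of KwaiAgents: the helper names `bf16_supported` and `build_worker_command` are hypothetical, and the detection relies on PyTorch's `torch.cuda.is_bf16_supported()`, falling back to `--dtype half` when PyTorch or CUDA is unavailable.

```python
import shlex


def bf16_supported() -> bool:
    """Best-effort check; returns False when PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return False
    return torch.cuda.is_available() and torch.cuda.is_bf16_supported()


def build_worker_command(model_path: str, use_bf16: bool) -> list[str]:
    """Assemble the vllm_worker command using only the flags shown above."""
    cmd = [
        "python", "-m", "fastchat.serve.vllm_worker",
        "--model-path", model_path,
        "--trust-remote-code",
    ]
    if not use_bf16:
        # GPU without bfloat16 support: request half precision instead.
        cmd += ["--dtype", "half"]
    return cmd


if __name__ == "__main__":
    # "/path/to/model" is a placeholder for the downloaded model directory.
    print(shlex.join(build_worker_command("/path/to/model", bf16_supported())))
```

The printed command can be pasted into the second terminal, or the list can be passed to `subprocess.run`.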

Third, start the REST API server in a third terminal:

    python -m fastchat.serve.openai_api_server --host localhost --port 8888

Finally, you can invoke the model with curl, using the same request format as the OpenAI API. For example:

    curl http://localhost:8888/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d '{"model": "kagentlms_qwen_7b_mat", "messages": [{"role": "user", "content": "Who is Andy Lau"}]}'

Replace `kagentlms_qwen_7b_mat` with the name of the model you deployed.
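The same request can be sent from Python. This is a minimal sketch using only the standard library; the helper names `build_request` and `chat` are hypothetical, the URL assumes the host and port from the server command above, and the response parsing assumes the usual OpenAI chat-completions shape (`choices[0].message.content`).

```python
import json
import urllib.request

# Host and port match the openai_api_server command above.
API_URL = "http://localhost:8888/v1/chat/completions"


def build_request(model: str, content: str, url: str = API_URL) -> urllib.request.Request:
    """Build the same POST request that the curl example sends."""
    payload = {"model": model, "messages": [{"role": "user", "content": content}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, content: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(model, content)) as resp:
        body = json.load(resp)
    # Assumes the OpenAI chat-completions response layout.
    return body["choices"][0]["message"]["content"]


# Usage, once the service is running:
#   print(chat("kagentlms_qwen_7b_mat", "Who is Andy Lau"))
```

Keeping request construction separate from the network call makes the payload easy to inspect before anything is sent.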

llama-cpp-python offers a web server which aims to act as a drop-in replacement for the OpenAI API. This allows you to use llama.cpp compatible models with any OpenAI compatible client (language libraries, services, etc). The converted model can be found in kwaikeg/kagentlms_qwen_7b_mat_gguf.

To install the server package and get started:

    pip install "llama-cpp-python[server]"
    python3 -m llama_cpp.server --model kagentlms_qwen_7b_mat_gguf/ggml-model-q4_0.gguf --chat_format chatml --port 8888

Finally, you can invoke the model with curl, using the same request format as the OpenAI API. For example:

    curl http://localhost:8888/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d '{"messages": [{"role": "user", "content": "Who is Andy Lau"}]}'

Download and install KwaiAgents (Python>=3.10 is recommended):

    git clone git@github.com:KwaiKEG/KwaiAgents.git
    cd KwaiAgents
    python setup.py develop

Then declare the environment variables:

    export OPENAI_API_KEY=sk-xxxxx
    export WEATHER_API_KEY=xxxxxx

`WEATHER_API_KEY` is optional, but it must be set to answer weather-related questions. You can obtain the API key from this website (the same applies to local model usage).

Then run a query:

    kagentsys --query="Who is Andy Lau's wife?" --llm_name="gpt-3.5-turbo" --lang="en"

To use a local model, first deploy the corresponding model service as described in the previous section, then run:

    kagentsys --query="Who is Andy Lau's wife?" --llm_name="kagentlms_qwen_7b_mat" \
    --use_local_llm --local_llm_host="localhost" --local_llm_port=8888 --lang="en"

Full command arguments:

    options:
      -h, --help            show this help message and exit
      --id ID               ID of this conversation
      --query QUERY         User query
      --history HISTORY     History of conversation
      --llm_name LLM_NAME   the name of llm
      --use_local_llm       Whether to use local llm
      --local_llm_host LOCAL_LLM_HOST
                            The host of local llm service
      --local_llm_port LOCAL_LLM_PORT
                            The port of local llm service
      --tool_names TOOL_NAMES
                            the name of llm
      --max_iter_num MAX_ITER_NUM
                            the number of iteration of agents
      --agent_name AGENT_NAME
                            The agent name
      --agent_bio AGENT_BIO
                            The agent bio, a short description
      --agent_instructions AGENT_INSTRUCTIONS
                            The instructions of how agent thinking, acting, or talking
      --external_knowledge EXTERNAL_KNOWLEDGE
                            The link of external knowledge
      --lang {en,zh}        The language of the overall system
      --max_tokens_num      Maximum length of model input

Note: to use the `browse_website` tool, you need to configure chromedriver on your server. Depending on your network, you may also need to set `http_proxy`.

Custom tool usage is shown in `examples/custom_tool_example.py`.
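When `kagentsys` is driven from a script, the local-model invocation can be assembled as an argument list. This is a minimal sketch under stated assumptions: the helper `build_kagentsys_command` is hypothetical and not part of KwaiAgents, and it uses only flags that appear in the argument list above.

```python
import shlex


def build_kagentsys_command(query, llm_name, lang="en", local_host=None, local_port=None):
    """Assemble a kagentsys argument list from flags documented above."""
    cmd = ["kagentsys", f"--query={query}", f"--llm_name={llm_name}", f"--lang={lang}"]
    if local_host is not None and local_port is not None:
        # Route the request to a locally deployed model service.
        cmd += [
            "--use_local_llm",
            f"--local_llm_host={local_host}",
            f"--local_llm_port={local_port}",
        ]
    return cmd


if __name__ == "__main__":
    cmd = build_kagentsys_command(
        "Who is Andy Lau's wife?", "kagentlms_qwen_7b_mat",
        local_host="localhost", local_port=8888,
    )
    # shlex.join quotes the query so the line is safe to paste into a shell.
    print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` avoids shell quoting issues with the apostrophe in the query.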

Evaluating agent capabilities takes only two commands, run from the `benchmark` directory:

    cd benchmark
    python infer_qwen.py qwen_benchmark_res.jsonl
    python benchmark_eval.py ./benchmark_eval.jsonl ./qwen_benchmark_res.jsonl

These commands print results such as:

    plan : 31.64, tooluse : 43.30, reflextion : 33.34, conclusion : 44.85, profile : 44.78, overall : 39.85

Please refer to benchmark/ for more details.
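To compare runs programmatically, the printed result line can be parsed into a dictionary. This is a minimal sketch; the helper `parse_scores` is hypothetical and assumes the output keeps the exact `name : value, ...` layout shown above, including the `reflextion` key as printed.

```python
def parse_scores(line: str) -> dict[str, float]:
    """Parse 'name : value, name : value, ...' into a name -> score mapping."""
    scores = {}
    for item in line.split(","):
        name, value = item.split(":")
        scores[name.strip()] = float(value)
    return scores


# Result line copied from the example output above.
line = (
    "plan : 31.64, tooluse : 43.30, reflextion : 33.34, "
    "conclusion : 44.85, profile : 44.78, overall : 39.85"
)
scores = parse_scores(line)
print(scores["overall"])  # prints 39.85
```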

    @article{pan2023kwaiagents,
      author  = {Haojie Pan and
                 Zepeng Zhai and
                 Hao Yuan and
                 Yaojia Lv and
                 Ruiji Fu and
                 Ming Liu and
                 Zhongyuan Wang and
                 Bing Qin},
      title   = {KwaiAgents: Generalized Information-seeking Agent System with Large Language Models},
      journal = {CoRR},
      volume  = {abs/2312.04889},
      year    = {2023}
    }