Koushik Srivatsan

Fahad Shamshad

Muzammal Naseer

Karthik Nandakumar

Mohamed bin Zayed University of Artificial Intelligence (MBZUAI), UAE.

The rapid proliferation of large-scale text-to-image generation (T2IG) models has led to concerns about their potential misuse in generating harmful content. Though many methods have been proposed for erasing undesired concepts from T2IG models, they only provide a false sense of security, as recent works demonstrate that concept-erased models (CEMs) can be easily deceived into generating the erased concept through adversarial attacks. The problem of adversarially robust concept erasing without significant degradation to model utility (the ability to generate benign concepts) remains an unresolved challenge, especially in the white-box setting where the adversary has access to the CEM. To address this gap, we propose an approach called STEREO that involves two distinct stages. The first stage Searches Thoroughly Enough (STE) for strong and diverse adversarial prompts that can regenerate an erased concept from a CEM, by leveraging robust optimization principles from adversarial training. In the second stage, Robustly Erase Once (REO), we introduce an anchor-concept-based compositional objective to robustly erase the target concept in one go, while attempting to minimize the degradation of model utility. By benchmarking the proposed STEREO approach against four state-of-the-art concept erasure methods under three adversarial attacks, we demonstrate its ability to achieve a better robustness vs. utility trade-off.

Large-scale diffusion models for text-to-image generation are susceptible to adversarial attacks that can regenerate harmful concepts despite erasure efforts. We introduce STEREO, a robust approach designed to prevent this regeneration while preserving the model's ability to generate benign content.

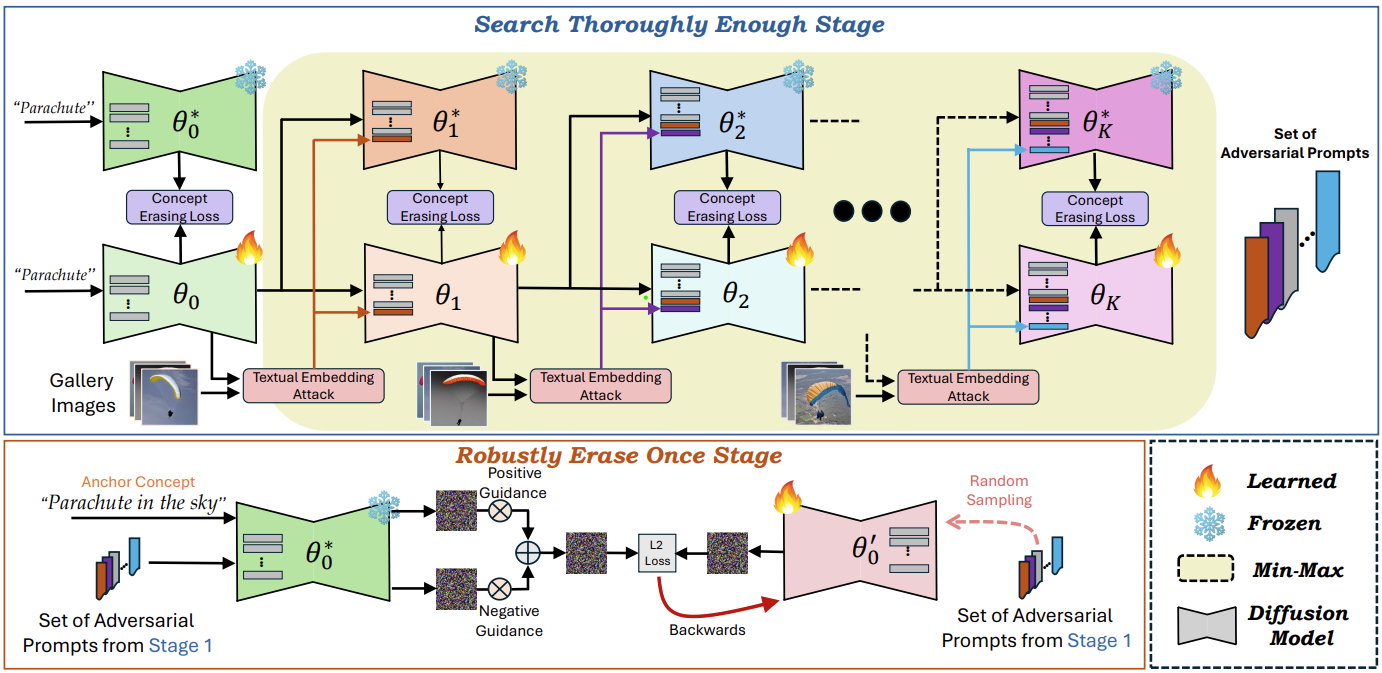

Overview of STEREO. We propose a novel two-stage framework for adversarially robust concept erasing from pre-trained text-to-image generation models without significantly affecting the utility for benign concepts.

Stage 1 (top): Search Thoroughly Enough (STE) follows the robust optimization framework of adversarial training (AT) and formulates concept erasing as a min-max optimization problem, to discover strong adversarial prompts that can regenerate target concepts from erased models. Note that the core novelty of our approach is that we employ AT not as a final solution, but only as an intermediate step to search thoroughly enough for strong adversarial prompts.

Stage 2 (bottom): Robustly Erase Once (REO) fine-tunes the model using an anchor concept and the set of strong adversarial prompts from Stage 1 via a compositional objective, maintaining high-fidelity generation of benign concepts while robustly erasing the target concept.
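As a rough aid to reading the figure, the min-max search in Stage 1 can be written schematically as below. This is only a sketch in assumed notation, not the exact objective from the paper: $\theta$ denotes the model parameters, $p$ an adversarial prompt (or prompt embedding), $\mathcal{R}(\theta, p)$ a hypothetical score for how strongly $p$ regenerates the target concept from the model, and $K$ an assumed number of search iterations.

```latex
% Schematic min-max view of Stage 1 (assumed notation, not the paper's exact loss)
\min_{\theta} \; \max_{p} \; \mathcal{R}(\theta, p)

% Solved by alternating, for i = 1, \dots, K:
\theta_i \leftarrow \arg\min_{\theta} \, \mathcal{R}(\theta, p_{i-1}),
\qquad
p_i \leftarrow \arg\max_{p} \, \mathcal{R}(\theta_i, p)

% Prompts collected for Stage 2:
\mathcal{P} = \{\, p_1, \dots, p_K \,\}
```

Under this reading, the intermediate models $\theta_i$ serve only to find the prompts; Stage 2 then erases the target concept once, using the anchor concept together with the collected set $\mathcal{P}$.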

If you find our work and this repository useful, please consider giving our repo a star and citing our paper as follows:

    @article{srivatsan2024stereo,
      title={STEREO: Towards Adversarially Robust Concept Erasing from Text-to-Image Generation Models},
      author={Srivatsan, Koushik and Shamshad, Fahad and Naseer, Muzammal and Nandakumar, Karthik},
      journal={arXiv preprint arXiv:2408.16807},
      year={2024}
    }

If you have any questions, please create an issue on this repository or contact us at [email protected].

Our code is built on top of the ESD repository. We thank the authors for releasing their code.