This project is a deep convolutional generative adversarial network that can create high quality images from a random seed like portraits, animals, drawings and more.

The model is a Generative Adversarial Network (GAN) like described in the paper Generative Adversarial Nets from Montreal University (2014)

The generator and the discriminator are both deep convolutional neural networks like in the paper Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks from Facebook AI Research (2015) but with a few improvements:

I added Equalized Learning Rate Layers from the paper Progressive Growing of GANs for Improved Quality, Stability, and Variation by Nvidia (2017)

I added Bilinear Upsampling / Downsampling from the paper Making Convolutional Networks Shift-Invariant Again by Adobe Research (2019)

I implemented Wavelet Transform from the paper SWAGAN: A Style-based Wavelet-driven Generative Model by Tel-Aviv University (2021)

I used a Style-Based Architecture with a Constant Input, Learned Styles from a Mapping Network and Noise Injection from the paper A Style-Based Generator Architecture for Generative Adversarial Networks by Nvidia (2018)

I added Skip Connections from the paper MSG-GAN: Multi-Scale Gradients for Generative Adversarial Networks by TomTom and Adobe (2019)

I added Residual Blocks from the paper Deep Residual Learning for Image Recognition by Microsoft Research (2015)

I added Minibatch Standard Deviation at the end of the discriminator from the paper Improved Techniques for Training GANs by OpenAI (2016)

I kept the original Non-Saturating Loss from the paper Generative Adversarial Nets by Montreal University (2014)

I added Path Length Regularization on the generator from the paper Analyzing and Improving the Image Quality of StyleGAN by Nvidia (2019)

I added Gradient Penalty Regularization on the discriminator from the paper Improved Training of Wasserstein GANs by Google Brain (2017)

I added Adaptive Discriminator Augmentation (ADA) from the paper Training Generative Adversarial Networks with Limited Data by Nvidia (2020) but the augmentation probability is not trained and has to be set manually (and some augmentations are disabled because of a missing PyTorch implementation)

I added the computation of the Fréchet Inception Distance (FID) during training from the paper GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium by University Linz (2017) using the pytorch-fid module

I added a Projector like in the paper Analyzing and Improving the Image Quality of StyleGAN by Nvidia (2019)

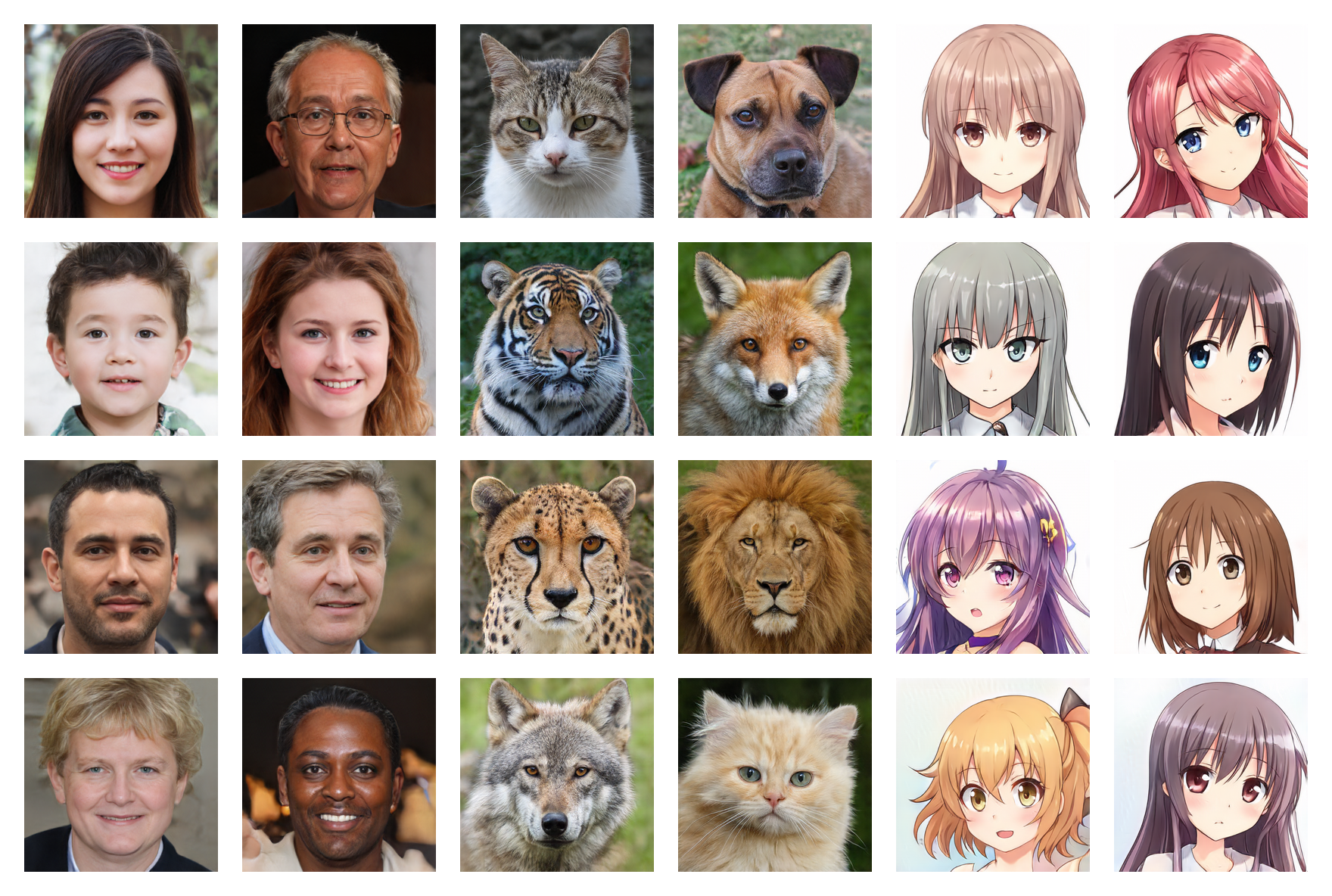

Human faces 256*256 (FID: 5.97)

Animal faces 256*256 (FID: 6.56)

Anime faces 256*256 (FID: 3.74)

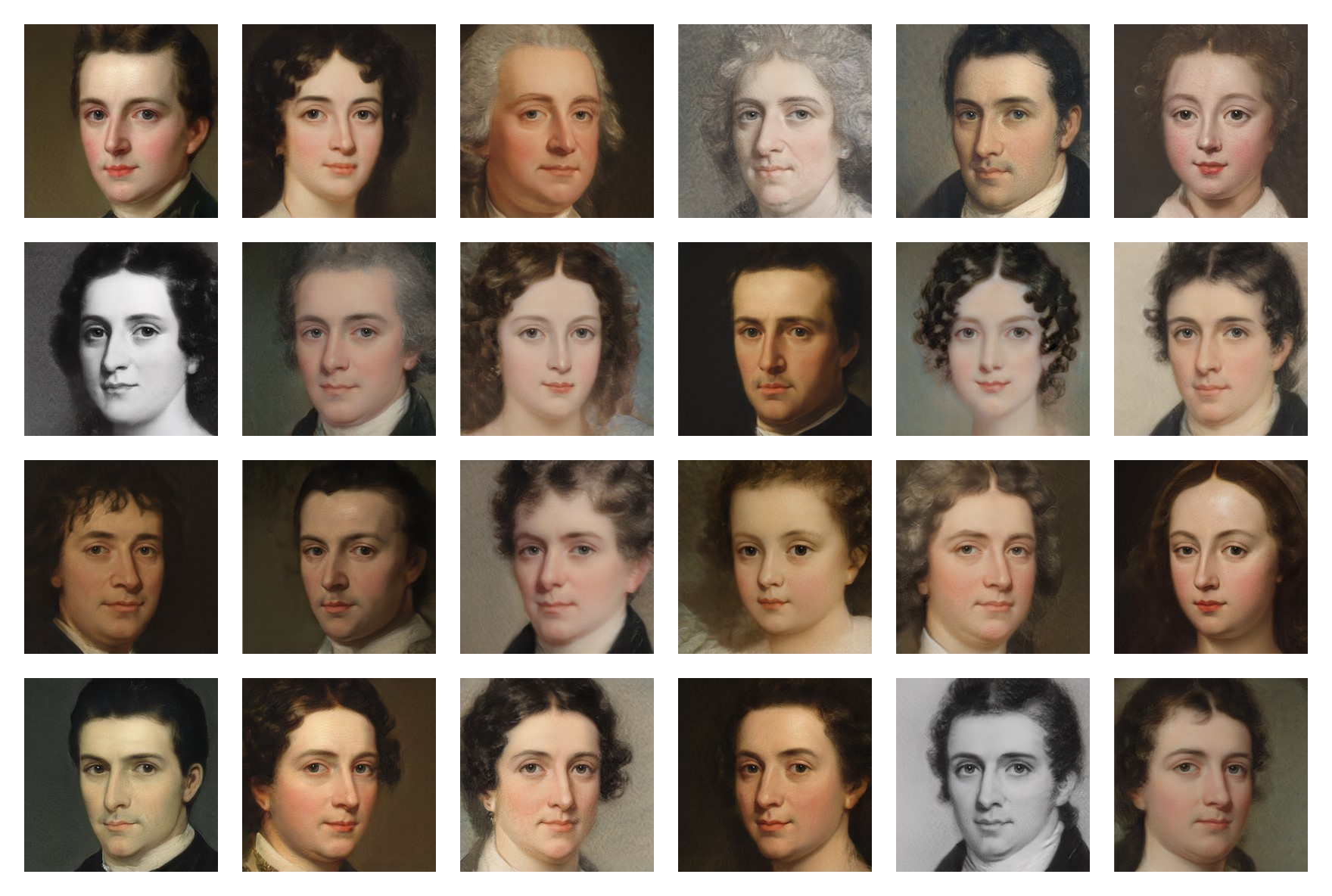

Painting faces 256*256 (FID: 20.32)

The trained weights on multiple datasets are available on Google Drive, you just need to download the .pt files and put them in the models folder.

Run the following command to install the dependencies:

$ pip install -r requirements.txt(You may need to use a specific command for PyTorch if you want to use CUDA)

First, you need to find and download a dataset of images (less than 5,000 may be too little and more than 150,000 is not necessary). You can find a lot of datasets on Kaggle and the ones I used on my Google Drive.

Then, in the training/settings.py file, specify the path to the dataset

If you don't have an overpriced 24GB GPU like me, the default settings may not work for you. You can try to:

Run the training.ipynb file (you can stop the training at any time and resume it later thanks to the checkpoints)

Run the testing.ipynb file to generate random images

Run the testing/interpolation.ipynb file to generate the images of a smooth interpolation video

Run the testing/projector.ipynb file to project real images into the latent space

Run the testing/style_mixing.ipynb file to generate the images of a style mixing interpolation video

Run the testing/timelapse.ipynb file to generate the images of a training timelapse video