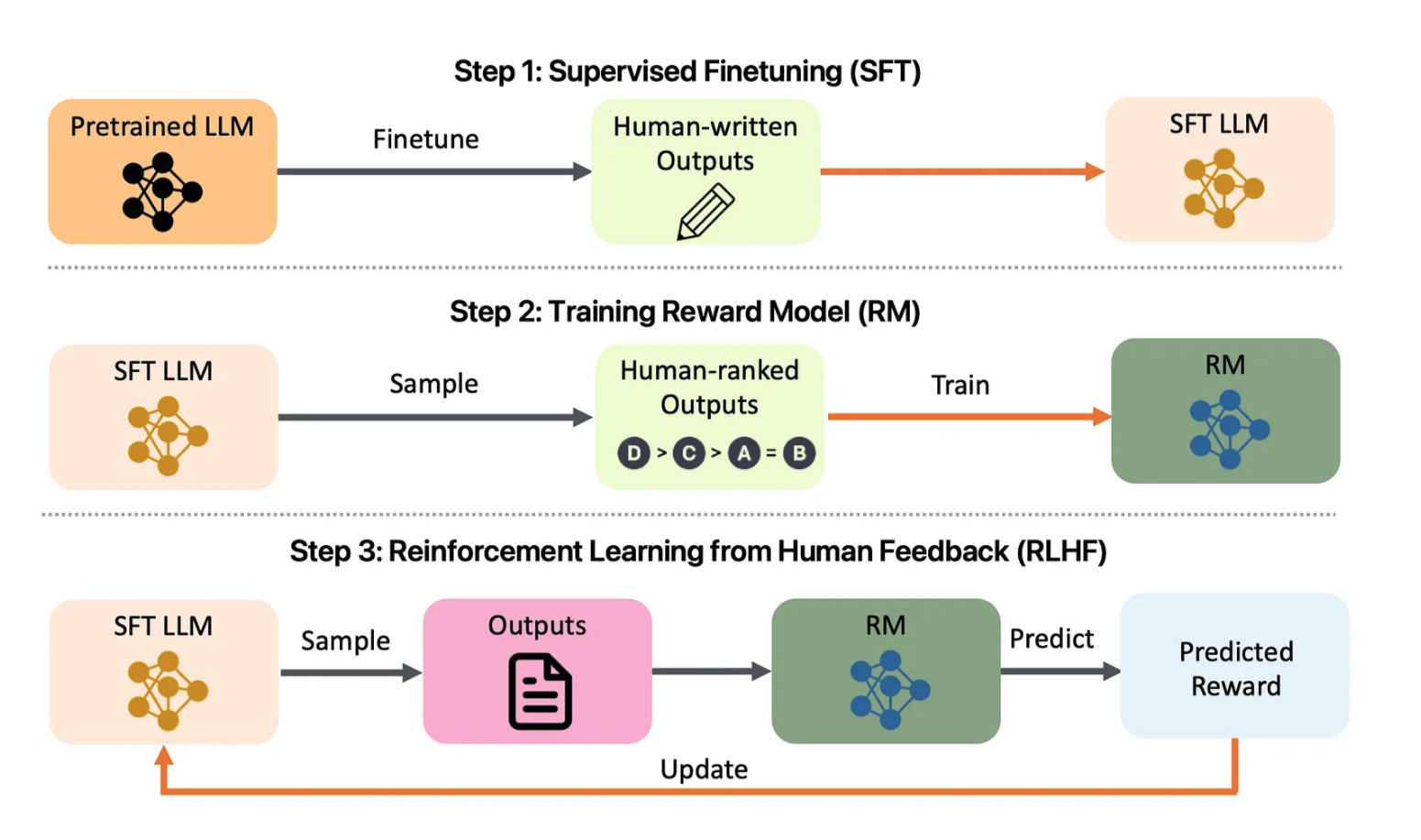

Figure 1: Overview of the LLM Alignment Project. For background, see arXiv:2308.05374.

LLM Alignment Template is not just a comprehensive tool for aligning large language models (LLMs). It is also a template for building your own LLM alignment application. It is inspired by project templates such as the PyTorch Project Template. The repository provides a full stack of functionality as a starting point that you can customize and extend for your own alignment needs. Researchers, developers, and data scientists can use it as a solid foundation for building and deploying LLMs aligned with human values and objectives.

LLM Alignment Template covers training, fine-tuning, deploying, and monitoring LLMs using Reinforcement Learning from Human Feedback (RLHF). It also integrates evaluation metrics to help ensure that language models are used ethically and effectively. A user-friendly interface lets you manage alignment, visualize training metrics, and deploy at scale.

The repository is organized as follows:

- `app/`: Contains API and UI code.
  - `auth.py`, `feedback.py`, `ui.py`: API endpoints for user interaction, feedback collection, and general interface management.
  - Static assets: JavaScript (`app.js`, `chart.js`), CSS (`styles.css`), and Swagger API documentation (`swagger.json`).
  - HTML templates (`chat.html`, `feedback.html`, `index.html`) for UI rendering.
- `src/`: Core logic and utilities for preprocessing and training.
  - `preprocessing/`:
    - `preprocess_data.py`: Combines original and augmented datasets and applies text cleaning.
    - `tokenization.py`: Handles tokenization.
  - `training/`:
    - `fine_tuning.py`, `transfer_learning.py`, `retrain_model.py`: Scripts for training and retraining models.
    - `rlhf.py`, `reward_model.py`: Scripts for reward model training using RLHF.
  - `utils/`: Common utilities (`config.py`, `logging.py`, `validation.py`).
- `dashboards/`: Performance and explainability dashboards for monitoring and model insights.
  - `performance_dashboard.py`: Displays training metrics, validation loss, and accuracy.
  - `explainability_dashboard.py`: Visualizes SHAP values to provide insight into model decisions.
- `tests/`: Unit, integration, and end-to-end tests.
  - `test_api.py`, `test_preprocessing.py`, `test_training.py`: Unit and integration tests.
  - `e2e/`: Cypress-based UI tests (`ui_tests.spec.js`).
  - `load_testing/`: Load tests using Locust (`locustfile.py`).
- `deployment/`: Configuration files for deployment and monitoring.
  - `kubernetes/`: Deployment and Ingress configurations for scaling and canary releases.
  - `monitoring/`: Prometheus (`prometheus.yml`) and Grafana (`grafana_dashboard.json`) configurations for performance and system health monitoring.

To install and run the application locally:

1. Clone the repository:

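   ```bash
   git clone https://github.com/yourusername/LLM-Alignment-Template.git
   cd LLM-Alignment-Template
   ```

2. Install dependencies:

   ```bash
   pip install -r requirements.txt
   cd app/static
   npm install
   cd ../..  # return to the repository root
   ```

3. Build the Docker images and start the containers:

   ```bash
   docker-compose up --build
   ```

4. Access the application at http://localhost:5000.

To deploy on Kubernetes:

- Apply the deployment and service configurations:

  ```bash
  kubectl apply -f deployment/kubernetes/deployment.yml
  kubectl apply -f deployment/kubernetes/service.yml
  ```

- Enable horizontal pod autoscaling:

  ```bash
  kubectl apply -f deployment/kubernetes/hpa.yml
  ```

- Use `deployment/kubernetes/canary_deployment.yml` to roll out new versions safely.
- Apply the Prometheus and Grafana configurations in `deployment/monitoring/` to enable monitoring dashboards.
- Use `docker-compose.logging.yml` for centralized logs.

The training module (`src/training/transfer_learning.py`) adapts pre-trained models such as BERT to custom tasks. This usually outperforms training from scratch, especially when labeled data is limited.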
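The toy sketch below illustrates the core idea of transfer learning without downloading a model. It uses a hypothetical "pre-trained" encoder: a small fixed word-vector table standing in for BERT. That encoder stays frozen, and only a new logistic-regression head is trained on its features. All names here (`PRETRAINED_VECTORS`, `encode`, `train_head`, `predict`) are illustrative assumptions, not code from `transfer_learning.py`.

```python
import math

# Hypothetical stand-in for a pre-trained encoder such as BERT:
# a fixed table of word vectors that is never updated during training.
PRETRAINED_VECTORS = {
    "good": [1.0, 0.2],
    "great": [0.9, 0.1],
    "bad": [-1.0, 0.3],
    "awful": [-0.8, 0.2],
    "movie": [0.0, 1.0],
}


def encode(text):
    """Frozen encoder: average the pre-trained vectors of the known words."""
    vecs = [PRETRAINED_VECTORS[w] for w in text.lower().split() if w in PRETRAINED_VECTORS]
    if not vecs:
        return [0.0, 0.0]
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]


def train_head(examples, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on the frozen features (SGD on log-loss)."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            grad = p - label  # derivative of the log-loss with respect to z
            weights = [w - lr * grad * xi for w, xi in zip(weights, x)]
            bias -= lr * grad
    return weights, bias


def predict(head, text):
    """Probability that `text` belongs to the positive class."""
    weights, bias = head
    z = sum(w * xi for w, xi in zip(weights, encode(text))) + bias
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    data = [("good movie", 1), ("great movie", 1), ("bad movie", 0), ("awful movie", 0)]
    head = train_head(data)
    print(predict(head, "good movie"), predict(head, "awful movie"))
```

With a real model, the same pattern applies: the encoder's weights are frozen (or fine-tuned with a small learning rate), and a task-specific head is trained on its outputs.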

The `data_augmentation.py` script (`src/data/`) applies augmentation techniques such as back-translation and paraphrasing to increase the size and diversity of the training data.
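Back-translation and model-based paraphrasing both need a translation or paraphrase model. The minimal sketch below therefore swaps in a much simpler technique, dictionary-based synonym substitution, to show the overall flow: clean the originals, generate augmented variants, then combine both sets and drop duplicates. This mirrors what `preprocess_data.py` is described as doing. The synonym table and all function names are hypothetical.

```python
import re

# Hypothetical synonym table. A real pipeline would use back-translation
# or a paraphrase model here instead of fixed word swaps.
SYNONYMS = {
    "quick": "fast",
    "happy": "glad",
    "answer": "reply",
}


def clean(text):
    """Lowercase, replace non-alphanumeric ASCII characters with spaces, collapse whitespace."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def augment(text):
    """Produce a simple paraphrase of already-cleaned text by swapping known synonyms."""
    return " ".join(SYNONYMS.get(word, word) for word in text.split())


def build_dataset(originals):
    """Combine cleaned originals with their augmented variants, dropping empties and duplicates."""
    cleaned = [clean(t) for t in originals]
    augmented = [augment(t) for t in cleaned]
    seen, combined = set(), []
    for text in cleaned + augmented:
        if text and text not in seen:
            seen.add(text)
            combined.append(text)
    return combined


if __name__ == "__main__":
    print(build_dataset(["Give a QUICK answer!", "Hello,   world"]))
```

Cleaning before augmenting keeps punctuation from blocking synonym lookups. Deduplication prevents unchanged "augmentations" from double-counting examples.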

- Use the `rlhf.py` and `reward_model.py` scripts to fine-tune models based on human feedback.
- Users submit feedback through the feedback interface (`feedback.html`), and the model is retrained with `retrain_model.py`.

The `explainability_dashboard.py` script uses SHAP values to help users understand why a model made specific predictions.
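SHAP values are Shapley values from cooperative game theory, applied to model features. For intuition, the sketch below computes them exactly by enumerating every feature coalition. Features absent from a coalition are filled in from a single baseline input. The `shap` library uses faster approximations. This function and the toy model are illustrative assumptions, not what `explainability_dashboard.py` does.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to a baseline input.

    Features outside a coalition take their baseline values.
    The cost grows as 2**n, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Use x for features in the coalition and the baseline elsewhere.
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Weighted marginal contribution of feature i to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(v):
    """Hypothetical model with two additive terms and one interaction term."""
    return 2 * v[0] + 3 * v[1] + v[0] * v[2]


if __name__ == "__main__":
    print(shapley_values(toy_model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]))
```

A useful sanity check is the efficiency property: the values always sum to `model(x) - model(baseline)`. Here, the interaction term `v[0] * v[2]` is split equally between features 0 and 2.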

- Unit and integration tests are located in `tests/` and cover the API, preprocessing, and training functionality.
- Load tests use Locust (`tests/load_testing/locustfile.py`) to verify stability under load.

Contributions are welcome! Please open issues or submit pull requests for improvements or new features.

This project is licensed under the MIT License. See the LICENSE file for more information.

Developed with ❤️ by Amirsina Torfi