? HF Repo • ⚒️ SwissArmyTransformer (sat) • ? Twitter

• ? [CogView@NeurIPS 21] [GitHub] • ? [GLM@ACL 22] [GitHub]

Join us on Slack and WeChat

[2023.10] Welcome to pay attention to CogVLM (https://github.com/THUDM/CogVLM), a new generation multi-modal dialogue model of Zhipu AI. It adopts the new architecture of visual experts and won the first place in 10 authoritative classic multi-modal tasks. . The current open source CogVLM-17B English model will be based on the GLM open source Chinese model.

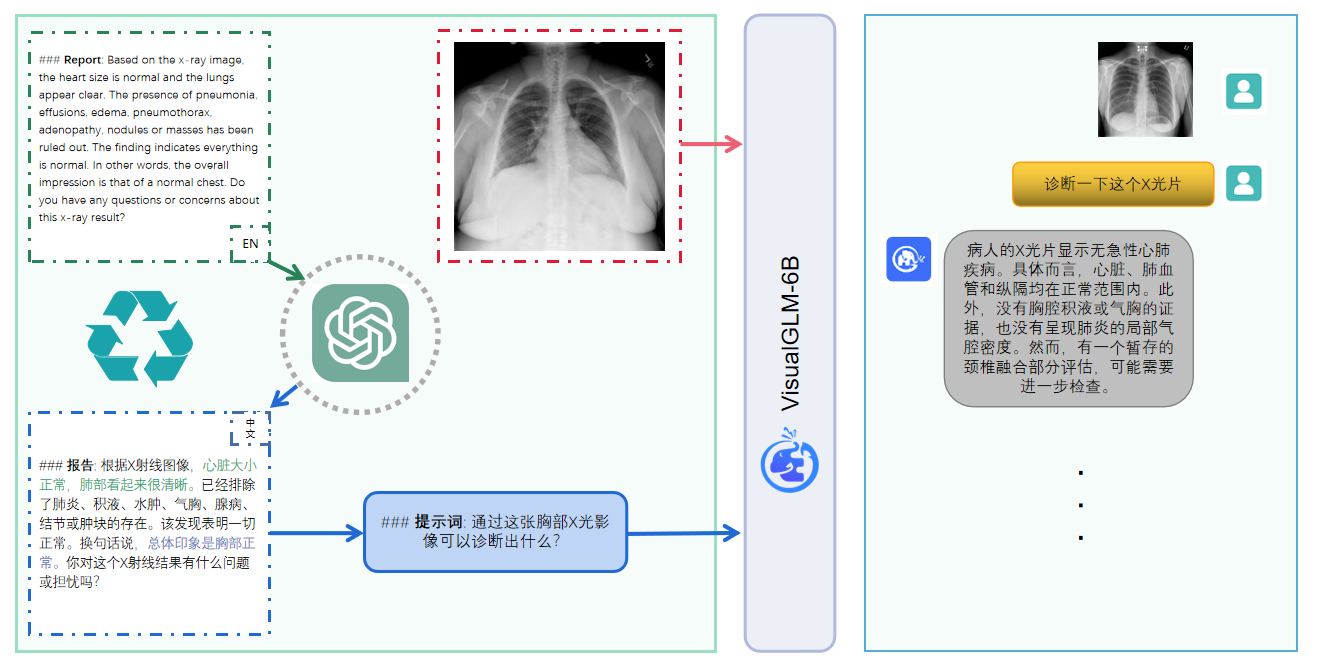

VisualGLM-6B is an open-source, multi-modal dialog language model that supports images, Chinese, and English . The language model is based on ChatGLM-6B with 6.2 billion parameters; the image part builds a bridge between the visual model and the language model through the training of BLIP2-Qformer, with the total model comprising 7.8 billion parameters. Click here for English version.

VisualGLM-6B is an open source multi-modal dialogue language model that supports images, Chinese and English . The language model is based on ChatGLM-6B and has 6.2 billion parameters. The image part builds a bridge between the visual model and the language model by training BLIP2-Qformer. , the overall model has a total of 7.8 billion parameters.

VisualGLM-6B relies on 30M high-quality Chinese image-text pairs from the CogView data set and 300M screened English image-text pairs for pre-training. Chinese and English weights are the same. This training method better aligns visual information to the semantic space of ChatGLM; in the subsequent fine-tuning stage, the model is trained on long visual question and answer data to generate answers that conform to human preferences.

VisualGLM-6B is trained by the SwissArmyTransformer ( sat for short) library, which is a tool library that supports flexible modification and training of Transformer, and supports efficient fine-tuning methods of parameters such as Lora and P-tuning. This project provides a huggingface interface that conforms to user habits, and also provides an interface based on sat.

Combined with model quantization technology, users can deploy it locally on consumer-grade graphics cards (the minimum required is 6.3G of video memory at the INT4 quantization level).

The VisualGLM-6B open source model aims to promote the development of large model technology together with the open source community. Developers and everyone are kindly requested to abide by the open source agreement and do not use this open source model and code and derivatives based on this open source project for any purpose that may bring harm to the country and society. Harmful uses and any services that have not been safety assessed and documented. Currently, this project has not officially developed any applications based on VisualGLM-6B, including websites, Android Apps, Apple iOS applications, Windows Apps, etc.

Since VisualGLM-6B is still in the v1 version, it is currently known to have quite a few limitations , such as image description factuality/model hallucination problems, insufficient image detail information capture, and some limitations from language models. Although the model tries its best to ensure the compliance and accuracy of the data at each stage of training, due to the small scale of the VisualGLM-6B model and the fact that the model is affected by probabilistic and random factors, the accuracy of the output content cannot be guaranteed, and the model is easily Misleading (see Limitations section for details). In subsequent versions of VisualGLM, efforts will be made to optimize such problems. This project does not bear the risks and responsibilities of data security and public opinion risks caused by open source models and codes, or the risks and responsibilities arising from any model being misled, abused, disseminated, or improperly exploited.

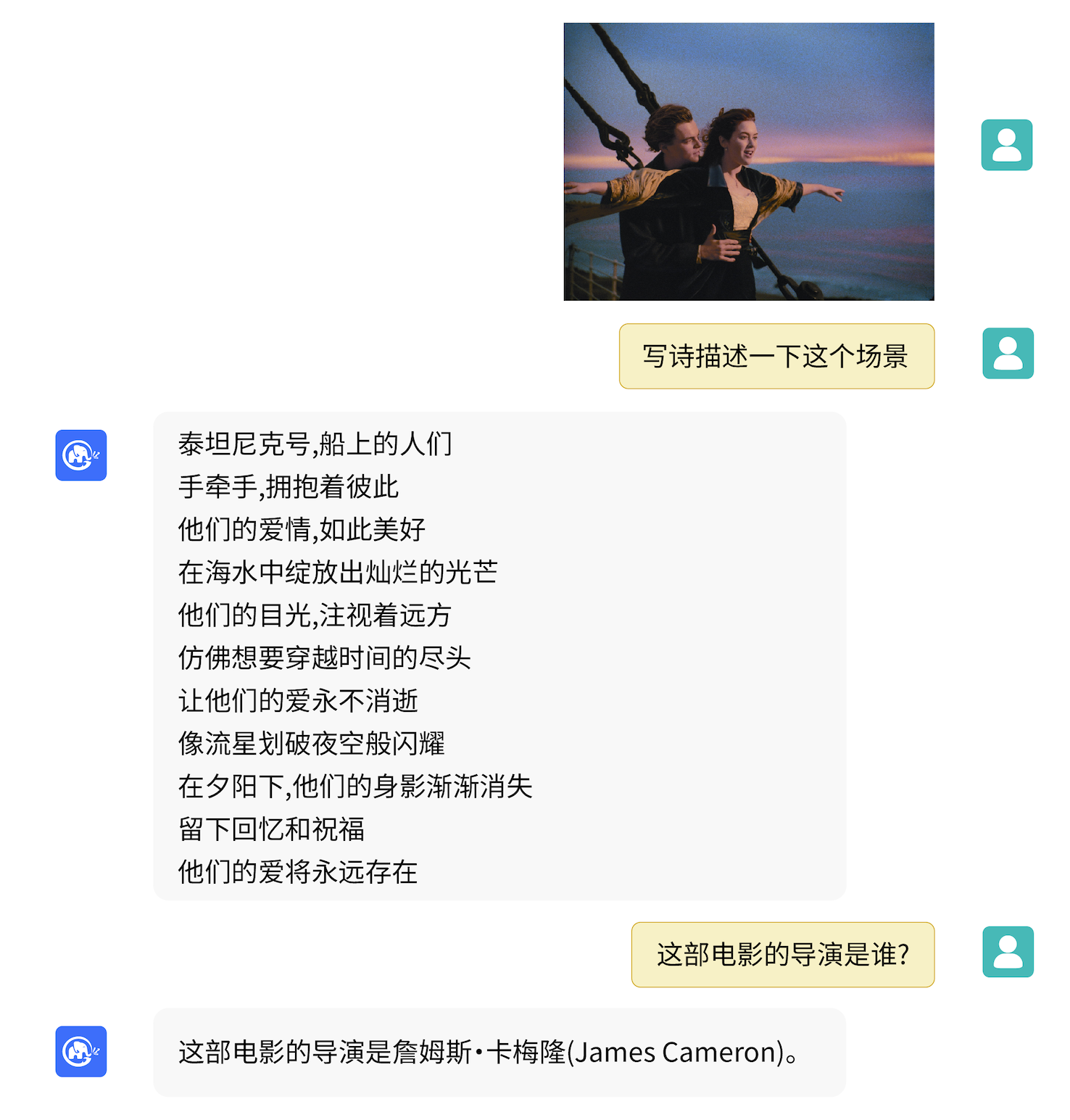

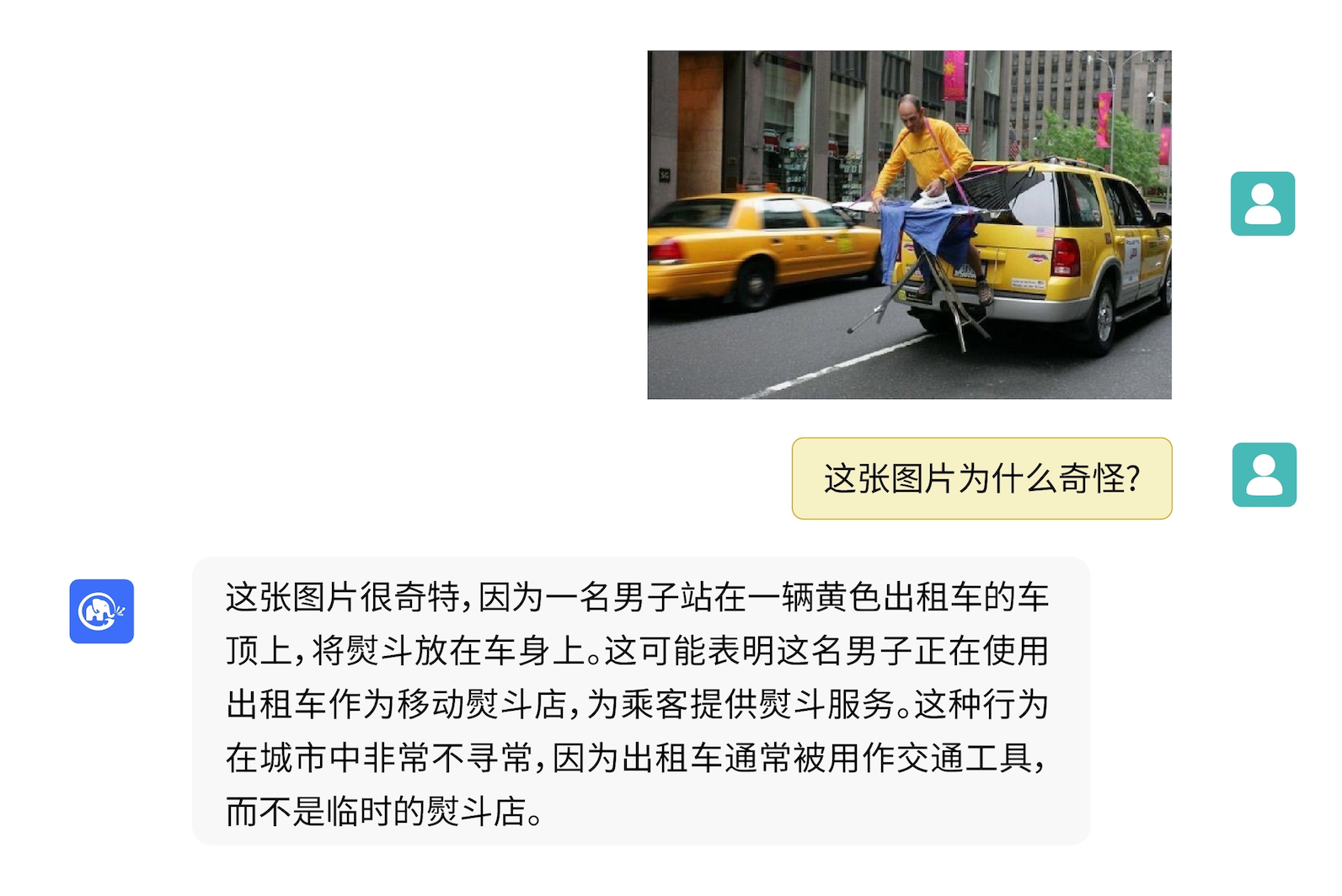

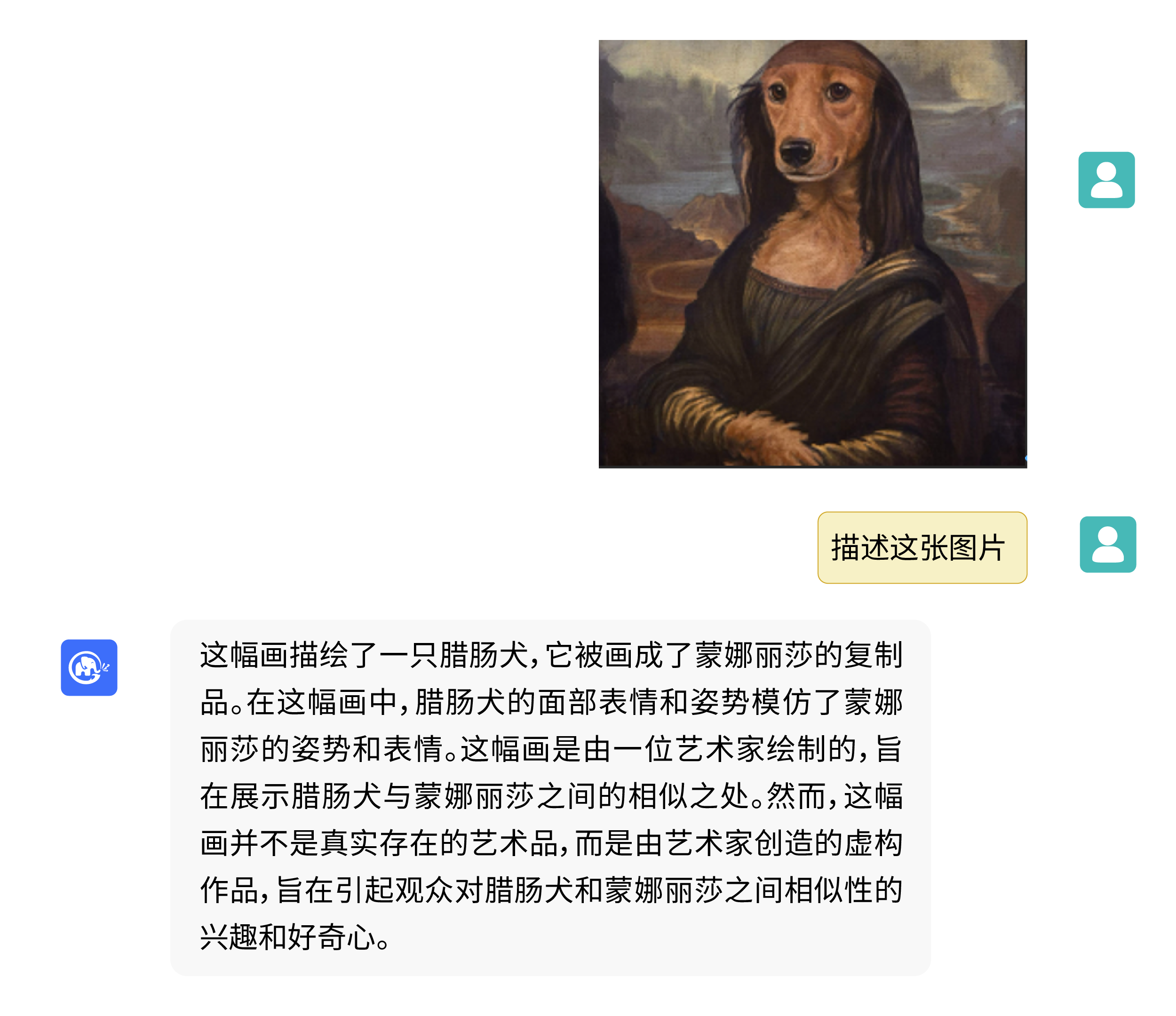

VisualGLM-6B can perform question and answer related knowledge of image description.

Use pip to install dependencies

pip install -i https://pypi.org/simple -r requirements.txt

# 国内请使用aliyun镜像,TUNA等镜像同步最近出现问题,命令如下

pip install -i https://mirrors.aliyun.com/pypi/simple/ -r requirements.txt

At this time, deepspeed library (which supports sat library training) will be installed by default. This library is not necessary for model inference. At the same time, some Windows environments will encounter problems when installing this library. If we want to bypass the deepspeed installation, we can change the command to

pip install -i https://mirrors.aliyun.com/pypi/simple/ -r requirements_wo_ds.txt

pip install -i https://mirrors.aliyun.com/pypi/simple/ --no-deps "SwissArmyTransformer>=0.4.4"

If you use the Huggingface transformers library to call the model ( you also need to install the above dependency package! ), you can pass the following code (where the image path is the local path):

from transformers import AutoTokenizer , AutoModel

tokenizer = AutoTokenizer . from_pretrained ( "THUDM/visualglm-6b" , trust_remote_code = True )

model = AutoModel . from_pretrained ( "THUDM/visualglm-6b" , trust_remote_code = True ). half (). cuda ()

image_path = "your image path"

response , history = model . chat ( tokenizer , image_path , "描述这张图片。" , history = [])

print ( response )

response , history = model . chat ( tokenizer , image_path , "这张图片可能是在什么场所拍摄的?" , history = history )

print ( response ) The above code will automatically download the model implementation and parameters by transformers . The complete model implementation can be found in Hugging Face Hub. If you are slow to download model parameters from Hugging Face Hub, you can manually download the model parameter file from here and load the model locally. For specific methods, please refer to Loading the model from local. For information on quantification, CPU inference, Mac MPS backend acceleration, etc. based on the transformers library model, please refer to the low-cost deployment of ChatGLM-6B.

If you use the SwissArmyTransformer library to call the model, the method is similar. You can use the environment variable SAT_HOME to determine the model download location. In this warehouse directory:

import argparse

from transformers import AutoTokenizer

tokenizer = AutoTokenizer . from_pretrained ( "THUDM/chatglm-6b" , trust_remote_code = True )

from model import chat , VisualGLMModel

model , model_args = VisualGLMModel . from_pretrained ( 'visualglm-6b' , args = argparse . Namespace ( fp16 = True , skip_init = True ))

from sat . model . mixins import CachedAutoregressiveMixin

model . add_mixin ( 'auto-regressive' , CachedAutoregressiveMixin ())

image_path = "your image path or URL"

response , history , cache_image = chat ( image_path , model , tokenizer , "描述这张图片。" , history = [])

print ( response )

response , history , cache_image = chat ( None , model , tokenizer , "这张图片可能是在什么场所拍摄的?" , history = history , image = cache_image )

print ( response ) Efficient fine-tuning of parameters can also be easily performed using the sat library.

Multimodal tasks are widely distributed and of many types, and pre-training often cannot cover everything. Here we provide an example of small sample fine-tuning, using 20 annotated images to enhance the model's ability to answer "background" questions.

Unzip fewshot-data.zip and run the following command:

bash finetune/finetune_visualglm.sh

Currently, three methods of fine-tuning are supported:

--layer_range and --lora_rank parameters can be adjusted according to the specific scenario and data volume.bash finetune/finetune_visualglm_qlora.sh . QLoRA quantizes the linear layer of ChatGLM with 4-bit and only requires 9.8GB of video memory for fine-tuning.--use_lora with --use_ptuning , but it is not recommended unless the model application scenario is very fixed.After training, you can use the following command for inference:

python cli_demo.py --from_pretrained your_checkpoint_path --prompt_zh 这张图片的背景里有什么内容?

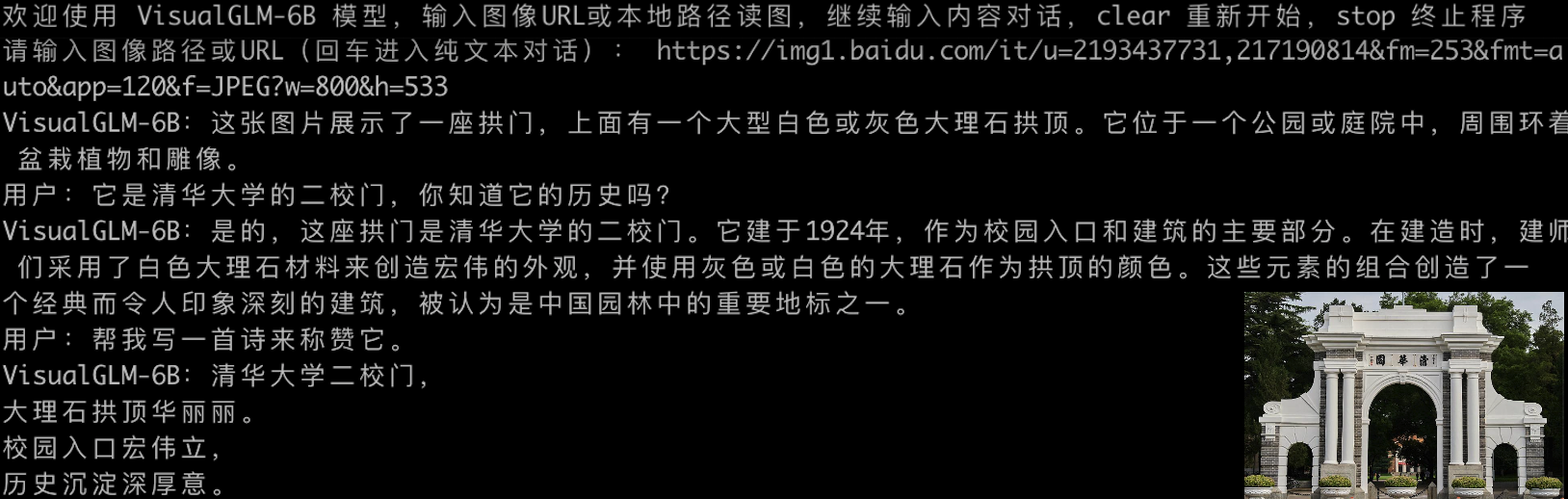

Before fine-tuning:

欢迎使用 VisualGLM-6B 模型,输入图像URL或本地路径读图,继续输入内容对话,clear 重新开始,stop 终止程序

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/2p.png

用户:这张图片的背景里有什么内容?

VisualGLM-6B:人们总是被生活琐事所困扰,无法摆脱束缚.

用户:clear

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/meme.png

用户:这张图片的背景里有什么内容?

VisualGLM-6B:这张图片的背景是一张狗的图片,它躺在木地板上。

用户:clear

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/passport.png

用户:这张图片的背景里有什么内容?

VisualGLM-6B:这张图片的背景是一台电脑键盘和两张护照。

用户:clear

请输入图像路径或URL(回车进入纯文本对话):

After fine-tuning:

欢迎使用 VisualGLM-6B 模型,输入图像URL或本地路径读图,继续输入内容对话,clear 重新开始,stop 终止程序

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/2p.png

用户:这张图片的背景里有什么内容?

VisualGLM-6B:这张图片的背景是雨天,

用户:描述一下这张图片。

VisualGLM-6B:这张图片描绘了雨天的情景,雨水淅淅沥沥地落在地面上。人们穿着雨衣或雨伞,走在雨中,似乎在寻找着什么.

用户:clear

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/meme.png

用户:这张图片的背景里有什么内容?

VisualGLM-6B:这张图片的背景是木质地板。

用户:什么颜色的地板

VisualGLM-6B:这张图片的背景是蓝色的。

用户:描述一下这张图片。

VisualGLM-6B:这张图片描绘了一只棕色的小狗躺在蓝色的木质地板上。

用户:clear

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/passport.png

用户:这张图片的背景里有什么内容?

VisualGLM-6B:这张图片的背景是电脑键盘。

用户:描述一下这张图片。

VisualGLM-6B:这张图片的特点是电脑键盘和护照。

用户:有几张护照

VisualGLM-6B:两张护照。

用户:clear

请输入图像路径或URL(回车进入纯文本对话):

If you want to merge the parameters of the LoRA part into the original weights, you can call merge_lora() , for example:

from finetune_visualglm import FineTuneVisualGLMModel

import argparse

model , args = FineTuneVisualGLMModel . from_pretrained ( 'checkpoints/finetune-visualglm-6b-05-19-07-36' ,

args = argparse . Namespace (

fp16 = True ,

skip_init = True ,

use_gpu_initialization = True ,

device = 'cuda' ,

))

model . get_mixin ( 'lora' ). merge_lora ()

args . layer_range = []

args . save = 'merge_lora'

args . mode = 'inference'

from sat . training . model_io import save_checkpoint

save_checkpoint ( 1 , model , None , None , args ) Fine-tuning requires the installation of the deepspeed library. Currently, this process only supports Linux systems. More sample instructions and process instructions for Windows systems will be completed in the near future.

python cli_demo.py The program will automatically download the sat model and conduct an interactive conversation on the command line. Enter instructions and press Enter to generate a reply. Enter clear to clear the conversation history. Enter stop to terminate the program.

The program provides the following hyperparameters to control the generation process and quantization accuracy:

The program provides the following hyperparameters to control the generation process and quantization accuracy:

usage: cli_demo.py [-h] [--max_length MAX_LENGTH] [--top_p TOP_P] [--top_k TOP_K] [--temperature TEMPERATURE] [--english] [--quant {8,4}]

optional arguments:

-h, --help show this help message and exit

--max_length MAX_LENGTH

max length of the total sequence

--top_p TOP_P top p for nucleus sampling

--top_k TOP_K top k for top k sampling

--temperature TEMPERATURE

temperature for sampling

--english only output English

--quant {8,4} quantization bits

It should be noted that during training, the prompt words for English question and answer pairs are Q: A: :, while the Chinese prompts are问:答: The Chinese prompts are used in the web demo, so the English responses will be worse and mixed with Chinese; if necessary To reply in English, please use the --english option in cli_demo.py .

We also provide a typewriter effect command line tool inherited from ChatGLM-6B . This tool uses the Huggingface model:

python cli_demo_hf.pyWe also support parallel multi-card deployment of models: (You need to update the latest version of sat. If you have downloaded checkpoint before, you also need to manually delete it and download it again)

torchrun --nnode 1 --nproc-per-node 2 cli_demo_mp.py

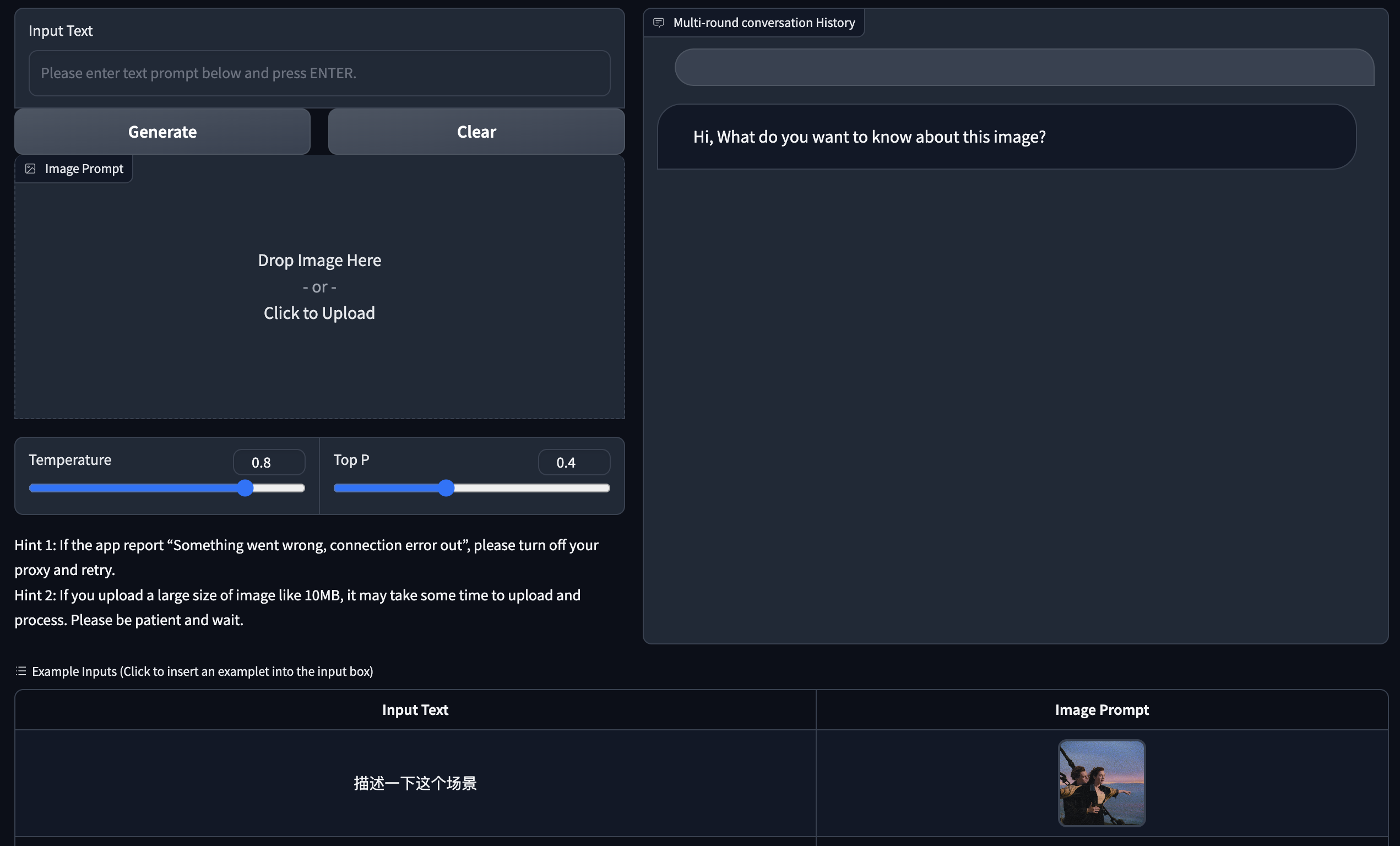

We provide a web version Demo based on Gradio. First install Gradio: pip install gradio . Then download and enter this warehouse to run web_demo.py :

git clone https://github.com/THUDM/VisualGLM-6B

cd VisualGLM-6B

python web_demo.py

The program will automatically download the sat model, run a Web Server, and output the address. Open the output address in a browser to use it.

We also provide a typewriter effect web version tool inherited from ChatGLM-6B . This tool uses the Huggingface model and will run on port :8080 after startup:

python web_demo_hf.py Both web version demos accept the command line parameter --share to generate gradio public links, and accept --quant 4 and --quant 8 to use 4-bit quantization/8-bit quantization respectively to reduce video memory usage.

First, you need to install additional dependencies pip install fastapi uvicorn , and then run api.py in the warehouse:

python api.py The program will automatically download the sat model, which is deployed on the local port 8080 by default and called through the POST method. The following is an example of using curl to request. Generally speaking, you can also use the code method to perform POST.

echo " { " image " : " $( base64 path/to/example.jpg ) " , " text " : "描述这张图片" , " history " :[]} " > temp.json

curl -X POST -H " Content-Type: application/json " -d @temp.json http://127.0.0.1:8080The return value obtained is

{

"response":"这张图片展现了一只可爱的卡通羊驼,它站在一个透明的背景上。这只羊驼长着一张毛茸茸的耳朵和一双大大的眼睛,它的身体是白色的,带有棕色斑点。",

"history":[('描述这张图片', '这张图片展现了一只可爱的卡通羊驼,它站在一个透明的背景上。这只羊驼长着一张毛茸茸的耳朵和一双大大的眼睛,它的身体是白色的,带有棕色斑点。')],

"status":200,

"time":"2023-05-16 20:20:10"

}

We also provide api_hf.py that uses the Huggingface model. The usage is consistent with the API of the sat model:

python api_hf.pyIn the Huggingface implementation, the model is loaded with FP16 precision by default, and running the above code requires approximately 15GB of video memory. If your GPU has limited memory, you can try loading the model in quantized mode. How to use it:

# 按需修改,目前只支持 4/8 bit 量化。下面将只量化ChatGLM,ViT 量化时误差较大

model = AutoModel . from_pretrained ( "THUDM/visualglm-6b" , trust_remote_code = True ). quantize ( 8 ). half (). cuda () In the sat implementation, you need to first pass the parameter to change the loading location to cpu , and then perform quantification. The method is as follows, see cli_demo.py for details:

from sat . quantization . kernels import quantize

quantize ( model , args . quant ). cuda ()

# 只需要 7GB 显存即可推理This project is in the V1 version. The parameters and calculation volume of the visual and language models are relatively small. We have summarized the main improvement directions as follows:

The code of this repository is open source according to the Apache-2.0 agreement. The use of the weights of the VisualGLM-6B model needs to comply with the Model License.

If you find our work helpful, please consider citing the following papers

@inproceedings{du2022glm,

title={GLM: General Language Model Pretraining with Autoregressive Blank Infilling},

author={Du, Zhengxiao and Qian, Yujie and Liu, Xiao and Ding, Ming and Qiu, Jiezhong and Yang, Zhilin and Tang, Jie},

booktitle={Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)},

pages={320--335},

year={2022}

}

@article{ding2021cogview,

title={Cogview: Mastering text-to-image generation via transformers},

author={Ding, Ming and Yang, Zhuoyi and Hong, Wenyi and Zheng, Wendi and Zhou, Chang and Yin, Da and Lin, Junyang and Zou, Xu and Shao, Zhou and Yang, Hongxia and others},

journal={Advances in Neural Information Processing Systems},

volume={34},

pages={19822--19835},

year={2021}

}

The data set in the instruction fine-tuning phase of VisualGLM-6B includes part of the English graphic and text data from the MiniGPT-4 and LLAVA projects, as well as many classic cross-modal working data sets. We sincerely thank them for their contributions.