Project | ArXiv | Paper | Huggingface-demo | Colab-demo

2024.02 Test the model using custom handwriting samples:

A Huggingface demo is now available and running

Colab demo for custom handwritings

Colab demo for IAM/CVL dataset

Ankan Kumar Bhunia, Salman Khan, Hisham Cholakkal, Rao Muhammad Anwer, Fahad Shahbaz Khan & Mubarak Shah

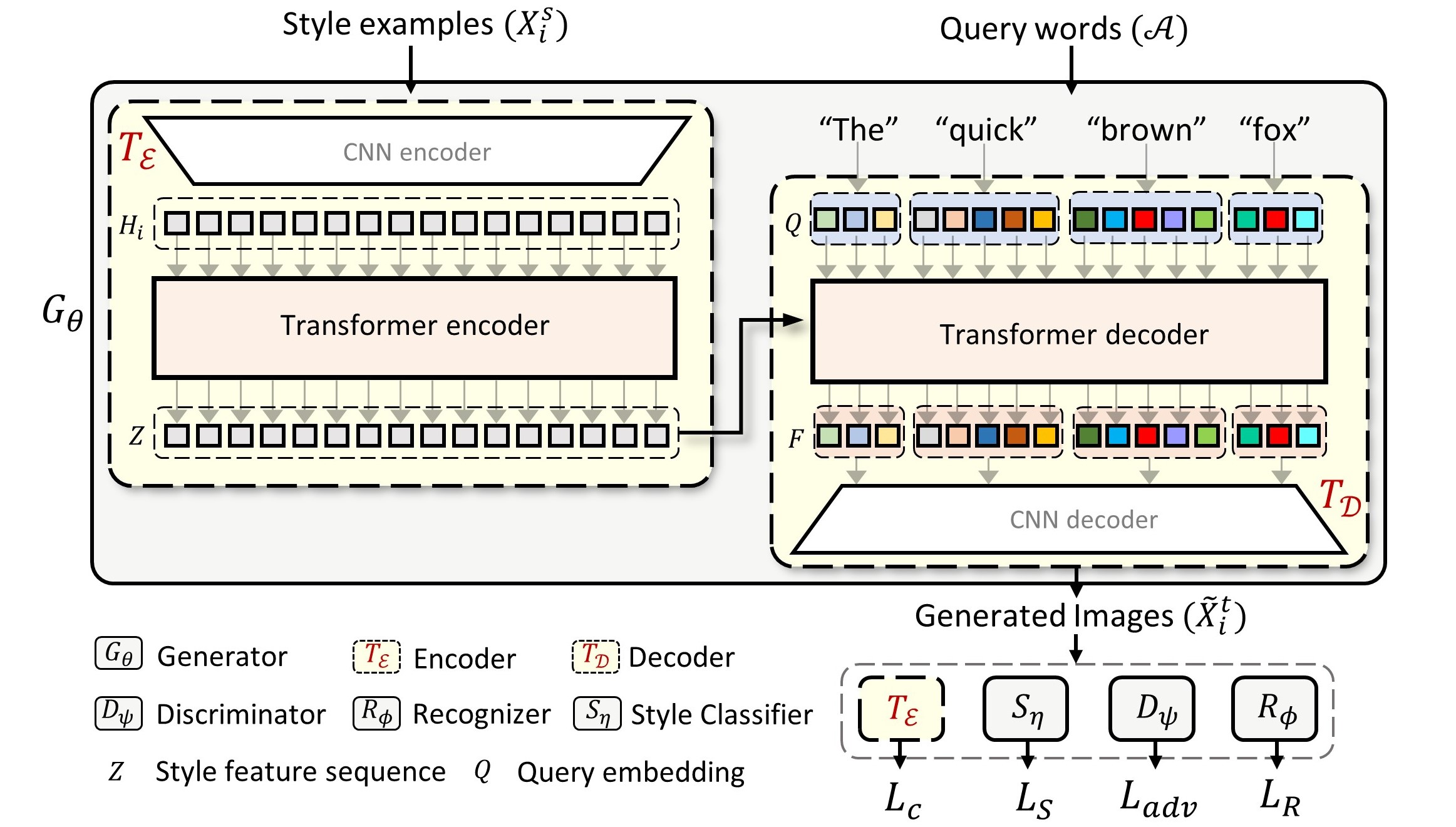

Abstract: We propose a novel transformer-based styled handwritten text image generation approach, HWT, that strives to learn both style-content entanglement and global and local writing style patterns. The proposed HWT captures the long- and short-range relationships within the style examples through a self-attention mechanism, thereby encoding both global and local style patterns. Further, the proposed transformer-based HWT comprises an encoder-decoder attention that enables style-content entanglement by gathering the style representation of each query character. To the best of our knowledge, we are the first to introduce a transformer-based generative network for styled handwritten text generation. Our proposed HWT generates realistic styled handwritten text images and significantly outperforms the state of the art, as demonstrated through extensive qualitative, quantitative and human-based evaluations. The proposed HWT can handle text of arbitrary length and any desired writing style in a few-shot setting. Further, our HWT generalizes well to the challenging scenario where both words and writing style are unseen during training, generating realistic styled handwritten text images.

Python 3.7

PyTorch >=1.4

Please see INSTALL.md for installing required libraries. You can change the content in the file mytext.txt to visualize generated handwriting while training.

Download the dataset files and models from https://drive.google.com/file/d/16g9zgysQnWk7-353_tMig92KsZsrcM6k/view?usp=sharing and unzip them inside the files folder. In short, run the following lines in a bash terminal.

git clone https://github.com/ankanbhunia/Handwriting-Transformers

cd Handwriting-Transformers

pip install --upgrade --no-cache-dir gdown

gdown --id 16g9zgysQnWk7-353_tMig92KsZsrcM6k && unzip files.zip && rm files.zip

To start training the model, run:

python train.py

If you want to use wandb, please install it and set your auth_key in the train.py file (line 4).

You can change different parameters in the params.py file.

You can train the model on any custom dataset other than IAM and CVL. The process involves creating a dataset_name.pickle file and placing it inside the files folder. The structure of dataset_name.pickle is a simple Python dictionary.

{'train': [{writer_1: [{'img': <PIL.IMAGE>, 'label': <str_label>}, ...]}, {writer_2: [{'img': <PIL.IMAGE>, 'label': <str_label>}, ...]}, ...],

'test': [{writer_3: [{'img': <PIL.IMAGE>, 'label': <str_label>}, ...]}, {writer_4: [{'img': <PIL.IMAGE>, 'label': <str_label>}, ...]}, ...],

}

The Huggingface demo can also be run locally with Docker:

docker run -it -p 7860:7860 --platform=linux/amd64 registry.hf.space/ankankbhunia-hwt:latest python app.py
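Returning to the custom dataset format: the following is a minimal sketch of how a dataset_name.pickle file could be assembled, taking the dictionary layout described above literally (one single-key dictionary per writer inside each split). The helper names `build_split`, `build_dataset` and `save_dataset` are hypothetical and not part of the repository, and the loader in the repository may expect additional details, so treat this as an illustration rather than the reference implementation.

```python
import pickle
from collections import defaultdict


def build_split(samples):
    """Group (writer_id, image, label) triples into the per-writer layout.

    `samples` is any iterable of triples; `image` is expected to be a PIL
    image in real use, but this helper does not inspect it.
    """
    by_writer = defaultdict(list)
    for writer_id, img, label in samples:
        by_writer[writer_id].append({"img": img, "label": label})
    # One single-key dictionary per writer, as in the structure shown above.
    return [{writer_id: items} for writer_id, items in by_writer.items()]


def build_dataset(train_samples, test_samples):
    """Assemble the top-level dictionary with 'train' and 'test' splits."""
    return {
        "train": build_split(train_samples),
        "test": build_split(test_samples),
    }


def save_dataset(dataset, path):
    """Serialize the dataset dictionary to `path` with pickle."""
    with open(path, "wb") as f:
        pickle.dump(dataset, f)
```

In real use, each image would be a PIL image (for example one opened with `PIL.Image.open`), and the result would be written with something like `save_dataset(dataset, "files/my_dataset.pickle")`, where `my_dataset` is a placeholder name.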

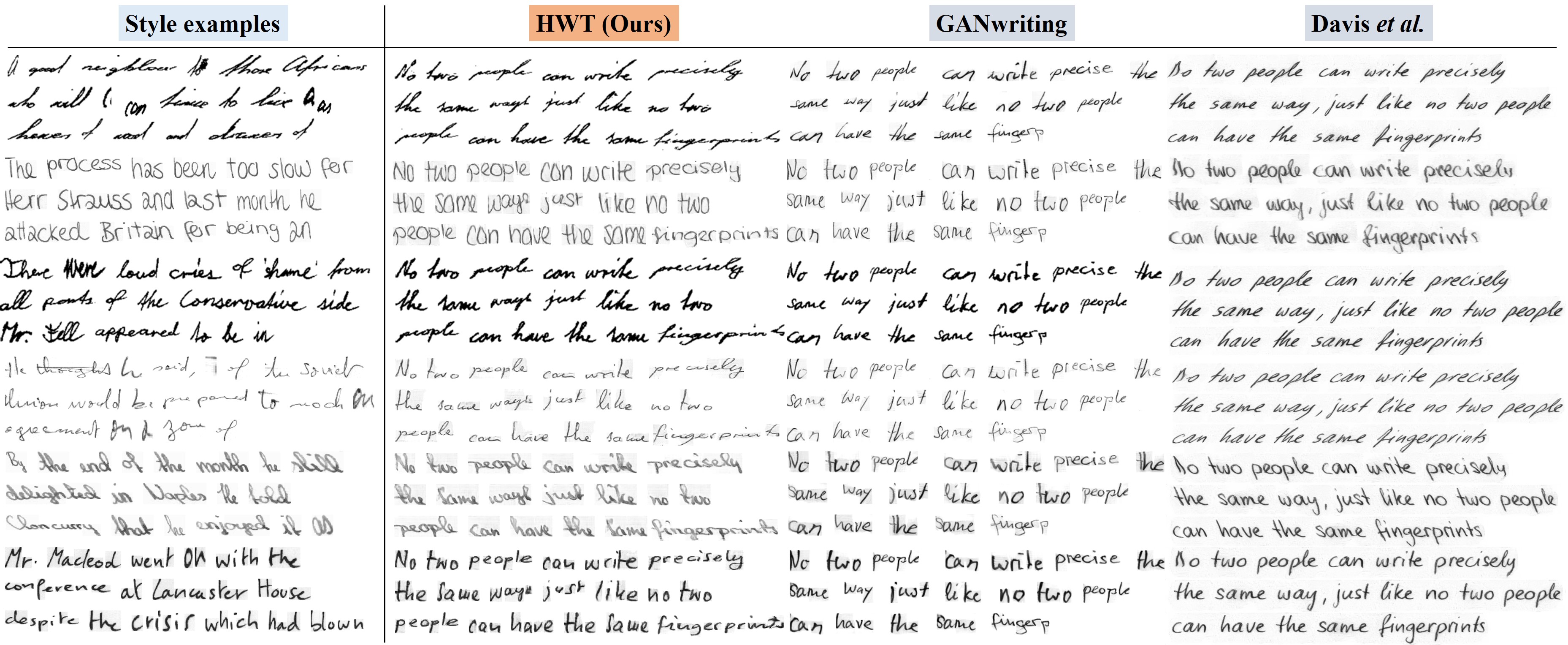

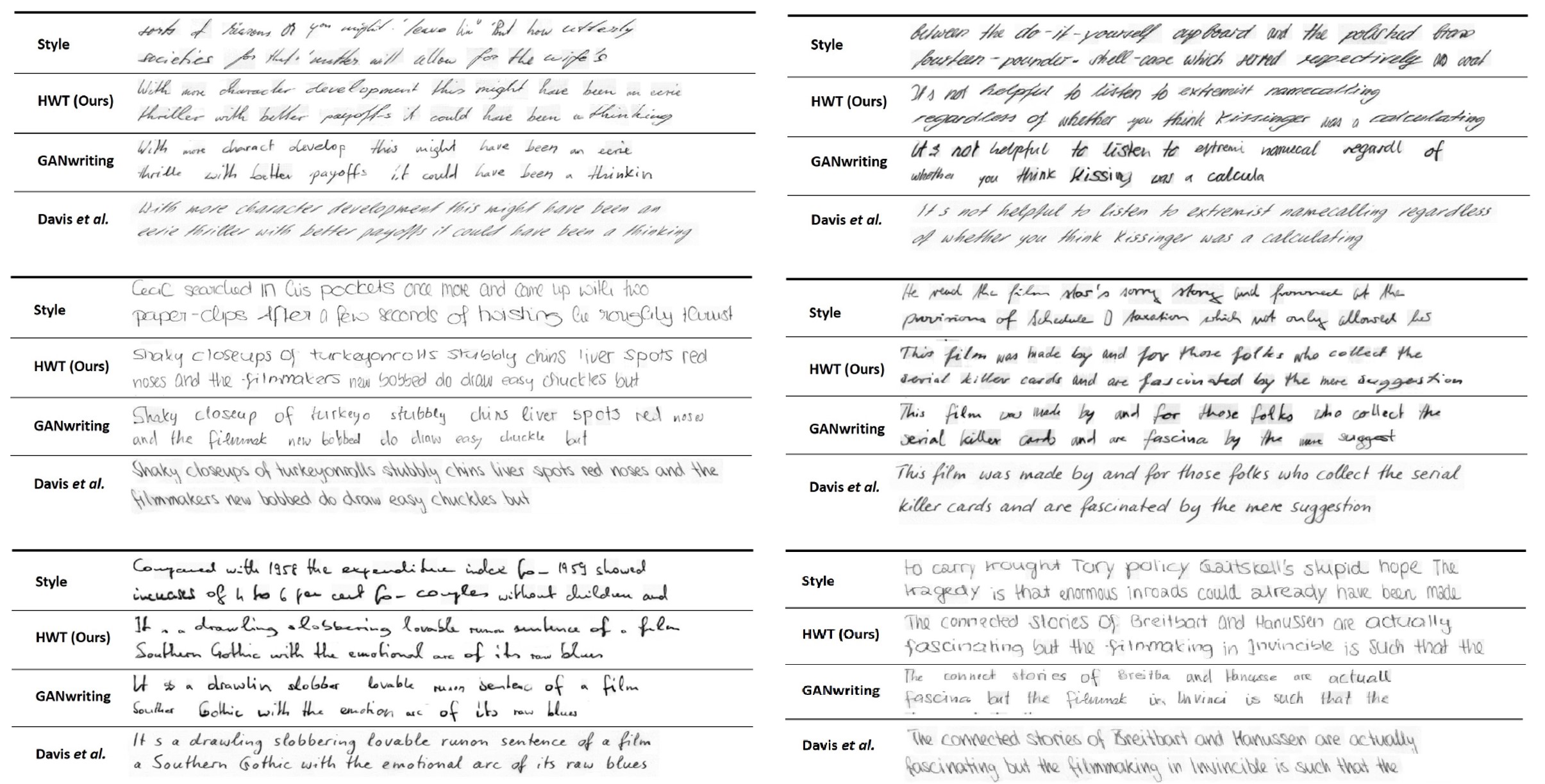

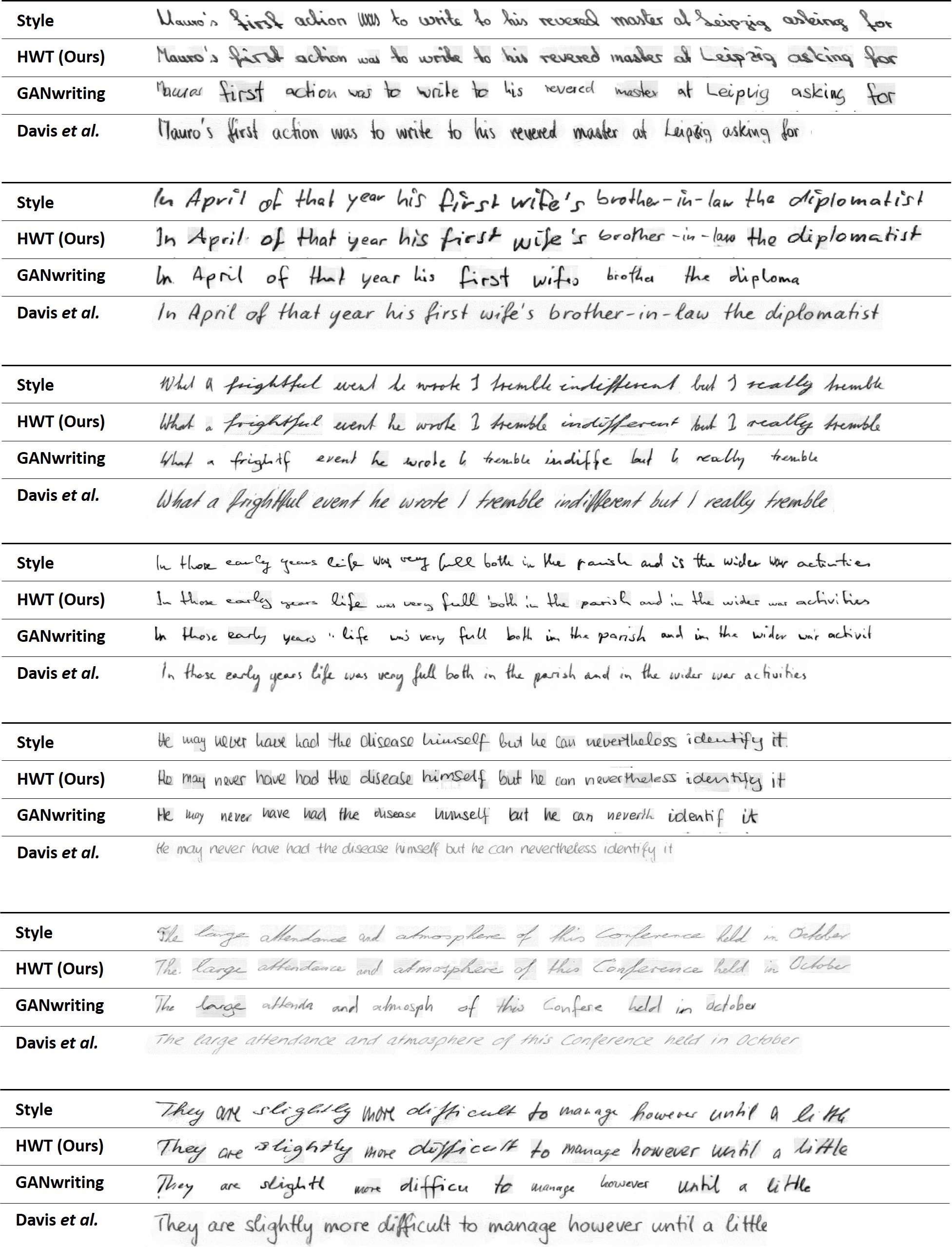

Please check the results folder in the repository for more qualitative analysis. Also, please check out the Colab demo to try the model with your own custom text and writing style.

Reconstruction results using the proposed HWT in comparison to GANwriting and Davis et al. We use the same text as in the style examples to generate handwritten images.

If you use the code for your research, please cite our paper:

@InProceedings{Bhunia_2021_ICCV,

author = {Bhunia, Ankan Kumar and Khan, Salman and Cholakkal, Hisham and Anwer, Rao Muhammad and Khan, Fahad Shahbaz and Shah, Mubarak},

title = {Handwriting Transformers},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2021},

pages = {1086-1094}

}