See the GENERATIVE MODELS FOR 3D SHAPE COMPLETION paper.


The repository contains code for training and sampling from a generative model for 3D shape completion. The model implemented in this repository is based on the diffusion model proposed in the paper DiffComplete: Diffusion-based Generative 3D Shape Completion.
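DiffComplete, like the improved-diffusion code base this repository builds on, is a denoising diffusion model. During training, a clean shape x_0 is corrupted with Gaussian noise according to a variance schedule, and the network learns to reverse that corruption. The sketch below shows only the forward (noising) step, x_t = sqrt(ᾱ_t) · x_0 + sqrt(1 − ᾱ_t) · ε, on a flattened voxel grid. The linear beta schedule from 1e-4 to 0.02 over 1000 steps is the common DDPM default and is an assumption here. The function names are illustrative, and the repository's actual schedule and conditioning are not shown.

```python
import math
import random
from typing import List


def linear_betas(num_steps: int = 1000, beta_start: float = 1e-4,
                 beta_end: float = 0.02) -> List[float]:
    """Linearly spaced noise variances beta_1..beta_T (assumed DDPM defaults)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alphas_cumprod(betas: List[float]) -> List[float]:
    """Cumulative products alpha_bar_t = prod over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def q_sample(x0: List[float], t: int, alpha_bar: List[float],
             rng: random.Random) -> List[float]:
    """Draw x_t ~ q(x_t | x_0) for a flattened grid x0 at 0-based step t."""
    a = alpha_bar[t]
    return [math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
            for v in x0]


if __name__ == "__main__":
    rng = random.Random(0)
    abar = alphas_cumprod(linear_betas())
    x0 = [1.0] * 8  # toy "shape": eight occupied voxels
    print(q_sample(x0, 0, abar, rng))    # nearly identical to x0
    print(q_sample(x0, 999, abar, rng))  # nearly pure Gaussian noise
```

The network is then trained to predict the noise (or x_0) from x_t, conditioned on the partial input shape.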

Credits:

The base code is taken from improved-diffusion.

The evaluation code is taken from PatchComplete.

Pretrained models can be downloaded from this link.

virtualenv -p python3.8 venv

source venv/bin/activate

export PYTHONPATH="${PYTHONPATH}:$(pwd)"

pip install -r requirements.txt

Note: CUDA is required to run the code (the evaluation code depends on it).
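Since the code base is built on improved-diffusion, which uses PyTorch, a quick way to confirm that a CUDA device is visible before running evaluation is a check like the one below. The helper name cuda_available is illustrative; it returns False when PyTorch is not installed instead of raising.

```python
def cuda_available() -> bool:
    """Return True if PyTorch is installed and can see a CUDA device."""
    try:
        import torch  # imported lazily so the check works without PyTorch
    except ImportError:
        return False
    return torch.cuda.is_available()


if __name__ == "__main__":
    if cuda_available():
        print("CUDA is available.")
    else:
        print("CUDA not found; the evaluation code will not run.")
```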

The dataset_hole.py script generates the shape completion dataset. To use the same models as in the paper, pass the --filter_path option with the path to the file that lists the models to use for the given dataset. These files are located in the ./datasets/txt directory.

All available arguments can be listed by running python ./dataset_hole.py --help.
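The format of the files in ./datasets/txt is not described here. Assuming one model identifier per line, selecting the listed models from a larger collection could look like the following sketch. The function names, the example IDs, and the one-ID-per-line format are all assumptions, not the behavior of dataset_hole.py.

```python
import tempfile
from pathlib import Path
from typing import Iterable, List, Set


def load_filter_ids(path: Path) -> Set[str]:
    """Read model IDs, one per line, skipping blank lines (assumed format)."""
    with open(path, encoding="utf-8") as f:
        return {line.strip() for line in f if line.strip()}


def filter_models(model_ids: Iterable[str], allowed: Set[str]) -> List[str]:
    """Keep only the models listed in the filter file, preserving order."""
    return [m for m in model_ids if m in allowed]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        filter_file = Path(tmp) / "furniture.txt"  # hypothetical file name
        filter_file.write_text("chair_001\nsofa_042\n\n", encoding="utf-8")
        allowed = load_filter_ids(filter_file)
        print(filter_models(["chair_001", "lamp_007", "sofa_042"], allowed))
        # ['chair_001', 'sofa_042']
```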

Data sources:

To generate the shape completion datasets, run the following commands:

cd dataset_processing

Objaverse Furniture:

python ./dataset_hole.py --output datasets/objaverse-furniture --tag_names chair lamp bathtub chandelier bench bed table sofa toilet

Objaverse Vehicles:

python ./dataset_hole.py --output datasets/objaverse-vehicles --category_names cars-vehicles --tag_names car truck bus airplane

Objaverse Animals:

python ./dataset_hole.py --output datasets/objaverse-animals --category_names animals-pets --tag_names cat dog

ShapeNet:

python ./dataset_hole.py --dataset shapenet --source SHAPENET_DIR_PATH --output datasets/shapenet

ModelNet40:

python ./dataset_hole.py --dataset modelnet --source MODELNET40_DIR_PATH --output datasets/modelnet40

The super-resolution dataset used for training was created by running the shape completion model over the training and validation datasets to obtain predicted shapes, which were then used as input to the super-resolution model.
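The step described above pairs each shape completion prediction (the input) with its higher-resolution ground truth (the target). The sketch below captures only that pairing logic with in-memory dictionaries. complete_fn stands in for sampling from the trained shape completion model, and build_sr_pairs, fill_everything, and the Grid type are hypothetical names, not the layout of the objaverse-furniture-sr folders.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Grid = List[float]  # placeholder: a flattened voxel grid


def build_sr_pairs(
    ids: Sequence[str],
    partial_inputs: Dict[str, Grid],
    high_res_targets: Dict[str, Grid],
    complete_fn: Callable[[Grid], Grid],
) -> List[Tuple[str, Grid, Grid]]:
    """Return (id, predicted shape, high-res ground truth) triples.

    complete_fn stands in for sampling from the trained completion model.
    """
    pairs = []
    for shape_id in ids:
        predicted = complete_fn(partial_inputs[shape_id])  # SR model input
        pairs.append((shape_id, predicted, high_res_targets[shape_id]))
    return pairs


def fill_everything(grid: Grid) -> Grid:
    """Toy stand-in for the completion model: marks every voxel occupied."""
    return [1.0 for _ in grid]


if __name__ == "__main__":
    partial = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
    targets = {"a": [1.0] * 8, "b": [0.5] * 8}
    for shape_id, x, y in build_sr_pairs(["a", "b"], partial, targets,
                                         fill_everything):
        print(shape_id, x, len(y))
```

This would be run once over the training split and once over the validation split. Using predictions rather than downsampled ground truth as input presumably exposes the super-resolution model to the kind of errors it will see at inference time.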

The train.py script is used for training. All available arguments can be listed by running python ./scripts/train.py --help.

To train the BaseComplete model run the following command:

python ./scripts/train.py --batch_size 32

--data_path "./datasets/objaverse-furniture/32/"

--train_file_path "../datasets/objaverse-furniture/train.txt"

--val_file_path "../datasets/objaverse-furniture/val.txt"

--dataset_name complete

To train with the ROI mask, add the --use_roi True option.
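Boolean options such as --use_roi and --super_res take an explicit value (True or False) rather than acting as bare switches. The value is given as a separate argument (--use_roi True) or joined without spaces (--use_roi=True); with spaces around the equals sign, argparse would read "=" itself as the value and reject it. The sketch below shows the common str2bool pattern for parsing such flags, similar to the helper in improved-diffusion's script utilities. Whether this repository's train.py uses exactly this helper is an assumption, and the defaults below are placeholders, not the script's real defaults.

```python
import argparse


def str2bool(value: str) -> bool:
    """Convert strings such as 'True', 'false', 'yes', '0' to a bool."""
    lowered = value.lower()
    if lowered in ("yes", "true", "t", "y", "1"):
        return True
    if lowered in ("no", "false", "f", "n", "0"):
        return False
    raise argparse.ArgumentTypeError(f"boolean value expected, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical subset of the training options (defaults are placeholders)."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--batch_size", type=int, default=32)
    parser.add_argument("--dataset_name", type=str, default="complete")
    parser.add_argument("--use_roi", type=str2bool, default=False)
    parser.add_argument("--super_res", type=str2bool, default=False)
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--use_roi", "True"])
    print(args.use_roi)  # True
```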

To train the low res processing model run the following command:

python ./scripts/train.py --batch_size 32

... # data options

--in_scale_factor 1

--dataset_name complete_32_64

To train the super-resolution model, run the following command:

python ./scripts/train.py --batch_size 32

--data_path "./datasets/objaverse-furniture-sr/"

--val_data_path "./datasets/objaverse-furniture-sr-val/"

--super_res True

--dataset_name sr

To sample one shape using a mesh as the condition, run the following command:

python ./scripts/sample.py --model_path MODEL_PATH

--sample_path SAMPLE_PATH # Condition

--input_mesh True

--condition_size 32 # Expected condition size

--output_size 32 # Expected output size

Or, using a .npy file as input:

python ./scripts/sample.py --model_path MODEL_PATH

--sample_path SAMPLE_PATH # Condition

--input_mesh False

--output_size 32 # Expected output size

To evaluate on the whole dataset, run the following command:

python ./scripts/evaluate_dataset.py

--data_path "./datasets/objaverse-furniture/32"

--file_path "./datasets/objaverse-furniture/test.txt"

--model_path MODEL_PATH

Evaluation on the test dataset:

| Metric | BaseComplete | BaseComplete + ROI mask |
|---|---|---|
| CD | 3.53 | 2.86 |
| IoU | 81.62 | 84.77 |
| L1 | 0.0264 | 0.0187 |

Note: CD and IoU are scaled by 100. Lower values are better for CD and L1, while higher values are better for IoU.
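The numbers in the table come from the PatchComplete evaluation code. For orientation only, the sketch below computes textbook versions of the three metrics: IoU between two binary occupancy grids, mean absolute (L1) error between two grids, and a symmetric Chamfer distance between two point sets. PatchComplete's exact definitions (for example, squared versus unsquared distances in CD, summing versus averaging the two directions, or how grids are thresholded) may differ, so these functions are not expected to reproduce the table. All function names are illustrative.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def voxel_iou(pred: Sequence[int], gt: Sequence[int]) -> float:
    """Intersection over union of two flattened binary occupancy grids."""
    inter = sum(1 for p, g in zip(pred, gt) if p and g)
    union = sum(1 for p, g in zip(pred, gt) if p or g)
    return inter / union if union else 1.0  # two empty grids count as a match


def l1_error(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Mean absolute difference between two flattened grids of equal size."""
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(gt)


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer distance: sum of mean nearest-neighbor distances."""
    def one_way(src: Sequence[Point], dst: Sequence[Point]) -> float:
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(a, b) + one_way(b, a)


if __name__ == "__main__":
    pred, gt = [1, 1, 0, 0], [1, 0, 0, 1]
    print(round(100 * voxel_iou(pred, gt), 2))  # 33.33 (IoU scaled by 100)
```

The brute-force nearest-neighbor search in chamfer_distance is quadratic in the number of points, which is fine for a sketch but slow for dense point clouds, where a KD-tree or GPU implementation is typically used.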

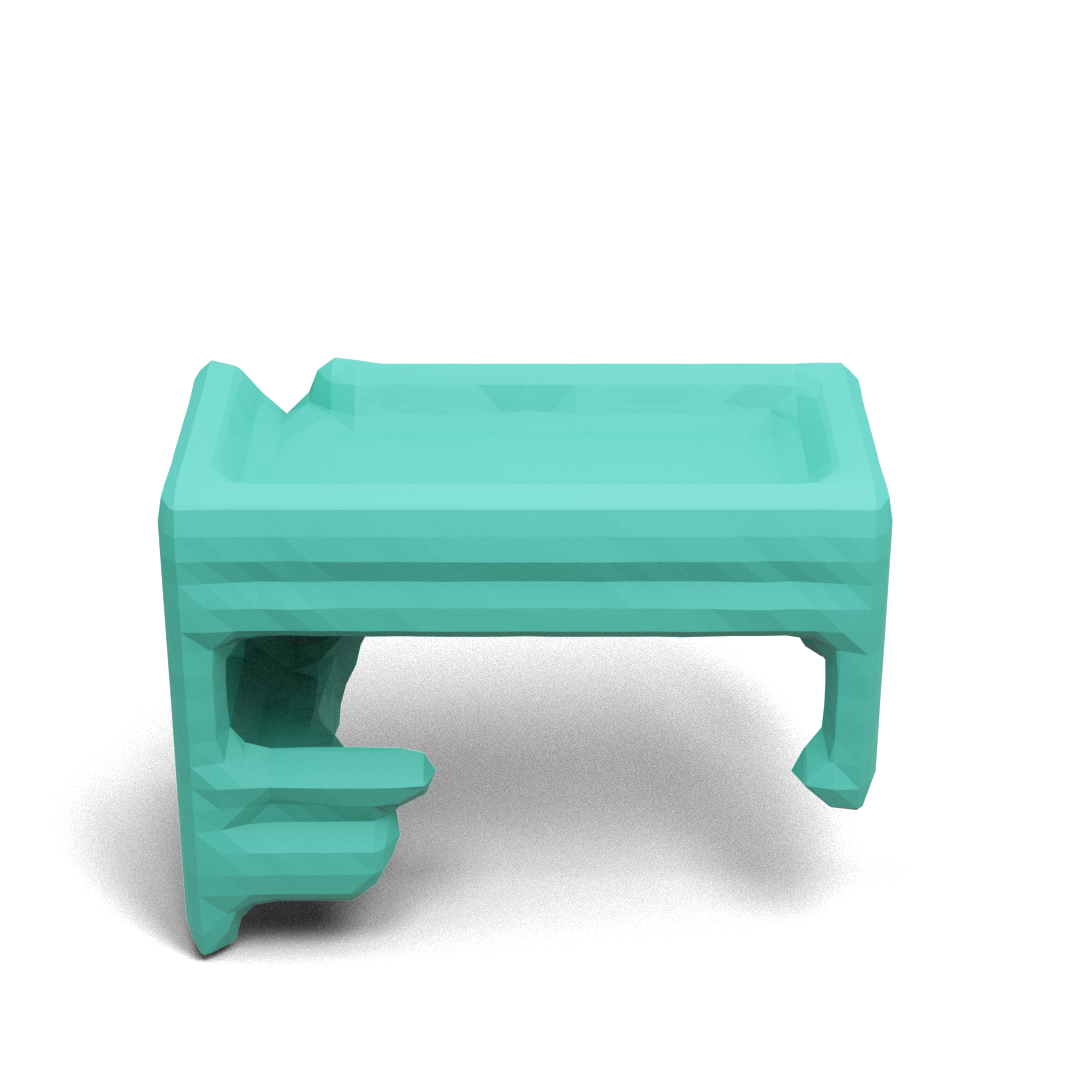

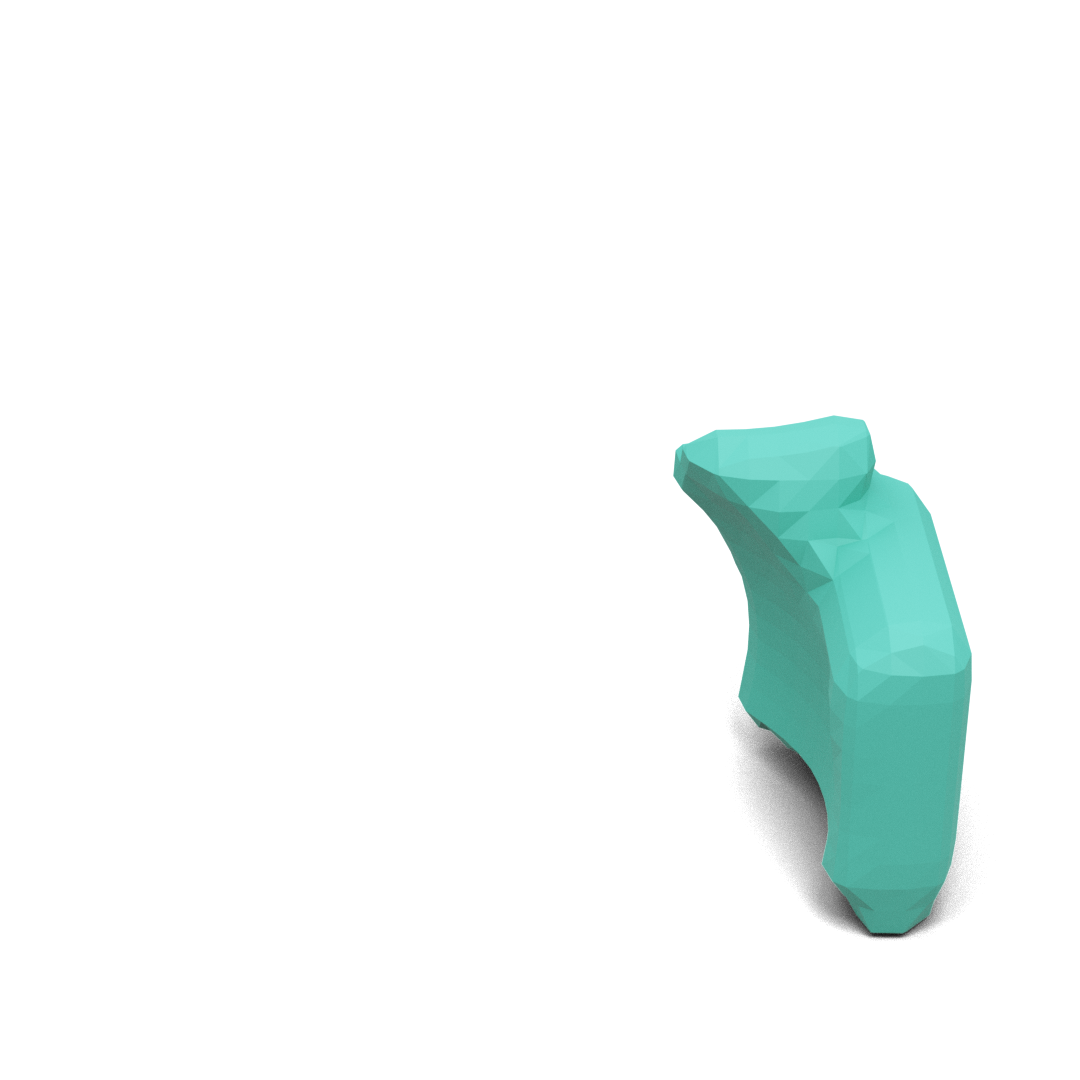

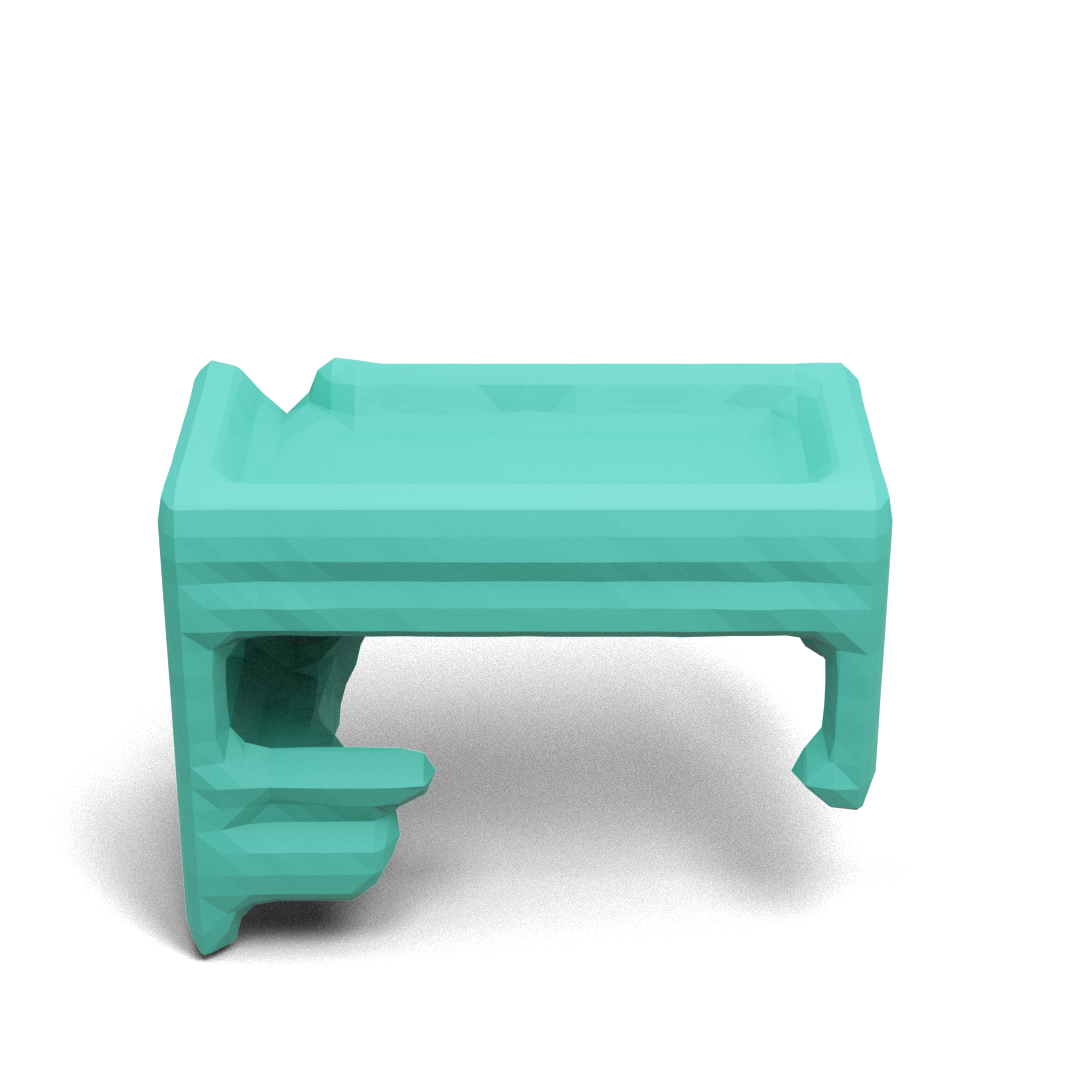

Qualitative results (columns: Condition, Prediction, Ground Truth).