tinker chat

1.0.0

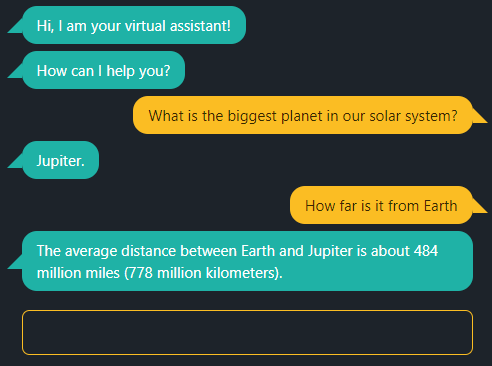

This chat app supports GPT from OpenAI or your own local LLM.

To use GPT from OpenAI, set the environment variable OPENAI_API_KEY to your API key.

To use llama.cpp locally as the inference engine, first start its server with a quantized model such as Phi-3 Mini, e.g.:

/path/to/llama.cpp/server -m Phi-3-mini-4k-instruct-q4.gguf

Before launching the demo, set the environment variable OPENAI_API_BASE:

export OPENAI_API_BASE=http://127.0.0.1:8080

With Node.js >= v18:

npm install

npm start

and open localhost:5000 with a web browser.
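
To illustrate how the two environment variables above might work together, here is a minimal sketch of a request builder for an OpenAI-style chat endpoint. It is not taken from this project's source: the function names `resolveBaseUrl` and `buildChatRequest`, the `model` argument, the fallback base URL, and the `/v1/chat/completions` path appended to the base are all assumptions made for illustration.

```javascript
// Hypothetical sketch; names and the endpoint path are assumptions,
// not read from this project's code.
const DEFAULT_BASE = "https://api.openai.com";

// Prefer OPENAI_API_BASE (e.g. a local llama.cpp server); otherwise
// fall back to the hosted OpenAI API. Trailing slashes are trimmed.
function resolveBaseUrl(env) {
  return (env.OPENAI_API_BASE || DEFAULT_BASE).replace(/\/+$/, "");
}

// Build the URL and fetch options for a single-turn chat request.
function buildChatRequest(env, userText, model) {
  const headers = { "Content-Type": "application/json" };
  // Only send a bearer token when a key is configured.
  if (env.OPENAI_API_KEY) {
    headers.Authorization = `Bearer ${env.OPENAI_API_KEY}`;
  }
  return {
    url: `${resolveBaseUrl(env)}/v1/chat/completions`,
    options: {
      method: "POST",
      headers,
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: userText }],
      }),
    },
  };
}
```

With Node.js >= v18, where `fetch` is available globally, the result could be passed along as `fetch(req.url, req.options)`, with `req = buildChatRequest(process.env, "Hello", "some-model")`. Keeping the builder free of network calls makes it easy to check which backend a given environment would select.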