I regularly use a thesaurus, both while writing copy for documentation and READMEs, and while writing code for naming variables and functions.

I used to use online thesauri, especially thesaurus.com, but I hated the experience. Although the results are useful and very well-organized, they are not keyboard-friendly and they are slow to navigate, especially when the results extend to many pages.

So I made my own thesaurus. I use it far more often than I ever used thesaurus.com. It has fewer features, but it is much quicker to access and less disruptive of the creative process.

In a terminal window, type th followed by a word. For example, to look up the word attention:

|

|---|

Invoking th for attention |

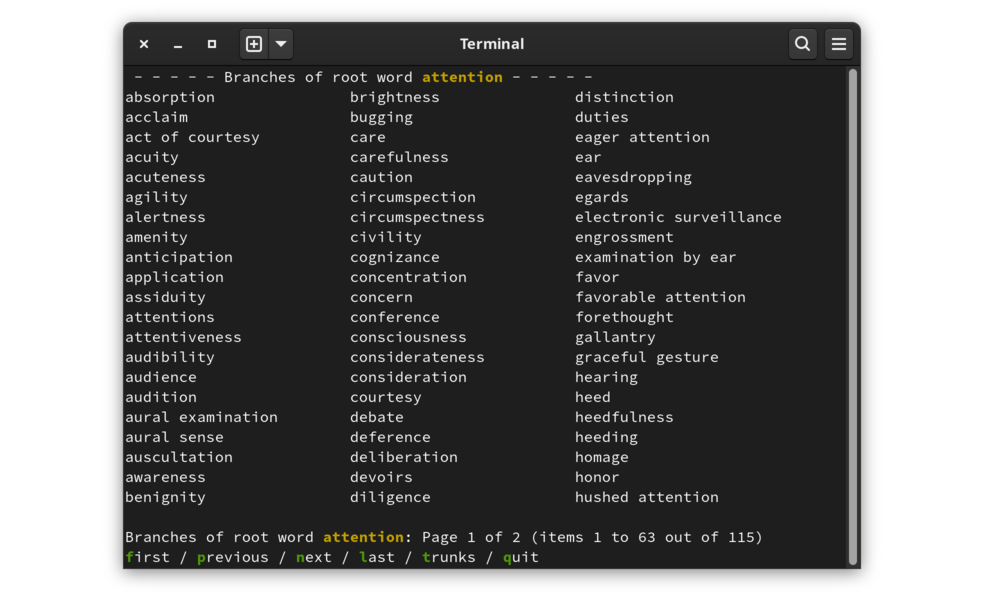

The output is a list of related words and phrases, organized into columns, with context lines above and below, and a list of navigation options at the bottom.

(Figure: Search result for attention)
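The columnar layout described above can be sketched as follows. This is not the code in columnize.c. It is a minimal illustration that assumes a fixed terminal width and column-major order (words run down each column, then across). The names column_count, row_count, word_at and print_columns are hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: how many cells of (longest + gap) characters
 * fit across the screen. Always returns at least 1. */
size_t column_count(size_t screen_width, size_t longest, size_t gap)
{
    size_t cell = longest + gap;
    if (cell == 0 || cell > screen_width)
        return 1;
    return screen_width / cell;
}

/* Rows needed to hold `count` words in `columns` columns (rounded up). */
size_t row_count(size_t count, size_t columns)
{
    return (count + columns - 1) / columns;
}

/* Column-major layout: words run down each column, then across.
 * Returns the index of the word at (row, col), or -1 for an empty cell. */
long word_at(size_t row, size_t col, size_t rows, size_t count)
{
    size_t index = col * rows + row;
    return index < count ? (long)index : -1;
}

/* Print `words` in as many left-aligned columns as fit in screen_width. */
void print_columns(const char **words, size_t count, size_t screen_width)
{
    size_t longest = 0;
    for (size_t i = 0; i < count; ++i) {
        size_t len = strlen(words[i]);
        if (len > longest)
            longest = len;
    }

    size_t columns = column_count(screen_width, longest, 2);
    size_t rows = row_count(count, columns);

    for (size_t r = 0; r < rows; ++r) {
        for (size_t c = 0; c < columns; ++c) {
            long index = word_at(r, c, rows, count);
            if (index >= 0)
                printf("%-*s", (int)(longest + 2), words[index]);
        }
        putchar('\n');
    }
}
```

Column-major order keeps an alphabetic list readable from top to bottom. A real implementation would also query the actual terminal width and measure display width rather than bytes, since strlen counts bytes in multibyte text.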

The bottom line of the results display shows the list of available actions. Initiate an action by typing the first letter of the action (highlighted on screen for emphasis).
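A first-letter menu like this reduces to a table lookup. The sketch below is hypothetical: the struct action type, the print_menu and match_action functions, and any labels used with them are illustrations, not the interface of prompter.c or the actual th menu.

```c
#include <ctype.h>
#include <stdio.h>

/* Hypothetical menu entry: the first letter of label is its hotkey. */
struct action {
    const char *label;  /* text shown in the menu */
    int code;           /* value handed back to the caller */
};

/* Show the menu on one line, bracketing each hotkey letter. */
void print_menu(const struct action *actions, size_t count)
{
    for (size_t i = 0; i < count; ++i) {
        if (actions[i].label[0] == '\0')
            continue;  /* skip empty labels: they have no hotkey */
        printf("[%c]%s ", actions[i].label[0], actions[i].label + 1);
    }
    putchar('\n');
}

/* Return the code of the action whose label starts with `key`
 * (case-insensitive), or -1 if no action matches. */
int match_action(const struct action *actions, size_t count, int key)
{
    for (size_t i = 0; i < count; ++i) {
        const char *label = actions[i].label;
        if (label[0] != '\0' &&
            tolower((unsigned char)label[0]) == tolower(key))
            return actions[i].code;
    }
    return -1;
}
```

A keypress read without echo would be passed to match_action; a result of -1 means the key is not on the menu and can simply be ignored.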

The data is organized as thesaurus entries, each with a collection of related words and phrases. An entry is a trunk and the related words and phrases are the branches.

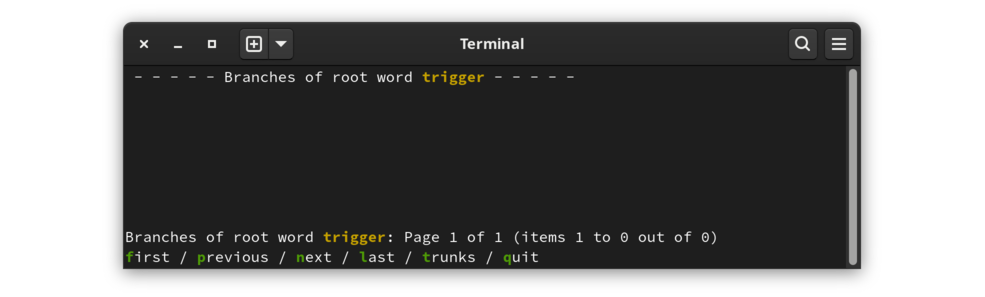

The default view is in branches mode. The words displayed are the words and phrases listed after the entry in the source thesaurus. Switching to trunks mode will show the entries that contain the word. This is most clearly demonstrated by an example without any branches:

|

|---|

| trigger is a word without an entry |

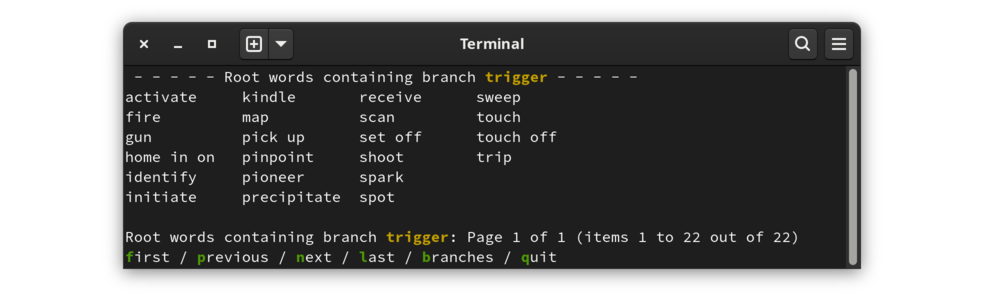

There is no thesaurus entry for the word trigger. The word trigger is in the thesaurus, however, as branches of other entries. Switch to trunks mode to see the entries that include trigger:

(Figure: List of words for which trigger is a related word)
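The trunk/branch relationship and the two display modes can be modeled in memory as shown below. This is only a sketch: th keeps its data in Berkeley DB B-tree databases, and the struct entry type and the find_entry and find_trunks functions are hypothetical. The linear scan in find_trunks is for clarity; a real program would more likely keep a second index keyed by branch word.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical in-memory model of one thesaurus entry:
 * the entry word (trunk) and its related words (branches). */
struct entry {
    const char *trunk;
    const char **branches;
    size_t branch_count;
};

/* Branches mode: find the entry whose trunk is `word`, or NULL if none. */
const struct entry *find_entry(const struct entry *entries, size_t count,
                               const char *word)
{
    for (size_t i = 0; i < count; ++i)
        if (strcmp(entries[i].trunk, word) == 0)
            return &entries[i];
    return NULL;
}

/* Trunks mode: store up to `max` trunks whose branches include `word`
 * in `out`. Returns the total number of matching trunks (may exceed max). */
size_t find_trunks(const struct entry *entries, size_t count,
                   const char *word, const char **out, size_t max)
{
    size_t found = 0;
    for (size_t i = 0; i < count; ++i) {
        for (size_t j = 0; j < entries[i].branch_count; ++j) {
            if (strcmp(entries[i].branches[j], word) == 0) {
                if (found < max)
                    out[found] = entries[i].trunk;
                ++found;
                break;  /* count each trunk only once */
            }
        }
    }
    return found;
}
```

For a word like trigger, find_entry returns NULL because there is no entry, while find_trunks still reports every entry that lists it as a branch.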

This program is not available as a package; the source code must be downloaded and the project built. The following steps will produce a working thesaurus:

git clone https://www.github.com/cjungmann/th.git

cd th

make

make thesaurus.db

Play with the program to see if you like it. If you want to install it to be available outside of the build directory, invoke the following command:

sudo make install

It is easy to remove the program if you decide you don't need it.

If you've installed the program, first uninstall with

sudo make uninstall

This will remove the program, the support files, and the directory in which the support files were installed.

If the program is not installed, you can safely just delete the cloned directory.

The following material will mainly interest developers, if anyone.

The project is easy to build, but depends on other software. The following is a list of dependencies, of which only the first (the Berkeley Database) may require some intervention. The last two items below (readargs and c_patterns) are downloaded into a subdirectory under the build directory, and the code found there is statically linked into the executable, so they won't affect your environment.

db version 5 (Berkeley Database) is necessary for the B-Tree databases in the project. If you're using git, you should already have this, even on FreeBSD which otherwise only includes an older version of db. Make will immediately terminate with a message if it can't find an appropriate db, in which case it's up to you to use your package manager to install db or build it from sources.

git is used to download some dependencies. While project dependencies can be directly downloaded without git, doing so requires undocumented knowledge about the source files, a problem that is avoided when make can use git to download the dependencies.

readargs is one of my projects that processes command line arguments. While this project is still used by th, it is no longer necessary to install this library for th to work. The th Makefile now downloads the readargs project into a subdirectory, builds it and uses the static library instead.

c_patterns is another of my projects, an experiment in managing reusable code without needing a library. The Makefile uses git to download the project, then makes links to some c_patterns modules in the src directory to be included in the th build.

This project, while useful (to me, at least), is also an experiment.

One of my goals here is to improve my makefile-writing skills. Some of the build and install decisions I have made may not be best practices, or may even be frowned upon by more experienced developers.

If you are worried about what will happen to your system if you install th, I hope the following will inform your decision.

As expected, make will compile the th application. Unconventionally, perhaps, make performs other tasks that may take some time:

Download my repository of C modules (c_patterns) and use several of them by making links into the src directory.

Instead of using configure to check dependencies, the makefile checks for them itself and, if any are missing, immediately terminates with a helpful message.

Download and import the public-domain Moby Thesaurus from Project Gutenberg. This populates the application's word database.

Abandoned: Download and import a word count database. The idea was to offer alternate sorting orders to make it easier to find a word in a longer list. This is not working right now, and I'm not sure I'll come back to it, because I'm finding that the benefit of reading an alphabetic list far outweighs the dubious benefit of trying to put more commonly used words first. It's much easier to keep track of the words under consideration when they are not scattered randomly through a long list.

I just noticed that there is a Moby Part of Speech list resource that could help organize the output. It's intriguing, but I'm not sure it will be helpful, based on how much alphabetical sorting helps with using the output. We'll see.

I had several objectives when I started this project.

I wanted more experience with the Berkeley Database. This key-store database underpins many other applications, including git and sqlite.

I wanted to practice using some of my c_patterns project modules. Using these modules in a real project helps me understand their design flaws and missing features. I use

columnize.c to generate the columnar output,

prompter.c for the minimal option menu at the bottom of the output,

get_keypress.c for non-echoed keypresses, mostly used by prompter.c.

I wanted to practice designing a build process that works in both GNU/Linux and BSD. This includes identifying missing modules (especially db, for which BSD includes a too-old library) and redesigning conditional processing.

I'm not targeting Windows because it varies more significantly from Linux than BSD, and I don't expect that many Windows users would be comfortable dropping to a command line application.
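Reading a non-echoed keypress, as get_keypress.c does, is usually done on POSIX systems by temporarily turning off canonical mode and echo through termios. The sketch below shows that general technique; it is not the module's actual code, and disable_echo_and_canon and read_keypress are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <termios.h>
#include <unistd.h>

/* Turn off line buffering (ICANON) and echo (ECHO) in a copy of the
 * terminal settings; ISIG is left alone so Ctrl-C still works. */
void disable_echo_and_canon(struct termios *t)
{
    t->c_lflag &= ~(tcflag_t)(ICANON | ECHO);
    t->c_cc[VMIN] = 1;   /* read() returns after a single byte */
    t->c_cc[VTIME] = 0;  /* no inter-byte timeout */
}

/* Read one keypress without echo. Returns the byte, or -1 on error
 * (for example, when stdin is not a terminal). */
int read_keypress(void)
{
    struct termios saved, raw;
    if (tcgetattr(STDIN_FILENO, &saved) == -1)
        return -1;

    raw = saved;
    disable_echo_and_canon(&raw);
    if (tcsetattr(STDIN_FILENO, TCSANOW, &raw) == -1)
        return -1;

    unsigned char c;
    ssize_t n = read(STDIN_FILENO, &c, 1);

    tcsetattr(STDIN_FILENO, TCSANOW, &saved);  /* always restore settings */
    return n == 1 ? c : -1;
}
```

Because ISIG stays set, Ctrl-C interrupts the program before the saved settings are restored; a more careful version would also restore them from a signal handler or an atexit hook.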

The Berkeley Database (bdb) seems like an interesting database product. Its low-level C-library approach seems similar to the FairCom DB engine I used back in the late 1990s.

The Berkeley Database is appealing because it is part of Linux and BSD distributions and has a small footprint. It rewards detailed planning of the data, and it is an excuse to explore some of my C language ideas.

This project is a restart of my words project, which is meant to be a command-line thesaurus and dictionary. That project was my first use of bdb, so some of my work there is a little clumsy. I want to design the bdb code again from scratch. I will be copying some of the text parsing code from the words project that will be applicable here.

Using the large datasets of the thesaurus and dictionary, I also want to test the performance differences between the Queue and Recno data access methods. I expect Queue to be faster because the beginning and end of a fixed-length record can be calculated from its record number, while accessing a variable-length record by record number requires looking up its location in the file. I'd like to measure the performance difference to weigh that advantage against the storage efficiency of variable-length records.
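The reasoning above can be illustrated with plain offset arithmetic. This does not model how Berkeley DB actually lays out Queue or Recno pages; the functions fixed_offset, build_offsets, variable_offset and padding_waste are hypothetical and only show why a fixed-length lookup needs no index, while variable-length storage avoids padding.

```c
#include <stddef.h>

/* Fixed-length records: the offset is pure arithmetic.
 * Record numbers start at 1, as in Berkeley DB's Queue and Recno. */
size_t fixed_offset(size_t recno, size_t record_len)
{
    return (recno - 1) * record_len;
}

/* Variable-length records: an offset table must be built first
 * (offsets[i] is where record i + 1 begins). */
void build_offsets(const size_t *lengths, size_t count, size_t *offsets)
{
    size_t pos = 0;
    for (size_t i = 0; i < count; ++i) {
        offsets[i] = pos;
        pos += lengths[i];
    }
}

/* ...and every lookup goes through that table. */
size_t variable_offset(const size_t *offsets, size_t recno)
{
    return offsets[recno - 1];
}

/* Bytes wasted by padding every record to record_len.
 * Assumes each lengths[i] <= record_len. */
size_t padding_waste(const size_t *lengths, size_t count, size_t record_len)
{
    size_t waste = 0;
    for (size_t i = 0; i < count; ++i)
        waste += record_len - lengths[i];
    return waste;
}
```

The trade-off shows up directly: fixed_offset needs no stored table, while padding_waste grows with the gap between the longest record and the typical one.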

There are two public-domain sources of thesauri:

I am using the Moby thesaurus because its organization is much simpler and thus easier to parse. The problem is that the synonyms are numerous and, lacking organization, much harder to scan when searching for an appropriate synonym.
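Parsing a Moby line is straightforward if, as assumed here, each line holds the entry word followed by its related words, all separated by commas. The split_entry function below is a hypothetical sketch, not the project's import code.

```c
#include <stddef.h>
#include <string.h>

/* Split one line in place at commas. Stores up to max_fields pointers
 * in `fields` (fields[0] is the trunk, the rest are branches) and
 * returns how many were stored. Trailing CR/LF characters are removed. */
size_t split_entry(char *line, char **fields, size_t max_fields)
{
    size_t len = strlen(line);
    while (len > 0 && (line[len - 1] == '\n' || line[len - 1] == '\r'))
        line[--len] = '\0';
    if (len == 0)
        return 0;

    size_t count = 0;
    char *p = line;
    while (p != NULL && count < max_fields) {
        char *comma = strchr(p, ',');
        if (comma != NULL)
            *comma++ = '\0';  /* terminate this field, step past comma */
        fields[count++] = p;
        p = comma;            /* NULL after the last field */
    }
    return count;
}
```

For example, splitting the made-up line `attention,heed,notice,regard` yields the trunk attention and three branches. strtok is avoided because it silently skips empty fields and keeps hidden state between calls.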

With hundreds of synonyms for many words, it is very difficult to scan the list to find an appropriate word. I will try to impose some order on the list to make it easier to use. After using the tool for some time, I have concluded that alphabetic order is best. It's much easier to return to a word in an alphabetic list. I have removed the option to select other word orders.
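Sorting a list of branches alphabetically takes one call to the standard qsort with a string comparator. This is a generic sketch rather than the code in th; sort_branches is a hypothetical name.

```c
#include <stdlib.h>
#include <string.h>

/* qsort comparator: each element is a `const char *`, so qsort hands
 * us pointers to those pointers. */
static int compare_words(const void *a, const void *b)
{
    const char *const *wa = a;
    const char *const *wb = b;
    return strcmp(*wa, *wb);
}

/* Sort an array of words into alphabetic (byte-wise) order in place. */
void sort_branches(const char **words, size_t count)
{
    qsort(words, count, sizeof *words, compare_words);
}
```

strcmp compares byte values, so capitalized words sort before lowercase ones; POSIX strcasecmp or the locale-aware strcoll are alternatives if that matters.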

The easiest classification to use is word usage frequency. I plan to list the words from the greatest to the least frequency of use. Presumably, more popular words may be the best choices, while less popular words may be obsolete.

There are several sources of word frequencies. The one I'm using is based on Google ngrams:

Natural Language Corpus Data: Beautiful Data

I haven't really studied the Norvig source, so it's possible that it has a lot of nonsense. There is another source that may have a more sanitized list, hackerb9/gwordlist. If Norvig is a problem, I want to remember this alternate list with which I may replace it.

This part is no longer attempted. Interpreting the source data is complicated by the need to recognize the dictionary's unique notation and convert it to Unicode characters. I solved many of these problems, but many still remain. The Makefile still includes instructions for downloading this information, and the repository retains some conversion scripts in case I want to come back to this.

Grouping synonyms by part of speech (e.g., noun, verb, adjective) has potential to be useful as well. The first problem is identifying the part of speech represented by each word. The second problem is presentation: it would be better, but harder to program, to have an interface that has the user choose the part of speech before displaying the words.

Electronic, public-domain dictionaries

My first attempt is to use the GNU Collaborative International Dictionary of English (GCIDE). It is based on an old (1914) version of Webster's, with some words added by more modern editors.