KoGPT2 chatbot

1.0.0

A simple chatbot experiment using publicly available Korean chatbot data and the pre-trained KoGPT2 model.

We explore the potential for various uses of KoGPT2 and qualitatively evaluate its performance.

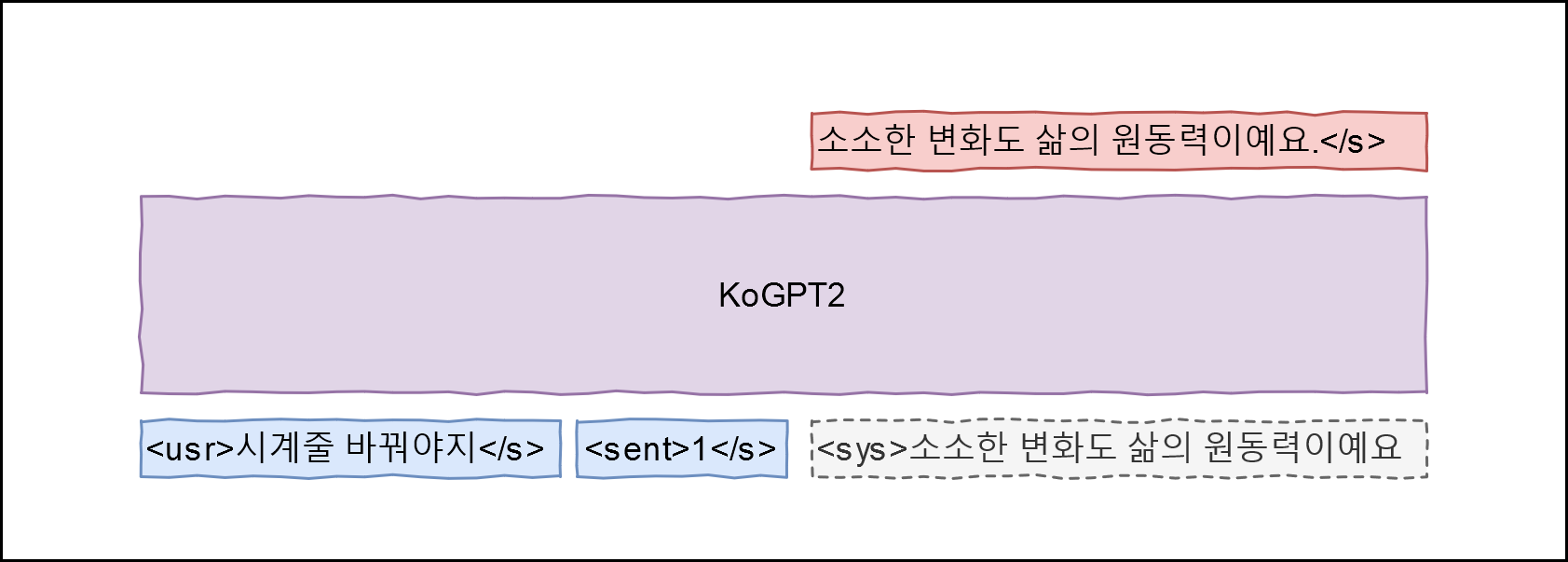

To suit the training data, we adapted Hello! GPT-2 and designed the following architecture.

The model is trained to maximize `P(<sys>|<usr>, <sent>)`, mapping the Q field of the data to the `<usr>` utterance, the A field to the `<sys>` utterance, and the emotion label to `<sent>`. Emotion labels follow the dataset's definitions (0: everyday occurrence, 1: breakup (negative), 2: love (positive)).
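The mapping above can be sketched as a small serialization step. This is a minimal illustration, not the repository's actual preprocessing: the `build_example` helper, the exact marker strings, and the `</s>` end marker are assumptions made for the example.

```python
from typing import Tuple

# Emotion labels as listed in the text above.
SENTIMENTS = {0: "everyday occurrence", 1: "breakup (negative)", 2: "love (positive)"}

# Marker strings are assumptions for illustration; the real vocabulary may differ.
USR, SENT, SYS, EOS = "<usr>", "<sent>", "<sys>", "</s>"


def build_example(q: str, a: str, label: int) -> Tuple[str, str]:
    """Serialize one data row into (context, target).

    The context carries the Q field and the emotion label; the target is
    the A field, i.e. the part whose probability the model should maximize.
    """
    if label not in SENTIMENTS:
        raise ValueError(f"unknown emotion label: {label}")
    context = f"{USR}{q}{SENT}{label}{SYS}"
    target = f"{a}{EOS}"
    return context, target


context, target = build_example("It's raining", "Take an umbrella.", 0)
print(context + target)
```

Under this sketch, a training loss would typically be computed only over the target part, which corresponds to conditioning on `<usr>` and `<sent>` rather than modeling them.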

    git clone --recurse-submodules https://github.com/haven-jeon/KoGPT2-chatbot.git
    cd KoGPT2-chatbot
    pip3 install -r requirements.txt

    CUDA_VISIBLE_DEVICES=0 python train_torch.py --gpus 1 --train --max_epochs 2

    # CUDA_VISIBLE_DEVICES=0 python train.py --chat
    CUDA_VISIBLE_DEVICES=0 python train_torch.py --gpus 1 --chat
    user > jackpot
    Simsimi > It will be fine.
    user > What is your name?
    Simsimi > I am Wirobot.
    user > What do we do on Parents' Day?
    Simsimi > Play with me.
    user > It's raining
    Simsimi > Take an umbrella.
    user > It's snowing
    Simsimi > Build a snowman.
    user > Thunder strikes
    Simsimi > I miss you too.
    user > Spring has come.
    Simsimi > I'm always excited about spring.
    user > I have an upset stomach.
    Simsimi > Go home and rest quickly.
    user > Who made you?
    Simsimi > You are the person who made you.
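In chat mode, a reply is presumably produced token by token, conditioned on the user utterance and the emotion label. The sketch below assumes greedy decoding and uses a hypothetical `next_token` callable as a stand-in for the trained model; neither the function names nor the decoding strategy are taken from the repository.

```python
from typing import Callable, List


def greedy_reply(
    prompt: List[str],
    next_token: Callable[[List[str]], str],
    eos: str = "</s>",
    max_len: int = 32,
) -> List[str]:
    """Append the model's next token until the end marker or max_len."""
    reply: List[str] = []
    for _ in range(max_len):
        token = next_token(prompt + reply)
        if token == eos:
            break
        reply.append(token)
    return reply


# Toy stand-in for a trained model: replays a canned answer (illustration only).
CANNED = ["Take", "an", "umbrella.", "</s>"]


def toy_next_token(tokens: List[str]) -> str:
    # Count how many reply tokens already follow the <sys> marker.
    generated = len(tokens) - tokens.index("<sys>") - 1
    return CANNED[generated]


prompt = ["<usr>", "It's", "raining", "<sent>", "0", "<sys>"]
print(" ".join(greedy_reply(prompt, toy_next_token)))
```

Swapping `toy_next_token` for a function that runs the real model and returns its most likely next token would give the same loop structure; sampling strategies other than greedy decoding are equally possible.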

PyTorch