This is a Keras implementation of sentence classification using convolutional neural networks (CNNs).

The dataset for this task was obtained from the Natural Language Understanding benchmark project.

The training text falls into six categories: AddToPlaylist, BookRestaurant, GetWeather, RateBook, SearchCreativeWork, and SearchScreeningEvent, each with nearly 2000 sentences.
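As a small illustration of how these six intent names can be turned into the integer class ids a classifier expects, here is a minimal sketch. The names `INTENTS`, `label_to_id`, `id_to_label`, and `encode_labels` are hypothetical helpers for this example, and the ordering of the classes is an assumption; the notebook may encode labels differently.

```python
# The six intent categories listed above; the order here is an assumption.
INTENTS = [
    "AddToPlaylist",
    "BookRestaurant",
    "GetWeather",
    "RateBook",
    "SearchCreativeWork",
    "SearchScreeningEvent",
]

# Forward and reverse lookups between intent names and integer class ids.
label_to_id = {name: idx for idx, name in enumerate(INTENTS)}
id_to_label = {idx: name for name, idx in label_to_id.items()}


def encode_labels(labels):
    """Map a list of intent names to their integer class ids."""
    return [label_to_id[label] for label in labels]
```

Integer ids like these pair naturally with a sparse categorical cross-entropy loss, and `id_to_label` converts a predicted class index back into an intent name.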

To prepare the dataset, open a terminal in the main project directory and type:

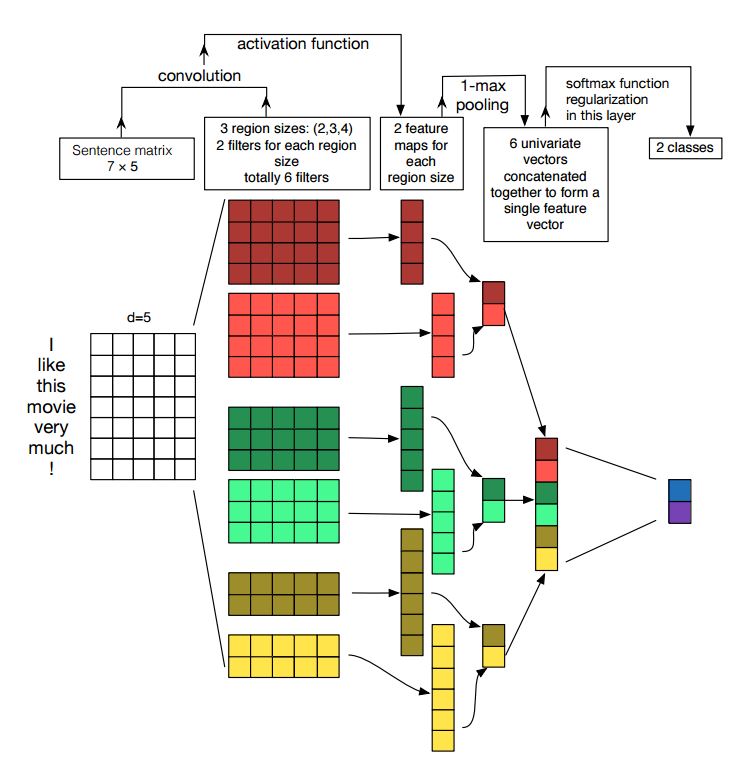

$ python prepare_data.pyCheck Intent_Classification_Keras_Glove.ipynb for the model building and training part. Below is the model overview.
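The notebook remains the reference for the actual model. As a rough sketch of what a TextCNN of this kind can look like in Keras, the function below builds parallel convolutions of several widths over word embeddings, applies max-over-time pooling, and feeds the result to a softmax layer. The function name `build_text_cnn`, the kernel sizes, filter count, and dropout rate are all assumptions made for illustration, not values taken from the notebook. The optional `embedding_matrix` is assumed to be built elsewhere, for example from GloVe vectors.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_text_cnn(vocab_size, embed_dim, max_len, num_classes,
                   embedding_matrix=None, kernel_sizes=(3, 4, 5),
                   num_filters=128, dropout=0.5):
    """Build a small TextCNN; hyperparameter defaults are illustrative only."""
    inputs = keras.Input(shape=(max_len,), dtype="int32")

    # Use pre-trained vectors (frozen) if given, otherwise learn embeddings.
    if embedding_matrix is not None:
        initializer = keras.initializers.Constant(embedding_matrix)
        trainable = False
    else:
        initializer = "uniform"
        trainable = True
    embedded = layers.Embedding(
        vocab_size, embed_dim,
        embeddings_initializer=initializer,
        trainable=trainable,
    )(inputs)

    # One convolution branch per kernel width, each max-pooled over time.
    pooled = []
    for size in kernel_sizes:
        conv = layers.Conv1D(num_filters, size, activation="relu")(embedded)
        pooled.append(layers.GlobalMaxPooling1D()(conv))

    features = layers.Concatenate()(pooled)
    features = layers.Dropout(dropout)(features)
    outputs = layers.Dense(num_classes, activation="softmax")(features)

    model = keras.Model(inputs, outputs)
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

With padded integer sequences `x_train` and integer labels `y_train` (both hypothetical names), training would be a call such as `model.fit(x_train, y_train, epochs=3, validation_split=0.1)`.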

Although RNNs such as LSTM and GRU are widely used for language modelling tasks, CNNs have proven considerably faster to train, owing to parallelization during training, and can give better results than LSTM-based models. Here is a brief comparison between different methods for sentence classification; as can be seen, TextCNN gives the best result of all and also trains faster. I was able to achieve 99% accuracy on the training and validation sets within a minute, after 3 epochs, when training on a regular i7 CPU.
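To make the parallelization point concrete, here is a minimal NumPy sketch of the core TextCNN operation: a 1D convolution over a sentence followed by ReLU and max-over-time pooling. Every window of words is independent of the others, so all windows and all filters are evaluated in one batched operation, whereas a recurrent network has to process tokens one after another. The function name `conv_max_over_time` and the array shapes are assumptions for illustration; this is not code from the notebook.

```python
import numpy as np


def conv_max_over_time(embedded, filters):
    """Apply 1D convolution filters, ReLU, and max-over-time pooling.

    embedded: (seq_len, embed_dim) word vectors for one sentence.
    filters:  (num_filters, kernel_size, embed_dim) convolution kernels.
    Returns a (num_filters,) feature vector.
    """
    kernel_size, embed_dim = filters.shape[1], filters.shape[2]

    # All sliding windows at once: (seq_len - kernel_size + 1, kernel_size, embed_dim).
    windows = np.lib.stride_tricks.sliding_window_view(
        embedded, (kernel_size, embed_dim)
    )[:, 0]

    # One batched contraction scores every window against every filter.
    feature_maps = np.einsum("wkd,fkd->wf", windows, filters)

    # ReLU, then keep the strongest response of each filter over the sentence.
    return np.maximum(feature_maps, 0.0).max(axis=0)
```

The max over positions is what makes the output size independent of sentence length, so sentences of different lengths map to feature vectors of the same size.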

Intent classification and named entity recognition are the two most important parts of building a goal-oriented chatbot.

There are many open source Python packages for building a chatbot; Rasa is one of them. The cool thing about Rasa is that every part of the stack is fully customizable and easily interchangeable. Although Rasa has excellent built-in support for intent classification, we can also specify our own model for the task; check Processing Pipeline for more information.

Using pre-trained word embeddings in a Keras model

Convolutional Neural Networks for Sentence Classification

A Sensitivity Analysis of (and Practitioners' Guide to) Convolutional Neural Networks for Sentence Classification

An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling