Chinese-ChatBot/Chinese chatbot

- The author has fully moved to GNN (graph neural network) research and C++ development, will no longer follow up on NLP, and has stopped maintaining this project's code. When the original project was completed, there were very few online resources. The author first came into contact with NLP and deep learning on a whim and, after overcoming many difficulties, finally wrote this toy model. Knowing how hard this is for beginners, the author will still respond promptly to issues and emails ([email protected]) to help newcomers to deep learning, even though the project is no longer maintained. (The version of TensorFlow I used is very old, and running the code directly on a newer version will raise various errors. If you run into trouble, do not bother installing the old environment; I recommend reimplementing my processing logic in PyTorch instead. I have not gotten around to writing that port myself.)

- GNN work:

- GNNs-Baseline, a set of benchmark comparison models, has been adapted and organized to make it easy to verify ideas quickly.

- The open-source code for my ACM MM 2023 (CCF-A) paper is available here: LSTGM.

- The open-source code for my ICDM 2023 (CCF-B) paper, GRN, is still being organized.

- Fellow researchers are welcome to get in touch to exchange ideas and learn together.

Environment configuration

| Program | Version |
|---|---|
| python | 3.6.8 |
| tensorflow | 1.13.1 |
| Keras | 2.2.4 |
| windows10 | |
| jupyter | |

Main reference materials

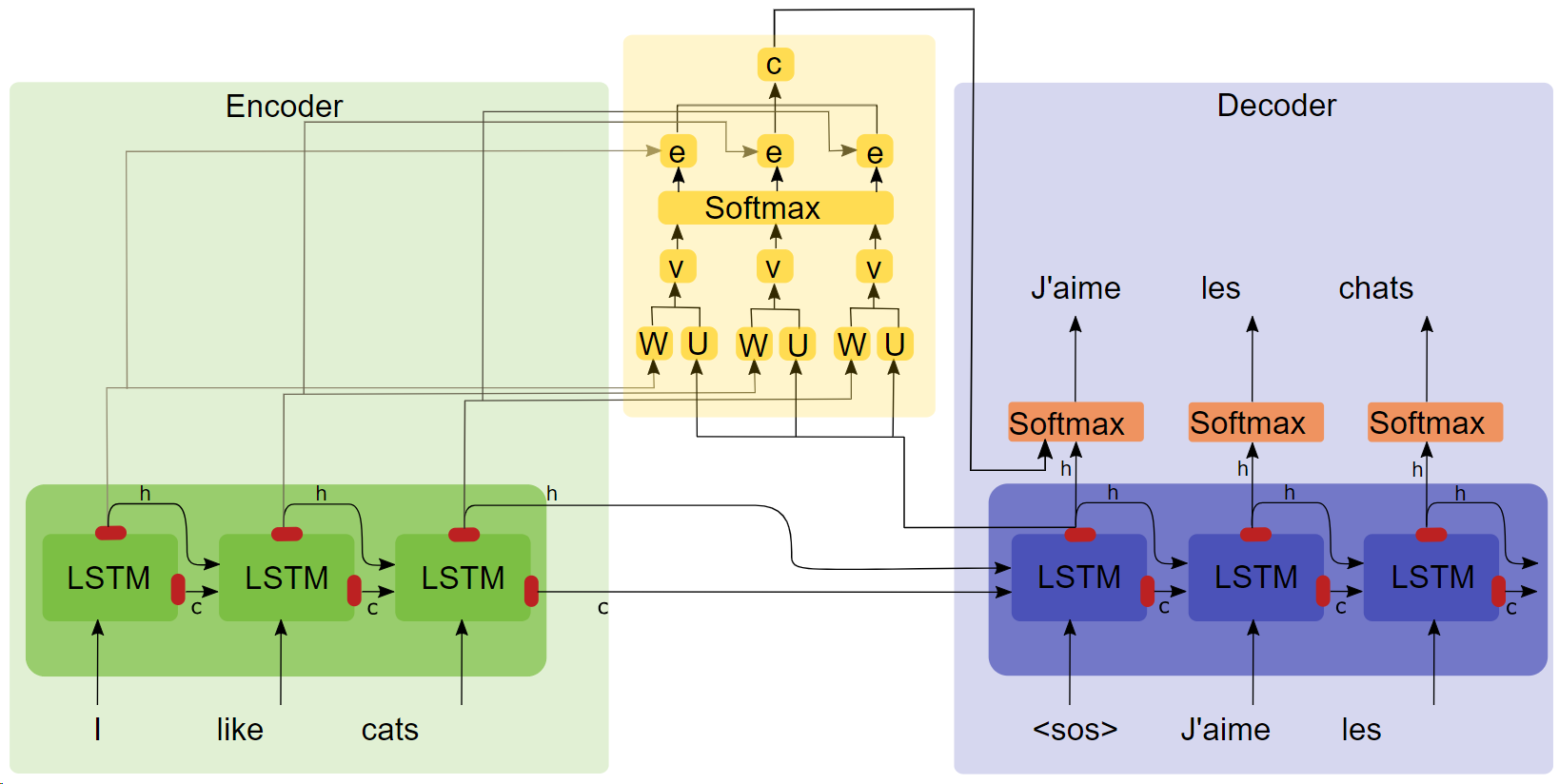

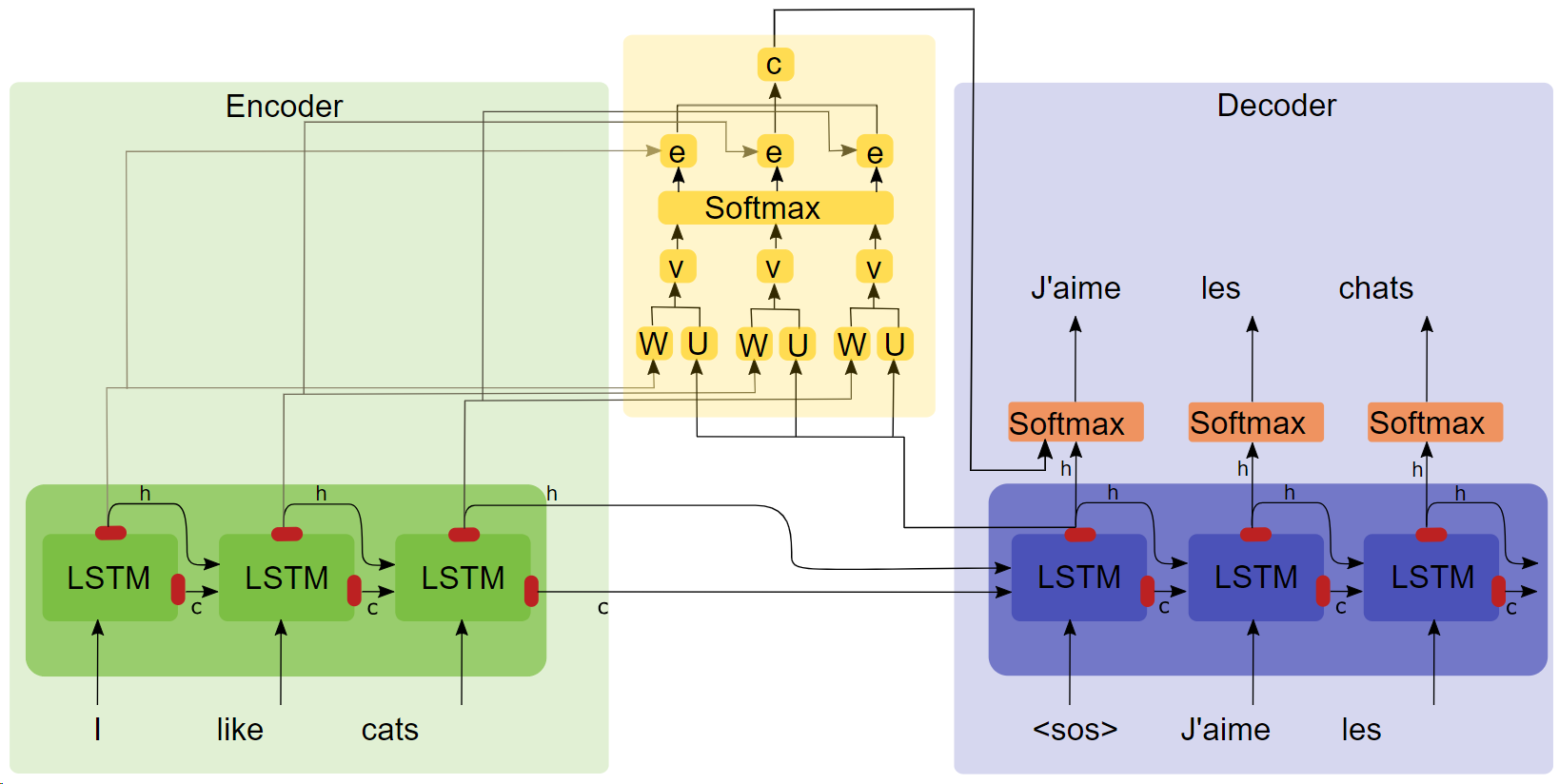

- Paper: "Neural Machine Translation by Jointly Learning to Align and Translate" (click on the title to download)

- Attention structure diagram

Key points

- LSTM

- seq2seq

- Attention: experiments show that adding the attention mechanism makes training faster, speeds up convergence, and gives better results.
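To make the attention idea concrete, here is a minimal sketch of one additive attention step in the style of the referenced paper, written in plain Python rather than the project's Keras code. The function names, the toy weight matrices `W_s`, `W_h`, and the vector `v` are all illustrative assumptions and are not taken from this repository.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(decoder_state, encoder_states, W_s, W_h, v):
    """Return (attention weights, context vector) for one decoder step.

    score_j = v . tanh(W_s @ decoder_state + W_h @ encoder_states[j])
    """
    projected_state = matvec(W_s, decoder_state)
    scores = []
    for h in encoder_states:
        projected_h = matvec(W_h, h)
        hidden = [math.tanh(a + b) for a, b in zip(projected_state, projected_h)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    weights = softmax(scores)
    # The context vector is the attention-weighted sum of the encoder states.
    dim = len(encoder_states[0])
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)
    ]
    return weights, context


# Toy values, chosen only for illustration (hypothetical, not trained weights).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.5, -0.5]
W_s = [[1.0, 0.0], [0.0, 1.0]]
W_h = [[1.0, 0.0], [0.0, 1.0]]
v = [1.0, 1.0]

weights, context = additive_attention(decoder_state, encoder_states, W_s, W_h, v)
print("attention weights:", weights)
print("context vector:", context)
```

The weights sum to 1, so they can be read as how much each input position contributes to the current output step. These are the same quantities that an attention weight visualization plots.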

Corpus and training environment

100,000 dialogue pairs from the Qingyun corpus; training was done in Google Colaboratory.

Run

Method 1: Complete process

- Data preprocessing

get_data

- Model training

chatbot_train (this is the version set up for Google Colab with a mounted drive; the paths need slight changes to run locally)

- Model prediction

chatbot_inference_Attention
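The data preprocessing step in Method 1 can be pictured roughly as follows. This is only a sketch under stated assumptions, not the contents of get_data: it assumes each corpus line has the form `question | answer`, uses character-level tokens, and introduces hypothetical helper names (`parse_pairs`, `build_vocab`, `encode`) and hypothetical special tokens.

```python
# Hypothetical special tokens; the project may use different ones.
PAD, START, END, UNK = "<pad>", "<s>", "</s>", "<unk>"


def parse_pairs(lines, sep="|"):
    """Split raw lines into (question, answer) pairs, skipping malformed lines."""
    pairs = []
    for line in lines:
        parts = line.strip().split(sep)
        if len(parts) != 2:
            continue  # not exactly one separator: treat as malformed
        question, answer = parts[0].strip(), parts[1].strip()
        if question and answer:
            pairs.append((question, answer))
    return pairs


def build_vocab(pairs):
    """Map every character seen in the pairs to an integer id."""
    vocab = {PAD: 0, START: 1, END: 2, UNK: 3}
    for question, answer in pairs:
        for ch in question + answer:
            vocab.setdefault(ch, len(vocab))
    return vocab


def encode(text, vocab, max_len, add_markers=False):
    """Convert text to a fixed-length id list, truncating or padding as needed."""
    tokens = list(text)
    if add_markers:
        tokens = [START] + tokens + [END]
    ids = [vocab.get(t, vocab[UNK]) for t in tokens][:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))


# Made-up sample lines, assumed to follow the "question | answer" layout.
raw_lines = ["你好 | 你好呀", "你是谁 | 我是聊天机器人", "格式错误的一行"]

pairs = parse_pairs(raw_lines)
vocab = build_vocab(pairs)
encoder_input = [encode(q, vocab, max_len=6) for q, _ in pairs]
decoder_input = [encode(a, vocab, max_len=10, add_markers=True) for _, a in pairs]

print(pairs)
print(encoder_input)
print(decoder_input)
```

Note that truncation can cut off the end marker when an answer is longer than `max_len - 2` characters, so `max_len` should be chosen from the corpus length distribution.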

Method 2: Load an existing model

- Run

chatbot_inference_Attention

- Load

models/W--184-0.5949-.h5

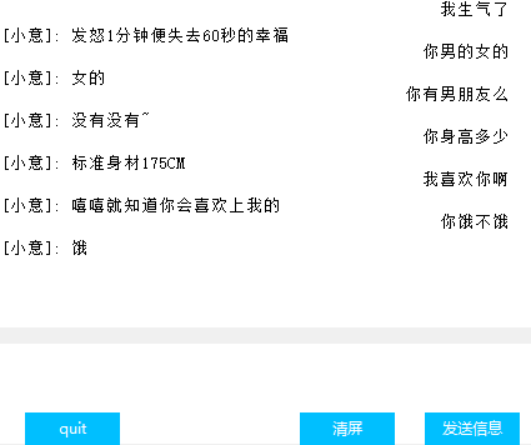

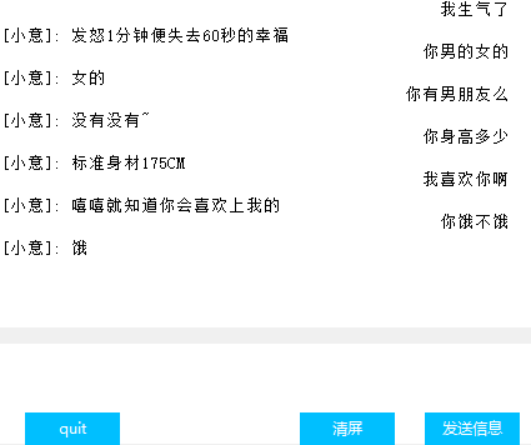

Interface (Tkinter)

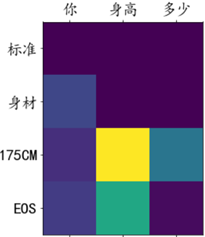

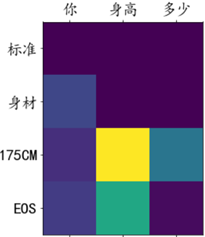

Attention weight visualization

Other

- In the training file chat_bot, the first two of the last three code blocks mount Google Drive, and the last one collects the loss values for plotting. I don't know why the TensorBoard callback did not work, so I came up with this workaround.

- In the prediction file, the second-to-last code block takes text input only and has no interface, while the last code block is the interface. Run either one as needed.

- The code prints a lot of intermediate output, which I hope helps you understand it.

- The models directory contains a model I have already trained, which should run without problems. You can also train your own.

- The author's ability is limited, and no metric was found to quantify dialogue quality, so the loss can only roughly reflect training progress.
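The workaround in the first note, collecting loss values for plotting without TensorBoard, can be sketched like this. The class below is a hypothetical, framework-free stand-in whose `on_epoch_end(epoch, logs)` method mirrors the hook of the same name on Keras callbacks. To use it during real training it would need to subclass `keras.callbacks.Callback`. The loss values in the loop are made up for illustration and are not results from this project.

```python
import csv
import io


class LossRecorder:
    """Collect per-epoch losses so they can be saved and plotted later."""

    def __init__(self):
        self.rows = []

    def on_epoch_end(self, epoch, logs=None):
        # Keras passes a dict of metrics here; missing keys are stored as None.
        logs = logs or {}
        self.rows.append((epoch, logs.get("loss"), logs.get("val_loss")))

    def write_csv(self, handle):
        """Write the recorded losses to an open text file handle."""
        writer = csv.writer(handle)
        writer.writerow(["epoch", "loss", "val_loss"])
        writer.writerows(self.rows)


# Simulate three epochs with made-up loss values.
recorder = LossRecorder()
for epoch, loss in enumerate([2.31, 1.47, 0.98]):
    recorder.on_epoch_end(epoch, {"loss": loss})

buffer = io.StringIO()  # stands in for a file opened on the mounted drive
recorder.write_csv(buffer)
print(buffer.getvalue())
```

In Keras, the object returned by `model.fit` also exposes the same numbers through `history.history["loss"]`, which may be enough when training runs to completion in a single session.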