The Food Analyzer app is a personalized GenAI nutrition web app for your grocery shopping and cooking recipes, built on a serverless architecture with generative AI capabilities. It was first created as the winning entry of the AWS Hackathon France 2024 and then presented as a booth exhibit at the AWS Summit Paris 2024.

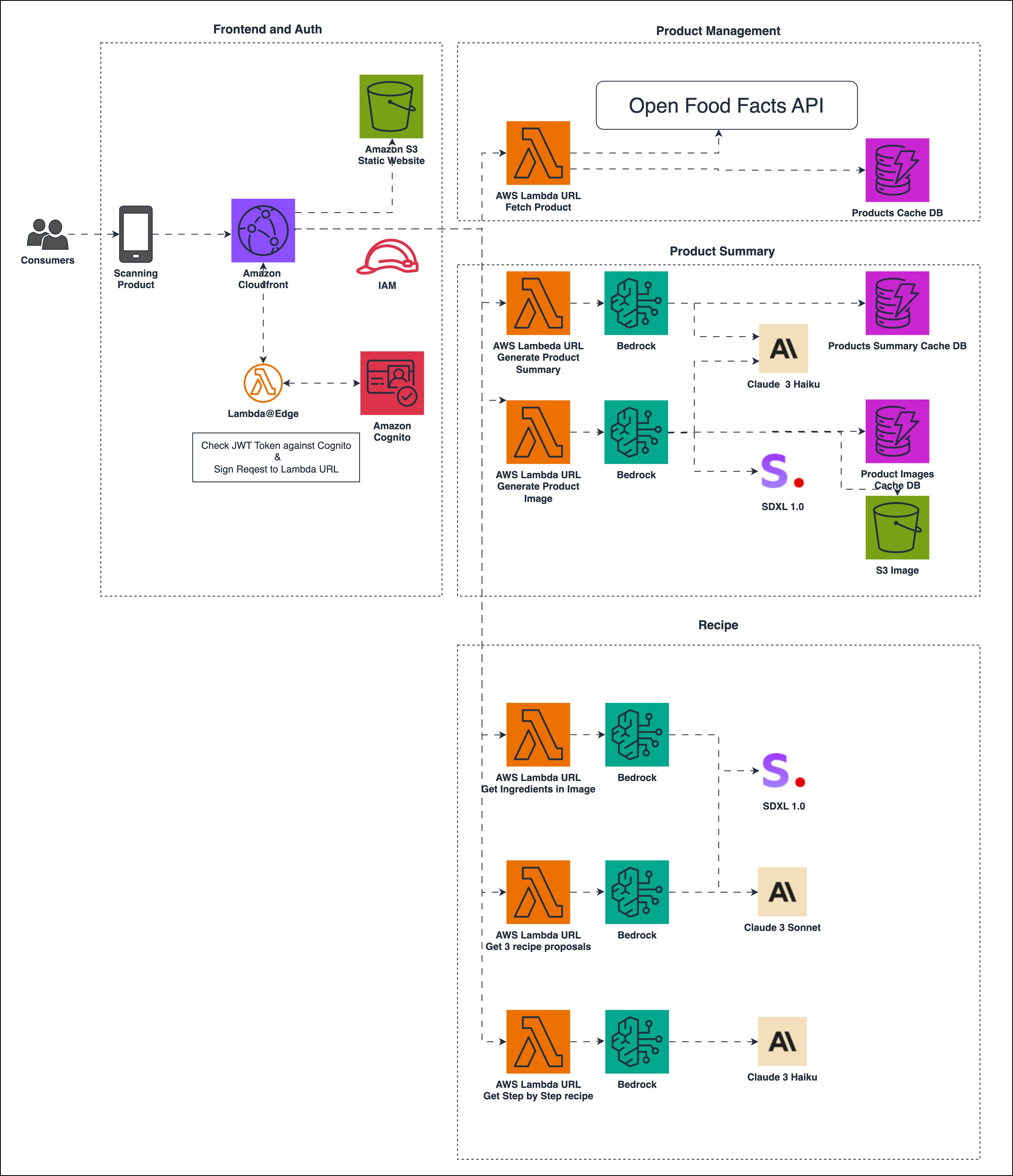

The back end of the app is built with AWS services such as AWS Lambda, Amazon Bedrock, Amazon Cognito, and Amazon CloudFront with Lambda@Edge.

The app is designed to require minimal code and to be extensible, scalable, and cost-efficient. It uses lazy loading to reduce cost and ensure the best user experience.

We developed this exhibit to create an interactive serverless application using generative AI services.

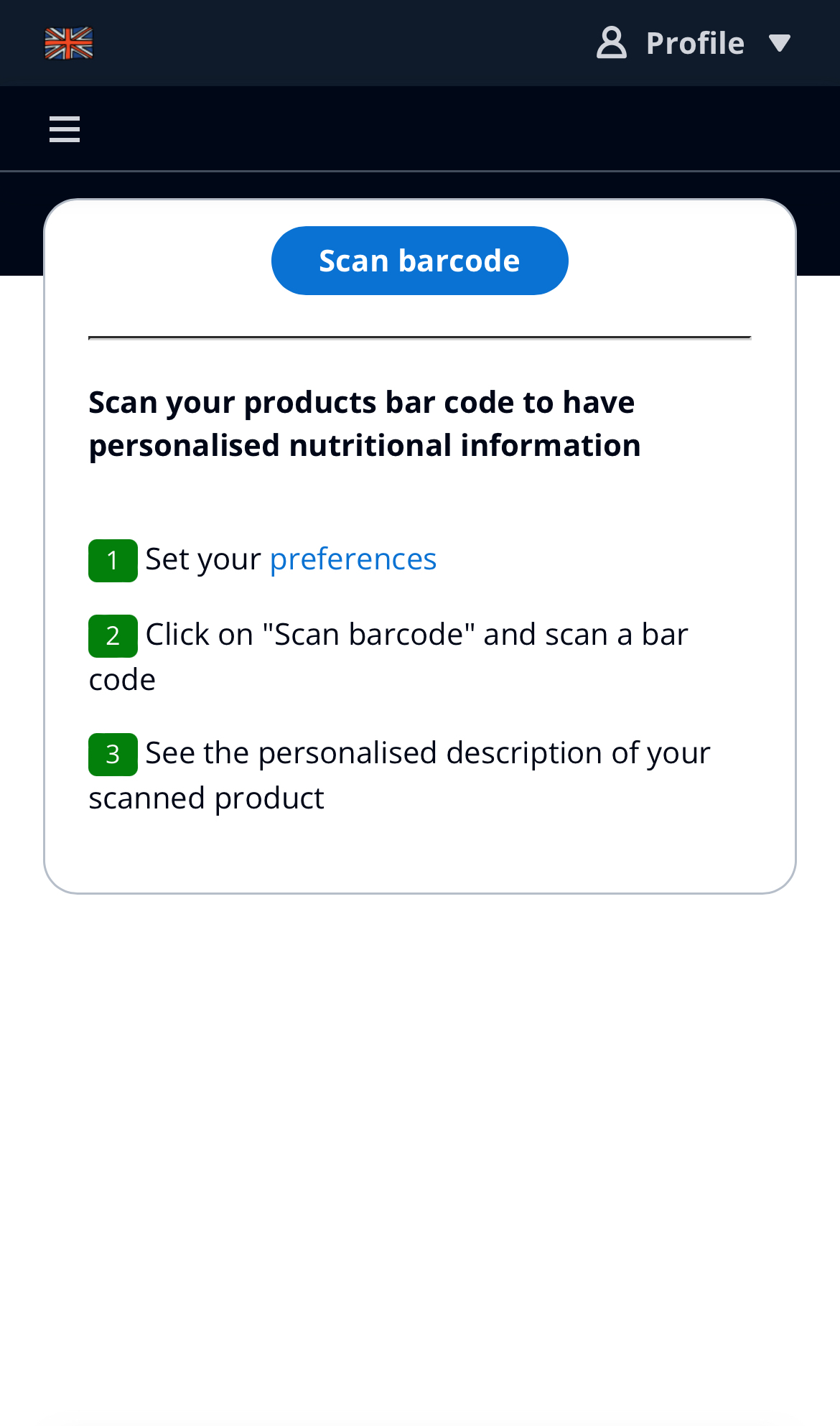

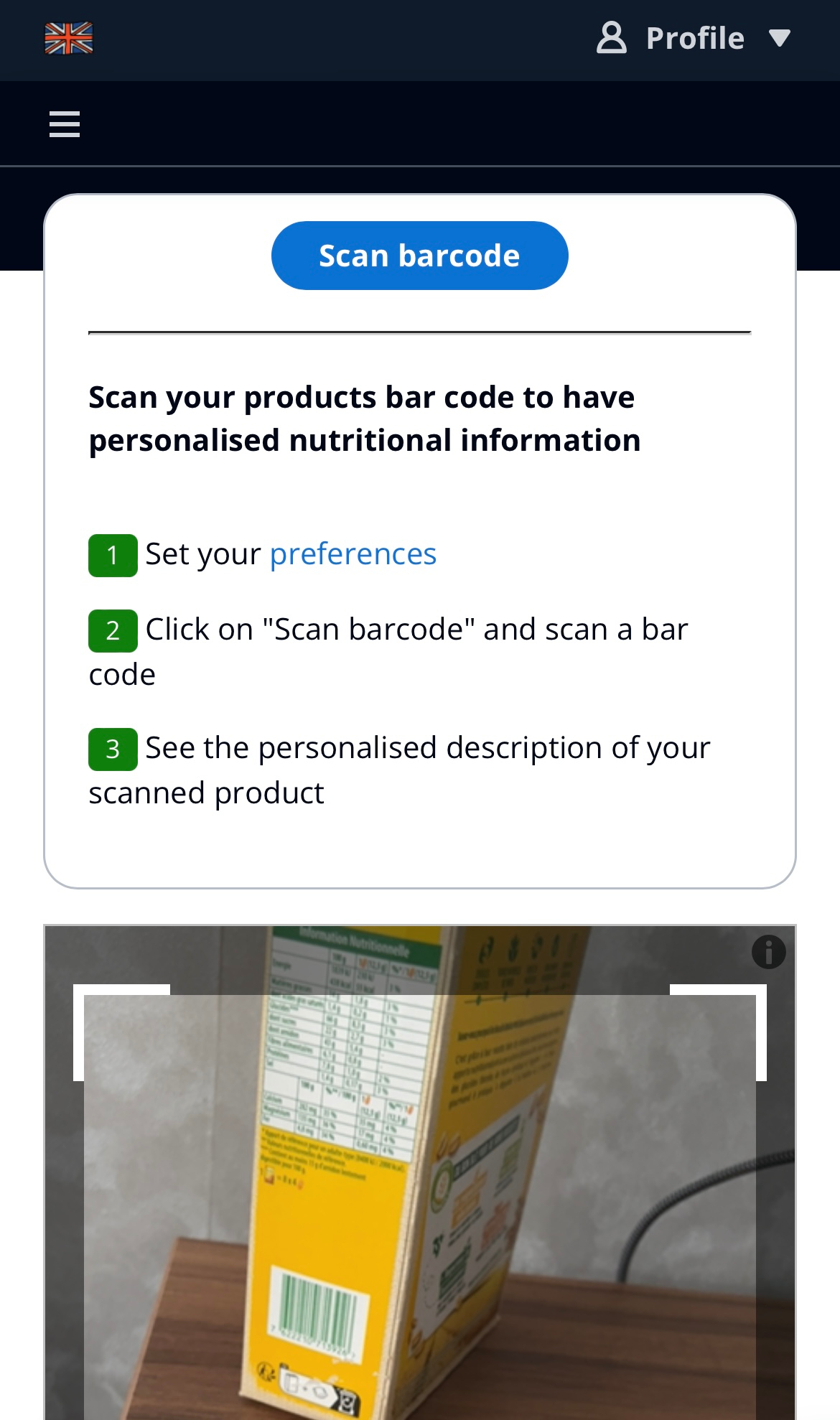

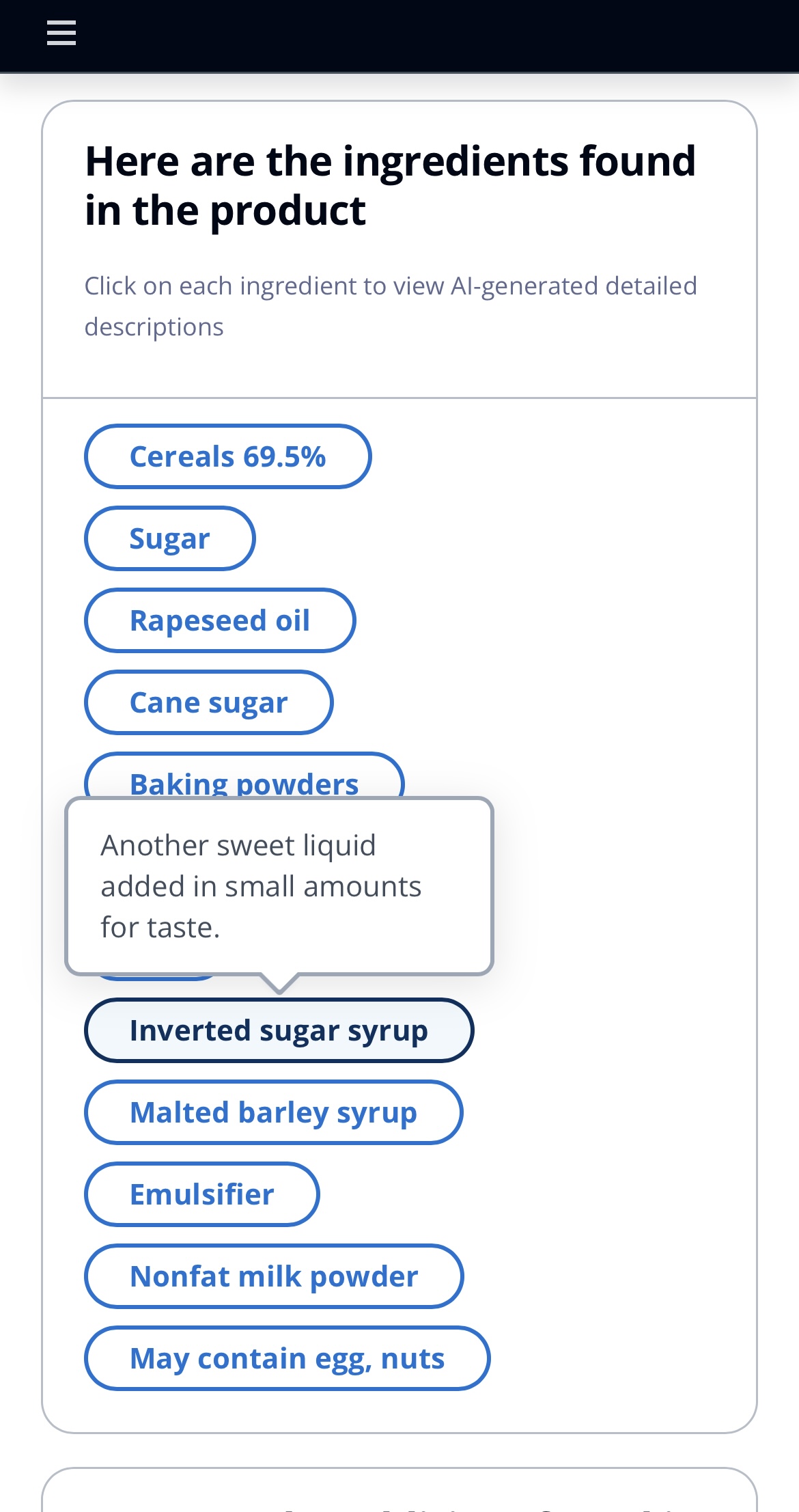

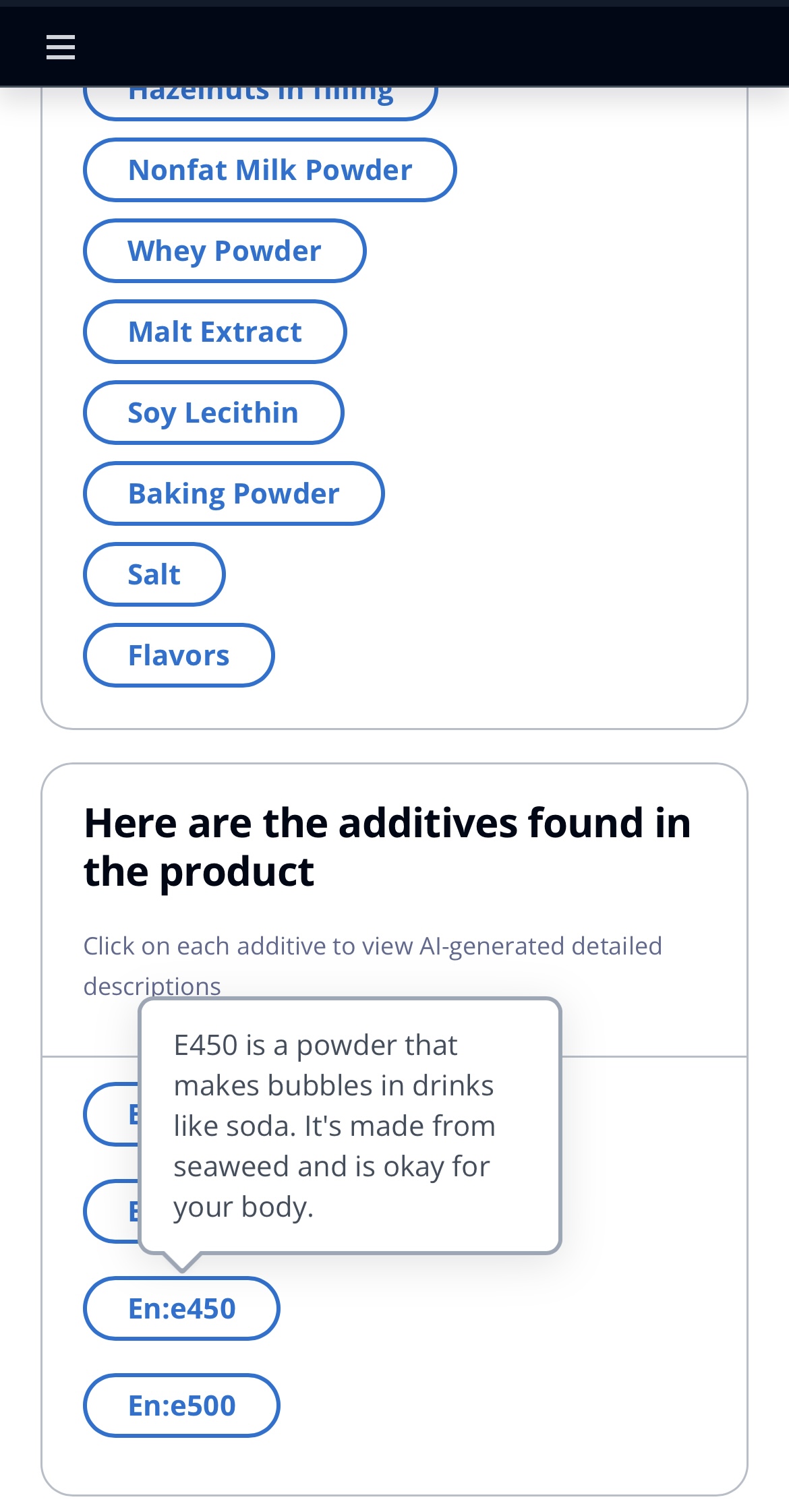

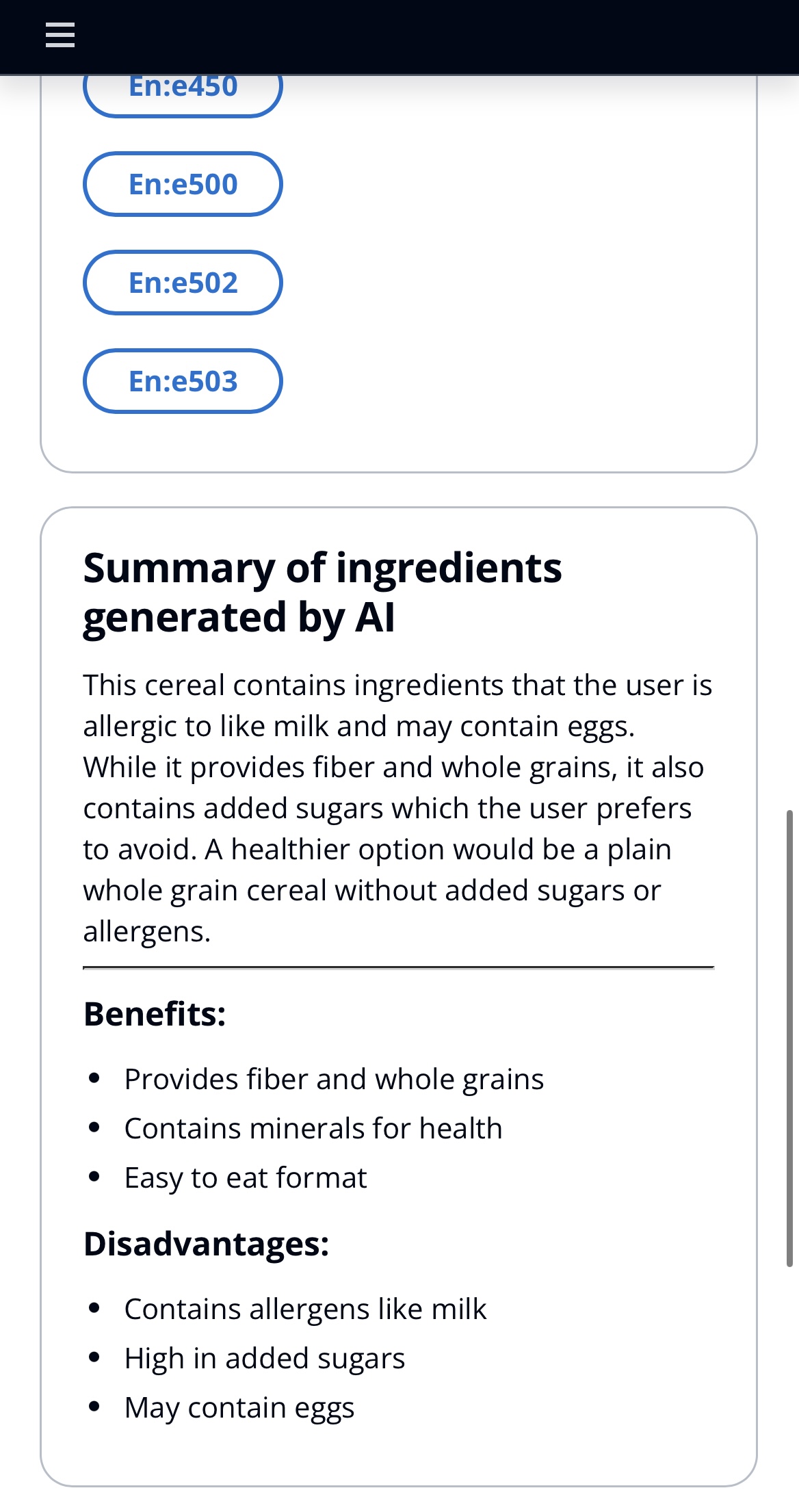

Personalized product information: Curious about what is in a product and whether it is good for you? Just scan the barcode with the app to get an explained list of ingredients and allergens and a personalized summary based on your preferences.

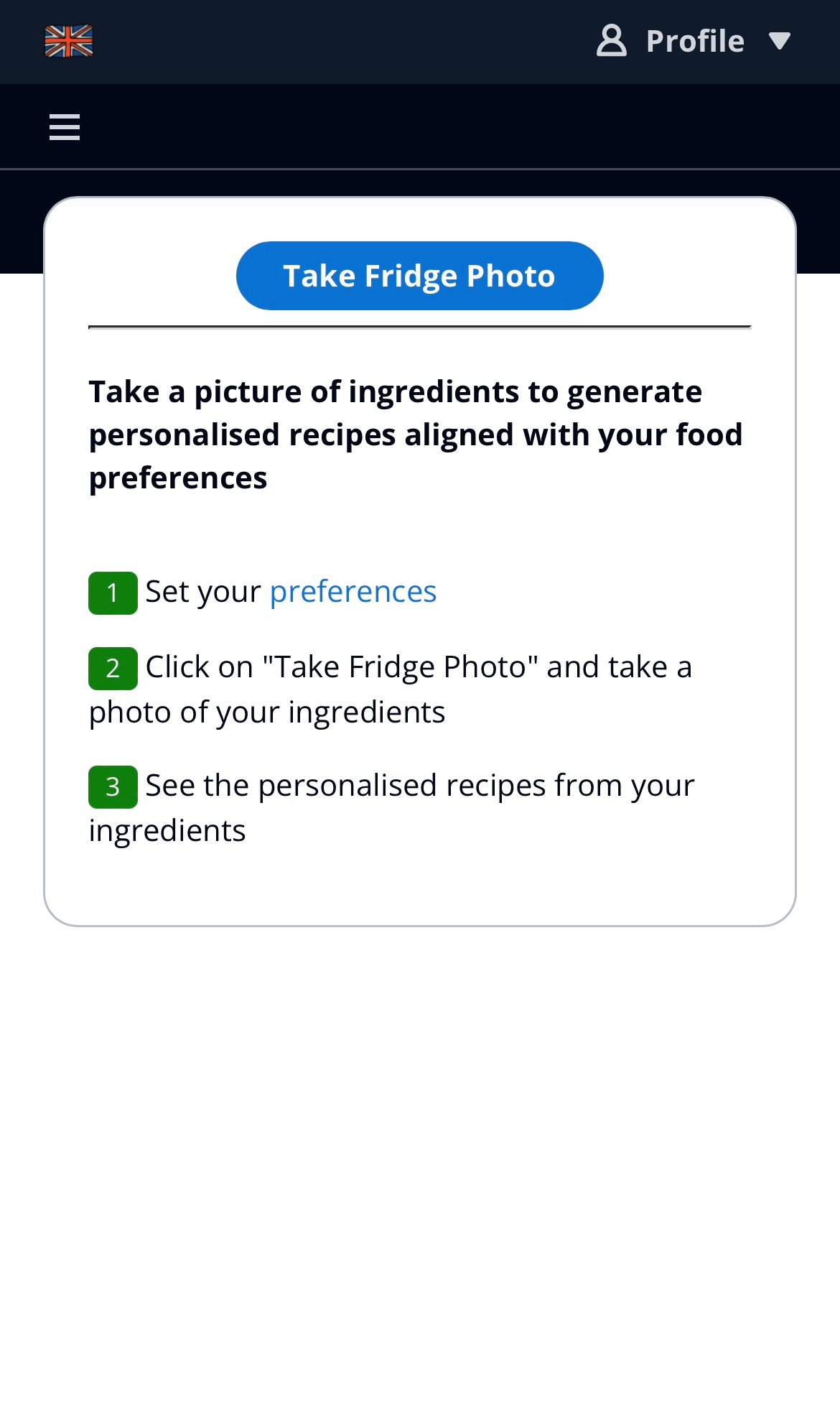

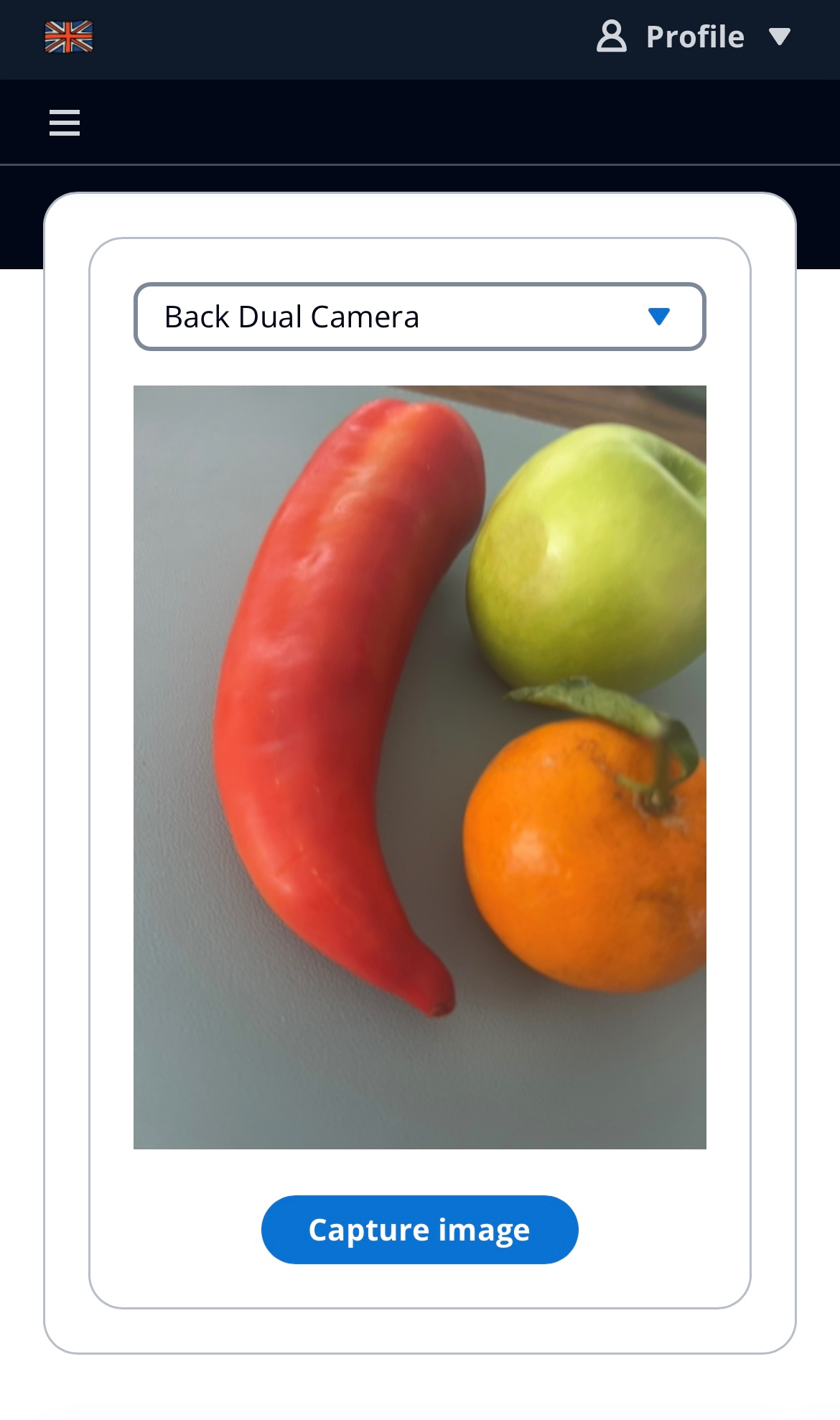

Personalized recipe generator: Capture a photo of the ingredients in your fridge, and the app will generate recipes based on your preferences using those ingredients.

The architecture of the application can be split into four blocks.

Implementation: AWS Lambda runs the server-side logic, Amazon Bedrock serves as the generative AI (GenAI) platform, Anthropic Claude provides the large language models (LLMs), and Stable Diffusion XL from Stability AI is the diffusion model used to generate images.
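To make the Lambda-to-Bedrock call concrete, here is a minimal sketch of invoking a Claude 3 model in request/response mode through the Bedrock runtime `invoke_model` API. The helper names and the `max_tokens` value are illustrative assumptions, not the app's code; the client is passed in so the function can be exercised without AWS credentials.

```python
import json
from typing import Any

# Bedrock model ID for Claude 3 Haiku (verify the models enabled in your account)
HAIKU_MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_claude_request(prompt: str, system: str = "", max_tokens: int = 1024) -> dict[str, Any]:
    """Build a Claude 3 Messages API request body in the format Bedrock expects."""
    body: dict[str, Any] = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    }
    if system:
        body["system"] = system  # optional system prompt
    return body


def invoke_claude(client: Any, prompt: str, system: str = "", model_id: str = HAIKU_MODEL_ID) -> str:
    """Call Bedrock in request/response mode and return the generated text.

    `client` is expected to be a boto3 "bedrock-runtime" client.
    """
    response = client.invoke_model(
        modelId=model_id,
        body=json.dumps(build_claude_request(prompt, system)),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Claude 3 returns a list of content blocks; keep only the text blocks
    return "".join(block["text"] for block in payload["content"] if block["type"] == "text")
```

In a Lambda function, `client` would typically be `boto3.client("bedrock-runtime")`.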

AI Model Development: The choice of LLM affected both response quality and latency. We ultimately chose Anthropic Claude 3 Haiku as a good trade-off between latency and quality.

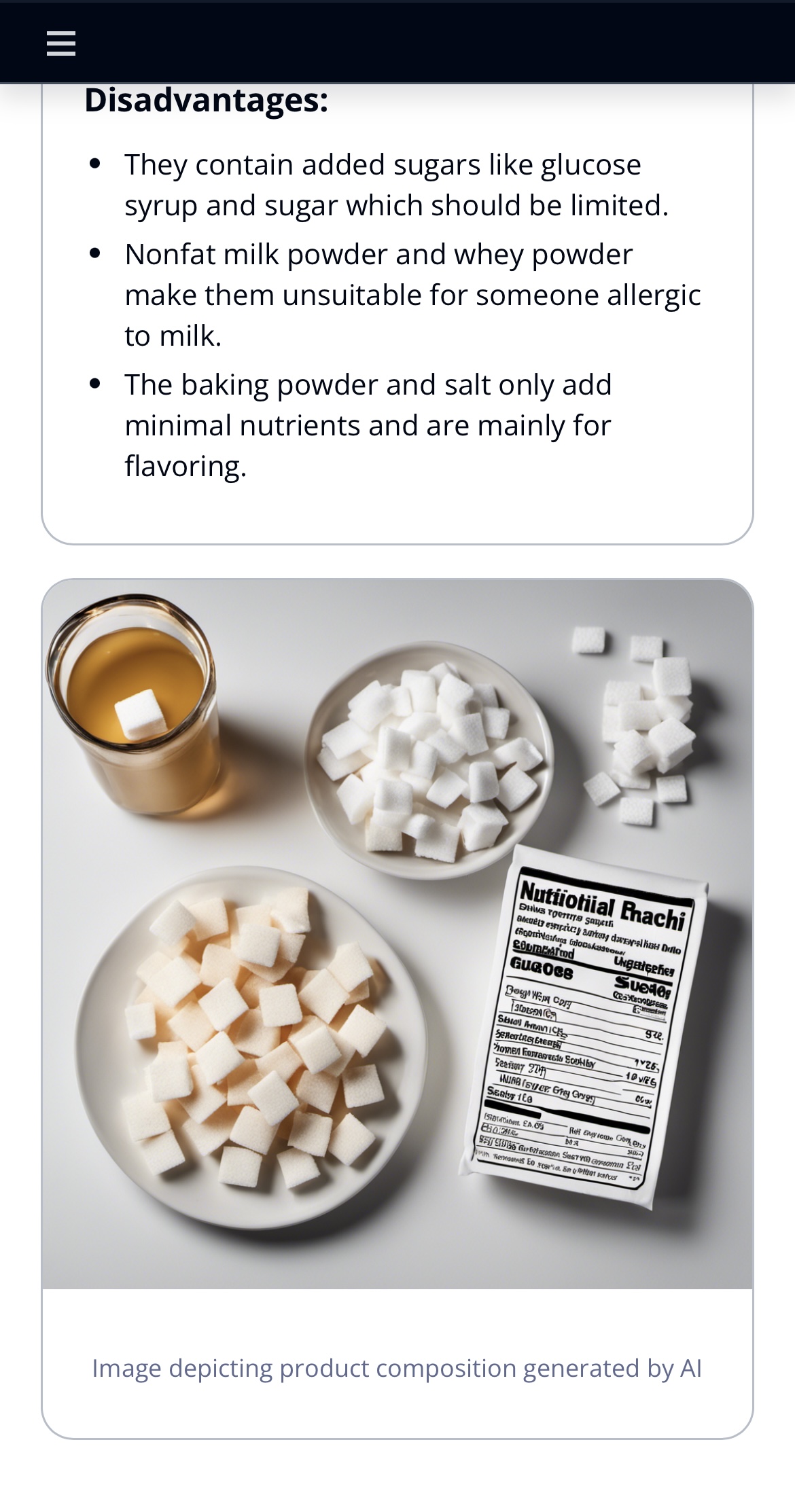

AI-Generated Images: Image generation is very sensitive to the prompt, and generating an image that truly highlights the nutritional features of a product was a challenge. To craft the image prompt, we first ask an LLM to write it from the product's nutritional features. This technique is similar to self-querying for vector databases. Multi-shot prompt engineering also considerably improved the quality of the generated prompt.
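A minimal sketch of the first step of this chain, assuming the few-shot examples are passed as alternating user/assistant turns in the Claude Messages format. The examples, the instruction wording, and the function names are hypothetical; the LLM's answer would then be sent to the image model as the second step.

```python
from typing import Any

# Hypothetical few-shot examples (product description -> image prompt)
FEW_SHOT_EXAMPLES: list[tuple[str, str]] = [
    (
        "Product: cola soda. Nutrition: sugar high, fat none.",
        "A bottle of cola surrounded by towers of white sugar cubes, studio lighting, photorealistic",
    ),
    (
        "Product: green lentils. Nutrition: protein high, fiber high.",
        "A bowl of green lentils next to a sprouting plant and a small dumbbell, bright natural light, photorealistic",
    ),
]

INSTRUCTION = (
    "Write one short prompt for an image generation model that visually highlights "
    "the most notable nutritional features of the product. Answer with the prompt only."
)


def format_features(name: str, features: dict[str, str]) -> str:
    """Turn nutrition facts into a one-line product description for the LLM."""
    facts = ", ".join(f"{key} {value}" for key, value in features.items())
    return f"Product: {name}. Nutrition: {facts}."


def build_image_prompt_messages(name: str, features: dict[str, str]) -> list[dict[str, Any]]:
    """Step 1: multi-shot messages asking the LLM to write the image prompt.

    Each example is a user/assistant pair, so the model sees the expected
    output format before the real product is presented in the last turn.
    """
    messages: list[dict[str, Any]] = []
    for product_text, image_prompt in FEW_SHOT_EXAMPLES:
        messages.append({"role": "user", "content": f"{INSTRUCTION}\n{product_text}"})
        messages.append({"role": "assistant", "content": image_prompt})
    messages.append({"role": "user", "content": f"{INSTRUCTION}\n{format_features(name, features)}"})
    return messages
```

Keeping the examples in the same shape as the final request is what lets the model imitate the style of a good image prompt.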

Strategy: "Do not reinvent the wheel"

Implementation: Barcode scanners have been around for a long time, so we picked an open-source library compatible with our stack. Given the time constraints, we did not spend much time comparing libraries, focusing on a working prototype rather than the perfect one: "Perfect is the enemy of good."

Strategy: Acknowledging the diversity of user preferences and dietary needs, our app incorporates a robust personalization feature. Beyond providing raw data, the app aims to educate users about the nutritional implications of their choices.

Implementation: Users feel a sense of ownership and connection as the app tailors its insights to align with their individual health goals and dietary constraints. Incorporating concise and informative content within the app ensures that users understand the significance of various nutritional components. This educational aspect transforms the app into a learning tool, fostering a deeper connection with users seeking to enhance their nutritional literacy.

Strategy: To captivate users' attention and communicate key nutritional information effectively, our app employs AI-generated images.

Implementation: Amazon Bedrock offers an out-of-the-box developer experience for generating visually striking representations of scanned products. For example, if a product contains excessive sugar, the AI-generated image surrounds it with a visual depiction of sugar, an engaging and memorable visual cue.
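As a sketch of the image-generation call, the following builds a Stable Diffusion XL request for Bedrock and decodes the returned image. The numeric defaults (`steps`, `cfg_scale`, `seed`) are illustrative assumptions, not the app's settings, and the function names are hypothetical.

```python
import base64
import json
from typing import Any

# Bedrock model ID for Stable Diffusion XL 1.0 (check availability in your region)
SDXL_MODEL_ID = "stability.stable-diffusion-xl-v1"


def build_sdxl_request(
    prompt: str,
    negative_prompt: str = "",
    seed: int = 0,
    steps: int = 30,
    cfg_scale: float = 7.0,
) -> dict[str, Any]:
    """Build an SDXL request body; numeric values are illustrative only."""
    text_prompts = [{"text": prompt, "weight": 1.0}]
    if negative_prompt:
        # A negative weight steers the model away from this text
        text_prompts.append({"text": negative_prompt, "weight": -1.0})
    return {"text_prompts": text_prompts, "seed": seed, "steps": steps, "cfg_scale": cfg_scale}


def generate_image(client: Any, prompt: str, **options: Any) -> bytes:
    """Invoke SDXL through a boto3 "bedrock-runtime" client and return the image bytes."""
    response = client.invoke_model(
        modelId=SDXL_MODEL_ID,
        body=json.dumps(build_sdxl_request(prompt, **options)),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    artifact = payload["artifacts"][0]
    if artifact.get("finishReason") not in (None, "SUCCESS"):
        # For example, the image was blocked by the content filter
        raise RuntimeError(f"Image generation failed: {artifact.get('finishReason')}")
    return base64.b64decode(artifact["base64"])
```

The prompt passed to `generate_image` would be the one written by the first LLM call, so the image emphasizes the product's notable nutritional features.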

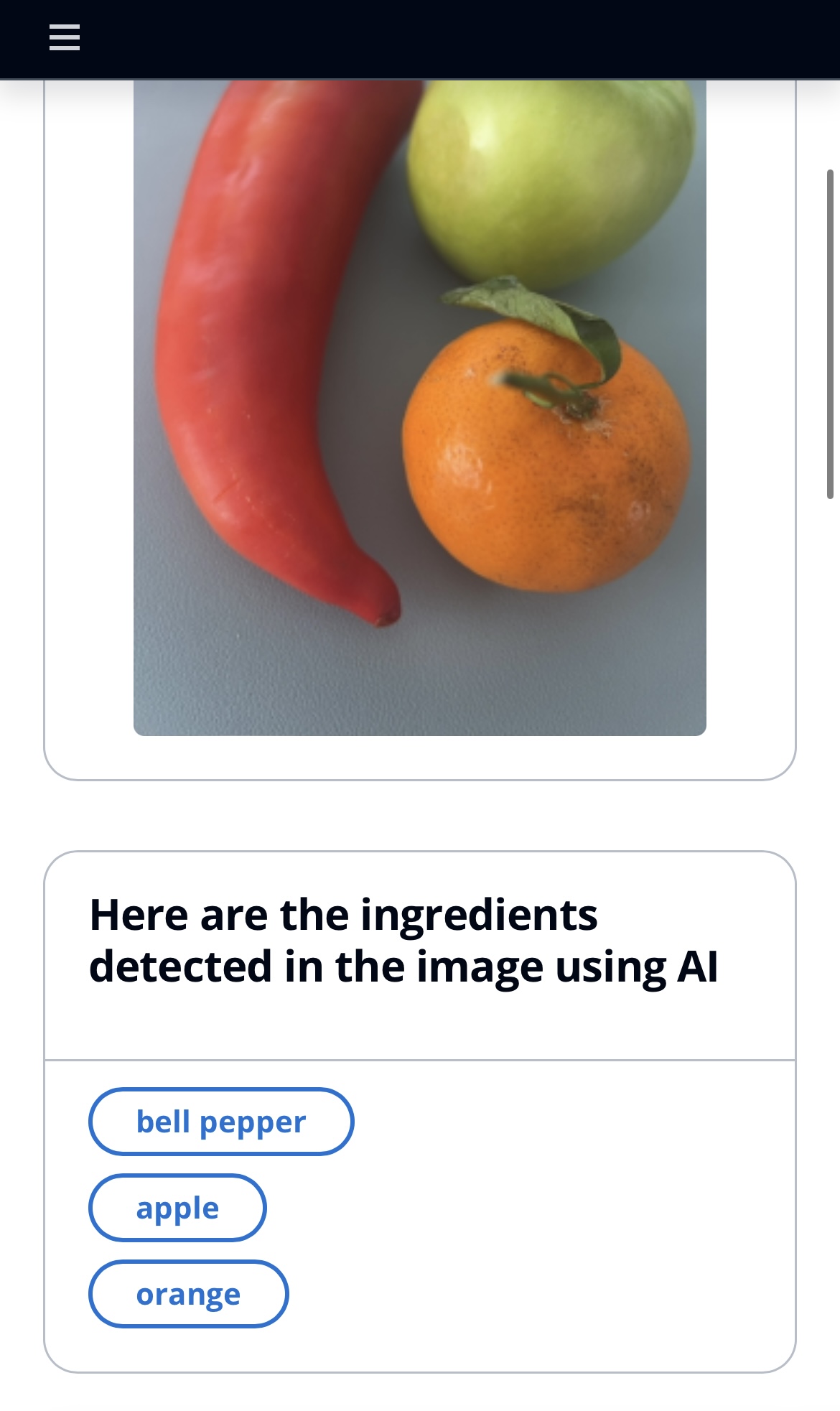

Strategy: Extract ingredients from the image; this works well on fruits and vegetables.

Implementation: We use Anthropic Claude 3 Sonnet on Amazon Bedrock, with its vision capabilities, to extract only the food elements from the image, ignoring the background and other objects. Claude 3 is a multimodal model that can handle both text and images. The output is a list of the ingredients present in the image.

Prompt Engineering: To exploit the full potential of the model, we use a system prompt. A system prompt is a way to provide context, instructions, and guidelines to Claude before presenting it with a question or task. By using a system prompt, you can set the stage for the conversation, specifying Claude's role, personality, tone, or any other relevant information that will help it to better understand and respond to the user's input.

```python
system_prompt = "You have perfect vision and pay great attention to ingredients in each picture, you are very good at detecting food ingredients on images"
```
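A minimal sketch of how that system prompt could be combined with a photo in a Claude 3 vision request on Bedrock, plus a tolerant parser for the answer. The user instruction text, the JSON-array answer format, and the function names are assumptions for illustration; only the system prompt is quoted from this README.

```python
import base64
import json
from typing import Any

SONNET_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"

# System prompt quoted from this README
SYSTEM_PROMPT = (
    "You have perfect vision and pay great attention to ingredients in each picture, "
    "you are very good at detecting food ingredients on images"
)

# Hypothetical user instruction; the app's actual wording is not shown here
USER_INSTRUCTION = (
    "List only the food ingredients visible in this picture, ignoring the background "
    "and any non-food objects. Answer with a JSON array of strings."
)


def build_ingredient_request(image_bytes: bytes, media_type: str = "image/jpeg") -> dict[str, Any]:
    """Claude 3 Messages API body: one base64 image block followed by one text block."""
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 512,
        "system": SYSTEM_PROMPT,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        },
                    },
                    {"type": "text", "text": USER_INSTRUCTION},
                ],
            }
        ],
    }


def parse_ingredients(model_text: str) -> list[str]:
    """Extract the JSON array from the model's answer, tolerating text around it."""
    start, end = model_text.find("["), model_text.rfind("]")
    if start == -1 or end < start:
        raise ValueError("No JSON array found in model output")
    return [str(item).strip().lower() for item in json.loads(model_text[start : end + 1])]
```

The request body would be sent with `invoke_model` using `SONNET_MODEL_ID`, and the text of the response passed to `parse_ingredients`.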

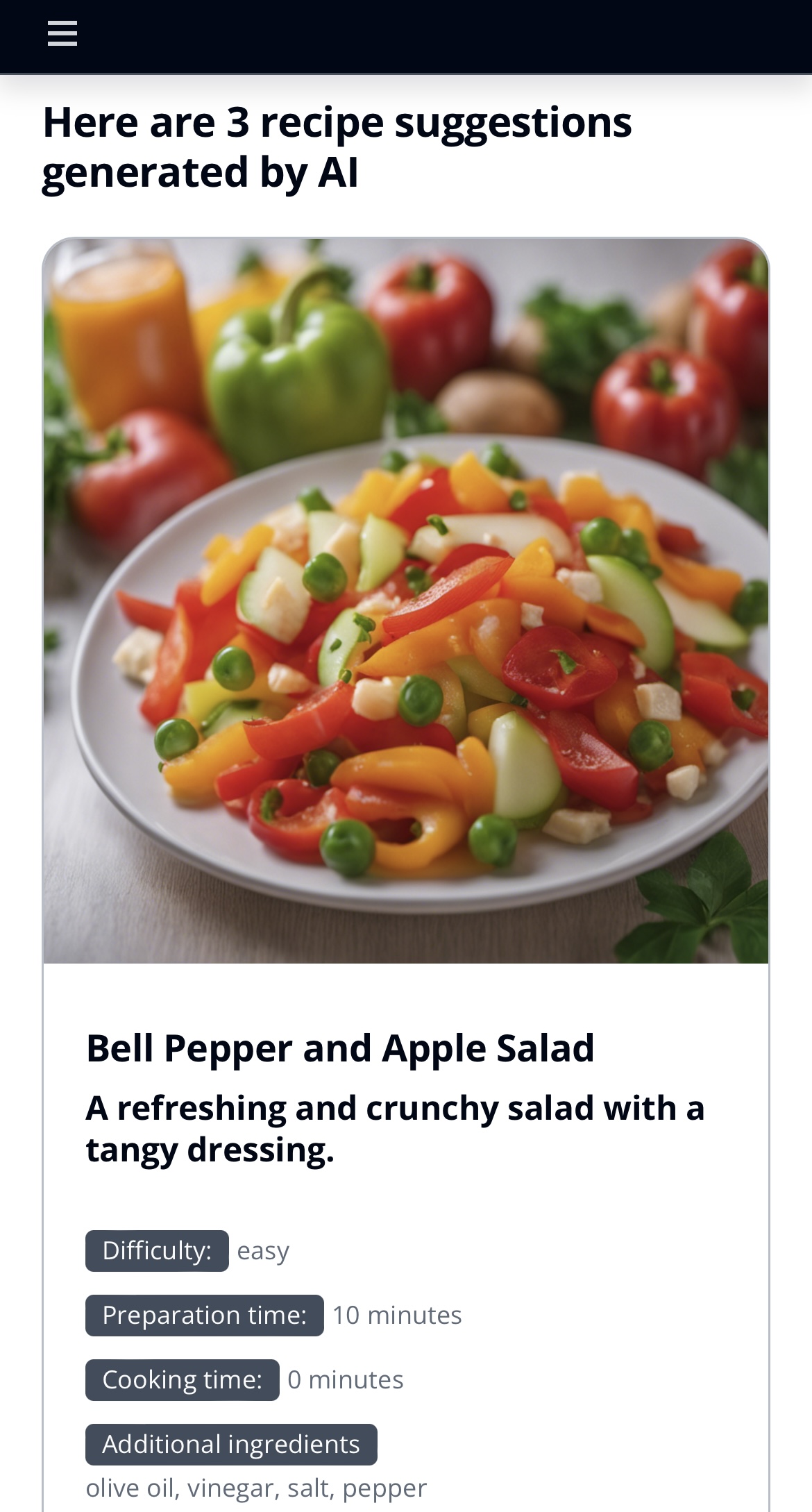

Strategy: Generate three recipes from the ingredients detected in the picture.

Implementation: We use Claude 3 Sonnet to generate the three recipes. Each recipe is returned in the following JSON format:

```json
{
  "recipe_title": "Succulent Grilled Cheese Sandwich",
  "description": "A classic comforting and flavorful dish, perfect for a quick meal",
  "difficulty": "easy",
  "ingredients": ["bread", "cheese", "butter"],
  "additional_ingredients": ["ham", "tomato"],
  "preparation_time": 5,
  "cooking_time": 6
}
```
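Because the model's output is free text, it helps to validate it before rendering. Here is a minimal sketch that parses recipe proposals in the format above into a dataclass; it assumes the model returns either one object or a JSON array of them, and it assumes times are in minutes (the README does not state the unit).

```python
import json
from dataclasses import dataclass, fields
from typing import Any


@dataclass
class RecipeProposal:
    recipe_title: str
    description: str
    difficulty: str
    ingredients: list[str]             # ingredients detected in the picture
    additional_ingredients: list[str]  # extra ingredients (interpretation assumed)
    preparation_time: int              # unit not stated; minutes assumed
    cooking_time: int                  # unit not stated; minutes assumed

    @property
    def total_time(self) -> int:
        return self.preparation_time + self.cooking_time


FIELD_NAMES = [f.name for f in fields(RecipeProposal)]


def parse_recipes(raw: str) -> list[RecipeProposal]:
    """Parse one recipe object or a JSON array of them, rejecting incomplete recipes."""
    data: Any = json.loads(raw)
    items = data if isinstance(data, list) else [data]
    recipes = []
    for item in items:
        missing = [name for name in FIELD_NAMES if name not in item]
        if missing:
            raise ValueError(f"Recipe is missing fields: {missing}")
        # Extra keys the model might add are ignored
        recipes.append(RecipeProposal(**{name: item[name] for name in FIELD_NAMES}))
    return recipes
```

For the grilled cheese example above, `total_time` would be 11.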

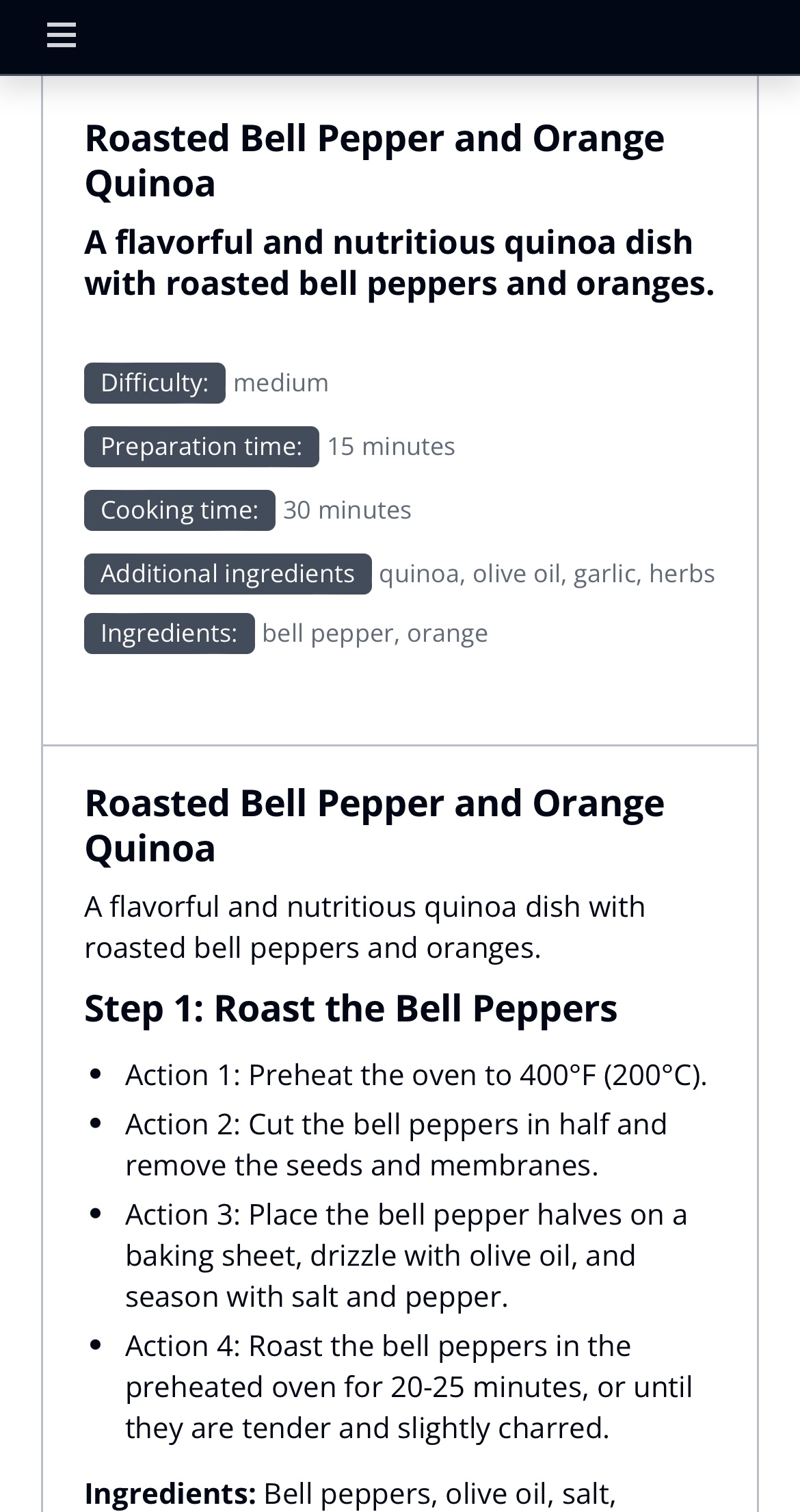

Strategy: Generate a step-by-step recipe for the user to follow.

Implementation: We use Anthropic Claude 3 Haiku on Amazon Bedrock to generate the step-by-step recipe. To reduce the latency of the response, the steps are streamed to the user through Lambda function URL response streaming. The user sees the text as soon as it is generated, which makes the interaction smoother.
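On the Bedrock side, streaming means calling `invoke_model_with_response_stream` and forwarding text as it arrives. A minimal sketch of extracting the text fragments from a Claude 3 stream is shown below; the function name is hypothetical, and how each fragment is written to the Lambda function URL response stream depends on the runtime and is not shown.

```python
import json
from collections.abc import Iterable, Iterator
from typing import Any


def iter_text_deltas(event_stream: Iterable[dict[str, Any]]) -> Iterator[str]:
    """Yield text fragments from a Claude 3 response stream returned by Bedrock.

    `event_stream` is the "body" of an invoke_model_with_response_stream
    response: each event wraps a JSON chunk whose "type" follows the Anthropic
    streaming format (message_start, content_block_delta, message_stop, ...).
    """
    for event in event_stream:
        chunk = event.get("chunk")
        if chunk is None:
            continue  # non-chunk events are skipped in this sketch
        data = json.loads(chunk["bytes"])
        if data.get("type") == "content_block_delta":
            delta = data.get("delta", {})
            if delta.get("type") == "text_delta":
                yield delta["text"]
```

Usage would look like `response = client.invoke_model_with_response_stream(modelId=..., body=...)` followed by `for text in iter_text_deltas(response["body"]): ...`, sending each fragment to the browser as soon as it is yielded.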

The output is formatted as Markdown to facilitate displaying the recipe on the website. Markdown also greatly simplifies parsing the recipe on the front end in streaming mode.

Picking the Right AI Model

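Challenge: The choice of large language model (LLM) significantly influenced both response latency and quality, making it a critical decision.

Solution: After assessing various models, we chose different Anthropic Claude models for different components of the app: Claude 3 Sonnet, with its vision capabilities, for ingredient detection and the three recipe proposals, and Claude 3 Haiku, a good trade-off between latency and quality, for the product analysis and the step-by-step recipe.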
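One way to keep such per-component choices in a single place is a small task-to-model mapping. This sketch follows the model choices described in this README (reading the "AI Model Development" paragraph as covering product analysis); the task names are hypothetical.

```python
# Bedrock model IDs for the Claude 3 models mentioned in this README
CLAUDE_3_HAIKU = "anthropic.claude-3-haiku-20240307-v1:0"
CLAUDE_3_SONNET = "anthropic.claude-3-sonnet-20240229-v1:0"

# Hypothetical task names mapped to the model used for each component
MODEL_BY_TASK: dict[str, str] = {
    "product_analysis": CLAUDE_3_HAIKU,       # product information and summary
    "ingredient_detection": CLAUDE_3_SONNET,  # vision: ingredients in a photo
    "recipe_proposals": CLAUDE_3_SONNET,      # the three recipe proposals
    "step_by_step_recipe": CLAUDE_3_HAIKU,    # streamed step-by-step recipe
}


def model_for(task: str) -> str:
    """Return the Bedrock model ID for a task, failing loudly on unknown tasks."""
    try:
        return MODEL_BY_TASK[task]
    except KeyError:
        raise ValueError(f"Unknown task: {task!r}") from None
```

Swapping a model after a new evaluation then only touches this mapping.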

Use Lazy Loading to reduce cost/bandwidth

Challenge: Generative AI is costly in both price and bandwidth, and we wanted to be frugal and efficient when running at busy events.

Solution: We use lazy loading, with the hash of the prompt as the cache key: a repeated prompt is served from the cache instead of calling the model again. This reduces cost and delivers responses faster.
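A minimal sketch of lazy loading (cache-aside) keyed by a SHA-256 hash of the prompt. The README does not say which store the app uses, so an in-memory dictionary stands in for it here; `lazy_generate` and the store interface are hypothetical names.

```python
import hashlib
from collections.abc import Callable
from typing import Optional, Protocol


class CacheStore(Protocol):
    def get(self, key: str) -> Optional[str]: ...
    def put(self, key: str, value: str) -> None: ...


class InMemoryStore:
    """Stand-in for a persistent store (the real store is not specified)."""

    def __init__(self) -> None:
        self._items: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._items.get(key)

    def put(self, key: str, value: str) -> None:
        self._items[key] = value


def prompt_key(prompt: str) -> str:
    """Cache key: SHA-256 hex digest of the full prompt text."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()


def lazy_generate(store: CacheStore, prompt: str, generate: Callable[[str], str]) -> str:
    """Cache-aside: call the model only on a cache miss."""
    key = prompt_key(prompt)
    cached = store.get(key)
    if cached is not None:
        return cached          # cache hit: no model call
    result = generate(prompt)  # cache miss: call the model once...
    store.put(key, result)     # ...and keep the result for identical prompts
    return result
```

Because the whole prompt is hashed, anything embedded in it (such as user preferences or the output language) becomes part of the key, so only truly identical requests share a cached answer.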

Prompt Engineering for AI Image Generation

Challenge: Crafting a prompt for generating AI images that effectively highlighted nutritional features posed a nuanced challenge.

Solution: Using a two-step approach, we first asked an LLM to generate an image prompt based on the product's nutritional features. This technique, akin to self-querying for a vector database, was complemented by multi-shot prompting. It significantly improved the quality and relevance of the generated images, giving users visually compelling representations of product features.

User Personalization Complexity

Challenge: Integrating personalized dietary preferences and restrictions into the prompts sent to the model was complex.

Solution: To enhance the LLM's understanding, we dynamically incorporated a header in our prompt containing personalized allergy and diet inputs. This approach significantly improved the accuracy and relevance of the LLM's responses, ensuring a tailored experience for users. Personalized prompts became a cornerstone in delivering precise and relevant information based on individual preferences.
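A minimal sketch of such a dynamically built header, assuming preferences are stored as lists of allergies and diets. The XML-style tags, the wording, and the names are choices of this sketch, not the app's actual prompt.

```python
from dataclasses import dataclass, field


@dataclass
class UserPreferences:
    allergies: list[str] = field(default_factory=list)  # e.g. ["peanuts", "gluten"]
    diets: list[str] = field(default_factory=list)      # e.g. ["vegetarian"]


def personalization_header(prefs: UserPreferences) -> str:
    """Header prepended to every prompt; the tag format is an assumption."""
    allergies = ", ".join(prefs.allergies) or "none"
    diets = ", ".join(prefs.diets) or "none"
    return (
        "<user_preferences>\n"
        f"Allergies: {allergies}\n"
        f"Diets: {diets}\n"
        "</user_preferences>\n"
        "Take these allergies and diets into account in your answer.\n\n"
    )


def personalize(prompt: str, prefs: UserPreferences) -> str:
    """Prefix the task prompt with the user's preferences."""
    return personalization_header(prefs) + prompt
```

Keeping the header separate from the task prompt means the same task prompts can be reused for every user.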

Multi-language support

Challenge: Presenting the application in multiple languages.

Solution: The same prompt is used for every language; the LLM is instructed to generate its output in the user's preferred language (English or French).
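A minimal sketch of this approach: the task prompt stays identical and only a final instruction changes with the user's language. The language codes and the instruction wording are assumptions.

```python
# Supported languages (English/French, as described above); codes are assumed
LANGUAGE_NAMES = {"en": "English", "fr": "French"}


def with_language(prompt: str, lang_code: str) -> str:
    """Append an output-language instruction to an otherwise unchanged prompt."""
    try:
        language = LANGUAGE_NAMES[lang_code]
    except KeyError:
        raise ValueError(f"Unsupported language: {lang_code!r}") from None
    return f"{prompt}\n\nWrite your entire answer in {language}."
```

Adding a language then only requires a new entry in `LANGUAGE_NAMES`, not a translated copy of every prompt.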

AWS Lambda URL & Amazon CloudFront

Challenge: Calling an LLM for some tasks in request/response mode can be slow.

Solution: To work around the 30-second timeout of an Amazon API Gateway endpoint, we expose an AWS Lambda function URL through Amazon CloudFront. In CloudFront, a Lambda@Edge function runs on every request and verifies the user's authentication against Amazon Cognito. If authentication succeeds, the Lambda@Edge function signs the request to the Lambda function URL, which uses AWS_IAM as its authentication type. A Lambda function URL is a workable solution, but AWS AppSync is an alternative with built-in authentication and authorization mechanisms that meet these needs. For this demo app, we opted for the Lambda function URL.
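Signing the request means computing an AWS Signature Version 4 (SigV4) `Authorization` header with the `lambda` signing name. The following is a standard-library sketch of that computation, assuming the Lambda@Edge function has its execution role's temporary credentials available; the function names are hypothetical, and the Cognito token check and the rewriting of the CloudFront origin request are not shown. In practice, an AWS SDK signer is usually preferable to hand-rolled signing.

```python
import hashlib
import hmac
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import quote


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret_key: str, date_stamp: str, region: str, service: str) -> bytes:
    """Derive the SigV4 signing key: HMAC chain over date, region, and service."""
    k_date = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac(k_date, region)
    k_service = _hmac(k_region, service)
    return _hmac(k_service, "aws4_request")


def sign_lambda_url_request(
    method: str,
    host: str,
    path: str,
    body: bytes,
    access_key: str,
    secret_key: str,
    session_token: Optional[str] = None,
    region: str = "us-east-1",
    query: Optional[dict[str, str]] = None,
    now: Optional[datetime] = None,
) -> dict[str, str]:
    """Return SigV4 headers for a Lambda function URL using AWS_IAM auth.

    `path` must already be URI-encoded; only the headers built here are signed.
    """
    now = now or datetime.now(timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    service = "lambda"  # signing name for Lambda function URLs
    payload_hash = hashlib.sha256(body).hexdigest()

    headers = {"host": host, "x-amz-date": amz_date, "x-amz-content-sha256": payload_hash}
    if session_token:
        headers["x-amz-security-token"] = session_token  # role credentials are temporary
    names = sorted(headers)
    canonical_headers = "".join(f"{n}:{headers[n].strip()}\n" for n in names)
    signed_headers = ";".join(names)
    encoded_query = sorted(
        (quote(k, safe="-_.~"), quote(v, safe="-_.~")) for k, v in (query or {}).items()
    )
    canonical_query = "&".join(f"{k}={v}" for k, v in encoded_query)

    canonical_request = "\n".join(
        [method.upper(), path or "/", canonical_query, canonical_headers, signed_headers, payload_hash]
    )
    scope = f"{date_stamp}/{region}/{service}/aws4_request"
    string_to_sign = "\n".join(
        ["AWS4-HMAC-SHA256", amz_date, scope, hashlib.sha256(canonical_request.encode("utf-8")).hexdigest()]
    )
    signature = hmac.new(
        signing_key(secret_key, date_stamp, region, service),
        string_to_sign.encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    headers["authorization"] = (
        f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
        f"SignedHeaders={signed_headers}, Signature={signature}"
    )
    return headers
```

Because the payload hash is part of the signature, the body forwarded to the function URL must be exactly the bytes that were signed.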

Amazon Bedrock synchronous/asynchronous response

Challenge: The response from Amazon Bedrock can be obtained either in request/response mode or in streaming mode, where the Lambda function starts streaming the response instead of waiting for the entire response to be generated.

Solution: Some parts of the application use request/response mode (such as the product ingredients description and the three recipe proposals), while others (the product summary and the step-by-step recipe) use streaming mode, to demonstrate both implementation methods.

Illustrated Use Cases of the GenAI Application

Install the dependencies:

```shell
npm install
```

Deploy the stack in the us-east-1 region:

```shell
cdk deploy
```

Before accessing the application, create a user account in Amazon Cognito. To do this, open Amazon Cognito in the AWS Console and locate a user pool with a name similar to AuthenticationFoodAnalyzerUserPoolXXX.

Check the stack outputs for a URL resembling Food analyzer app.domainName. Paste this URL into your browser, log in with the previously created user, and start enjoying the app.

You can run this Vite React app locally by following these steps.

Follow the instructions above to deploy the CDK app.

Download aws-exports.json from the Amazon CloudFront distribution endpoint listed in the CDK outputs, and save it in the ./resources/ui/public/ folder.

The URL is something like:

https://dxxxxxxxxxxxx.cloudfront.net/aws-exports.json

```shell
cd resources/ui
npm run dev
```

Prerequisites

Node.js 18+ must be installed on the deployment machine. (Instructions)

AWS CLI 2+ must be installed on the deployment machine. (Instructions)

Request access to Anthropic Claude models and Stable Diffusion XL on Amazon Bedrock

This project is licensed under the MIT-0 License. See the LICENSE file.