This is an end-to-end project that simplifies the lifecycle of Large Language Model (LLM) applications, including development, productionization, and deployment. The repository is organized into folders and files for each component of the project: agents, APIs, chains, chatbots, GROQ (a query language), Hugging Face models, ObjectBox (an embedded database), OpenAI models, and Retrieval-Augmented Generation (RAG) models. The project aims to provide a comprehensive solution for working with LLMs, covering data handling, chatbot development, and integration with various tools and frameworks.

This project demonstrates how to build a chatbot using both paid and open-source large language models (LLMs) via LangChain and Ollama. It covers the entire lifecycle of LLM applications, including development, productionization, and deployment.

All API keys are kept secret: user access is restricted, key files are excluded through .gitignore, and GitHub secret environments add another layer of security. (Sorry, you'll have to use your own!)

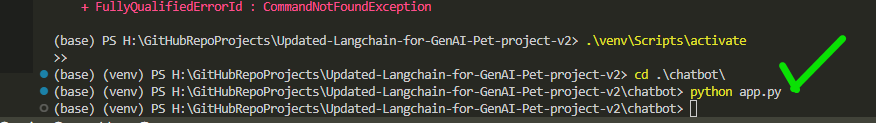

    conda create -p venv python==3.10 -y

    venv\Scripts\activate

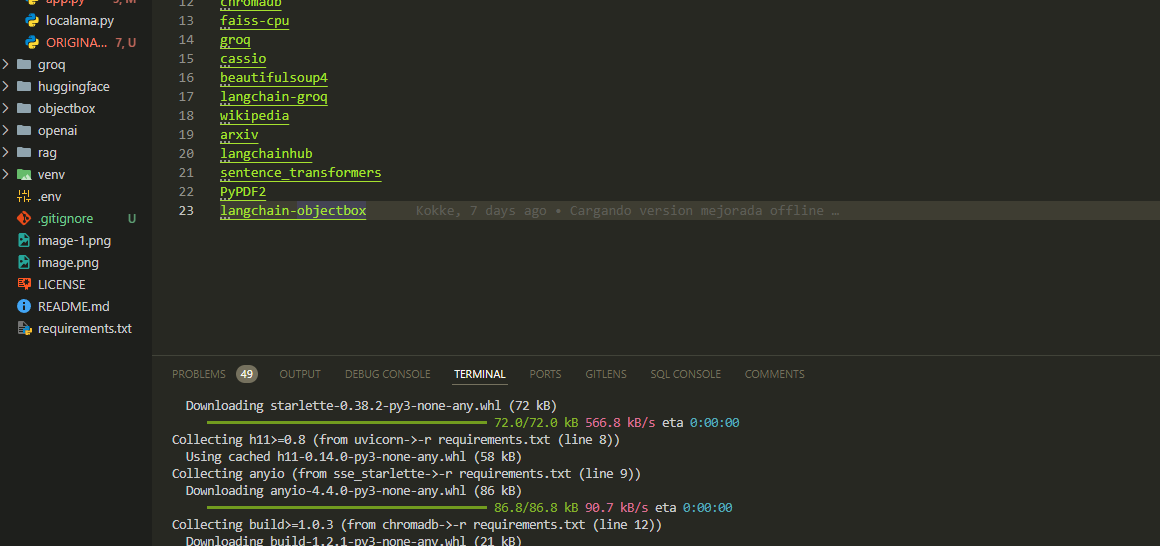

    pip install -r requirements.txt

Configure the necessary API keys and settings in the app.py file.
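One way to keep keys out of the source is to read them from environment variables instead of hard-coding them in app.py. The sketch below only illustrates that idea: the helper `require_env` and the variable name `OPENAI_API_KEY` are assumptions, and the repository may load its keys differently (for example from a .env file).

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Missing required setting: {name}")
    return value


if __name__ == "__main__":
    # Assumed variable name; use whichever keys your providers need.
    try:
        require_env("OPENAI_API_KEY")
        print("API key found in the environment.")
    except RuntimeError as err:
        print(err)
```

Failing early with a named setting makes a missing key obvious before the first model call is attempted.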

    python app.py

Open a web browser and navigate to the URL below (or to whichever port is assigned on your computer). Mine is:

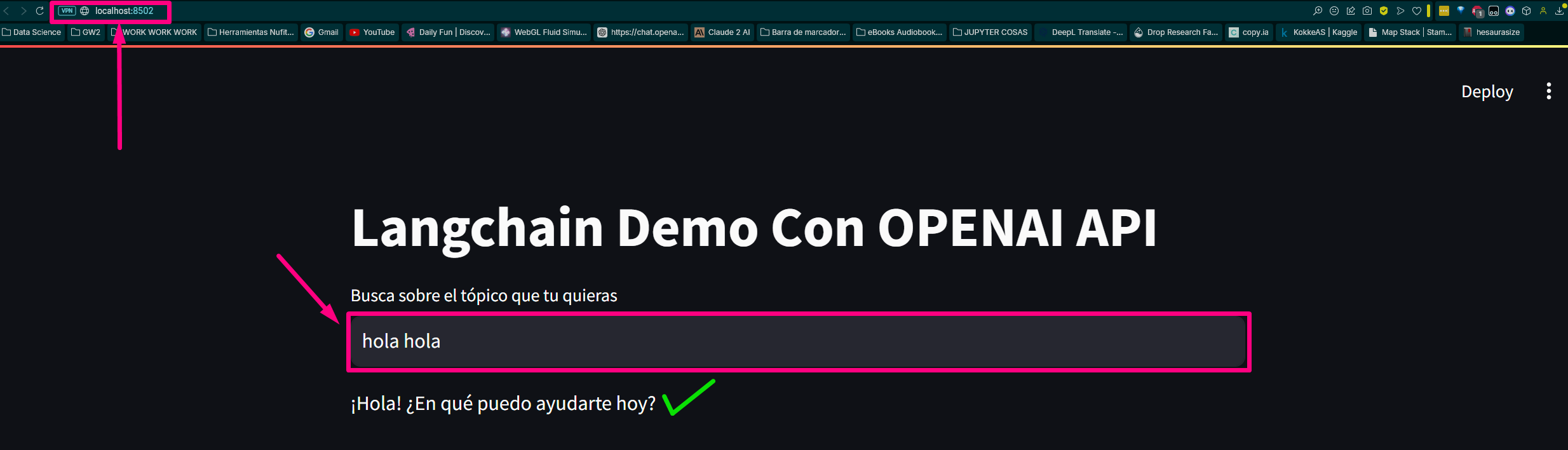

http://localhost:8502

We create the file by chaining the three main functions of our base chatbot.
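The chaining idea can be sketched in plain Python. This assumes the three stages are a prompt template, a model call, and an output parser, which the later look at StrOutputParser suggests, but the actual app.py may differ. `fake_model` is a stub so the example runs without API keys, and every function name here is hypothetical.

```python
from functools import reduce
from typing import Callable


def build_prompt(inputs: dict) -> str:
    """Stage 1: fill a prompt template with the user's question."""
    return f"You are a helpful assistant.\nQuestion: {inputs['question']}"


def fake_model(prompt: str) -> dict:
    """Stage 2: stand-in for an LLM call; returns a message-like dict."""
    question = prompt.splitlines()[-1]
    return {"role": "assistant", "content": f"(stub answer to) {question}"}


def parse_output(message: dict) -> str:
    """Stage 3: keep only the text content, as a string output parser would."""
    return message["content"]


def chain(*steps: Callable) -> Callable:
    """Compose steps left to right: the output of one feeds the next."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


chatbot = chain(build_prompt, fake_model, parse_output)

if __name__ == "__main__":
    print(chatbot({"question": "When to start brushing puppies' teeth?"}))
```

In LangChain the same composition is typically written with the pipe operator, along the lines of `prompt | llm | StrOutputParser()`.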

We install the required modules and libraries:

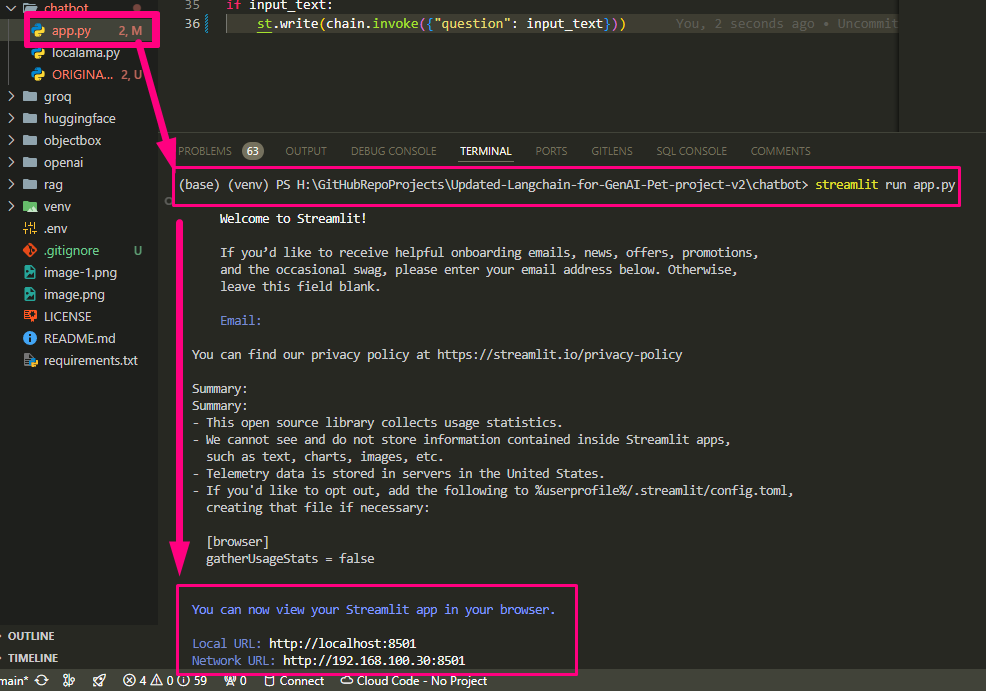

We finish configuring app.py and run it.

It prints the URL and opens the app in the browser:

Local URL: http://localhost:8502

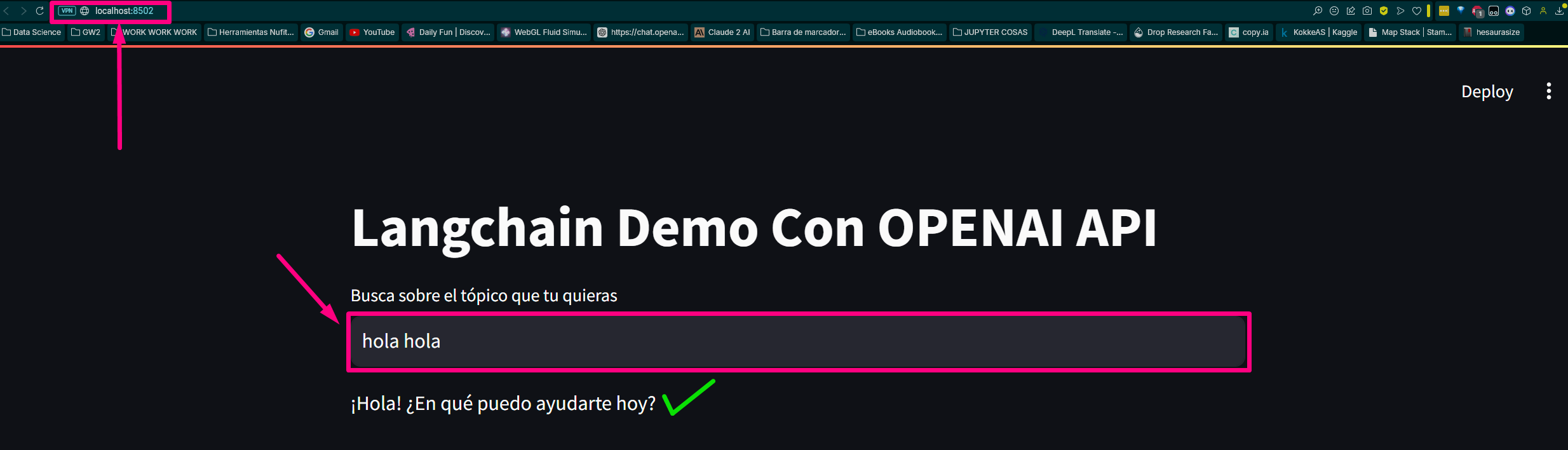

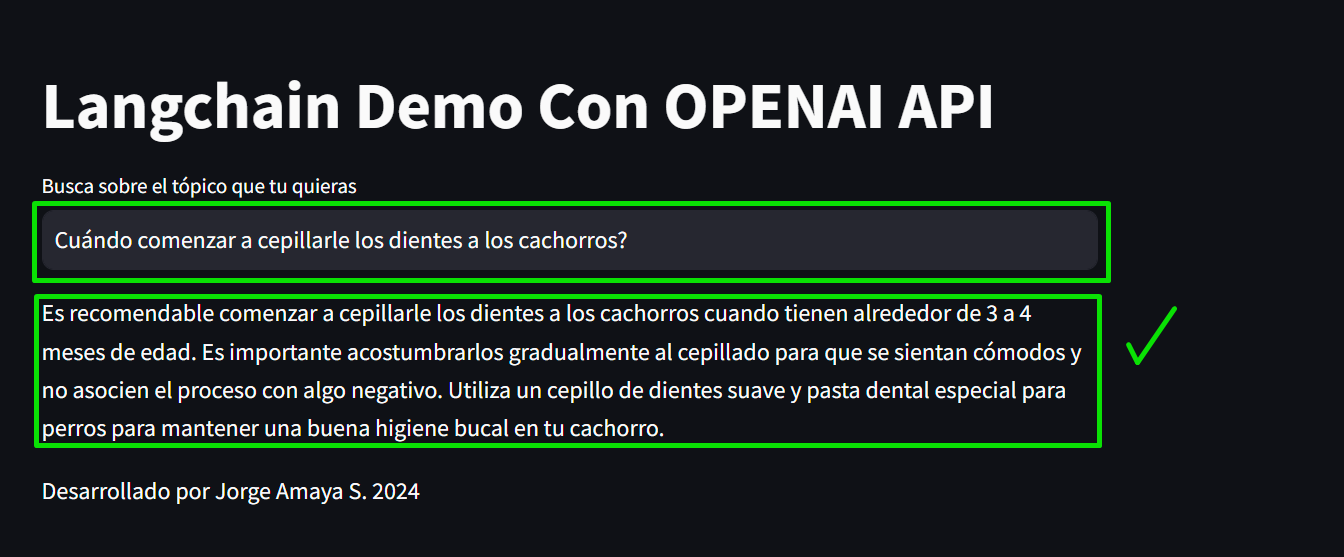

Network URL: http://192.168.100.30:8502

Let's check the page:

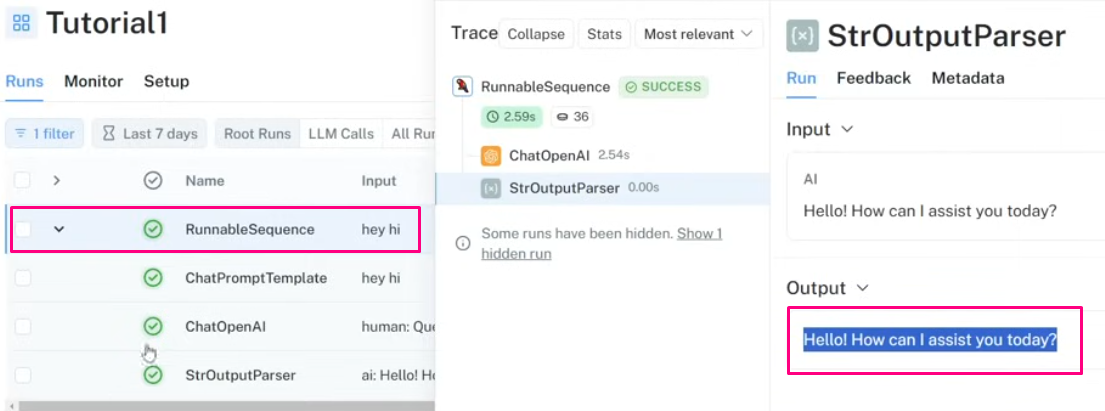

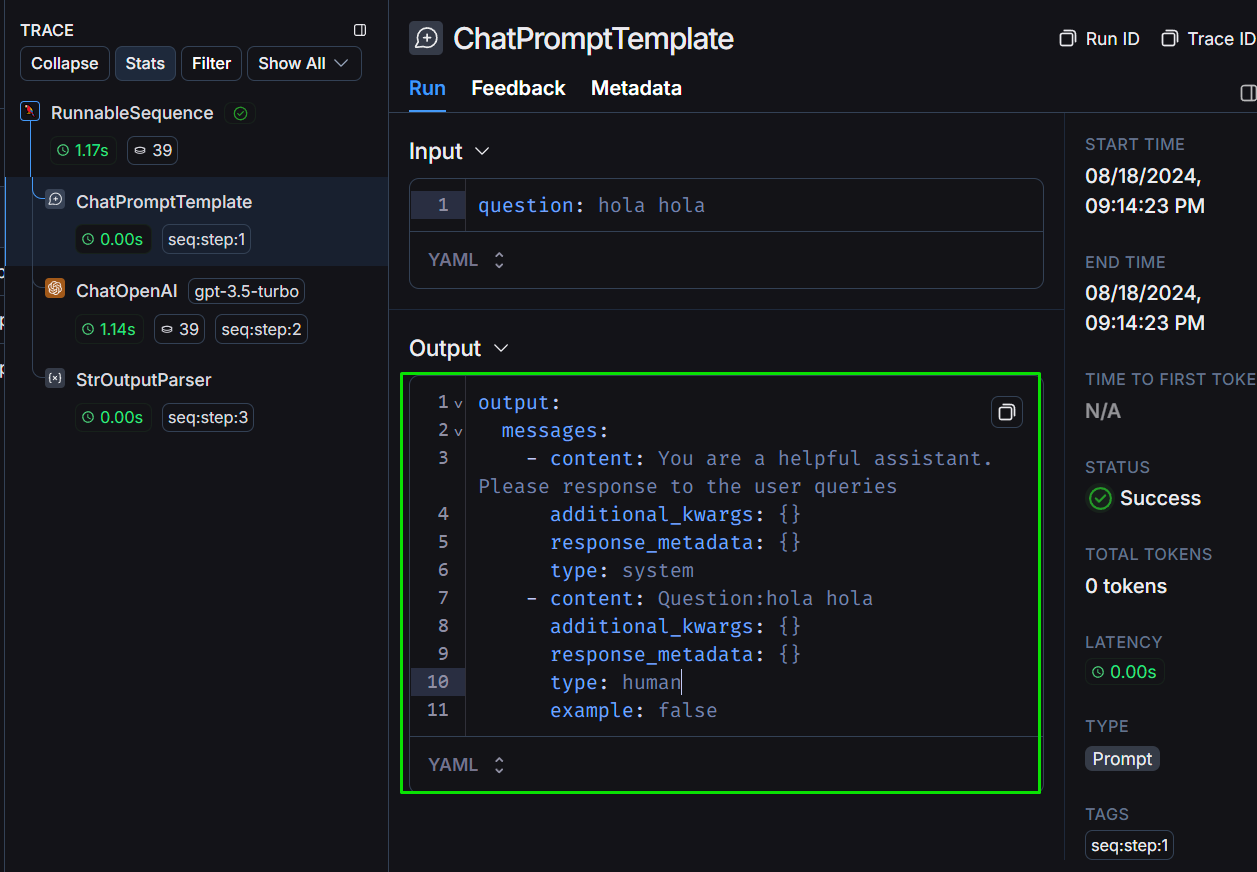

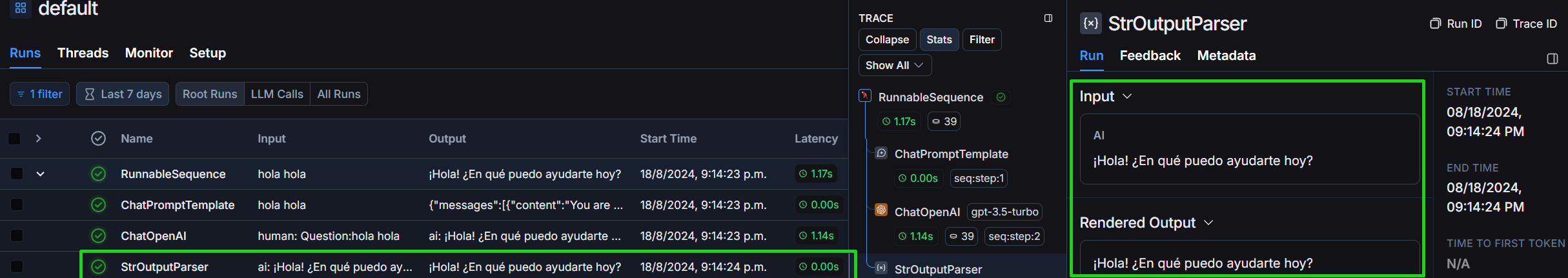

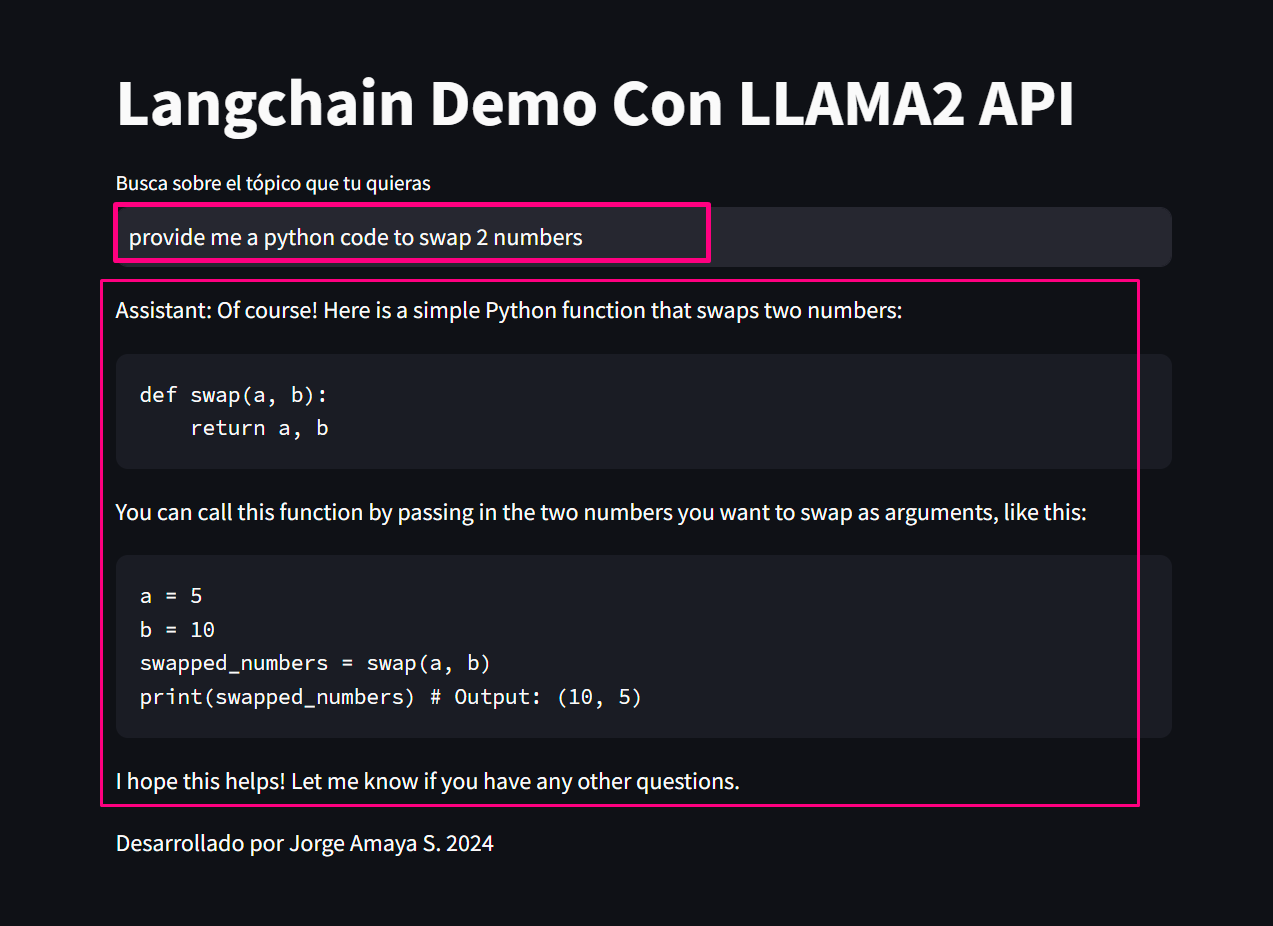

Let's review the project in the LangChain framework:

Let's look at the structure of the output as we define it:

We look at the StrOutputParser in detail:

We ask the model to "provide Python code that swaps two values" in order to evaluate the cost, token usage, and latency of answering the question:

*Give me Python code that swaps 2 values.*

    # Define the two values to swap
    a = 5
    b = 10

    print("Original values:")
    print("a =", a)
    print("b =", b)

    # Swap the values using a temporary variable
    temp = a
    a = b
    b = temp

    print("\nSwapped values:")
    print("a =", a)
    print("b =", b)

Finally, we run a general query:

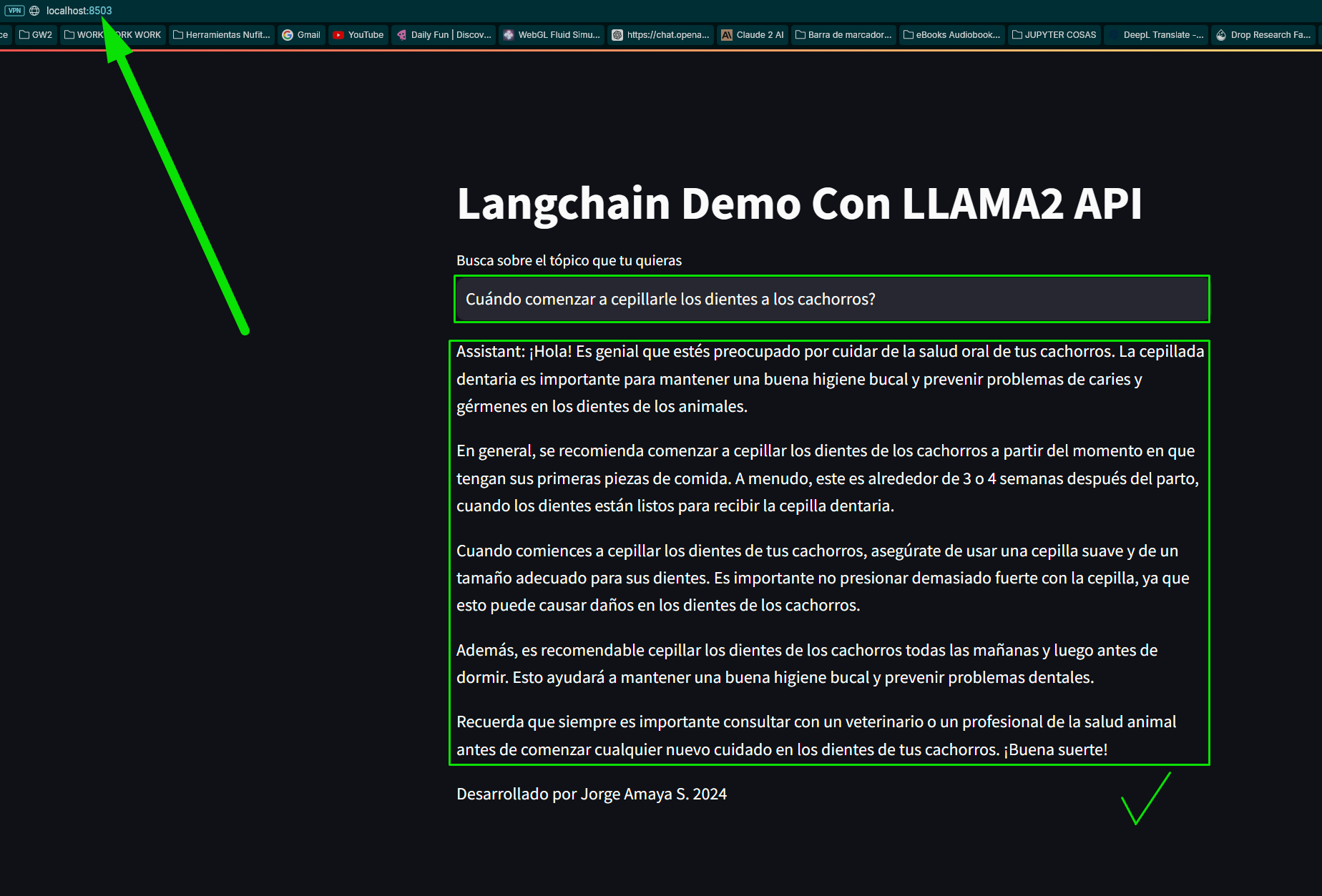

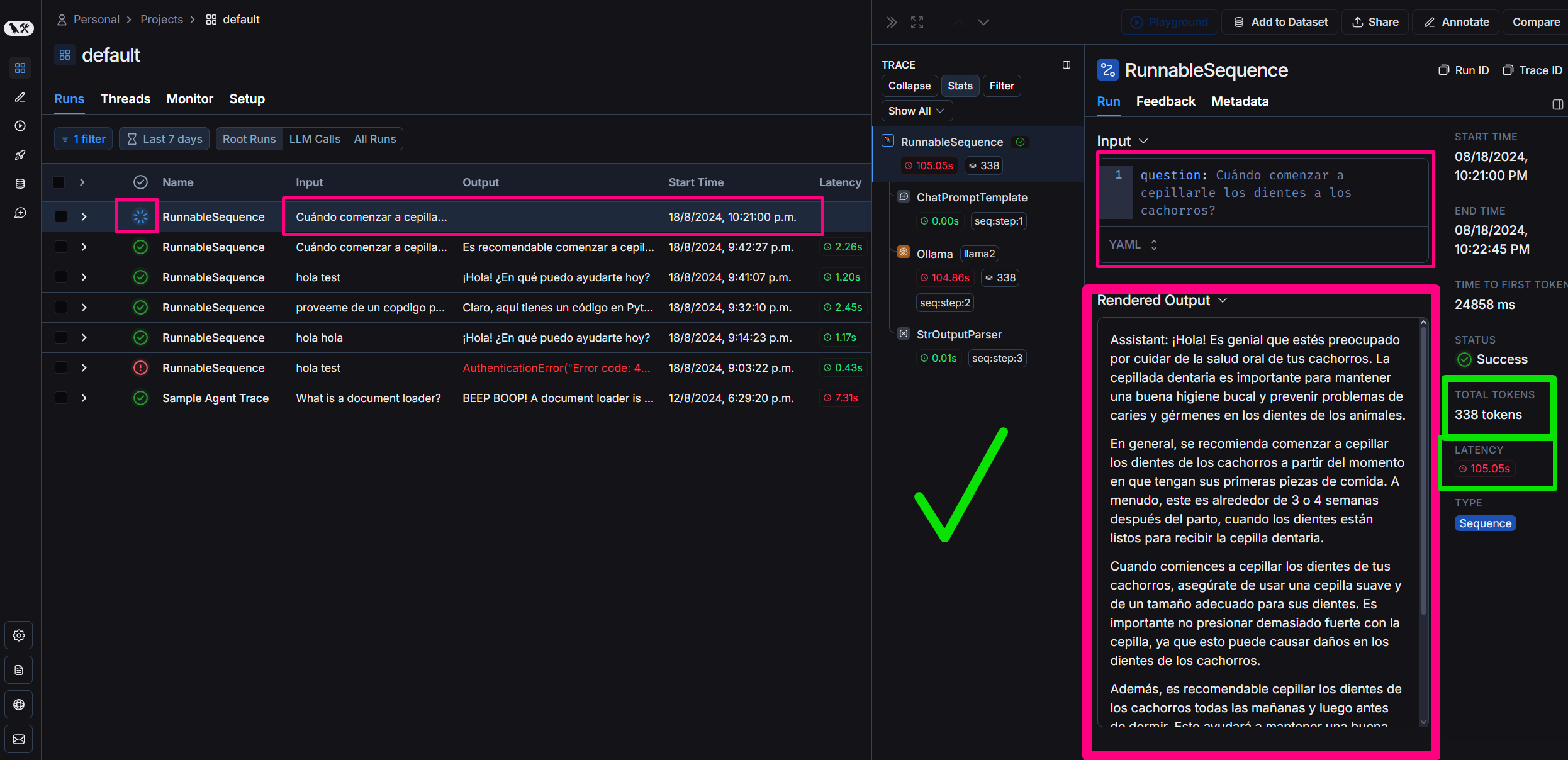

"question": "When to start brushing puppies' teeth?"

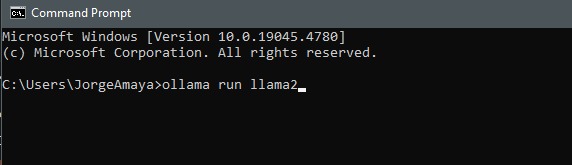

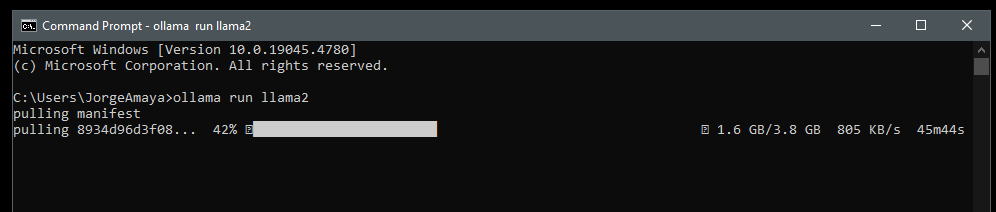

We load Ollama, specifically downloading Llama2 to our computer so that it runs in the local environment.

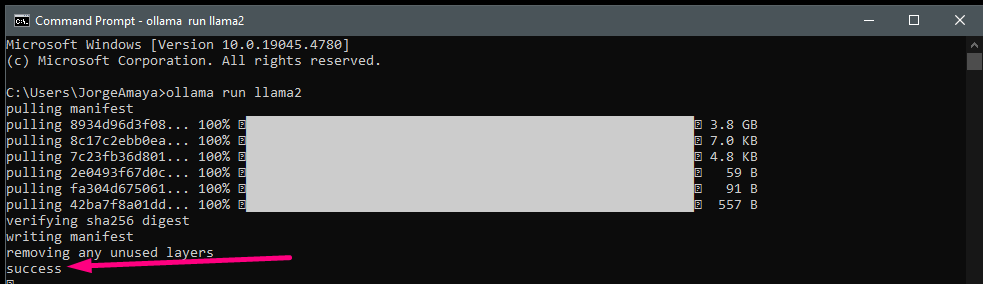

Open a command prompt (cmd) and run:

    ollama run llama2

This downloads Llama2.

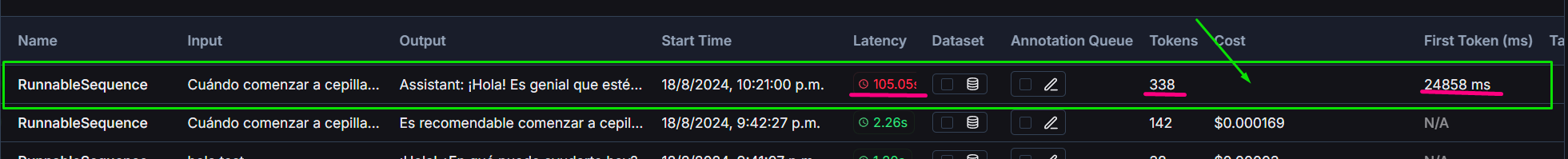
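Once the model has been downloaded, it can also be queried from Python through the local HTTP API that Ollama serves. The following is a minimal sketch using only the standard library. It assumes Ollama is running on its default port (11434), and the helper names `build_request` and `ask_llama` are illustrative rather than part of this project.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumes Ollama's default port


def build_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_llama(prompt: str, model: str = "llama2", timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text (requires Ollama running)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


if __name__ == "__main__":
    try:
        print(ask_llama("When to start brushing puppies' teeth?"))
    except OSError as err:  # URLError and timeouts are subclasses of OSError
        print("Could not reach Ollama:", err)
```

Setting `"stream": False` asks the server for a single JSON object instead of a stream of partial responses, which keeps the parsing to one `json.loads` call.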

The project includes a comparison between the response generated by OpenAI's GPT-3.5-turbo and the one from the locally run Llama2 model:

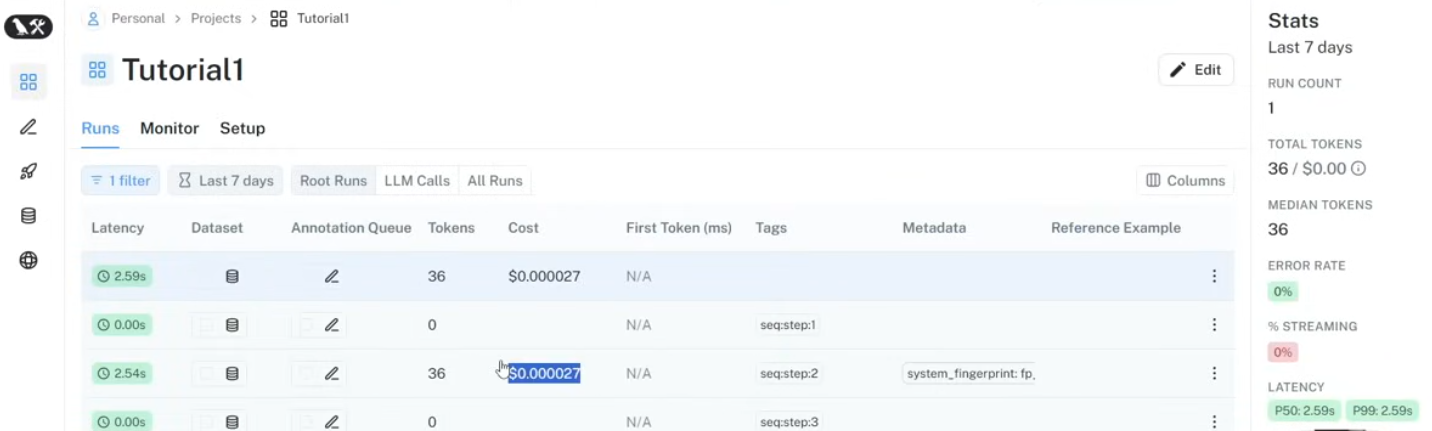
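A comparison like this can be made repeatable by sending the same prompt to each model and recording the latency. The sketch below is only an illustration under assumptions: the two lambdas are hypothetical stand-ins for real model calls, and the word count is a rough proxy for response length, not a token count.

```python
import time
from typing import Callable, Dict


def time_call(model_fn: Callable[[str], str], prompt: str) -> Dict[str, object]:
    """Run one model call and record its answer and wall-clock latency."""
    start = time.perf_counter()
    answer = model_fn(prompt)
    elapsed = time.perf_counter() - start
    return {"answer": answer, "seconds": elapsed, "words": len(answer.split())}


def compare(models: Dict[str, Callable[[str], str]], prompt: str) -> Dict[str, dict]:
    """Send the same prompt to every model and collect the measurements."""
    return {name: time_call(fn, prompt) for name, fn in models.items()}


if __name__ == "__main__":
    # Hypothetical stand-ins; replace with real calls to each model.
    stubs = {
        "gpt-3.5-turbo": lambda p: "stub answer from the hosted model",
        "llama2": lambda p: "stub answer from the local model",
    }
    results = compare(stubs, "When to start brushing puppies' teeth?")
    for name, result in results.items():
        print(f"{name}: {result['seconds']:.4f}s, {result['words']} words")
```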

We use LangSmith with LangChain to monitor the application.
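LangSmith tracing is usually switched on through environment variables that LangChain reads at run time. The sketch below shows one way to set them from Python. The helper `enable_langsmith_tracing` and the project name are placeholders, and the exact variables this repository uses are an assumption.

```python
import os


def enable_langsmith_tracing(project: str) -> None:
    """Turn on LangSmith tracing for LangChain runs in this process."""
    if not os.environ.get("LANGCHAIN_API_KEY"):
        raise RuntimeError("Set LANGCHAIN_API_KEY before enabling tracing")
    os.environ["LANGCHAIN_TRACING_V2"] = "true"
    os.environ["LANGCHAIN_PROJECT"] = project  # groups runs under one project


if __name__ == "__main__":
    try:
        enable_langsmith_tracing("chatbot-demo")  # placeholder project name
        print("Tracing enabled for project:", os.environ["LANGCHAIN_PROJECT"])
    except RuntimeError as err:
        print(err)
```

Call the helper before building the chain so that every run in the process is traced.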

Contributions to improve the chatbot or extend its capabilities are always welcome. Please submit pull requests or open issues for any improvements.

#### Do you want to learn? Send me a DM!

This project is open source and available under the MIT License.