David is a specialist in vinyl records. You can ask him for a recommendation or for additional information about any of the records in your Discogs collection. David will be happy to help you.

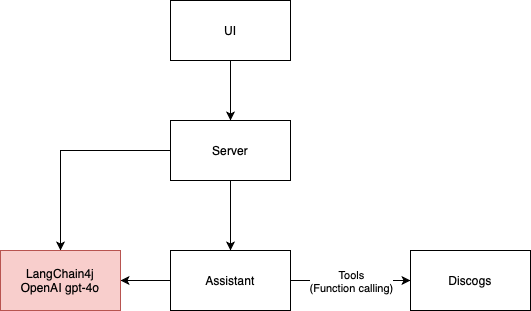

This LLM application is a simple example of a conversational agent that uses the Discogs API to provide information about vinyl records. It consists of 4 main building blocks:

It currently uses LangChain4j as the framework and GPT-4o as the AI assistant engine, but it can easily be adapted to other engines.

The application architecture shown in the diagram below is enforced by the ArchUnit framework through tests in the `ArchitectureTest` class.
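As a rough illustration of what such a rule checks, here is a plain-Java sketch of a layered-access rule with no ArchUnit dependency. The layer names `ui`, `assistant` and `discogs` and the allowed-access map are hypothetical and are not taken from `ArchitectureTest`. ArchUnit expresses this kind of constraint declaratively (for example with its layered-architecture rules) and derives the dependencies from the compiled classes rather than from a hand-written list.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.stream.Collectors;

// Plain-Java sketch of a layered-architecture rule. The layer names and the
// allowed-access map are hypothetical, not the project's ArchitectureTest rules.
class LayerRule {

    // Which other layers each layer may depend on (hypothetical layering).
    static final Map<String, Set<String>> ALLOWED = Map.of(
            "ui", Set.of("assistant"),
            "assistant", Set.of("discogs"),
            "discogs", Set.of());

    // A dependency edge between two layers, e.g. ui -> assistant.
    record Dependency(String from, String to) { }

    // Returns every cross-layer dependency that the allowed-access map forbids.
    static List<Dependency> violations(List<Dependency> deps) {
        return deps.stream()
                .filter(d -> !d.from().equals(d.to()))   // access within a layer is fine
                .filter(d -> !ALLOWED.getOrDefault(d.from(), Set.of()).contains(d.to()))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Dependency> deps = List.of(
                new Dependency("ui", "assistant"),
                new Dependency("assistant", "discogs"),
                new Dependency("ui", "discogs"));        // bypasses the assistant layer
        System.out.println(violations(deps));
    }
}
```

Running `main` reports only the dependency from `ui` to `discogs`, because that edge skips the assistant layer. A test would simply fail whenever `violations` is non-empty.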

The UI for this project was developed using the following prompt:

> I need the code for an HTML 5 page that contains an input field for a Discogs username
> and a text area for inserting prompts for the application to send to AI agents.
>
> Above the text area there should be the space in which the AI responses are displayed, in the ChatGPT style

The HTML5 code generated by GitHub Copilot provided the initial visuals for the UI, which I then modified to add the WebSocket connection and the logic for sending messages to and receiving messages from the AI assistant. I found this to be a very quick approach to prototyping. Later, I moved to more robust components from ant-design, including pro-chat.

I initially wanted to use llama3, but as of June 2024 the llama3 model has no support for tools. This means the AI assistant cannot collect the Discogs username and retrieve the record collection on its own. We moved to GPT-4o so that David can ask for the Discogs information himself, removing the need for any forms.
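Tool support lets the model request data on its own: the model decides to call a tool, and the application executes it. A hypothetical helper behind a "get collection" tool could limit itself to building the Discogs request URI. It would use the `/users/{username}/collection/folders/0/releases` endpoint (folder `0` is the collection's "All" folder) with `page` and `per_page` pagination. The class and method names are illustrative. In LangChain4j, a method like this would typically be exposed to the model through the `@Tool` annotation. The HTTP call, authentication and response parsing are deliberately left out.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper behind a "get collection" tool. It only builds the
// Discogs request URI, so it can be tested without network access.
class DiscogsCollectionRequest {

    private static final String BASE = "https://api.discogs.com";

    // Folder 0 is the Discogs "All" folder, i.e. the whole collection.
    static URI collectionReleases(String username, int page, int perPage) {
        if (username == null || username.isBlank()) {
            // The model must obtain the username from the conversation first.
            throw new IllegalArgumentException("Discogs username is required");
        }
        // Encode the username as a path segment (spaces become %20, not '+').
        String user = URLEncoder.encode(username.strip(), StandardCharsets.UTF_8)
                .replace("+", "%20");
        return URI.create(BASE + "/users/" + user
                + "/collection/folders/0/releases?page=" + page + "&per_page=" + perPage);
    }

    public static void main(String[] args) {
        System.out.println(collectionReleases("some_user", 1, 50));
    }
}
```

Rejecting a blank username makes the failure explicit. The assistant then has to ask the user for a username instead of calling the API with an empty value.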

Some LLMs are not as smart as others. Even though the Mistral 7B model supports tools, I was not able to get good answers from it; it would not even pass my integration tests. As a result, I was not able to run an LLM with tools free of charge.

Hallucinations are a pain. I am beginning my journey into RAG (retrieval-augmented generation) as a way to minimize them. Since David operates in the domain of music, Wikipedia is the first knowledge base that comes to mind for RAG. Maybe I can leverage the MediaWiki API to search for music pages that are relevant to the conversation. For now, I am using just Google Search, which helps sometimes but definitely not enough to justify the extra token cost it adds.
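Here is a minimal sketch of the retrieval half of such a RAG step. It assumes the English Wikipedia endpoint of the MediaWiki Action API, using its full-text search parameters (`action=query`, `list=search`, `srsearch`, `srlimit`, `format=json`). The class name, the prompt template and the choice to pass search snippets as numbered passages are illustrative assumptions. Fetching the URI and parsing the JSON response are left out.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.List;

// Sketch of a possible retrieval step via the MediaWiki search API.
// Helper names and the prompt layout are hypothetical.
class WikiRetrieval {

    // Builds an English Wikipedia full-text search request with a JSON response.
    static URI searchUri(String query, int limit) {
        String q = URLEncoder.encode(query, StandardCharsets.UTF_8);
        return URI.create("https://en.wikipedia.org/w/api.php"
                + "?action=query&list=search&format=json"
                + "&srlimit=" + limit + "&srsearch=" + q);
    }

    // Prepends retrieved passages to the user's question so the model can
    // ground its answer in them instead of relying on its own memory.
    static String augment(String question, List<String> passages) {
        StringBuilder sb = new StringBuilder("Answer using only the context below.\n");
        for (int i = 0; i < passages.size(); i++) {
            sb.append('[').append(i + 1).append("] ").append(passages.get(i)).append('\n');
        }
        return sb.append("Question: ").append(question).toString();
    }

    public static void main(String[] args) {
        System.out.println(searchUri("Kind of Blue Miles Davis", 3));
        System.out.println(augment("Which label released Kind of Blue?",
                List.of("Kind of Blue is a 1959 album by Miles Davis.")));
    }
}
```

Retrieval and prompt assembly are pure functions here, so they can be unit tested without spending any tokens.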

Testing the LLM application was a challenge. I wrote more integration tests than usual, which slowed down the development cycle. Also, the probabilistic nature of the AI assistant makes it hard to test the application deterministically.

In the LLM world, unit tests involve prompting an AI model instead of just calling a unit of code. When using a cloud-based model, every test run has a cost. I also experimented with using a second AI agent to help me assert results from the main AI. It is a promising approach because it allows semantic assertions rather than just string processing. The tradeoff is that it also generates cost and compounds the risk of probabilistic errors introduced by LLMs.
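One way to live with non-determinism is to treat each check as a pass rate over several runs instead of a single yes/no result. The sketch below is an assumption, not the project's test code. The assistant and the judge are plain `Function` and `BiPredicate` stand-ins. In a real test, the assistant would be the GPT-4o-backed agent, and the judge would be a second model asked whether the answer meets the stated expectation. The names, the number of runs and the 0.8 threshold are illustrative.

```java
import java.util.function.BiPredicate;
import java.util.function.Function;

// Sketch of a statistical check for a non-deterministic assistant: ask the
// same question several times and require a minimum pass rate. In practice
// both functions would call an LLM, and every call adds token cost.
class SemanticCheck {

    static double passRate(Function<String, String> assistant,
                           BiPredicate<String, String> judge,
                           String prompt, String expectation, int runs) {
        if (runs <= 0) {
            throw new IllegalArgumentException("runs must be positive");
        }
        int passed = 0;
        for (int i = 0; i < runs; i++) {
            String answer = assistant.apply(prompt);
            if (judge.test(answer, expectation)) {   // semantic judgement in a real setup
                passed++;
            }
        }
        return (double) passed / runs;
    }

    public static void main(String[] args) {
        // Stand-ins: a canned assistant and a keyword judge instead of a second LLM.
        Function<String, String> assistant = p -> "Try Kind of Blue by Miles Davis.";
        BiPredicate<String, String> judge = (answer, exp) -> answer.contains(exp);
        double rate = passRate(assistant, judge, "Recommend a jazz record", "Miles Davis", 5);
        if (rate < 0.8) {
            throw new AssertionError("pass rate too low: " + rate);
        }
        System.out.println("pass rate: " + rate);
    }
}
```

Each extra run multiplies the token cost, so the number of runs is a direct tradeoff between confidence and expense.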

You must have a valid OpenAI API key to run this application.

Run `./gradlew bootRun` to start the application, then open http://localhost:8080 in your browser to interact with the AI assistant.