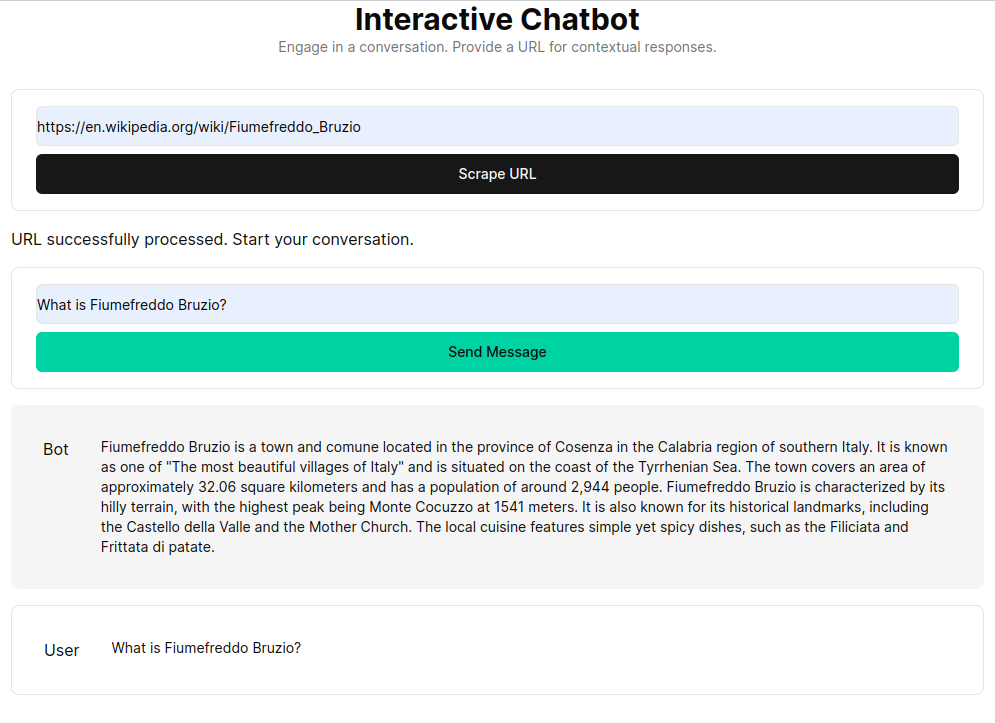

This application is a chatbot built with Next.js for the frontend and FastAPI for the backend. It uses LangChain to answer questions about the content of web pages.

The application integrates the Python/FastAPI server into the Next.js app under the /api/ route. This is achieved through next.config.js rewrites, directing any /api/:path* requests to the FastAPI server located in the /api folder. Locally, FastAPI runs on 127.0.0.1:8000, while in production, it operates as serverless functions on Vercel.
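The routing described above can be pictured as a small path-mapping function. This is only an illustrative sketch in Python, not the project's `next.config.js`: the helper name `rewrite_api_path` and the `development` flag are hypothetical, and the production branch assumes the request simply stays on the same origin, where Vercel serves it from the serverless function.

```python
from typing import Optional

# Address of the local FastAPI server, as stated in the README.
LOCAL_BACKEND = "http://127.0.0.1:8000"


def rewrite_api_path(path: str, development: bool) -> Optional[str]:
    """Return where a request path is forwarded, or None if no rewrite applies.

    Hypothetical helper that mimics a /api/:path* rewrite rule.
    """
    # Only /api and anything below it is forwarded to the backend.
    if path != "/api" and not path.startswith("/api/"):
        return None
    if development:
        # Locally, the request is proxied to the FastAPI dev server.
        return LOCAL_BACKEND + path
    # Assumption: in production the path is unchanged and handled
    # by the serverless function deployed on the same origin.
    return path


print(rewrite_api_path("/api/chat", development=True))
print(rewrite_api_path("/about", development=True))
```

Requests outside `/api` return `None` here, meaning Next.js handles them as ordinary pages.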

npm install

Create a .env file with your OpenAI API key:

OPENAI_API_KEY=[your-openai-api-key]

npm run dev

For backend-only testing:

conda create --name nextjs-fastapi-your-chat python=3.10

conda activate nextjs-fastapi-your-chat

pip install -r requirements.txt

uvicorn api.index:app --reload

Options for preserving chat history include:

RAG (Retrieval Augmented Generation) enhances language models with context retrieved from a custom knowledge base. The process involves fetching HTML documents, splitting them into chunks, and vectorizing these chunks using embedding models like OpenAI's. This vectorized data forms a vector store, enabling semantic searches based on user queries. The retrieved relevant chunks are then used as context for the language model, forming a comprehensive response to user inquiries.
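The RAG steps above (split, vectorize, store, search, build context) can be sketched with the standard library alone. This is a toy illustration, not the project's code: word-count vectors stand in for a real embedding model such as OpenAI's, a plain list stands in for a vector store, and every function name below is hypothetical.

```python
import math
import re
from collections import Counter


def split_into_chunks(text, chunk_size=8):
    """Split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_vector_store(chunks):
    """Pair each chunk with its vector; a list stands in for a vector database."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(store, query, k=2):
    """Return the k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(store, key=lambda item: cosine_similarity(query_vector, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(context_chunks, question):
    """Assemble the retrieved chunks and the question into a prompt for the model."""
    context = "\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


chunks = [
    "FastAPI serves the backend routes",
    "Next.js renders the chat interface",
    "Embeddings map text to vectors",
]
store = build_vector_store(chunks)
question = "Which framework renders the chat interface?"
print(build_prompt(retrieve(store, question, k=1), question))
```

A real embedding model captures meaning rather than exact word overlap, so it would also match paraphrased queries that this toy version misses.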

The get_vectorstore_from_url function extracts and processes text from a given URL, while get_context_retriever_chain forms a chain that retrieves context relevant to the entire conversation history rather than only the latest message. Retrieving against the full history helps keep responses grounded in the source content and consistent with earlier turns.
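To illustrate why retrieval should consider the whole conversation, here is a minimal standard-library sketch. It is not the body of get_context_retriever_chain: the real chain presumably relies on LangChain and a language model to form the search query, whereas this stand-in simply concatenates recent user messages and ranks chunks by word overlap. The names `build_search_query` and `retrieve_with_history` are hypothetical.

```python
def build_search_query(chat_history, latest_question, max_turns=3):
    """Combine recent user messages with the latest question.

    chat_history is a list of (role, text) tuples.
    """
    recent_user = [text for role, text in chat_history if role == "user"][-max_turns:]
    return " ".join(recent_user + [latest_question])


def retrieve_with_history(chunks, chat_history, latest_question, k=1):
    """Rank chunks by word overlap with the history-aware query (toy scoring)."""
    query_terms = set(build_search_query(chat_history, latest_question).lower().split())

    def overlap(chunk):
        return len(query_terms & set(chunk.lower().split()))

    return sorted(chunks, key=overlap, reverse=True)[:k]


chunks = [
    "vercel deploys the fastapi backend as serverless functions",
    "langchain splits documents into chunks",
]
history = [
    ("user", "how is the fastapi backend hosted"),
    ("assistant", "on vercel"),
]
# "is it serverless" alone does not say what "it" is; the history supplies that.
print(retrieve_with_history(chunks, history, "is it serverless"))
```

The follow-up question on its own shares only one word with the relevant chunk; adding the earlier user turn supplies the terms that identify the topic.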