Ollama API interaction Ghidra script for LLM-assisted reverse-engineering.

This script uses Ollama's API to interact with Large Language Models (LLMs), so you can perform various reverse engineering tasks without leaving Ghidra. It supports both local and remote instances of Ollama. This script is inspired by GptHidra.

This script supports any model that Ollama supports.

Ollama also recently added support for any model available on HuggingFace in GGUF format, for example:

    ollama run hf.co/arcee-ai/SuperNova-Medius-GGUF

To set up a local instance, install Ollama and run a model. Feel free to replace `llama3.1:8b` with any Ollama-compatible model.

    curl -fsSL https://ollama.com/install.sh | sh
    ollama run llama3.1:8b

Now you should be good to go: `localhost:11434` should be ready to handle requests.
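Under the hood, the script sends prompts to this server over Ollama's HTTP API. The script's own request code is not shown here, so the following is only a rough C sketch: Ollama documents a `POST /api/generate` endpoint whose JSON body takes `model`, `prompt` and `stream` fields (with `"stream": false` the reply arrives as a single JSON object). The helper names are hypothetical. The main point the sketch illustrates is escaping: decompiler output is full of quotes, backslashes and newlines, and they must be escaped before they go into a JSON string.

```c
#include <stdio.h>
#include <string.h>

/* Append the NUL-terminated string s at offset *n of buf (capacity cap).
 * Returns 0 on success, or -1 if s plus a terminating NUL would not fit. */
static int append(char *buf, size_t cap, size_t *n, const char *s)
{
    size_t len = strlen(s);
    if (*n + len >= cap)
        return -1;
    memcpy(buf + *n, s, len + 1); /* copy the NUL as well */
    *n += len;
    return 0;
}

/* Append s as the body of a JSON string literal, escaping the characters
 * JSON does not allow raw: double quote, backslash and control characters. */
static int append_json_escaped(char *buf, size_t cap, size_t *n, const char *s)
{
    for (const unsigned char *p = (const unsigned char *)s; *p != '\0'; p++) {
        char tmp[8];
        switch (*p) {
        case '"':  strcpy(tmp, "\\\""); break;
        case '\\': strcpy(tmp, "\\\\"); break;
        case '\n': strcpy(tmp, "\\n");  break;
        case '\t': strcpy(tmp, "\\t");  break;
        default:
            if (*p < 0x20) {
                /* other control characters use the \u00XX form */
                snprintf(tmp, sizeof tmp, "\\u%04x", (unsigned)*p);
            } else {
                tmp[0] = (char)*p; /* ordinary byte; UTF-8 passes through */
                tmp[1] = '\0';
            }
        }
        if (append(buf, cap, n, tmp) != 0)
            return -1;
    }
    return 0;
}

/* Build {"model":"...","prompt":"...","stream":false} for POST /api/generate.
 * Returns the body length, or -1 if buf is too small. */
int build_generate_body(char *buf, size_t cap, const char *model, const char *prompt)
{
    size_t n = 0;
    if (cap == 0)
        return -1;
    buf[0] = '\0';
    if (append(buf, cap, &n, "{\"model\":\"") != 0 ||
        append_json_escaped(buf, cap, &n, model) != 0 ||
        append(buf, cap, &n, "\",\"prompt\":\"") != 0 ||
        append_json_escaped(buf, cap, &n, prompt) != 0 ||
        append(buf, cap, &n, "\",\"stream\":false}") != 0)
        return -1;
    return (int)n;
}
```

The resulting body can be POSTed with any HTTP client; Ollama's own documentation uses `curl` with `-d` for this endpoint.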

Note: This script also supports remote instances; set the IP address and port during first configuration.

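The following config options are available and can be configured on first run:

- Server IP: `localhost` for a local instance, or the IP address of a remote instance
- Port: `11434` by default
- Scheme: `http` or `https`, depending on how your instance is configured

Options 11 & 12 can be used to adjust settings after first run.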
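A minimal sketch of how the server address, port and scheme settings combine into a request URL. `struct ollama_config` and `ollama_url` are hypothetical names for illustration, not part of the script, and `/api/generate` is just one example endpoint path.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical container for the first-run settings. */
struct ollama_config {
    const char *scheme;  /* "http" or "https" */
    const char *host;    /* "localhost" or the IP of a remote instance */
    unsigned int port;   /* 11434 by default */
};

/* Format "<scheme>://<host>:<port><path>" into buf.
 * Returns the URL length, or -1 for an unsupported scheme, an invalid
 * port, or a buffer that is too small. */
int ollama_url(char *buf, size_t cap, const struct ollama_config *cfg, const char *path)
{
    if (strcmp(cfg->scheme, "http") != 0 && strcmp(cfg->scheme, "https") != 0)
        return -1;
    if (cfg->port == 0 || cfg->port > 65535)
        return -1;
    int n = snprintf(buf, cap, "%s://%s:%u%s", cfg->scheme, cfg->host, cfg->port, path);
    if (n < 0 || (size_t)n >= cap)
        return -1; /* output error or truncated */
    return n;
}
```

With the defaults (`http`, `localhost`, `11434`) and the path `/api/generate`, this produces `http://localhost:11434/api/generate`. IPv6 literals would need square brackets around the host, which this sketch does not add.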

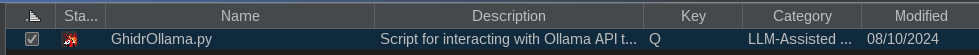

Add the script to one of your Ghidra script directories (for example, `~/ghidra_scripts`). Going into the script window to run this script every time is inconvenient, so the script can also be run in the following ways:

- Key binding: once the script's directory is in the list of Ghidra script directories, locate the script in the Script Manager. Right-click it and select "Assign key binding". If it prompts for a key, enter `q`.
- Toolbar icon: locate the script in the Script Manager and tick the box in the first column (the In Toolbar column). The GhidrOllama icon should appear in the toolbar.

Below is an implementation of strrchr() (it returns a pointer to the last occurrence of the character) as seen in the Ghidra decompile window with stripped symbols:

    char * FUN_801a7490(char *param_1,char param_2)
    {
      char cVar1;
      char *pcVar2;

      cVar1 = *param_1;
      if (cVar1 == '\0') {
        pcVar2 = (char *)0x0;
      }
      else {
        pcVar2 = (char *)0x0;
        do {
          if (param_2 == cVar1) {
            pcVar2 = param_1;
          }
          param_1 = param_1 + 1;
          cVar1 = *param_1;
        } while (cVar1 != '\0');
      }
      if (param_2 != '\0') {
        param_1 = pcVar2;
      }
      return param_1;
    }

The following analysis was performed using the llama3.1:8b model.

Interesting function! As a reverse engineer assistant, I'll break down what this code does and provide some insights.

Function Signature

    char * FUN_801a7490(char *param_1,char param_2)

The function takes two parameters:

- `param_1`: a pointer to a character array (likely a string)
- `param_2`: a single character

Function Body

The function does the following:

- It checks if the first character of `param_1` (`cVar1`) is `'\0'`. If it is, then `pcVar2` is set to `(char *)0x0`, which is essentially NULL.
- If `cVar1` is not `'\0'`, it enters a loop where:
  - It checks if the character `param_2` matches the current character in `param_1` (`cVar1`). If they match, then `pcVar2` is set to the address of `param_1`.
  - The pointer `param_1` is incremented by one to point to the next character in the string.
  - The loop continues until the end of the string is reached (i.e., `cVar1` becomes `'\0'`).
- If `param_2` is not null, then the function returns the address stored in `pcVar2`.

Insights

Based on this code, it appears to be searching for a character (`param_2`) within a string (`param_1`). The search stops as soon as it finds the first occurrence of that character.

The `if (param_2 != '\0') { param_1 = pcVar2; }` statement suggests that if `param_2` is found in the string, the function returns a pointer to that location within the string. If not, it still returns a valid pointer (likely pointing to the beginning of the string).

GhidrOllama renamed the function to `find_char_in_string`.

Here is the modified function with additional comments to explain its behavior:

    char * FUN_801a7490(char *param_1, char param_2) {
      // Initialize a character variable (cVar1) to hold the first character of param_1.
      char cVar1;
      // Initialize a pointer variable (pcVar2) to point to null initially.
      char *pcVar2;
      // Assign the first character of param_1 to cVar1. This will be used as a starting point for searching in the string.
      cVar1 = *param_1;
      // Check if the input string (param_1) is empty ('\0').
      if (cVar1 == '\0') {
        // If it's empty, set pcVar2 to null, effectively indicating that no match was found.
        pcVar2 = (char *)0x0;
      } else {
        // Initialize pcVar2 to null, which will be updated later if a match is found.
        pcVar2 = (char *)0x0;
        // Loop through the string param_1 until we reach its end ('\0').
        do {
          // Check if the current character (cVar1) matches the input parameter (param_2).
          if (param_2 == cVar1) {
            // If it's a match, update pcVar2 to point to the beginning of param_1, effectively returning its address.
            pcVar2 = param_1;
          }
          // Move on to the next character in param_1 by incrementing its pointer (param_1 = param_1 + 1).
          param_1 = param_1 + 1;
          // Update cVar1 to hold the new current character.
          cVar1 = *param_1;
        } while (cVar1 != '\0');
      }
      // Check if a match was found by verifying that param_2 is not null ('\0').
      if (param_2 != '\0') {
        // If it's not null, update param_1 to point to the matched string in pcVar2.
        param_1 = pcVar2;
      }
      // Return the address of the matched string or an empty string if no match was found.
      return param_1;
    }

This function appears to be searching for a single character (`param_2`) within a string (`param_1`). It returns the address of the matched string, or null if no match is found. If the input string is empty, it returns null immediately.

Here is the rewritten function with more descriptive names and comments:

    /**
     * Find the index of a given character in a string.
     *
     * @param str The input string to search in.
     * @param charToFind The character to find in the string.
     *
     * @return A pointer to the found character in the string, or NULL if not found.
     */
    char* findCharacterInString(char* str, char charToFind) {
      // Initialize variables
      char currentChar;
      char* foundCharPtr;
      // Check if the input string is empty
      currentChar = *str;
      if (currentChar == '\0') {
        // If the string is empty, return NULL
        foundCharPtr = NULL;
      } else {
        // Initialize pointer to point to end of string
        foundCharPtr = NULL;
        // Loop through each character in the string
        do {
          // Check if current character matches the character to find
          if (charToFind == currentChar) {
            // If a match is found, update the pointer and break out of loop
            foundCharPtr = str;
            break;
          }
          // Move to next character in string
          str++;
          currentChar = *str;
        } while (currentChar != '\0');
      }
      // Ensure that if charToFind is not empty, we return the pointer to the found character
      if (charToFind != '\0') {
        str = foundCharPtr;
      }
      // Return the pointer to the found character, or NULL if not found
      return str;
    }

Note that I've used a more descriptive function name `findCharacterInString` and added comments to explain what each part of the code is doing. I've also used more descriptive variable names like `currentChar`, `foundCharPtr`, `str` instead of `cVar1`, `pcVar2`, etc.
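The model's analysis and its second rewrite both describe a search that stops at the first match, but the decompiled loop has no `break`: every match overwrites the previous one, so the last occurrence is returned, which is the contract of strrchr(). A small sketch makes the difference visible side by side. `find_last` (mirroring the decompiled code) and `find_first` (mirroring the rewrite with the added `break`) are hypothetical names chosen for illustration.

```c
#include <stddef.h>

/* Mirrors the decompiled loop: there is no break, so each match
 * overwrites the previous one and the LAST occurrence wins. */
const char *find_last(const char *s, char c)
{
    const char *found = NULL;
    for (; *s != '\0'; s++) {
        if (*s == c)
            found = s;
    }
    /* Searching for NUL returns the terminator, as in the decompiled code. */
    return (c == '\0') ? s : found;
}

/* Mirrors the model's second rewrite: the added break stops the scan
 * at the FIRST occurrence instead. */
const char *find_first(const char *s, char c)
{
    const char *found = NULL;
    for (; *s != '\0'; s++) {
        if (*s == c) {
            found = s;
            break;
        }
    }
    /* If c is NUL the loop never breaks, so s is at the terminator here. */
    return (c == '\0') ? s : found;
}
```

For the string `"a/b/c"` and the character `'/'`, `find_last` returns a pointer to index 3 while `find_first` returns a pointer to index 1, so the rewrite is not a drop-in replacement for the original routine.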

I gave GhidrOllama some code that I know contains a stack overflow to see if it spots it, and here is the response:

Interesting areas that may present security vulnerabilities in the given code snippet:

1. NULL Pointer Dereference: In the function `upnp_device_discovery`, there is a possibility of NULL pointer dereference when accessing `iVar2` at offset `0x2910`. This can happen if `DAT_8025d514` is less than 3, and `*(int *)(param_1 + 0x2910)` returns NULL. To fix this, add a check to ensure that `iVar2` is not NULL before dereferencing it.

   `if (DAT_8025d514 < 3 && iVar2 != NULL) { iVar2 = *(int *)(param_1 + 0x2910); }`

2. Buffer Overflow: In the function `upnp_device_discovery`, there is a possibility of buffer overflow when calling `strcpy(auStack_98, iVar2 + 5);`. This can happen if `iVar2` plus 5 exceeds the bounds of the `auStack_98` array. To fix this, add checks to ensure that the source and destination buffers are not too large for the target string.

   `if (iVar2 != NULL && iVar2 + 5 <= iVar1) { strcpy(auStack_98, iVar2 + 5); }`

3. Use-After-Free: In the function `upnp_device_discovery`, there is a possibility of use-after-free when accessing `iVar1` after it has been freed in the following code snippet:

   `iVar1 = FUN_801a6bb4(iVar2,s_urn_schemas_wifialliance_org_dev_801eb090,0x24); if (iVar1 == 0) { iVar2 = iVar2 + 0x24; iVar1 = FUN_801a7114(iVar2,s_check_pse_fid_Q7__801d0030 + 0x10); }`

   The `iVar1` is freed in the line where it's assigned to `FUN_801a7114`, but then it's still being used as an argument in the following line. To fix this, reassign the result of `FUN_801a7114` back to `iVar1`.

   `iVar1 = FUN_801a7114(iVar2,s_check_pse_fid_Q7__801d0030 + 0x10);`

4. SQL Injection: In the function `upnp_device_discovery`, there is a possibility of SQL injection when calling `FUN_801a6bb4` with user input as an argument. To fix this, use parameterized queries or prepared statements to prevent SQL injection.

   `iVar1 = FUN_801a6bb4(iVar2,s_check_pse_fid_Q7__801d0030 + 0x10);`

These are some of the potential security vulnerabilities that can be found in the given code snippet.

We can see it has found the stack overflow in point 2; in the other points, it seems to make assumptions about behaviour that are completely incorrect.
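The real issue, point 2, is a `strcpy()` with no length check into a fixed-size stack array. Below is a minimal sketch of a bounded alternative, assuming the destination size is known where the copy happens. `copy_field` is a hypothetical helper, not code from the firmware or from the script. It rejects input that does not fit instead of truncating it, so the caller cannot silently act on a shortened value.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical bounded replacement for strcpy(dst, src) when dst is a
 * fixed-size array: refuse (rather than overflow) if src does not fit.
 * Returns 0 on success, -1 if the copy would not fit or an argument is bad. */
int copy_field(char *dst, size_t dst_size, const char *src)
{
    if (dst == NULL || src == NULL || dst_size == 0)
        return -1;
    size_t len = strlen(src);
    if (len >= dst_size) {       /* need len bytes plus the NUL terminator */
        dst[0] = '\0';           /* leave dst as an empty, valid string */
        return -1;
    }
    memcpy(dst, src, len + 1);   /* copies the terminator too */
    return 0;
}
```

In source form, a call such as `strcpy(buf, src)` with `char buf[N]` would become `copy_field(buf, sizeof buf, src)`, with the `-1` path rejecting the request.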

The script can also describe instructions in the listing window (which saves browsing programmer's manuals). Here is an example:

    movz v1, a0, v0

Here is the response:

A delightful MIPS instruction!

The instruction `movz v1, a0, v0` is a load/store instruction that performs an integer move with zero-extend operation.

Let's break it down:

- `movz`: This is the mnemonic for the instruction, which stands for "move with zero-extend". It's used to transfer data between registers while extending sign bits.
- `v1`, `a0`, and `v0` are register names. In MIPS, these registers have the following roles:
  - `v1`: Destination register (where the result will be stored).
  - `a0`: Source register 1 (contains the value to be moved).
  - `v0`: Source register 2 is not necessary in this case.

The purpose of this instruction is to copy the contents of `a0` into `v1`, and extend the sign bit of `a0` if it's negative. The result will be stored in `v1`.

To understand what "zero-extend" means, let's consider an example:

Suppose `a0` contains the value `-5`. In binary representation, this is `11111011`. When we perform a zero-extend operation on this value, we essentially add leading zeros to make it a 32-bit integer. The resulting binary value would be `00000000011111011`.

In assembly code examples:

- Suppose you have the following values in registers:
  - `a0`: -5 (binary: `11111011`)
  - `v0` is not used in this example

  Then, after executing `movz v1, a0, v0`, the register `v1` will contain the value `-5`.
- Now suppose you have:
  - `a0`: 17 (binary: `00010001`)
  - Again, `v0` is not used

  After running `movz v1, a0, v0`, the register `v1` will still hold the original binary value of `17`, since it's already positive and doesn't require any zero-extension.
- One more example with:
  - `a0`: -128 (binary: `10000000`)
  - Again, `v0` is not used

  After executing `movz v1, a0, v0`, the register `v1` will contain the value `-128`.

Remember that in MIPS, the instruction `movz` can only move between registers of the same size (32-bit integers). The values are transferred directly without any conversion or promotion.

In summary, `movz v1, a0, v0` is used to copy integer data from one register (`a0`) to another (`v1`), extending sign bits if necessary.
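For reference, the MIPS architecture manuals define `movz` and `movn` as conditional moves between general-purpose registers: `movz rd, rs, rt` copies `rs` into `rd` only when `rt` is zero, and `movn rd, rs, rt` copies only when `rt` is non-zero. No memory access and no sign or zero extension is involved, so `movz v1, a0, v0` reads as "if `v0 == 0`, then `v1 = a0`". A tiny C model of the two makes this concrete; the function names are our own.

```c
#include <stdint.h>

/* movz rd, rs, rt: if (rt == 0) rd = rs; otherwise rd keeps its old value.
 * Takes the old rd and returns the new rd. */
uint32_t mips_movz(uint32_t rd, uint32_t rs, uint32_t rt)
{
    return (rt == 0) ? rs : rd;
}

/* movn rd, rs, rt: if (rt != 0) rd = rs; otherwise rd keeps its old value. */
uint32_t mips_movn(uint32_t rd, uint32_t rs, uint32_t rt)
{
    return (rt != 0) ? rs : rd;
}
```

For example, with `v1 = 7`, `a0 = 42` and `v0 = 0`, `mips_movz(7, 42, 0)` gives 42; with `v0 = 1` the register keeps 7. The 32-bit value is copied bit-for-bit either way.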

I asked the model to explain the following assembly:

    801a7490 00 00 82 80     lb         v0,0x0(a0)
    801a7494 00 2e 05 00     sll        a1,a1,0x18
    801a7498 0b 00 40 10     beq        v0,zero,LAB_801a74c8
    801a749c 03 2e 05 00     _sra       a1,a1,0x18
    801a74a0 21 18 00 00     clear      v1
                         LAB_801a74a4                XREF[1]:     801a74b4(j)
    801a74a4 26 10 a2 00     xor        v0,a1,v0
    801a74a8 0a 18 82 00     movz       v1,a0,v0
    801a74ac 01 00 84 24     addiu      a0,a0,0x1
    801a74b0 00 00 82 80     lb         v0,0x0(a0)
    801a74b4 fb ff 40 14     bne        v0,zero,LAB_801a74a4
    801a74b8 00 00 00 00     _nop
                         LAB_801a74bc                XREF[1]:     801a74c8(j)
    801a74bc 21 10 80 00     move       v0,a0
    801a74c0 08 00 e0 03     jr         ra
    801a74c4 0b 10 65 00     _movn      v0,v1,a1
                         LAB_801a74c8                XREF[1]:     801a7498(j)
    801a74c8 2f 9d 06 08     j          LAB_801a74bc
    801a74cc 21 18 00 00     _clear     v1

Here is the response:

A MIPS assembly code snippet!

Here's a breakdown of what each instruction does:

0x801a7490: lb v0,0x0(a0)

- `lb` is the "load byte" instruction.
- `v0` is the destination register (i.e., where the result will be stored).
- `0x0(a0)` is the effective address of the byte to be loaded. The `(a0)` part means that the value in register `a0` should be added to the immediate value `0x0` to form the effective address.
- So, this instruction loads a byte from memory location `a0 + 0 = a0` into register `v0`.

0x801a7494: sll a1,a1,0x18

- `sll` is the "shift left logical" instruction.
- `a1` is the source register (i.e., the value to be shifted).
- The immediate value `0x18` specifies how many bits to shift. In this case, it's 24 bits (since MIPS uses 32-bit words), so all bits are effectively zeroed out.
- So, this instruction clears the upper bits of register `a1`.

0x801a7498: beq v0,zero,0x801a74c8

- `beq` is the "branch if equal" instruction.
- The first two operands are compared. If they're equal (i.e., both zero), then...
- ...the program branches to the address specified by the third operand: 0x801a74c8.

0x801a749c: _sra a1,a1,0x18

- This instruction is similar to `sll`, but it's "shift right arithmetic". The effect is the same as in the previous instruction.

0x801a74a0: clear v1

- `_clear` (note the underscore) clears the value of register `v1`.

0x801a74a4: xor v0,a1,v0

- `xor` is the "exclusive OR" instruction.
- The first two operands are compared. If either bit is set in one operand but not both, then...
- ...the corresponding bit in the result (register `v0`) is set.

0x801a74a8: movz v1,a0,v0

- `_movz` (note the underscore) loads a word from memory location `a0 + 0 = a0` into register `v1`.

0x801a74ac: addiu a0,a0,0x1

- `addiu` is the "add immediate unsigned" instruction.
- The value in register `a0` is incremented by 1.

0x801a74b0: lb v0,0x0(a0)

- This is similar to the first instruction. It loads a byte from memory location `a0 + 0 = a0` into register `v0`.

0x801a74b4: bne v0,zero,0x801a74a4

- `bne` is the "branch if not equal" instruction.
- The value in register `v0` is compared to zero. If they're not equal (i.e., `v0` is non-zero), then...
- ...the program branches back to 0x801a74a4.

0x801a74b8: _nop

- `_nop` is a no-op instruction, which has no effect.

0x801a74bc: move v0,a0

- `move` loads the value of register `a0` into register `v0`.

0x801a74c0: jr ra

- `jr` is the "jump register" instruction.
- The program jumps to the address stored in register `ra`. This is likely the return address, so the function will terminate.

0x801a74c4: _movn v0,v1,a1

- `_movn` loads a word from memory location `a1 + 0 = a1` into register `v0`.

0x801a74c8: j 0x801a74bc

- This is the branch target of the first `beq` instruction.
- The program branches to 0x801a74bc.

0x801a74cc: _clear v1

- `_clear` clears the value of register `v1`.

The code seems to be implementing some kind of loop, incrementing a counter (`a0`) and doing some computation based on its value. The details are unclear without more context.
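To check the listing by hand, here is a hypothetical line-by-line C rendering of it. Each branch delay slot is folded into the step it belongs to, register names are kept as variable names, and `fun_801a7490` is just the Ghidra label. Read this way, the `sll`/`sra` pair sign-extends the low byte of `a1`, `xor` leaves `v0` zero only when the loaded byte equals the search character, `movz` then records the current pointer in `v1`, and the final `movn` returns `v1` unless the search character is NUL. The result is the last match, as with strrchr().

```c
#include <stddef.h>

/* Hypothetical C rendering of the listing at 0x801a7490.
 * a0 = string pointer, c = search character; the return value is v0. */
const char *fun_801a7490(const char *a0, char c)
{
    int a1 = (signed char)c;        /* sll a1,a1,0x18 ; sra a1,a1,0x18: sign-extend the low byte */
    int v0 = (signed char)*a0;      /* lb v0,0x0(a0): load and sign-extend a byte */
    const char *v1 = NULL;          /* clear v1 (done on both paths) */

    while (v0 != 0) {               /* beq v0,zero,... skips the loop for "" */
        v0 = a1 ^ v0;               /* xor v0,a1,v0: zero iff the bytes match */
        if (v0 == 0)                /* movz v1,a0,v0 */
            v1 = a0;
        a0++;                       /* addiu a0,a0,0x1 */
        v0 = (signed char)*a0;      /* lb v0,0x0(a0); bne v0,zero loops back */
    }

    const char *ret = a0;           /* move v0,a0: a0 now points at the NUL */
    if (a1 != 0)                    /* movn v0,v1,a1 (delay slot of jr ra) */
        ret = v1;
    return ret;                     /* jr ra */
}
```

For `"abcabc"` and `'b'` this returns a pointer to index 4 (the second `b`), and for a character that does not occur it returns NULL.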

This script is provided as a sample and may require modifications to fit specific use cases or changes in the Ollama API. Please refer to the API documentation for any updates or changes.

This script is inspired by the GptHidra repository.

This script also uses a slightly modified version of one of these Ghidra scripts.

Many thanks to the contributors of these projects for their initial work.