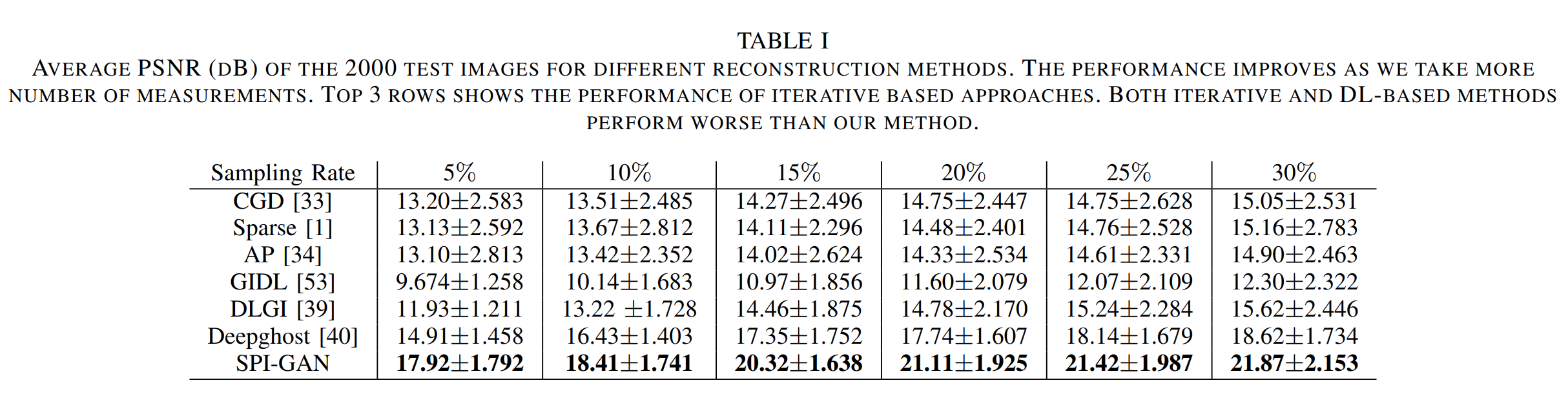

We design a novel deep learning (DL) based reconstruction framework to tackle the problem of high-quality and fast image recovery in single-pixel imaging.

You are welcome to watch this repository for the latest updates.

✅ [2023.12.18] : We have released our code!

✅ [2021.07.21] : We have released our paper, SPI-GAN, on arXiv.

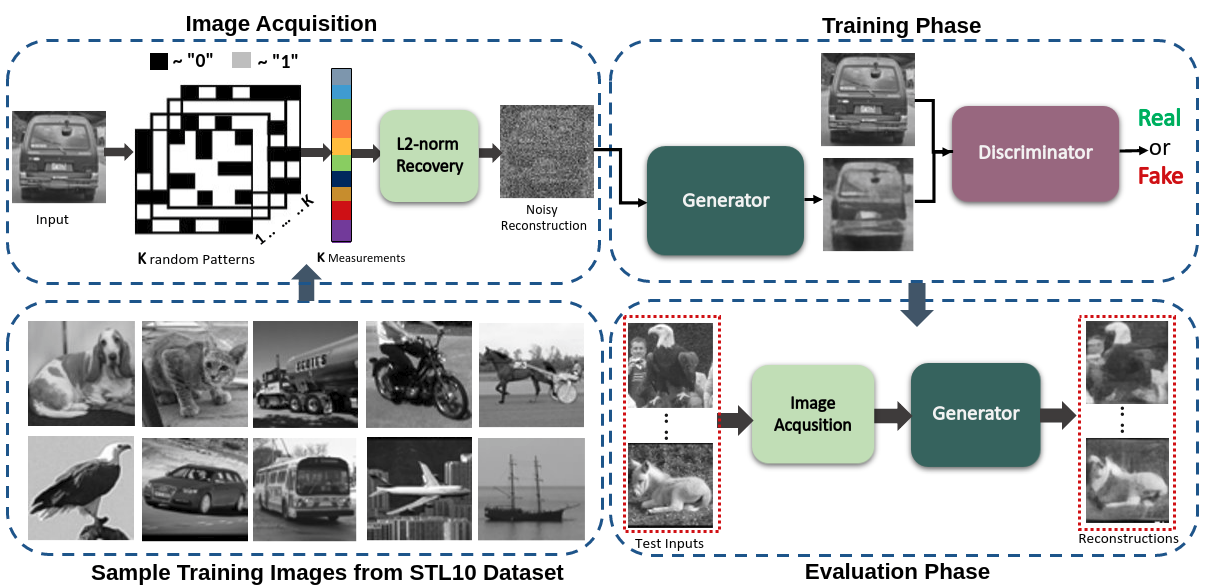

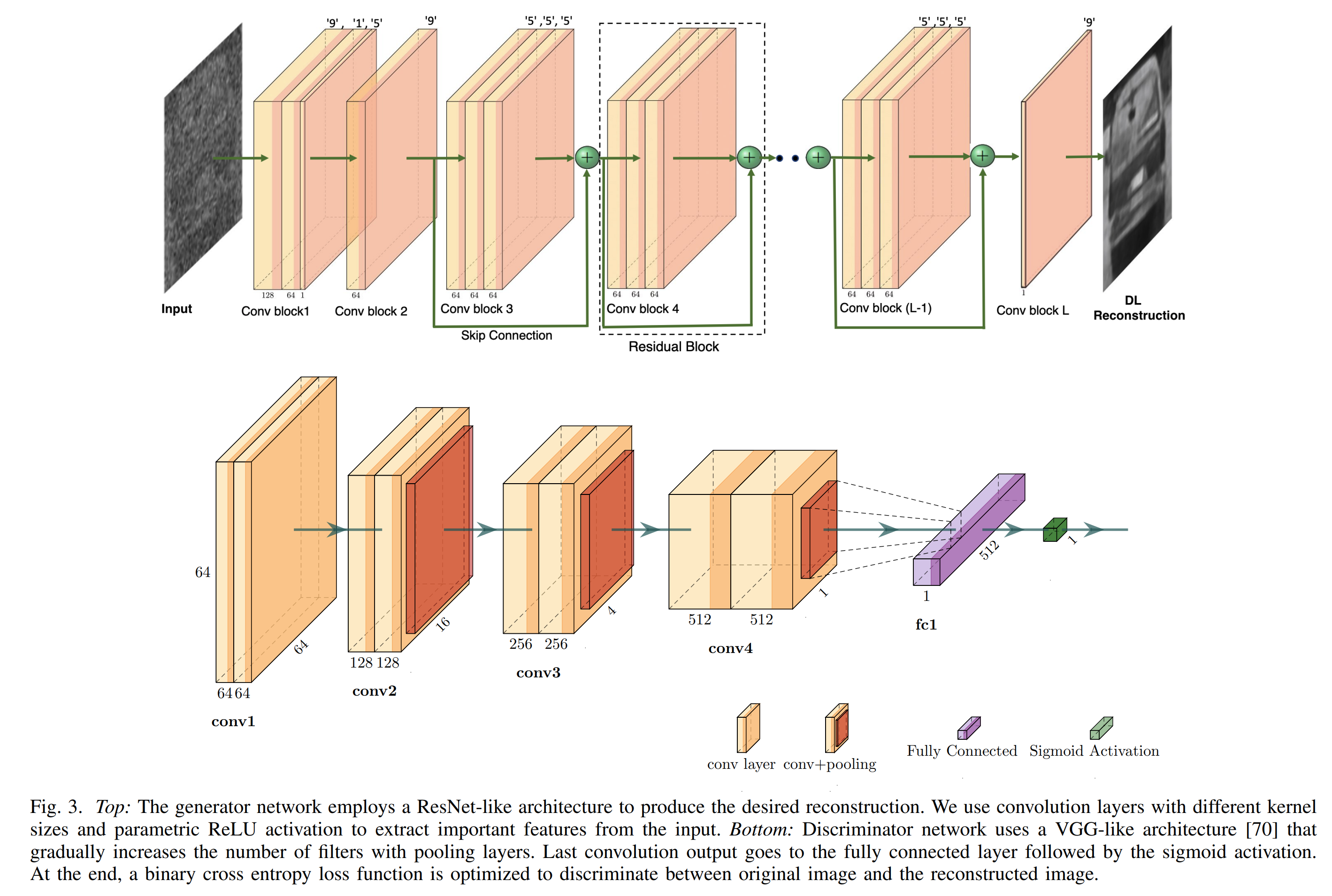

Our proposed SPI-GAN framework mainly consists of a generator that takes the noisy l2-norm solution (x̂_noisy) and produces a clear reconstruction (x̂) that is comparable to x. A discriminator, in turn, learns to differentiate between x and x̂ so that it is not fooled by the generator.
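To make the generator/discriminator interplay concrete, here is a minimal sketch of the standard (non-saturating) GAN losses written with plain Python scalars. It is an illustration only: the function names are hypothetical, and the loss actually used to train SPI-GAN may contain additional terms or differ from this textbook form.

```python
import math


def bce(prob, target):
    """Binary cross-entropy for one probability and a 0/1 target."""
    eps = 1e-12  # guard against log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(x) close to 1 and D(x_hat) close to 0."""
    return bce(d_real, 1) + bce(d_fake, 0)


def generator_loss(d_fake):
    """The generator wants the discriminator to output 1 on x_hat."""
    return bce(d_fake, 1)
```

Here `d_real` and `d_fake` stand for the discriminator's output probabilities on x and x̂. The discriminator loss drops as it separates the two, while the generator loss drops as x̂ becomes harder to tell apart from x.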


Install Anaconda and create an environment

conda create -n spi_gan python=3.10

conda activate spi_gan

After creating a virtual environment, run

pip install -r requirements.txt

First download the STL10 and UCF101 datasets. Both datasets are publicly available.

To create the images that will be fed to the GAN, run the MATLAB script "L2Norm_Solution.m" to generate the l2-norm solution. Create the necessary folders before running it. A Python version will be uploaded in the future.
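Until a Python version of that script is available, the idea can be sketched with NumPy. This is not a port of "L2Norm_Solution.m": the image size, the random ±1 measurement patterns, the sampling ratio, and the noise level below are all assumptions chosen for illustration. The sketch computes the minimum l2-norm solution of an underdetermined measurement system.

```python
import numpy as np


def l2_norm_solution(phi, y):
    """Minimum l2-norm x_hat satisfying phi @ x_hat = y (phi has full row rank)."""
    return phi.T @ np.linalg.solve(phi @ phi.T, y)


rng = np.random.default_rng(0)

n = 32 * 32   # pixels in the flattened image (hypothetical size)
m = n // 4    # number of single-pixel measurements (hypothetical 25% sampling)

x = rng.random(n)                             # stand-in for a ground-truth image
phi = rng.choice([-1.0, 1.0], size=(m, n))    # hypothetical random +/-1 patterns
y = phi @ x + 0.01 * rng.standard_normal(m)   # measurements with assumed noise

x_hat_noisy = l2_norm_solution(phi, y)        # input that a generator would refine
image = x_hat_noisy.reshape(32, 32)
```

Because there are fewer measurements than pixels, `x_hat_noisy` is only a rough estimate of `x`, which is why a learned generator is used to refine it.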

Execute this to create the .npy file under different settings

python save_numpy.py

For training:

python Main_Reconstruction.py

Download videos and train/test splits here.

Convert from avi to jpg files using util_scripts/generate_video_jpgs.py

python -m util_scripts.generate_video_jpgs avi_video_dir_path jpg_video_dir_path ucf101

Generate annotation file in json format similar to ActivityNet using util_scripts/ucf101_json.py

annotation_dir_path includes classInd.txt, trainlist0{1, 2, 3}.txt, testlist0{1, 2, 3}.txt

python -m util_scripts.ucf101_json annotation_dir_path jpg_video_dir_path dst_json_path
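Before running the command above, it can help to confirm that the annotation directory holds the files listed earlier. The helper below is a hypothetical convenience and not part of this repository. It only checks for the seven file names mentioned above.

```python
from pathlib import Path


def missing_annotation_files(annotation_dir_path):
    """Return the expected UCF101 annotation files that are not present."""
    expected = ["classInd.txt"]
    for split in (1, 2, 3):
        expected.append(f"trainlist0{split}.txt")
        expected.append(f"testlist0{split}.txt")
    root = Path(annotation_dir_path)
    return [name for name in expected if not (root / name).is_file()]
```

An empty list means all seven files were found. Otherwise the returned names show what still needs to be downloaded.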

If you find our paper and code useful in your research, please consider giving a star and a citation.

@misc{karim2021spigan,

title={SPI-GAN: Towards Single-Pixel Imaging through Generative Adversarial Network},

author={Nazmul Karim and Nazanin Rahnavard},

year={2021},

eprint={2107.01330},

archivePrefix={arXiv},

primaryClass={cs.CV}

}