Korean Handwriting Recognition AI

Konkuk Univ. Senior, Multimedia Programming - Term project (Individual project)

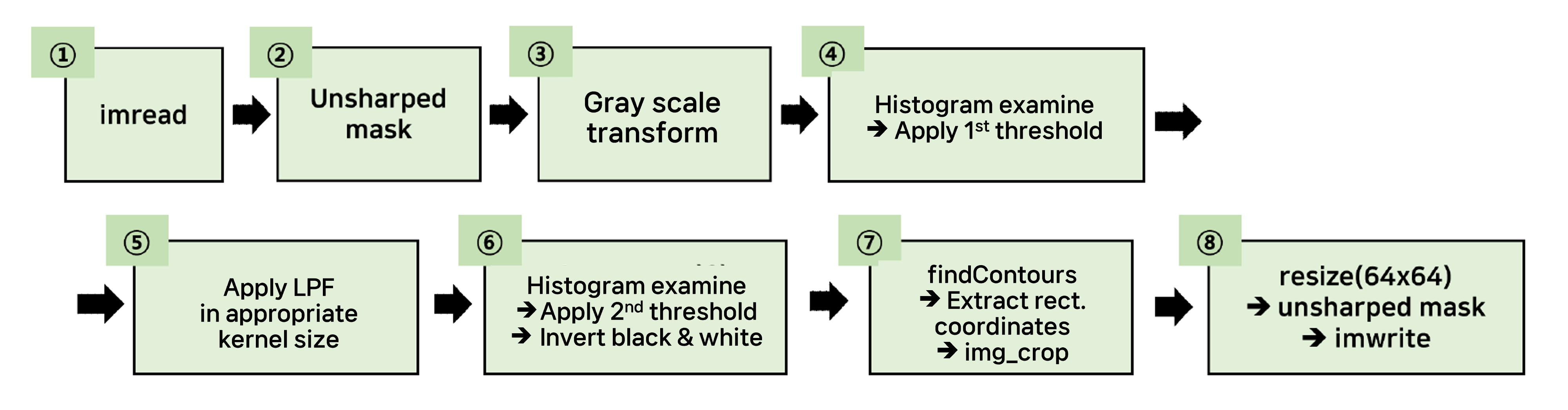

1. Introduction

"Can an individual's Korean handwriting be learned through an artificial neural network?"

Even when writing the same letters, each person's handwriting looks subtly different. Handwriting produced under coercion or in an abnormal physical state also leaves traces that differ from the writer's usual style, which makes handwriting highly reliable as evidence. This uniqueness is what gives a signature its value as proof of identity. For important documents and exams, checking the authenticity of handwriting is therefore essential.

Because handwriting is unique, it is used to prove identity. However, when two handwriting samples differ only subtly, it is difficult for a person to tell them apart with the naked eye. I therefore set out to implement an artificial intelligence model for Korean handwriting recognition.

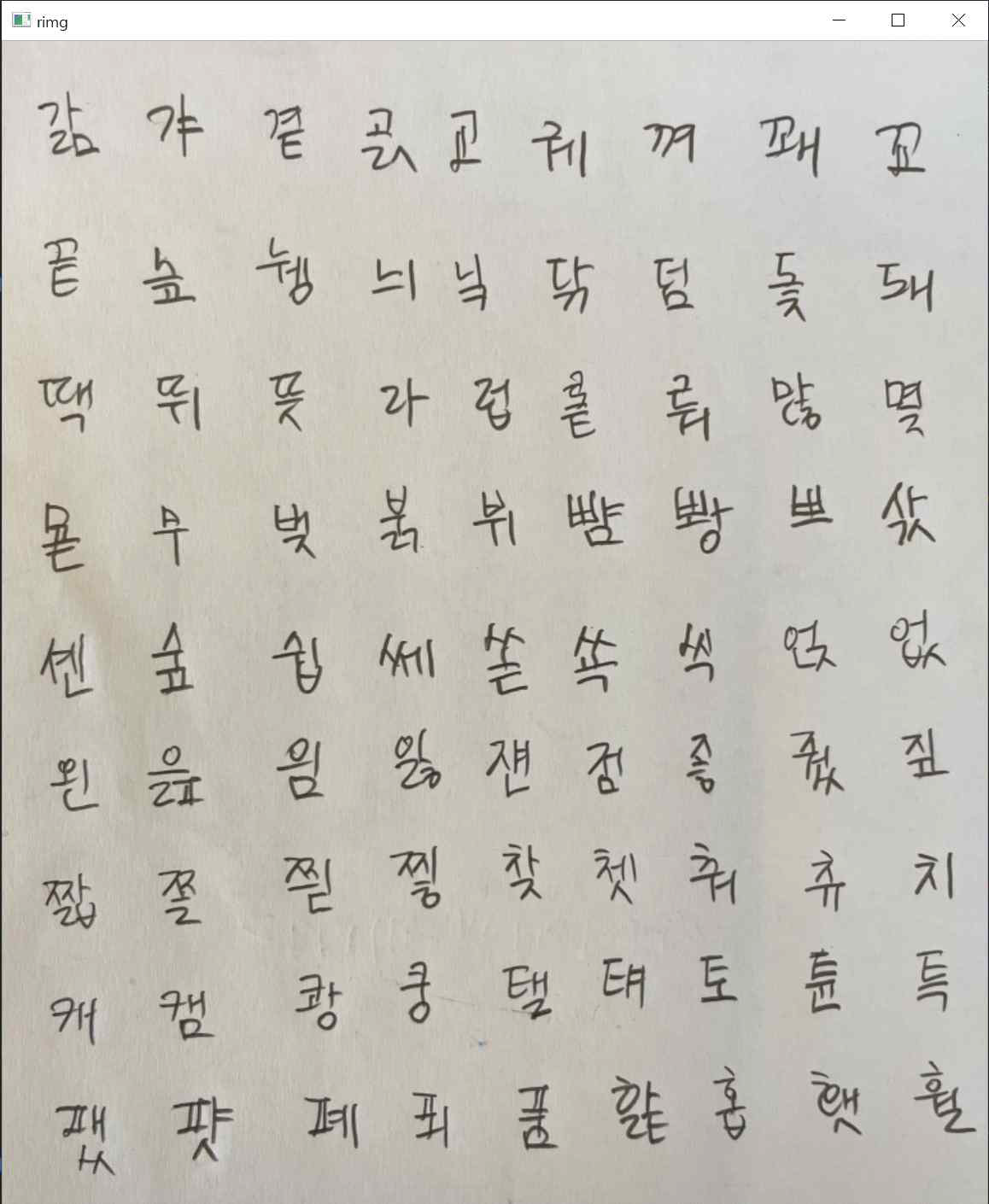

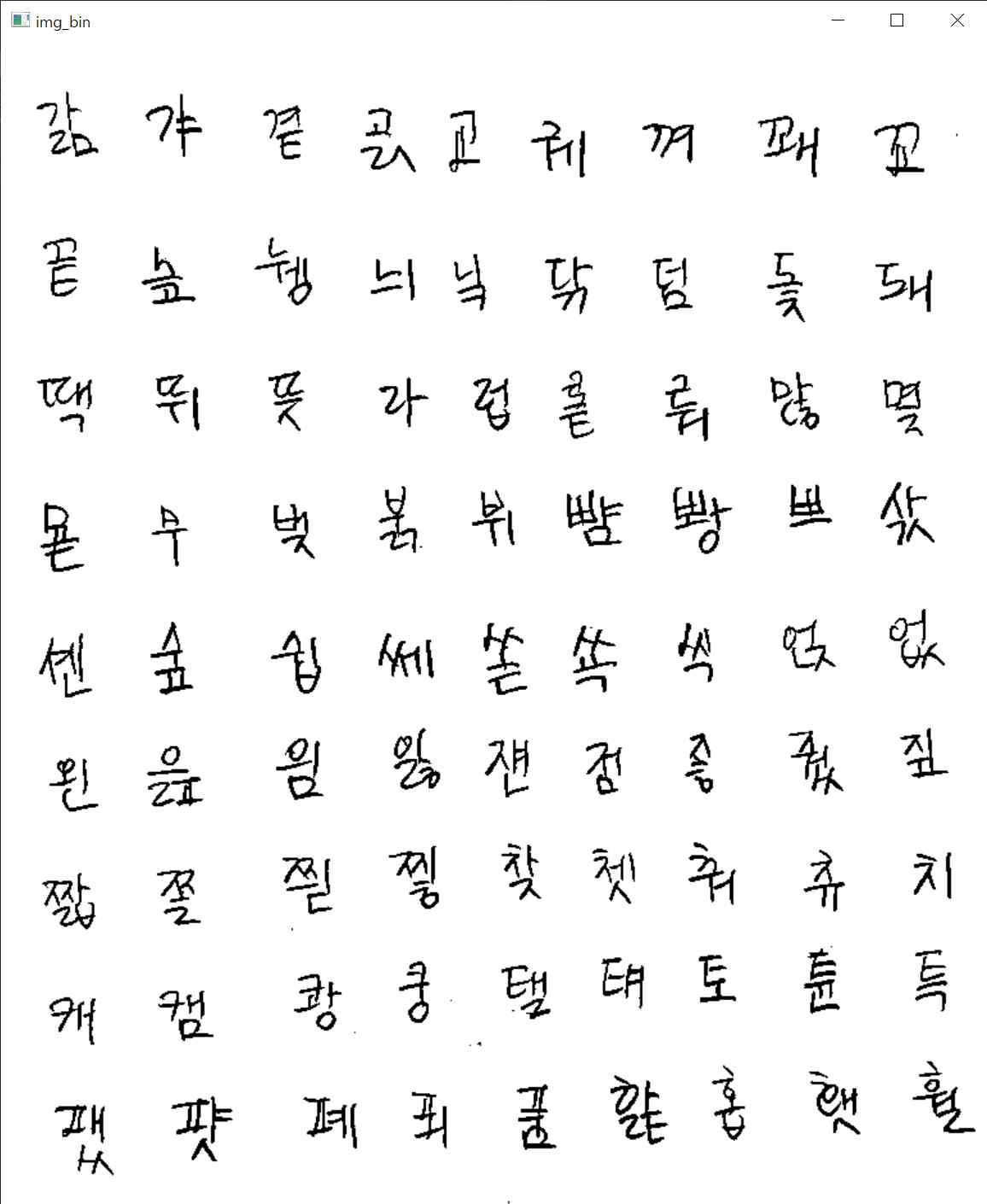

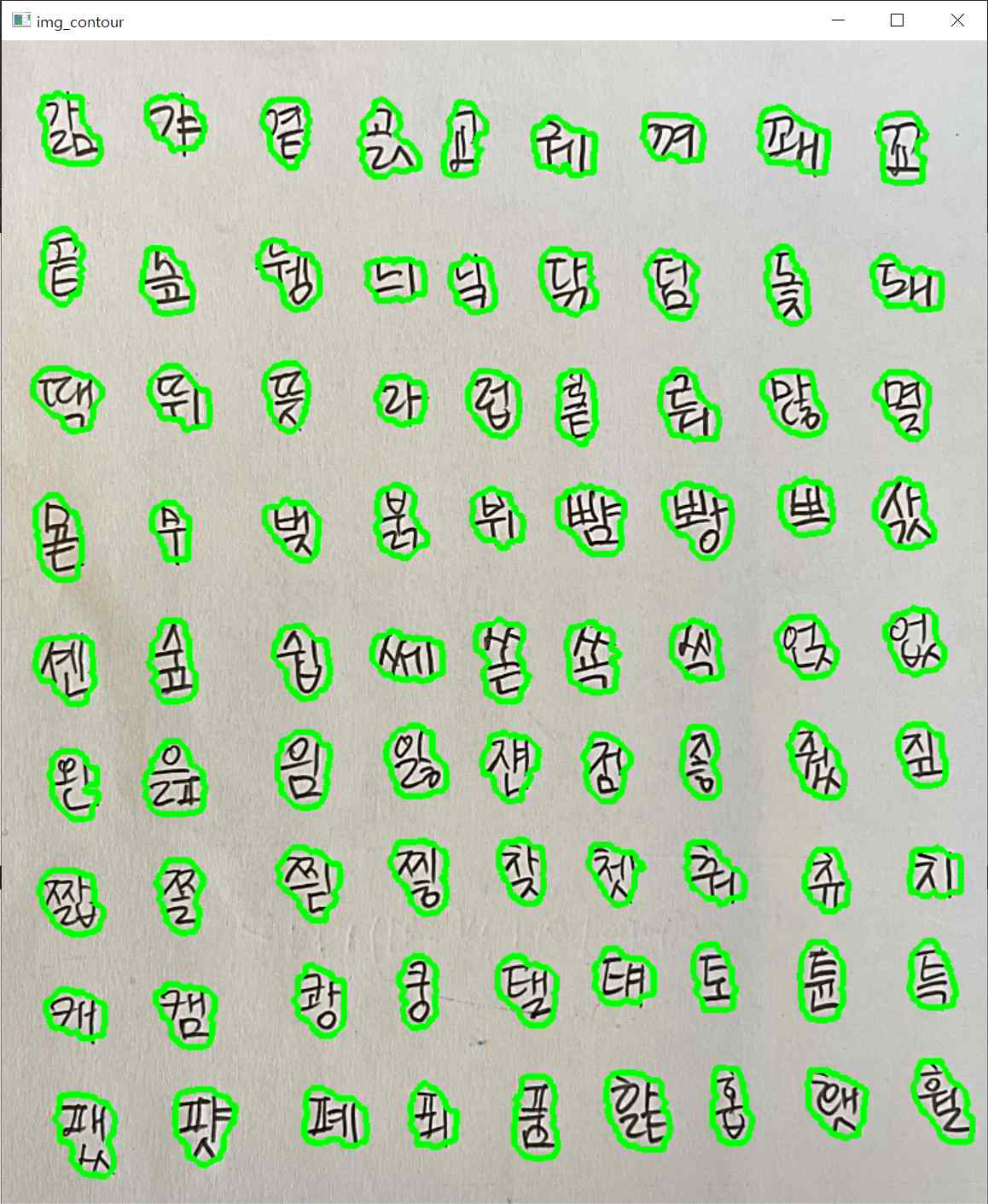

(1-1) Input data

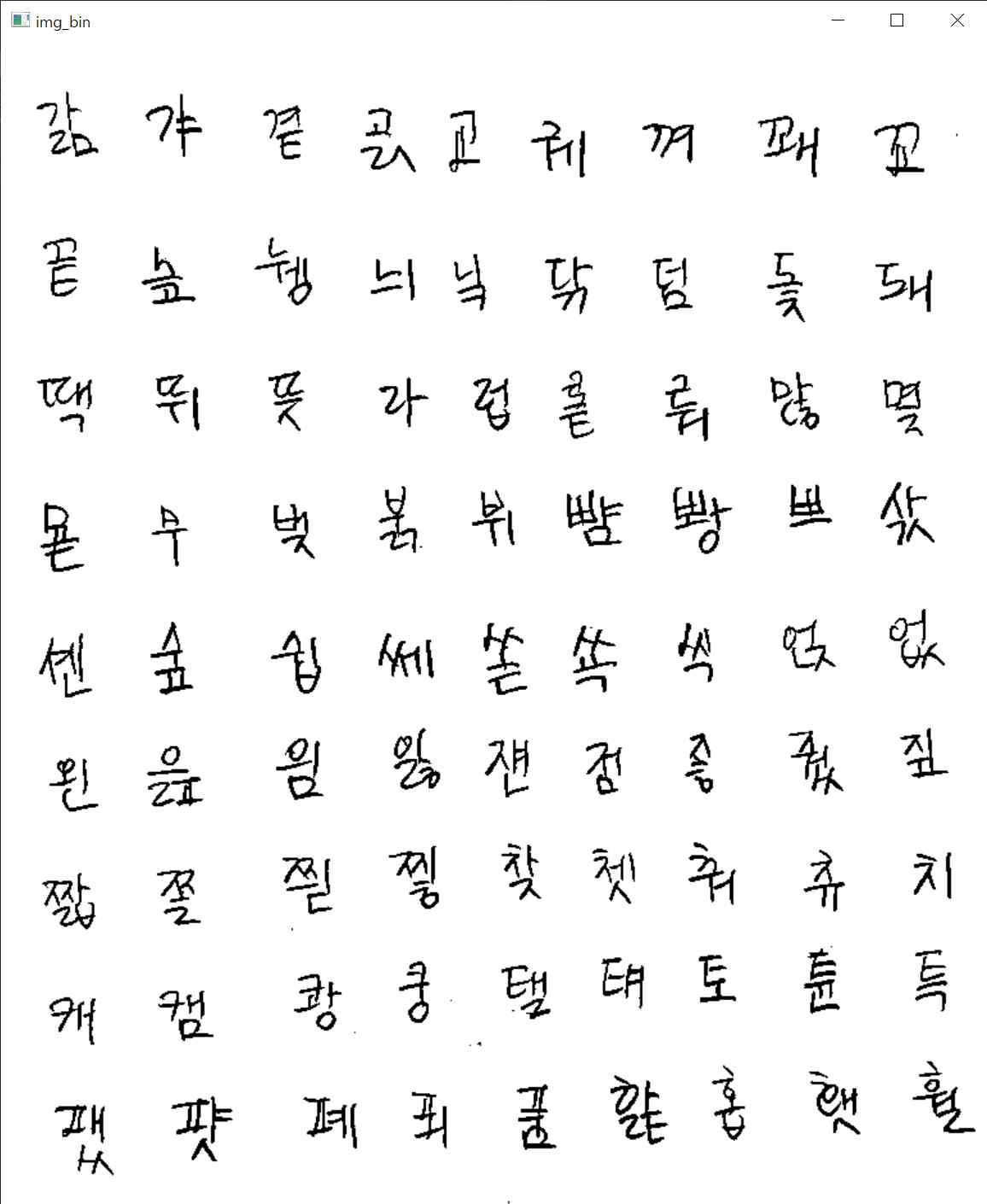

- Korean Handwriting from 10 people

- The samples in the picture above were each written by a different person. (They are the same letters, but subtly different.)

(1-2) Target

- 10 people (3 family members, 7 close friends)

- BSN(Sona Bang), CHW(Howon Choi), KBJ(Beomjun Kim), KJH(Joonhyung Kwon), LJH(Jongho Lee), LSE(Seungeon Lee), PJH(Jonghyuk Park), PSM(Sangmoon Park), SHB(Suk Hyunbin), SWS(Woosub Shin)

(1-3) Expected application field

- Handwriting verification for national examinations and important documents.

- OCR (Optical Character Recognition)

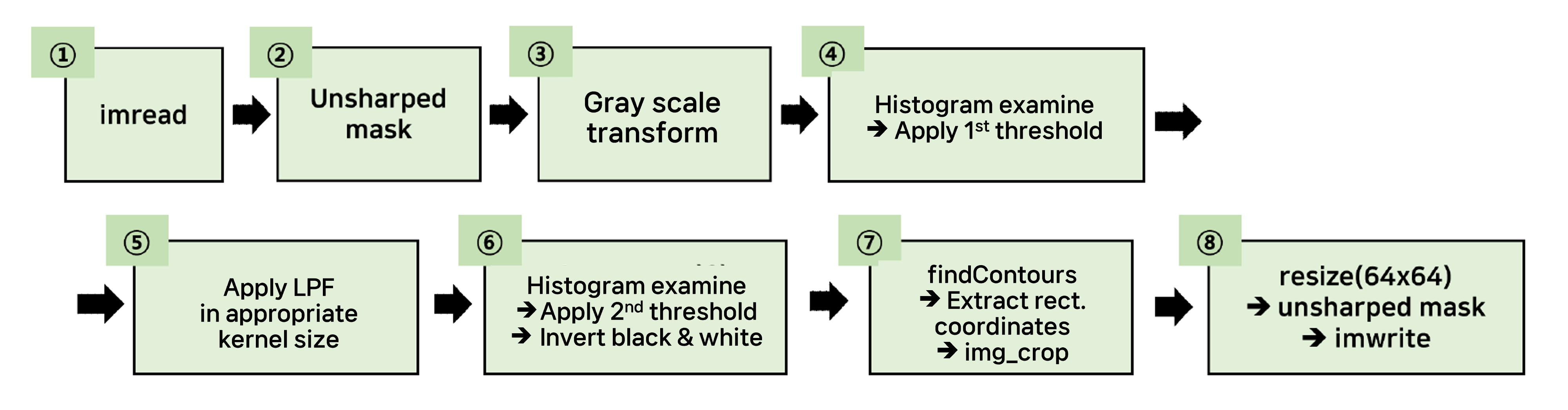

2. Creating database

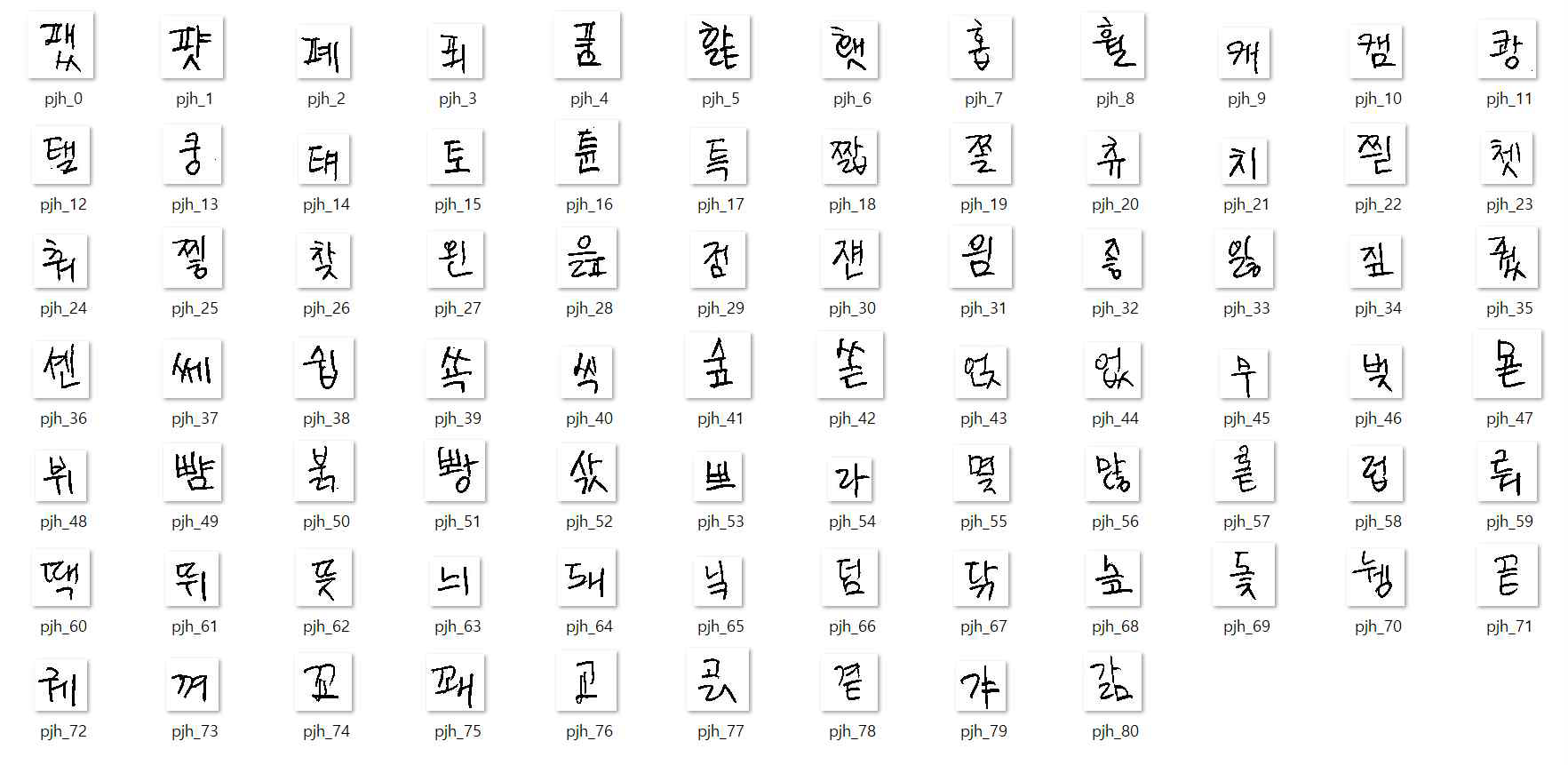

2-1. One-letter data

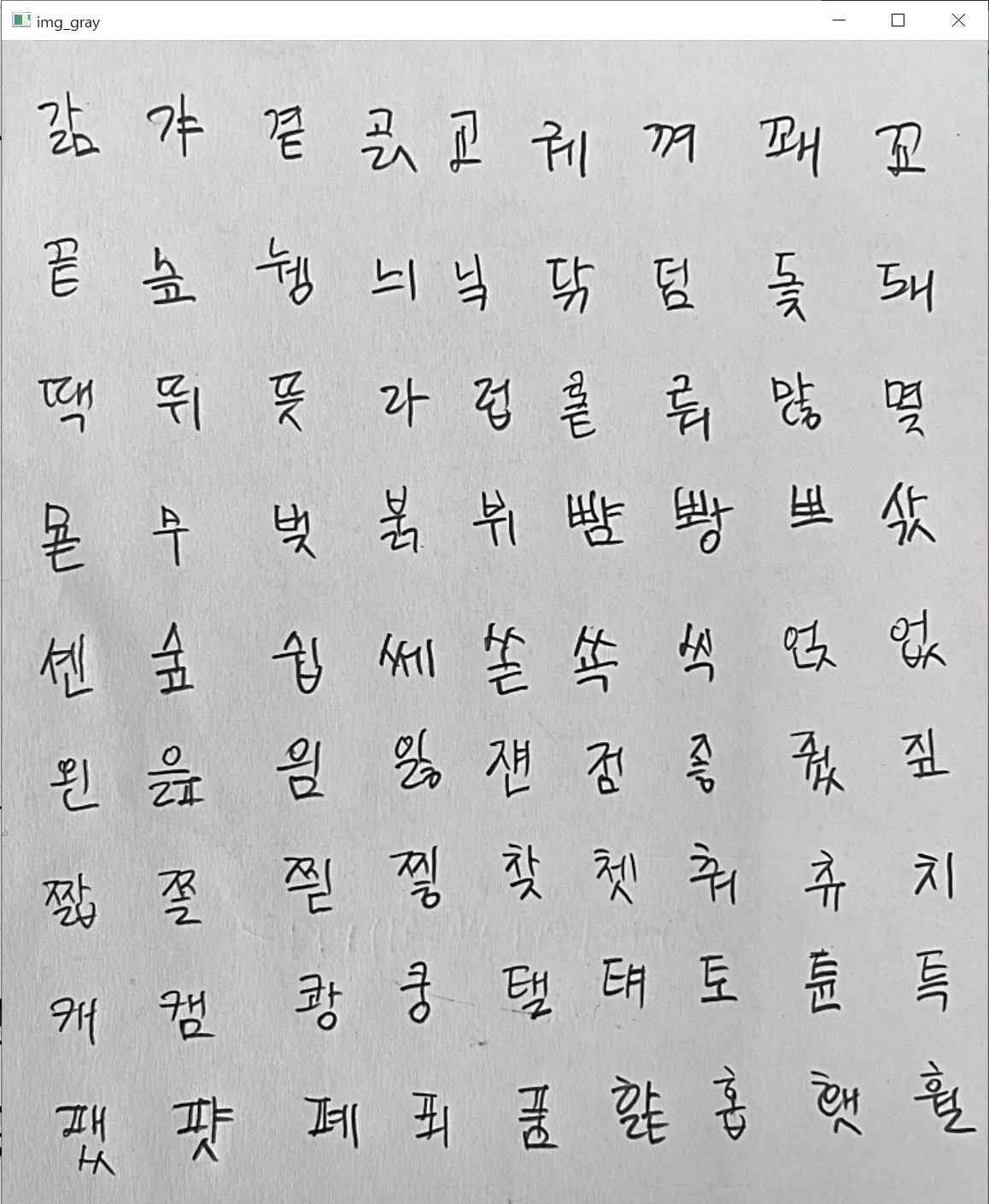

(1) imread

- Used the template provided by "Ongle-leap", a font design company

- The template includes every combination of Korean letters

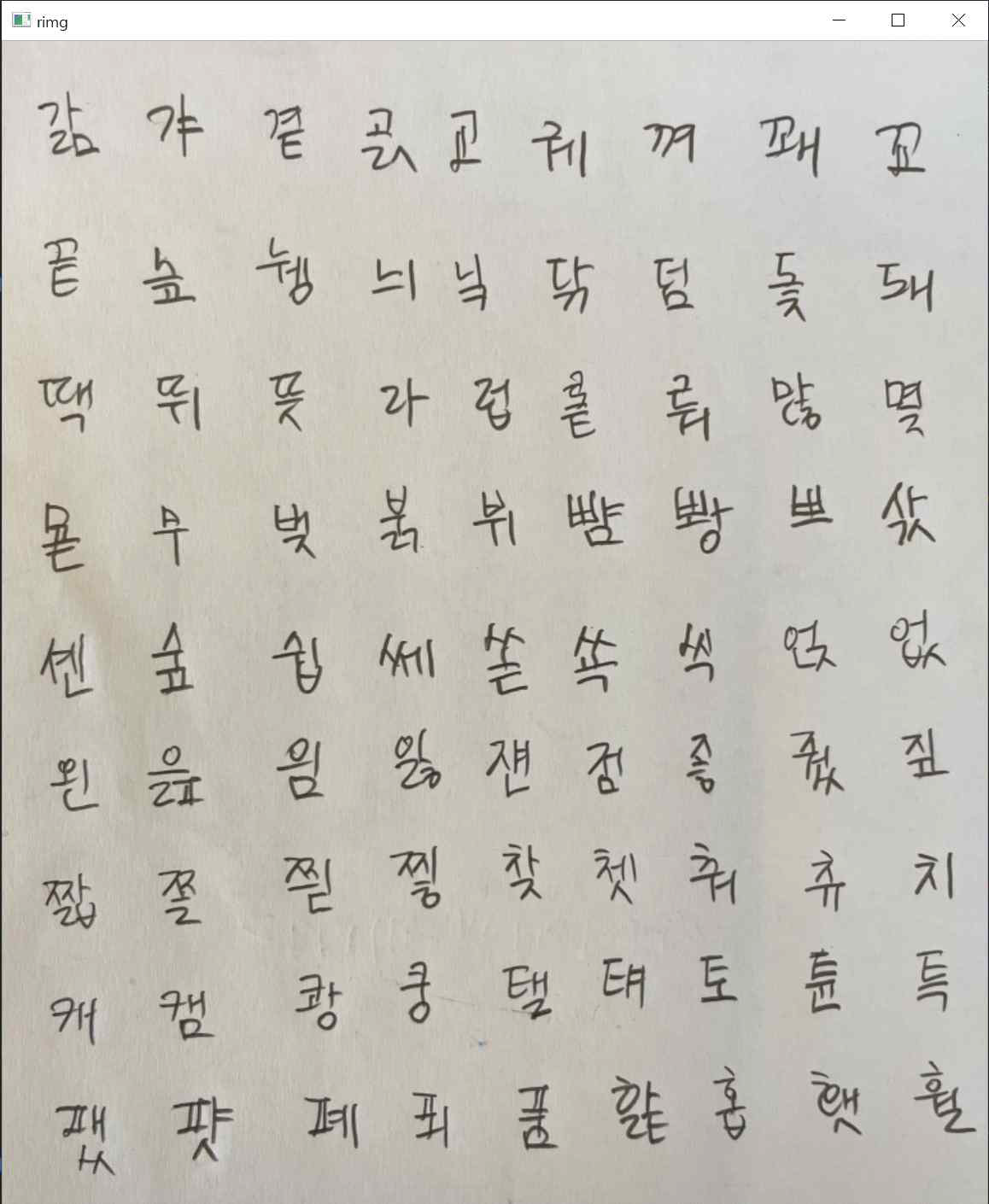

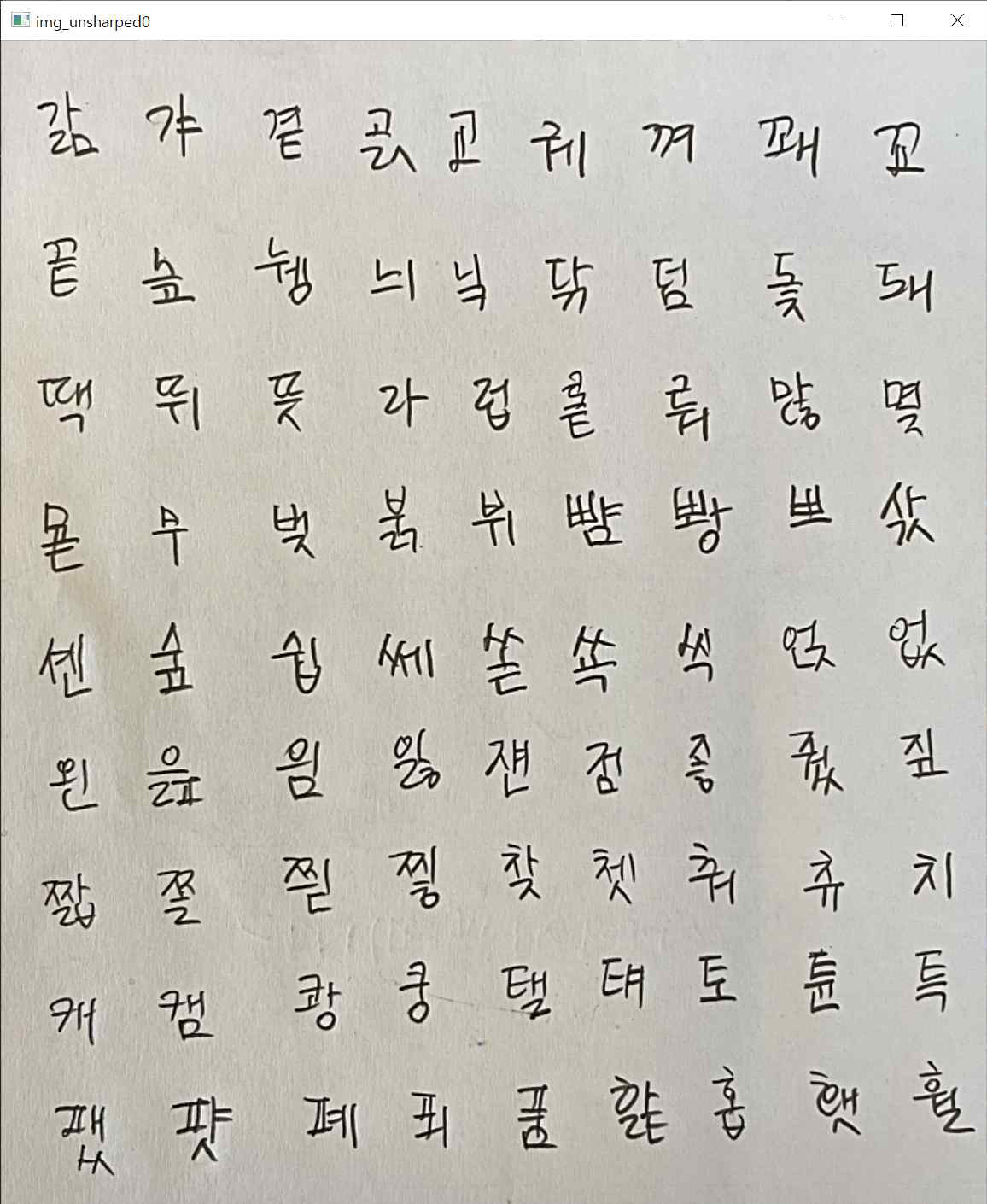

(2) Unsharp mask

- Applied an unsharp mask to sharpen the handwriting
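As a rough illustration of this step, the sketch below sharpens a single-channel image by adding back the difference between the image and a Gaussian-blurred copy. The function names and the `sigma` and `amount` values are assumptions made for illustration, not the settings used in this project. With OpenCV the same idea is usually written with `cv2.GaussianBlur` and `cv2.addWeighted`.

```python
import numpy as np

def gaussian_blur(img, sigma=1.0):
    """Blur a 2-D image with a separable Gaussian kernel (edges replicated)."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    # Filter along rows first, then along columns.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def unsharp_mask(img, sigma=1.0, amount=1.0):
    """Return img + amount * (img - blurred), clipped to the uint8 range."""
    img_f = img.astype(np.float64)
    detail = img_f - gaussian_blur(img, sigma)
    sharpened = img_f + amount * detail
    return np.clip(np.rint(sharpened), 0, 255).astype(np.uint8)
```

Flat regions are left unchanged, while pixels next to a stroke edge are pushed further apart in brightness, which is what makes the strokes look crisper.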

(3) Grayscale transform

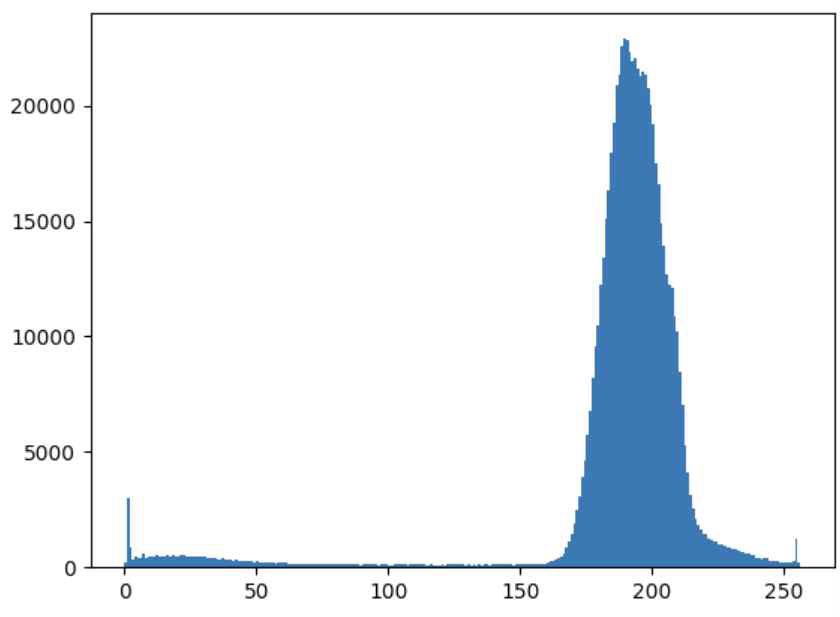

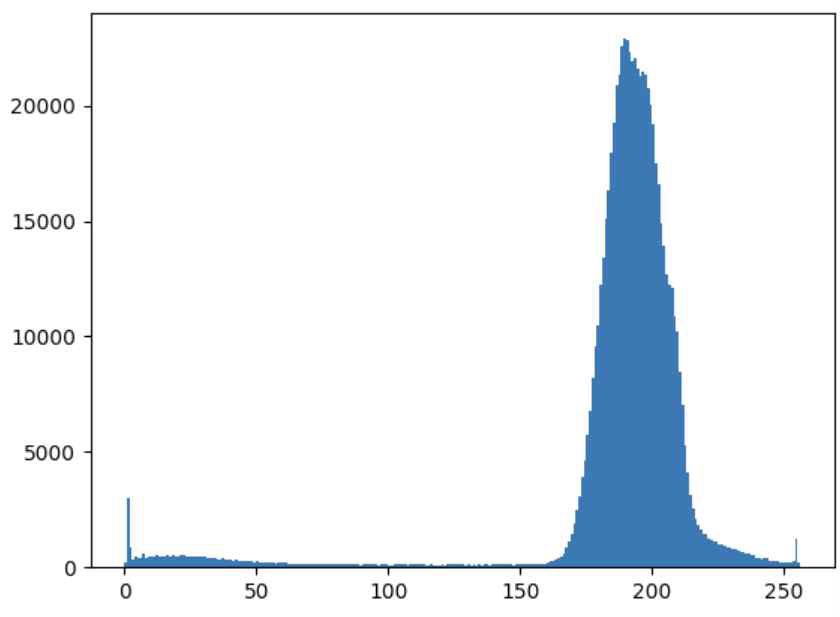

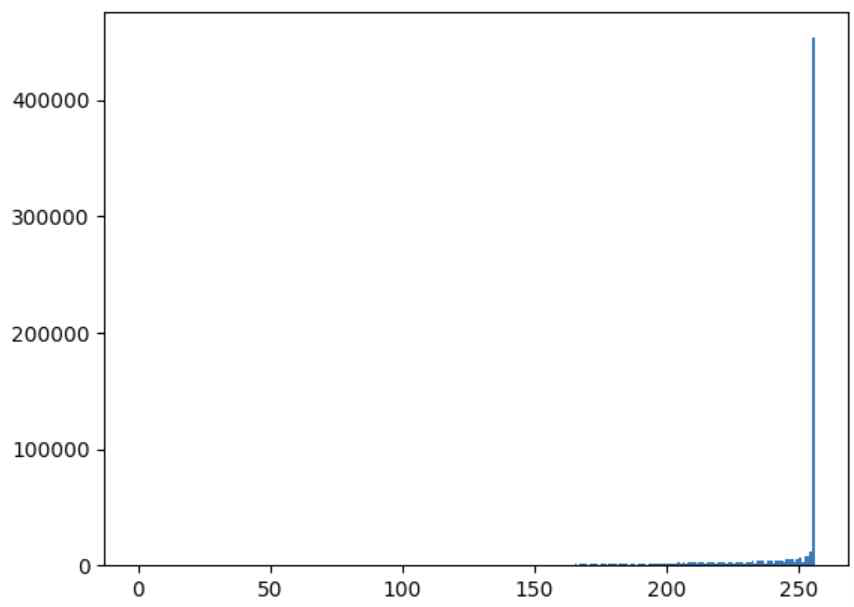

(4) Examine histogram & apply 1st threshold

- A threshold is chosen from the histogram, and the image is binarized with it.

- (In this example, the threshold is set to 150 on the 0 to 255 scale)
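Steps (3) and (4) can be sketched together as follows. This is a minimal sketch under assumptions: the input is taken to be in BGR channel order (as `cv2.imread` returns it), the grayscale weights are the standard luma weights, and pixels brighter than the threshold are mapped to 255. Whether the project kept or inverted this polarity is not stated, and the function names are hypothetical.

```python
import numpy as np

def to_gray(bgr):
    """Weighted sum of the B, G, R channels (standard luma weights)."""
    b, g, r = bgr[..., 0], bgr[..., 1], bgr[..., 2]
    gray = 0.114 * b + 0.587 * g + 0.299 * r
    return np.rint(gray).astype(np.uint8)

def histogram(gray):
    """Pixel count for each of the 256 intensity levels."""
    return np.bincount(gray.ravel(), minlength=256)

def binarize(gray, threshold=150):
    """Pixels above the threshold become 255, all others 0 (assumed polarity)."""
    return np.where(gray > threshold, 255, 0).astype(np.uint8)
```

Plotting `histogram(gray)` typically shows one peak for the paper and one for the ink. A threshold such as the 150 used in the example would be picked in the valley between them.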

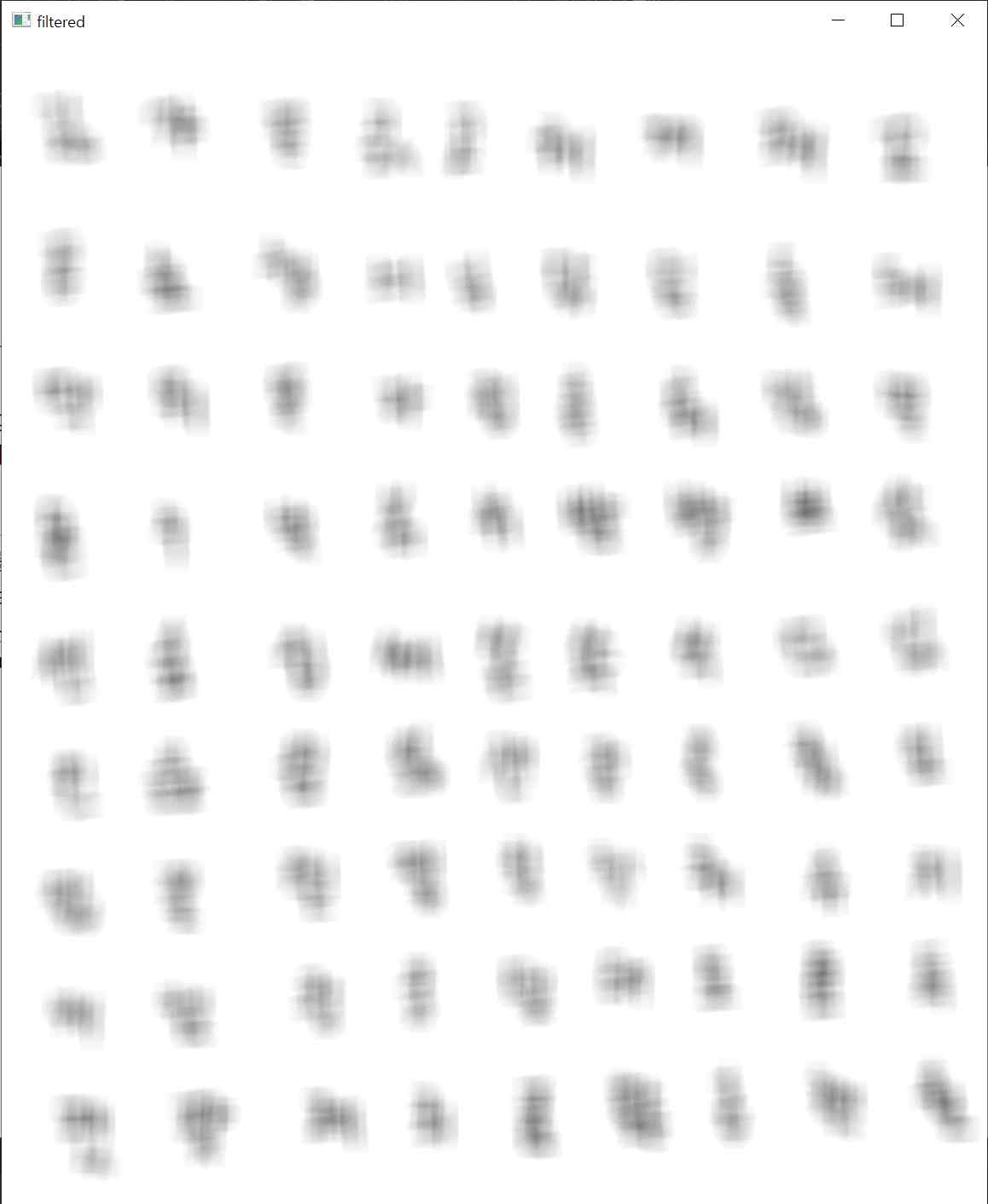

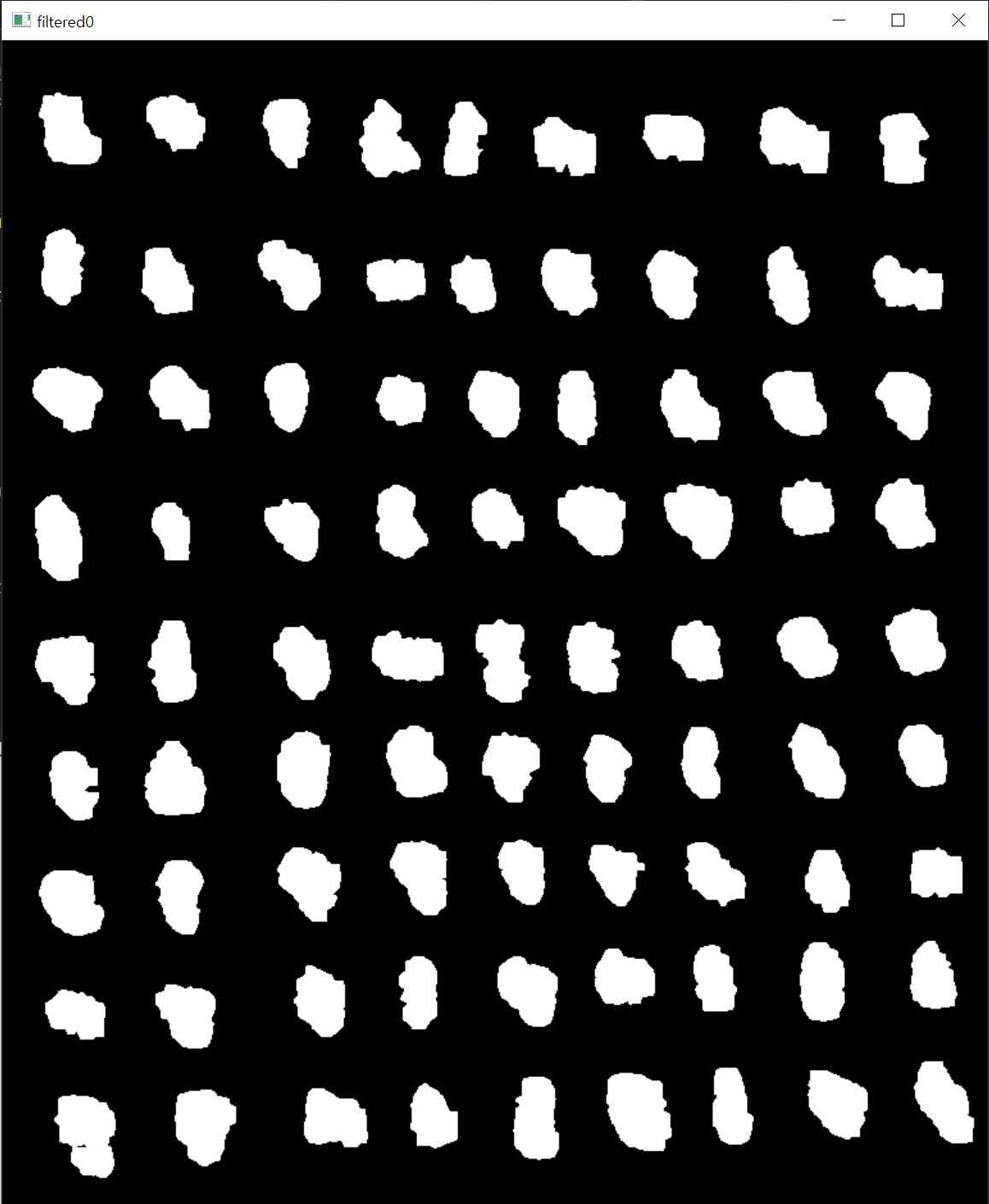

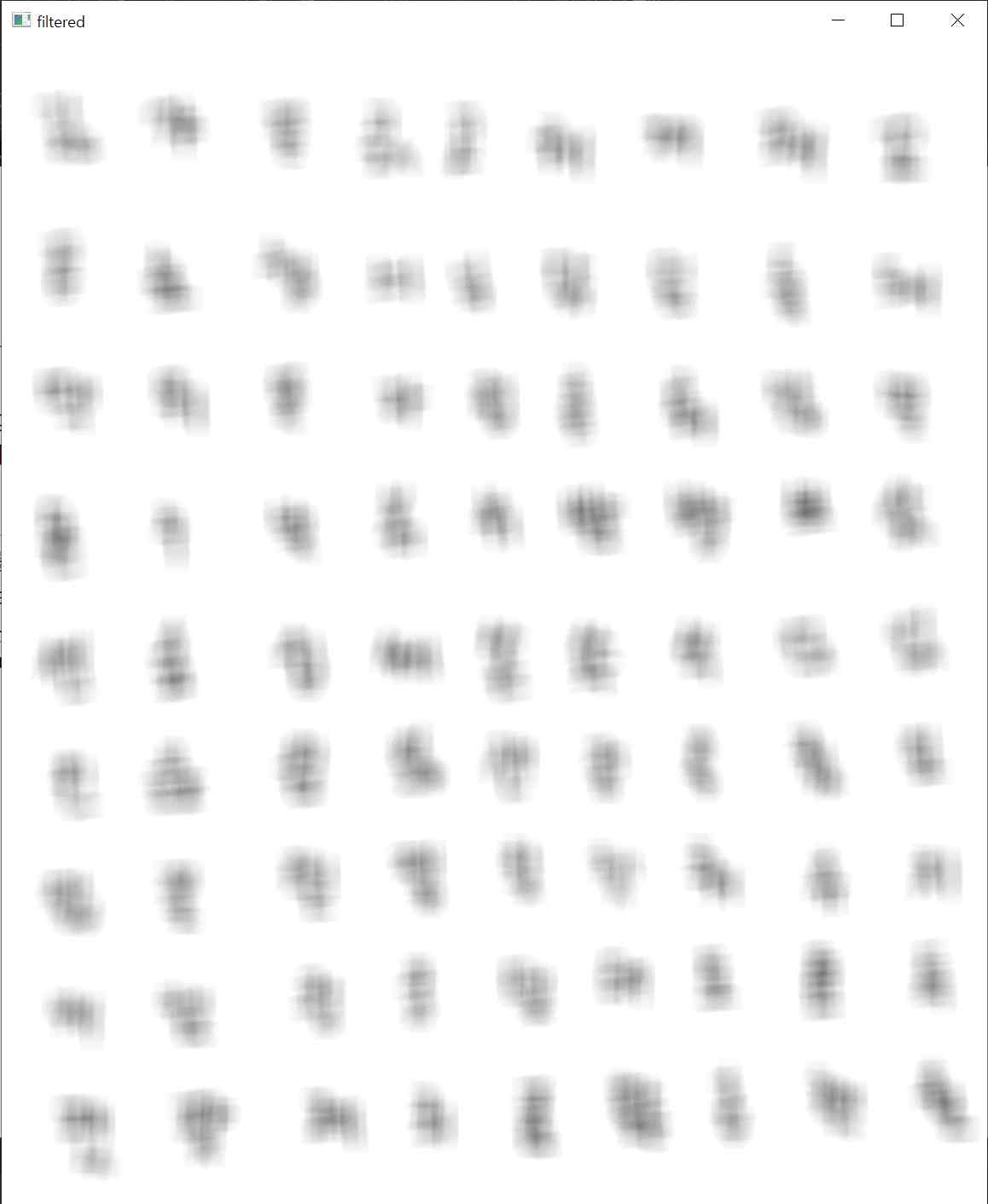

(5) Apply LPF

- To locate each handwritten letter, the strokes are smoothed with a low-pass filter (LPF) so that the contour of the letter is exposed.

- Choose an appropriate kernel size and apply the LPF to the binarized image with cv2.filter2D.

- A smaller kernel makes it easier to detect smaller units such as individual consonants and vowels, while a larger kernel makes it easier to detect the contour of the whole letter.

- (The example uses a 21x21 kernel)
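To show what the low-pass step does to separate strokes, here is a NumPy-only sketch of a normalized box filter. It mimics what `cv2.filter2D` computes with a kernel of ones divided by the kernel area. The helper names are hypothetical, and the averaging kernel itself is an assumption, since the text gives only the kernel size.

```python
import numpy as np

def filter2d(img, kernel):
    """Correlate a 2-D image with a kernel, reflecting the image at its borders."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img.astype(np.float64), ((ph, ph), (pw, pw)), mode="reflect")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    # Accumulate one shifted, weighted copy of the image per kernel entry.
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def low_pass(binary, ksize=21):
    """Average each pixel over a ksize x ksize neighborhood."""
    kernel = np.ones((ksize, ksize), dtype=np.float64) / (ksize * ksize)
    return np.clip(np.rint(filter2d(binary, kernel)), 0, 255).astype(np.uint8)
```

With a 21x21 kernel, white gaps a few pixels wide between dark strokes are pulled well below white, so a second threshold sees the strokes of one letter as a single blob.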

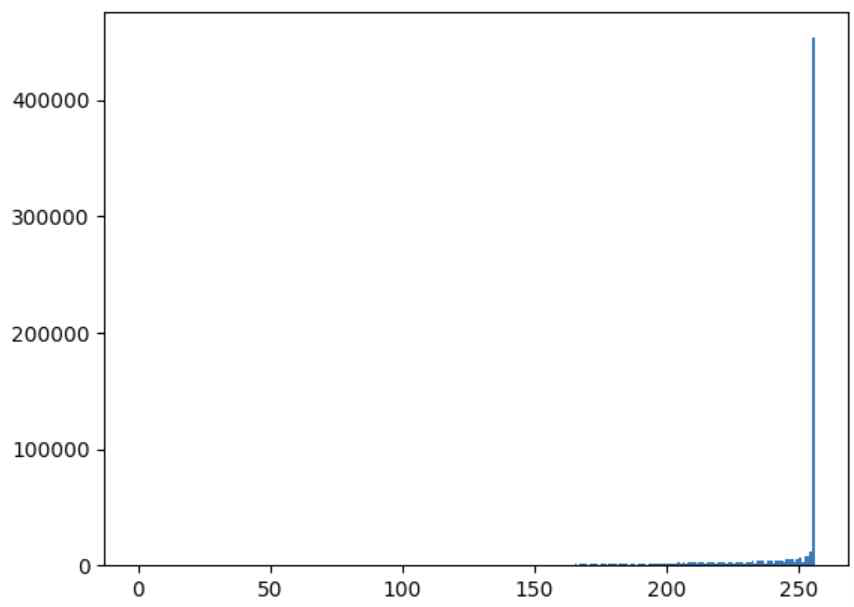

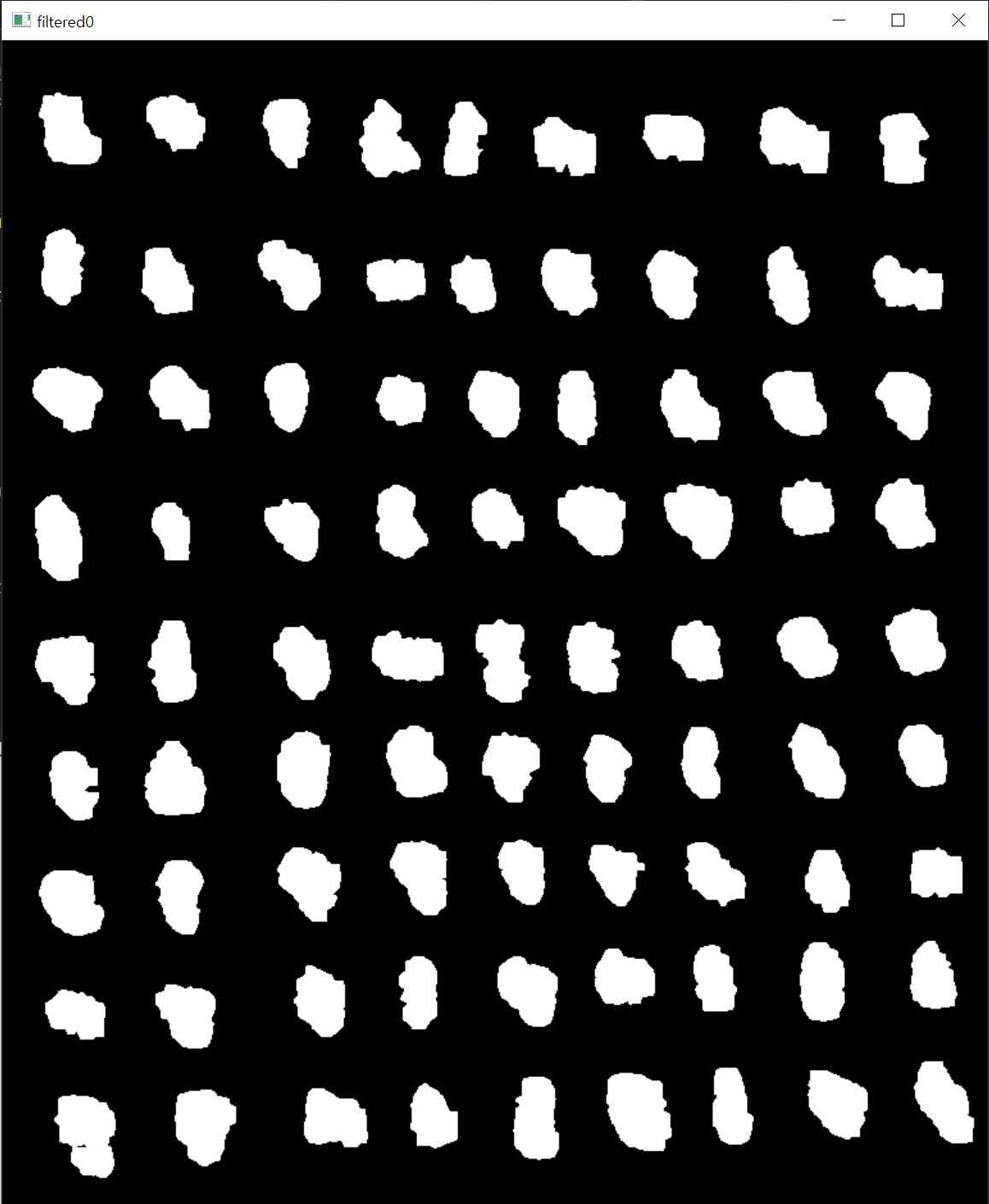

(6) Examine histogram & apply 2nd threshold

- A threshold is chosen from the histogram of the LPF-smoothed image, and the image is binarized once more with it.

- (Example threshold value: 230)

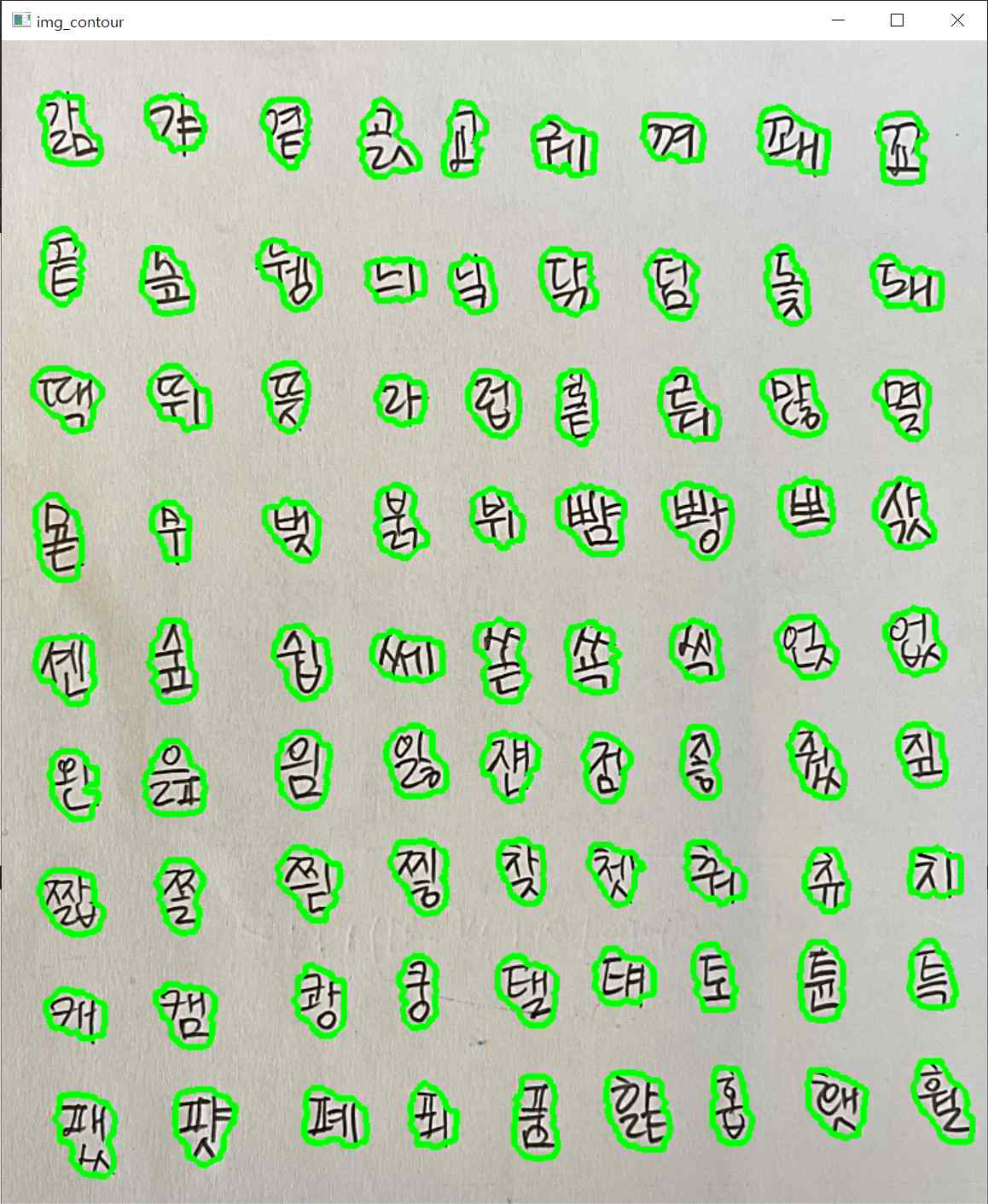

(7) Extract contour & coordinates, image crop

- Small contours that are not letters were not extracted.

- The x, y coordinates and the w, h values were read from each extracted contour and then recalculated as a square. Because the crops are later resized to 64x64, a square crop preserves as much of the handwriting's characteristics as possible.

- The image was cropped into a square using the calculated coordinates.
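The cropping logic above can be sketched without OpenCV as follows. This is an illustration under assumptions: a simple flood fill stands in for contour extraction (the project presumably used OpenCV's contour functions), `min_area` is a hypothetical filter for non-letter specks, and the square is centered on the original bounding box and filled with white where it leaves the page.

```python
from collections import deque
import numpy as np

def blob_boxes(mask, min_area=100):
    """Bounding boxes (x, y, w, h) of 4-connected True regions with >= min_area pixels."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    boxes = []
    for sy, sx in zip(*np.nonzero(mask)):
        if seen[sy, sx]:
            continue
        seen[sy, sx] = True
        queue = deque([(sy, sx)])
        ys, xs = [sy], [sx]
        while queue:
            y, x = queue.popleft()
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
                    ys.append(ny)
                    xs.append(nx)
        if len(ys) >= min_area:  # skip small specks that are not letters
            x0, y0 = min(xs), min(ys)
            boxes.append((int(x0), int(y0), int(max(xs) - x0 + 1), int(max(ys) - y0 + 1)))
    return boxes

def square_box(x, y, w, h):
    """Grow the shorter side so the box becomes a square centered on the original."""
    side = max(w, h)
    return x - (side - w) // 2, y - (side - h) // 2, side

def crop_square(gray, box, background=255):
    """Crop the squared box, filling parts outside the image with the background."""
    x, y, side = square_box(*box)
    out = np.full((side, side), background, dtype=gray.dtype)
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + side, gray.shape[1]), min(y + side, gray.shape[0])
    out[y0 - y:y1 - y, x0 - x:x1 - x] = gray[y0:y1, x0:x1]
    return out
```

A possible usage, assuming the letters are dark after the second threshold, is `blob_boxes(smoothed < 230)` followed by `crop_square(gray, box)` for each box. Squaring the box before the later 64x64 resize keeps the aspect ratio of the strokes intact.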

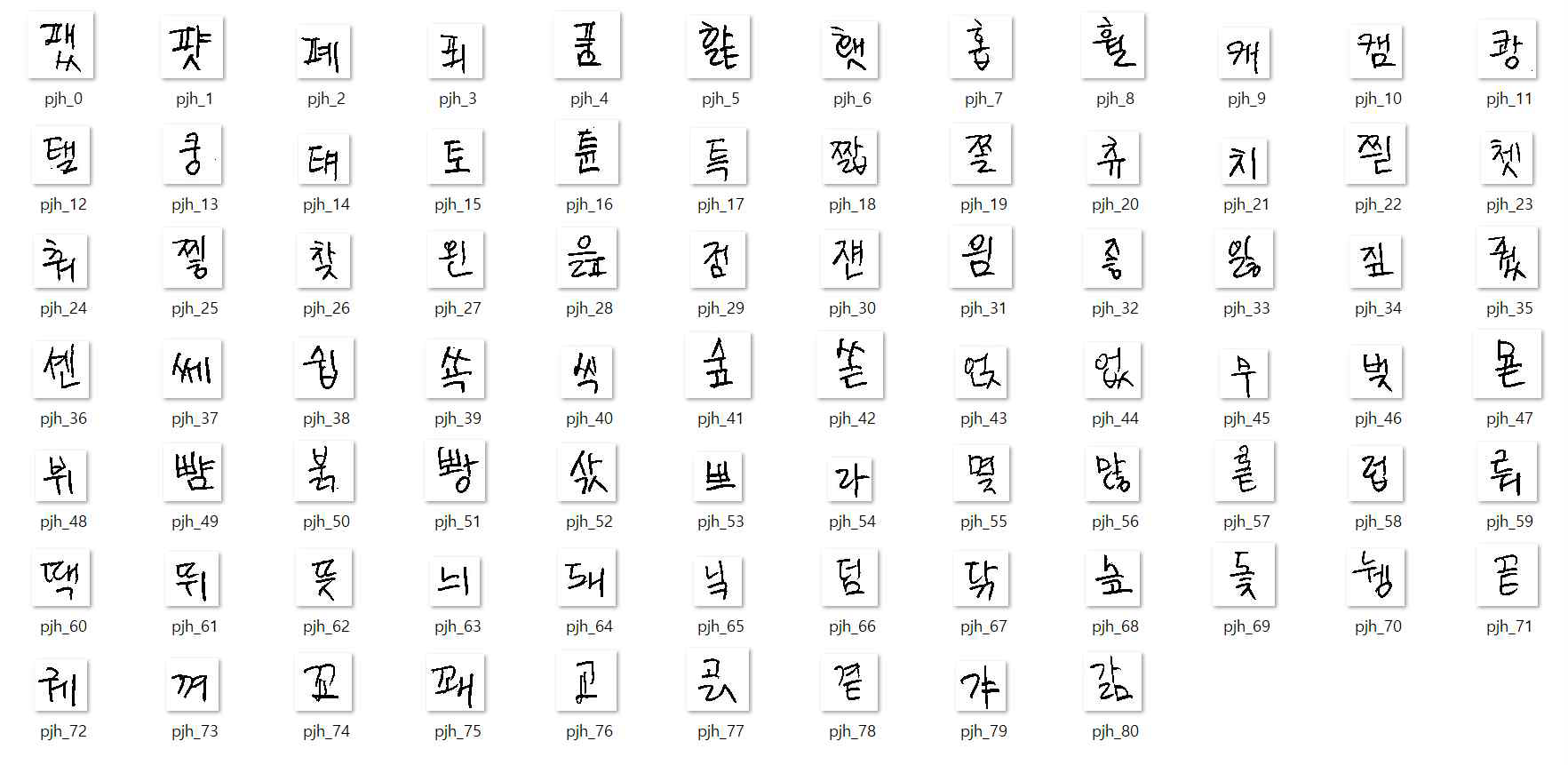

(8) Imwrite

- 81 one-letter data per person

- Total: 810 one-letter data were collected.

2-2. Two-letter data

- The two-letter data was created by combining different one-letter data, each scaled appropriately.

- To reduce the loss of feature information during resizing, an img_concat(img1, img2) function was written to join the images and form the result into a square.

- Acquired 6,480 two-letter data per target (81P2 = 81 x 80)
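The original `img_concat` is not shown, so the following is only a guess at its behavior: it joins two letter images side by side and pads the result with a white background until it is square. The horizontal layout, the centering, and the `background` parameter are all assumptions. The last line checks the 81P2 count with `math.perm`.

```python
import math
import numpy as np

def img_concat(img1, img2, background=255):
    """Join two grayscale images horizontally and pad the result to a square."""
    height = max(img1.shape[0], img2.shape[0])

    def pad_height(img):
        extra = height - img.shape[0]
        top = extra // 2
        return np.pad(img, ((top, extra - top), (0, 0)), constant_values=background)

    row = np.hstack([pad_height(img1), pad_height(img2)])
    side = max(row.shape)
    pad_y, pad_x = side - row.shape[0], side - row.shape[1]
    return np.pad(
        row,
        ((pad_y // 2, pad_y - pad_y // 2), (pad_x // 2, pad_x - pad_x // 2)),
        constant_values=background,
    )

# Ordered pairs of distinct letters drawn from 81 one-letter images.
pairs_per_target = math.perm(81, 2)
```

Padding to a square before the later resize means both letters shrink by the same factor in each direction, instead of being squeezed horizontally.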

2-3. Three-letter data

- Three-letter data was made by combining one-letter data and two-letter data, each scaled appropriately.

- Acquired 7,980 three-letter data per target

2-4. Actual handwritten data from note-taking

- Data was acquired from the target's actual note taking.

- Acquired 30 actual handwritten data per target

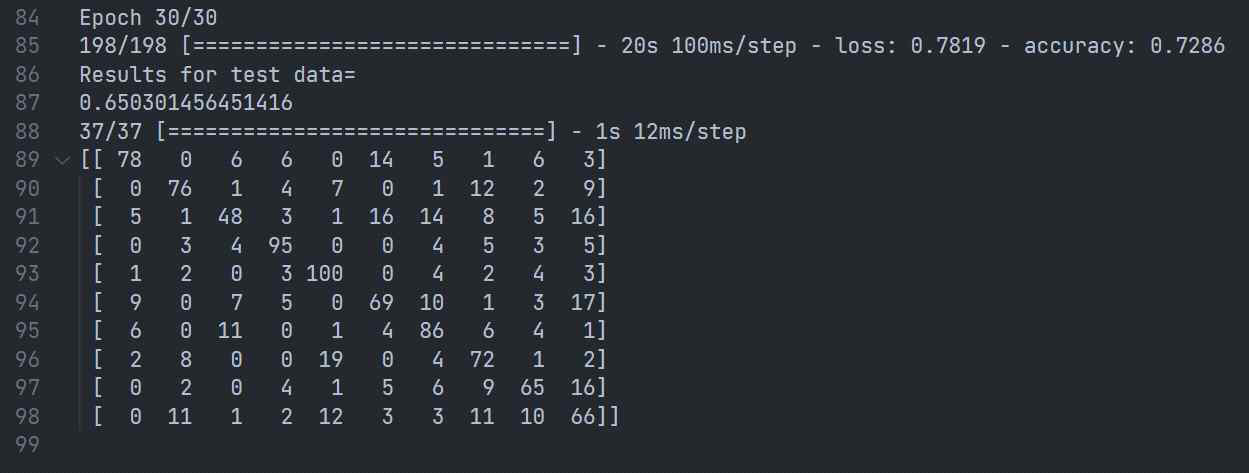

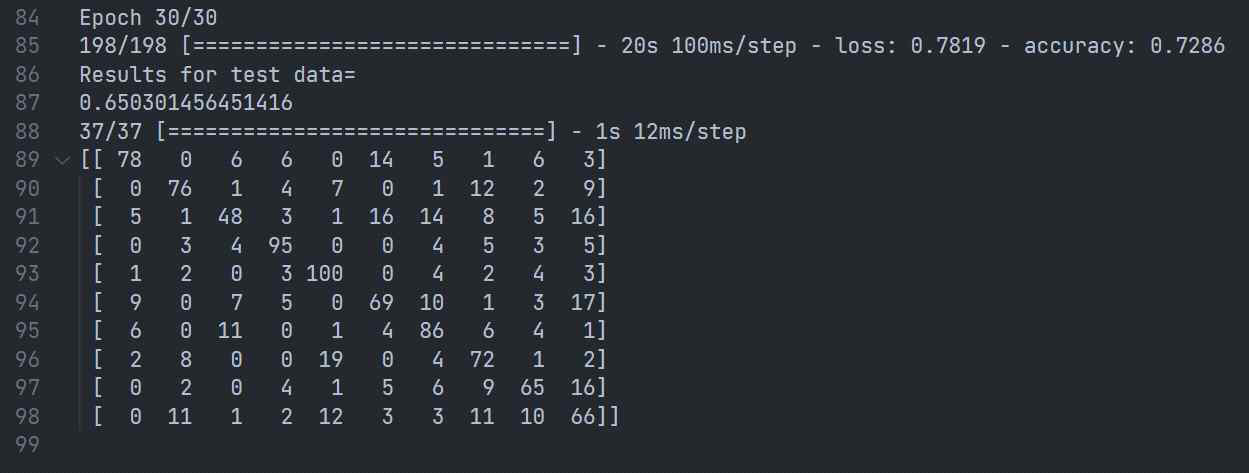

3. 1st Result

-

Train data : Test data = 9 : 1

-

Used data = One-letter(81) + Two-letter(500) + Three-letter(500) + Actuall handwriting(30)

-

n.Epoch = 20, Batch size = 50, Learning rate = 0.01

-

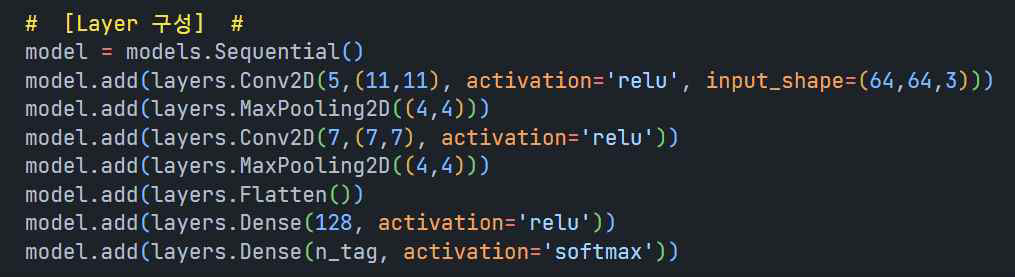

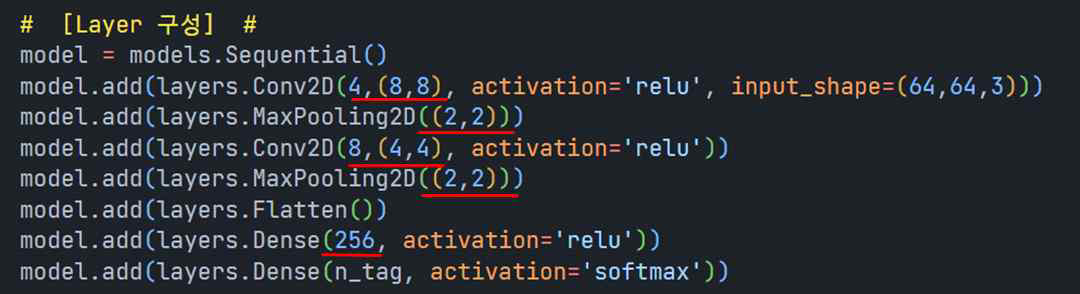

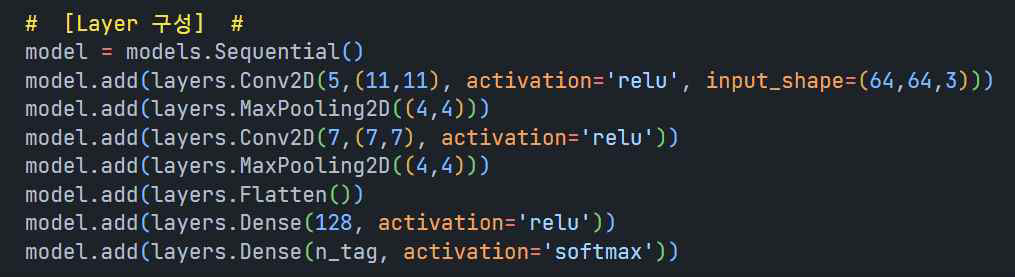

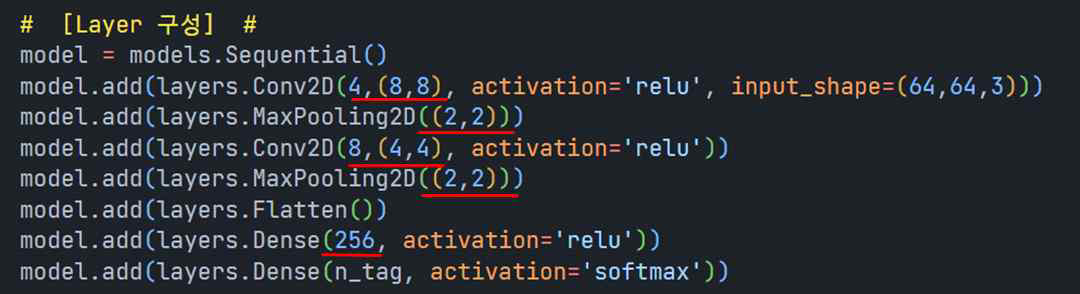

Layer configure

- Result

- Loss=0.7819, Accuracy=0.72

- Result for test data=0.6503
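For reference, a 9 : 1 split like the one listed above could be produced as in the sketch below. It assumes that all samples from the 10 targets are pooled and shuffled before splitting. The function name and the fixed seed are illustrative, and the project's actual split procedure is not described.

```python
import numpy as np

def train_test_split_indices(n_samples, test_ratio=0.1, seed=0):
    """Shuffle sample indices and return (train_indices, test_indices)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_ratio))
    return order[n_test:], order[:n_test]

# Data used per target in the first attempt, times 10 targets.
per_target = 81 + 500 + 500 + 30
n_total = per_target * 10
train_idx, test_idx = train_test_split_indices(n_total)
```

Under these assumptions the first attempt has 11,110 samples in total, of which 9,999 go to training and 1,111 to testing.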

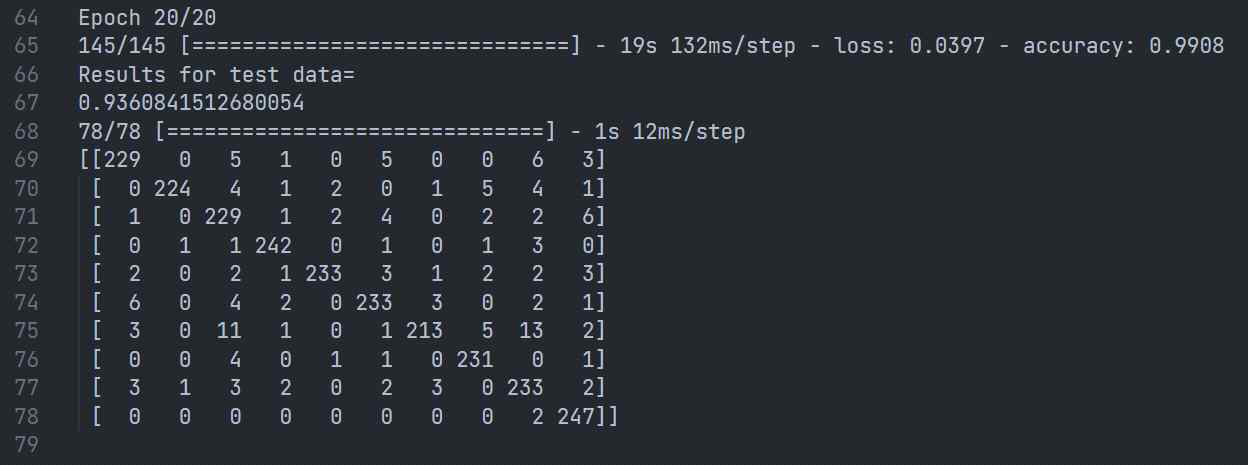

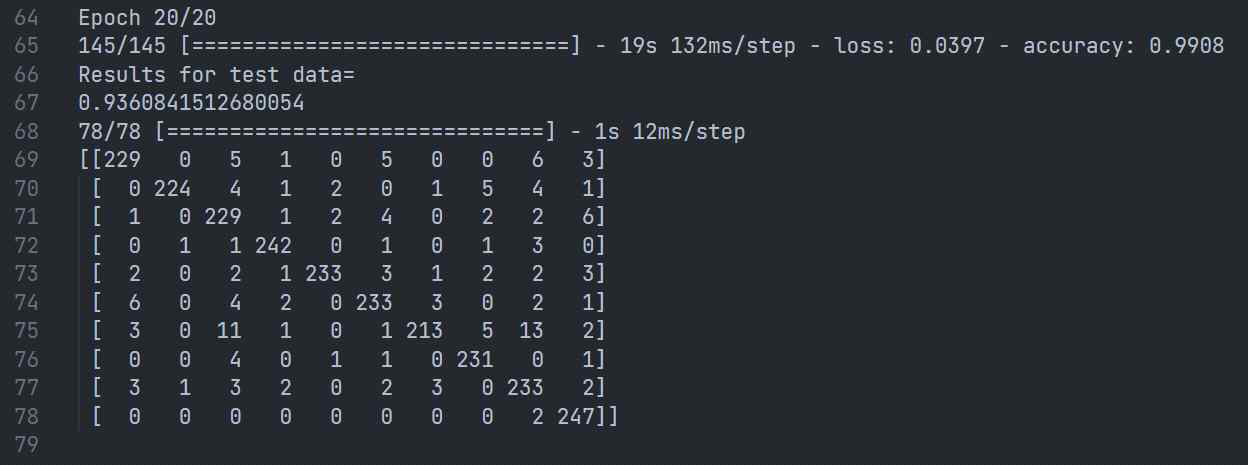

4. 2nd Result

- Train data : Test data = 9 : 1

- Used data = One-letter(81) + Two-letter(1000) + Three-letter(1300) + Actual handwriting(30)

- n.Epoch = 20, Batch size = 150, Learning rate = 0.04

- Layer configuration

- Result

- Loss=0.0397, Accuracy=0.9908

- Result for test data=0.9360

5. Result analysis

Compared to the first result, the second result improved significantly on every metric. I think the reasons for the improvement are as follows.

(5-1) Increasing the data used for learning

- The first attempt used 1,111 data per target, while the second used 2,411, which is more than double.

- Because training and evaluation used more data, finer characteristics of each target's handwriting could be captured.

(5-2) Changes in the artificial neural network layer

- In the second attempt, the convolution mask size and the pooling size were modified.

- The convolution mask was made smaller than before to capture very fine handwriting characteristics.

- The pooling size was reduced to limit the loss of handwriting characteristics during learning.

- In addition, the number of nodes in the fully connected layer was increased from 128 to 256 so that the parameters can hold more information about the handwriting.
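To make the effect of these changes concrete, the sketch below computes how the feature-map size and the fully connected layer's parameter count react to kernel and pooling sizes. Only the 64x64 input and the 128 to 256 node change come from this report. The kernel sizes (5 and 3), pooling sizes (4 and 2), the two-block layout, and the 32 channels are hypothetical values chosen for illustration, since the actual layer configuration is shown only in the figures.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, pool):
    """Output side length of non-overlapping pooling."""
    return size // pool

def feature_side(input_side, blocks):
    """Side length after a sequence of (kernel, pool) conv + pool blocks."""
    side = input_side
    for kernel, pool in blocks:
        side = pool_out(conv_out(side, kernel), pool)
    return side

def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

channels = 32                                      # hypothetical channel count
side_a = feature_side(64, [(5, 4), (5, 4)])        # hypothetical "before" setup
side_b = feature_side(64, [(3, 2), (3, 2)])        # hypothetical "after" setup
params_a = dense_params(side_a * side_a * channels, 128)
params_b = dense_params(side_b * side_b * channels, 256)
```

With these illustrative numbers the final feature map grows from 2x2 to 14x14, so far more spatial detail reaches the fully connected layer, and that layer grows from 16,512 to 1,605,888 parameters.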

(5-3) Increase of batch size and learning rate