Operant conditioning is a classical paradigm in experimental psychology in which animals learn to perform an action in order to obtain a reward. This paradigm makes it possible to extract learning curves and to measure reaction times accurately. Here we describe a fully 3D printable device that performs operant conditioning on freely moving mice while tracking the animal's position in real time.

You can find the 3D printable models here or here.

An exploded-view diagram of the assembly is provided in figures/EXPLODED VIEW.jpg.

We sliced all the components with Cura 4.0 and printed them at medium resolution (100 µm), with a print speed of 90 mm/s and 20% infill. Because the design of the OC chamber is simple, almost any printer is precise enough to print the entire chamber successfully.

A list of all the components can be found here: Bill of materials

OC CHAMBER

CAMERA

DELIVERY

In addition you need:

Connect all the components as shown in figures/diagram_scheme.png.

To install the software on the Raspberry Pi (RPi), download or copy the entire code into a folder on the Raspbian OS.

Python

Arduino

Compile the sketch skinner.ino and upload it to the Arduino UNO.

To calibrate the capacitive sensor thresholds, upload the Arduino sketch skinnerCapacitiveTest. This sketch simply prints the capacitive sensor values to the serial port, which helps in choosing a threshold that reliably detects the mouse's touches.
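Once you have logged some readings, a threshold can be chosen from them. The sketch below is a minimal, hypothetical helper (not part of the repository) that assumes skinnerCapacitiveTest prints one integer reading per line and that a touch raises the reading. It places the threshold halfway between the highest untouched reading and the lowest touched one. The function names `parse_readings` and `suggest_threshold` are illustrative.

```python
def parse_readings(lines):
    """Convert raw serial lines into ints.

    Assumes one integer per line; lines that are not integers are skipped.
    """
    values = []
    for line in lines:
        text = line.strip()
        if text.lstrip("-").isdigit():
            values.append(int(text))
    return values


def suggest_threshold(baseline, touch):
    """Return a threshold halfway between the highest untouched reading
    and the lowest touched reading (assumes touches raise the value)."""
    high_baseline = max(baseline)
    low_touch = min(touch)
    if low_touch <= high_baseline:
        raise ValueError("touch and baseline readings overlap; recalibrate the sensor")
    return (high_baseline + low_touch) / 2


# Example with made-up readings copied from the serial monitor.
baseline = parse_readings(["102\n", "98\n", "105\n"])
touch = parse_readings(["410\n", "395\n", "noise\n", "420\n"])
threshold = suggest_threshold(baseline, touch)
```

The lines can come from the Arduino IDE Serial Monitor or, if you prefer, from pySerial's `Serial.readline()` decoded to text. If the sketch prints several sensors per line, split each line first.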

To run the code, type in a terminal:

cd /home/pi/oc_chamber  # or replace with the path of the folder containing the script

python3 cvConditioningTracking.py

Alternatively, open cvConditioningTracking.py in the IDLE IDE and press F5.

The user can customize some low-level experiment parameters by editing the variables in the first 25 lines of cvConditioningTracking.py. Each parameter is explained in more detail in the file itself.

The chamber can run experiments in two modes: Training mode and Permutation mode. The user selects the mode by editing the parameter task in cvConditioningTracking.py. The details of and differences between the two modes are described in the paper.
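If you want a guard against typos when switching modes, a check like the following could sit next to the settings. This is a hypothetical sketch: only the parameter name `task` comes from this README. The string values `"training"` and `"permutation"` and the helper `normalize_task` are assumptions, so check the comments at the top of cvConditioningTracking.py for the values the program actually accepts.

```python
# Hypothetical values: confirm the real ones in cvConditioningTracking.py.
TRAINING = "training"
PERMUTATION = "permutation"
VALID_TASKS = (TRAINING, PERMUTATION)


def normalize_task(task):
    """Return the canonical mode name, or raise if the value is not recognised."""
    mode = str(task).strip().lower()
    if mode not in VALID_TASKS:
        raise ValueError(f"task must be one of {VALID_TASKS}, got {task!r}")
    return mode


task = "Training"  # edit this line to switch modes
mode = normalize_task(task)
```

Failing early on an unknown value avoids starting a session in an unintended mode.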

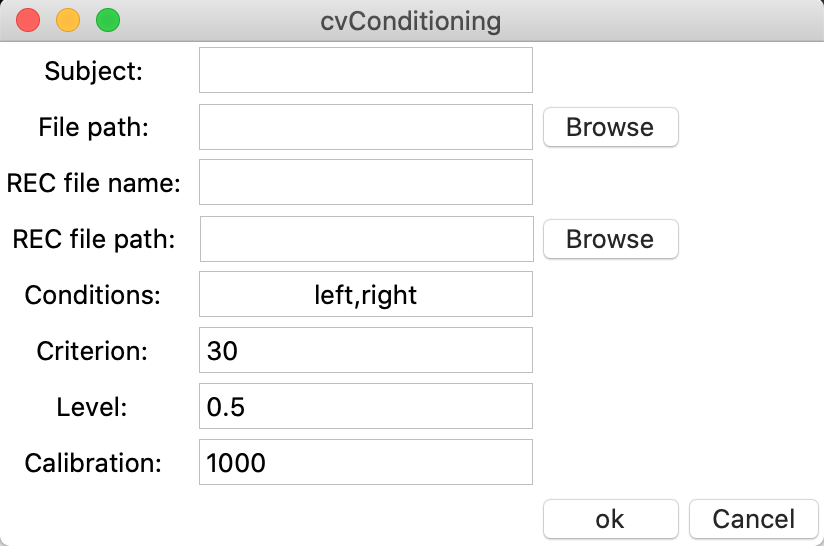

At the start of each experiment, a GUI prompts the user for some basic information about the experiment that is about to run.

Subject: A string containing an identifier for the current mouse. If left empty, no file is saved.

File Path: Location in which to save the experiment files. The output consists of two .txt files containing the experiment dataset, as described in the dataset section, stored inside the DATA and DATAtracker folders. The user can browse for a location on the PC; if the field is left empty, the default is the current working directory.

REC file name: A string containing the name to use for saving the video recording. If left empty, no file is saved.

REC file path: Location in which to save the video recording, which includes an overlay showing the mouse position and the active area. The user can browse for a location on the PC; if the field is left empty, the default is the current working directory.

Conditions: Experimental conditions, i.e. the list of stimuli that will be presented during the experiment. One or more conditions, separated by commas, can be specified:

All the specified conditions are presented in random order.
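The Conditions field is a comma-separated list whose entries are presented in random order. The sketch below shows one way to parse such a field and shuffle it. The condition names and the helpers `parse_conditions` and `randomized_order` are hypothetical. Shuffling the list once is an assumption for illustration; the actual program may instead draw a random condition on every trial.

```python
import random


def parse_conditions(text):
    """Split a comma-separated string into a list of non-empty condition names."""
    return [item.strip() for item in text.split(",") if item.strip()]


def randomized_order(conditions, seed=None):
    """Return a shuffled copy of the conditions; the input list is left unchanged.

    Passing a seed makes the order reproducible, which helps when comparing sessions.
    """
    order = list(conditions)
    random.Random(seed).shuffle(order)
    return order


# Made-up condition names, as they might be typed in the GUI field.
conditions = parse_conditions("A, B,C,, D ")
order = randomized_order(conditions, seed=1)
```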

Criterion: Number of frames for which the mouse must stay in the active area to start a trial (20 frames = 1 s).
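Since 20 frames correspond to 1 s, the Criterion is effectively a dwell time. The sketch below converts between frames and seconds and counts frames spent in the active area. The `DwellCounter` class is hypothetical. It assumes that leaving the active area resets the count and that the count restarts after a trial is triggered; the actual program may handle either case differently.

```python
FPS = 20  # 20 frames = 1 s, as stated for the Criterion field


def frames_to_seconds(frames, fps=FPS):
    return frames / fps


def seconds_to_frames(seconds, fps=FPS):
    return round(seconds * fps)


class DwellCounter:
    """Counts consecutive frames in the active area and fires when the criterion is met.

    Assumption: leaving the active area resets the count to zero.
    """

    def __init__(self, criterion):
        self.criterion = criterion
        self.count = 0

    def update(self, in_active_area):
        """Feed one frame; return True on the frame where the criterion is reached."""
        if in_active_area:
            self.count += 1
        else:
            self.count = 0
        if self.count >= self.criterion:
            self.count = 0  # start counting afresh for the next trial
            return True
        return False


counter = DwellCounter(criterion=3)
fired = [counter.update(x) for x in [True, True, False, True, True, True]]
```

In this example the mouse leaves after two frames, so the trial only fires after three consecutive frames in the active area.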

Level: Vertical position of the line separating the active area of the chamber from the inactive one. The value is normalized to the chamber height: 0 = bottom of the chamber, 1 = top of the chamber, 0.5 (default) = middle of the chamber.
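Because image rows are counted from the top while Level is counted from the bottom, mapping Level to a pixel row requires flipping the axis. This is a minimal sketch that assumes the chamber fills the camera frame vertically with its bottom at the bottom of the image. The `active_above` flag is hypothetical, since this README does not say which side of the line is the active one.

```python
def level_to_row(level, frame_height):
    """Convert the normalized Level (0 = bottom, 1 = top) to an image row index.

    Image rows grow downwards, so level 0 maps to the last row and level 1 to row 0.
    """
    if not 0.0 <= level <= 1.0:
        raise ValueError("level must be between 0 and 1")
    return round((1.0 - level) * (frame_height - 1))


def in_active_area(y, level, frame_height, active_above=True):
    """Return True if pixel row y lies on the (assumed) active side of the line."""
    row = level_to_row(level, frame_height)
    return y < row if active_above else y >= row


line_row = level_to_row(0.5, 481)
```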

Calibration: Number of frames acquired at the beginning of the experiment to calibrate the camera. This calibration step improves tracking of the mouse against the background.
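This README does not describe how the calibration frames are used. One common approach, shown here only as an illustration and not as the program's actual method, is to build a background model from the calibration frames and then locate the animal as the pixels that differ from it. The sketch uses NumPy on grayscale frames. `build_background`, `locate_subject` and the default `diff_threshold` are hypothetical. A per-pixel median tolerates a mouse that moves during calibration. OpenCV's `createBackgroundSubtractorMOG2` is an alternative.

```python
import numpy as np


def build_background(frames):
    """Per-pixel median of the calibration frames (grayscale, H x W)."""
    stack = np.stack(frames).astype(np.float32)
    return np.median(stack, axis=0)


def locate_subject(frame, background, diff_threshold=30.0):
    """Return the (x, y) centroid of pixels that differ from the background, or None.

    The absolute difference works whether the mouse is darker or lighter than the floor.
    """
    diff = np.abs(frame.astype(np.float32) - background)
    mask = diff > diff_threshold
    if not mask.any():
        return None
    ys, xs = np.nonzero(mask)
    return float(xs.mean()), float(ys.mean())


# Synthetic example: a uniform floor and a bright 5x5 "mouse".
calibration = [np.full((40, 60), 50, dtype=np.uint8) for _ in range(10)]
background = build_background(calibration)
frame = np.full((40, 60), 50, dtype=np.uint8)
frame[10:15, 20:25] = 200
position = locate_subject(frame, background)
```

More calibration frames give a more robust background estimate, at the cost of a longer wait before the session starts.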

Other customization options are available by editing the first lines of the following files:

To allow the use of more complex visual stimuli, we provide a skeleton version of the code that works with an LCD display. Running it requires PsychoPy2. To install PsychoPy on the RPi, follow these instructions. Once PsychoPy is installed, open cvConditioningTracking.py in the PsychoPy IDE and run the code. This code contains a module called LCD.py that can be used to show selected images. For now, this code is an untested stub provided for demonstration purposes only.

The Dataset folder contains our raw data from 6 subjects, described in the paper. Each subject's data are stored in a separate folder named according to the scheme CAGE-LABEL-GENO. The folder also includes two Jupyter notebooks showing how to read the .txt output files into pandas DataFrames in Python.
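For scripted analyses outside the notebooks, the folder names and output files can be handled as in the sketch below. The helper names are hypothetical. The comma separator and the column names in the example are assumptions, so check the two notebooks for the actual file layout before relying on this. `parse_subject_folder` assumes that none of the three fields contains a hyphen.

```python
import io
from pathlib import Path

import pandas as pd


def parse_subject_folder(name):
    """Split a folder name following the CAGE-LABEL-GENO scheme into its three fields."""
    parts = name.split("-")
    if len(parts) != 3:
        raise ValueError(f"{name!r} does not follow CAGE-LABEL-GENO")
    cage, label, geno = parts
    return {"cage": cage, "label": label, "geno": geno}


def list_subjects(dataset_dir):
    """Parse every subject folder found directly inside dataset_dir."""
    return [parse_subject_folder(p.name)
            for p in sorted(Path(dataset_dir).iterdir()) if p.is_dir()]


def load_txt(source, sep=","):
    """Read one .txt output file into a DataFrame (the separator is an assumption)."""
    return pd.read_csv(source, sep=sep)


# In-memory stand-in for a real output file; the column names are made up.
example = load_txt(io.StringIO("trial,time\n1,0.5\n2,0.7\n"))
subject = parse_subject_folder("C3-M1-WT")
```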

A detailed description of the apparatus can be found here: 3D printable device for automated operant conditioning in the mouse

For any information or troubleshooting, don't hesitate to contact us at