Update: we have released our code and paper for our new vision system here, which took 1st place in the stowing task at the Amazon Robotics Challenge 2017.

This repository contains toolbox code for our vision system that took 3rd and 4th place at the Amazon Picking Challenge 2016. Includes RGB-D Realsense sensor drivers (standalone and ROS package), deep learning ROS package for 2D object segmentation (training and testing), ROS package for 6D pose estimation. This is the reference implementation of models and code for our paper:

Andy Zeng, Kuan-Ting Yu, Shuran Song, Daniel Suo, Ed Walker Jr., Alberto Rodriguez and Jianxiong Xiao

IEEE International Conference on Robotics and Automation (ICRA) 2017

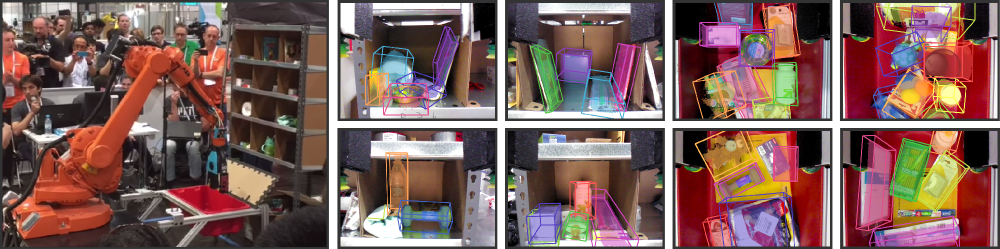

Warehouse automation has attracted significant interest in recent years, perhaps most visibly by the Amazon Picking Challenge (APC). Achieving a fully autonomous pick-and-place system requires a robust vision system that reliably recognizes objects and their 6D poses. However, a solution eludes the warehouse setting due to cluttered environments, self-occlusion, sensor noise, and a large variety of objects. In this paper, we present a vision system that took 3rd- and 4th- place in the stowing and picking tasks, respectively at APC 2016. Our approach leverages multi-view RGB-D data and data-driven, self-supervised learning to overcome the aforementioned difficulties. More specifically, we first segment and label multiple views of a scene with a fully convolutional neural network, and then fit pre-scanned 3D object models to the resulting segmentation to get the 6D object pose. Training a deep neural network for segmentation typically requires a large amount of training data with manual labels. We propose a self-supervised method to generate a large labeled dataset without tedious manual segmentation that could be scaled up to more object categories easily. We demonstrate that our system can reliably estimate the 6D pose of objects under a variety of scenarios.

If you find this code useful in your work, please consider citing:

@inproceedings{zeng2016multi,

title={Multi-view Self-supervised Deep Learning for 6D Pose Estimation in the Amazon Picking Challenge},

author={Zeng, Andy and Yu, Kuan-Ting and Song, Shuran and Suo, Daniel and Walker Jr, Ed and Rodriguez, Alberto and Xiao, Jianxiong},

booktitle={ICRA},

year={2016}

}This code is released under the Simplified BSD License (refer to the LICENSE file for details).

All relevant dataset information and downloads can be found here.

If you have any questions or find any bugs, please let me know: Andy Zeng andyz[at]princeton[dot]edu

Estimates 6D object poses on the sample scene data (in data/sample) with pre-computed object segmentation results from Deep Learning FCN ROS Package:

git clone https://github.com/andyzeng/apc-vision-toolbox.git (Note: source repository size is ~300mb, cloning may take a while)cd apc-vision-toolbox/ros-packages/catkin_ws/src/pose_estimation/src/mdemoA Matlab ROS Package for estimating 6D object poses by model-fitting with ICP on RGB-D object segmentation results. 3D point cloud models of objects and bins can be found here.

ros_packages/.../pose_estimation into your catkin workspace source directory (e.g. catkin_ws/src)pose_estimation/src/make.m to compile ROS custom messages for Matlabpose_estimation/src:nvcc -ptx KNNSearch.curoscorepose_estimation/src/startService.m. At each call (see service request format described in pose_estimation/srv/EstimateObjectPose.srv), the service:roscore in terminalmkdir /path/to/your/data/tmprosrun marvin_convnet detect _read_directory:="/path/to/your/data/tmp"pose_estimation/srcdemo.mstartService.m

demo.mA standalone C++ executable for streaming and capturing data (RGB-D frames and 3D point clouds) in real-time using librealsense. Tested on Ubuntu 14.04 and 16.04 with an Intel® RealSense™ F200 Camera.

See realsense_standalone

cd realsense_standalone

./compile.shAfter compiling, run ./stream to begin streaming RGB-D frames from the Realsense device. While the stream window is active, press the space-bar key to capture and save the current RGB-D frame to disk. Relevant camera information and captured RGB-D frames are saved to a randomly named folder under data.

If your Realsense device is plugged in but remains undetected, try using a different USB port. If that fails, run the following script while the device is unplugged to refresh your USB ports:

sudo ./scripts/resetUSBports.shA C++ ROS package for streaming and capturing data (RGB-D frames and 3D point clouds) in real-time using librealsense. Tested on Ubuntu 14.04 and 16.04 with an Intel® RealSense™ F200 Camera.

This ROS packages comes in two different versions. Which version is installed will depend on your system's available software:

See ros-packages/realsense_camera

ros_packages/.../realsense_camera into your catkin workspace source directory (e.g. catkin_ws/src)realsense_camera/CMakeLists.txt according to your respective dependenciescatkin_makedevel/setup.shroscorerosrun realsense_camera capture/realsense_camera returns data from the sensor (response data format described in realsense_camera/srv/StreamSensor.srv)rosrun realsense_camera capture _display:=TrueA C++ ROS package for deep learning based object segmentation using FCNs (Fully Convolutional Networks) with Marvin, a lightweight GPU-only neural network framework. This package feeds RGB-D data forward through a pre-trained ConvNet to retrieve object segmentation results. The neural networks are trained offline with Marvin (see FCN Training with Marvin).

See ros-packages/marvin_convnet

Realsense ROS Package needs to be compiled first.

CUDA 7.5 and cuDNN 5. You may need to register with NVIDIA. Below are some additional steps to set up cuDNN 5. NOTE We highly recommend that you install different versions of cuDNN to different directories (e.g., /usr/local/cudnn/vXX) because different software packages may require different versions.

LIB_DIR=lib$([[ $(uname) == "Linux" ]] && echo 64)

CUDNN_LIB_DIR=/usr/local/cudnn/v5/$LIB_DIR

echo LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CUDNN_LIB_DIR >> ~/.profile && ~/.profile

tar zxvf cudnn*.tgz

sudo cp cuda/$LIB_DIR/* $CUDNN_LIB_DIR/

sudo cp cuda/include/* /usr/local/cudnn/v5/include/ros_packages/.../marvin_convnet into your catkin workspace source directory (e.g. catkin_ws/src)realsense_camera/CMakeLists.txt according to your respective dependenciescatkin_makedevel/setup.shros_packages/.../marvin_convnet/models/competition/ and run bash script ./download_weights.sh to download our trained weights for object segmentation (trained on our training dataset)marvin_convnet/src/detect.cu: Towards the top of the file, specify the filepath to the network architecture .json file and .marvin weights.tmp in apc-vision-toolbox/data (e.g. apc-vision-toolbox/data/tmp). This where marvin_convnet will read/write RGB-D data. The format of the data in tmp follows the format of the scenes in our datasets and the format of the data saved by Realsense Standalone.save_images and detect. The former retrieves RGB-D data from the Realsense ROS Package and writes to disk in the tmp folder, while the latter reads from disk in the tmp folder and feeds the RGB-D data forward through the FCN and saves the response images to diskrosrun marvin_convnet save_images _write_directory:="/path/to/your/data/tmp" _camera_service_name:="/realsense_camera"rosrun marvin_convnet detect _read_directory:="/path/to/your/data/tmp" _service_name:="/marvin_convnet"tmp folder):rosservice call /marvin_convnet ["elmers_washable_no_run_school_glue","expo_dry_erase_board_eraser"] 0 0Code and models for training object segmentation using FCNs (Fully Convolutional Networks) with Marvin, a lightweight GPU-only neural network framework. Includes network architecture .json files in convnet-training/models and a Marvin data layer in convnet-training/apc.hpp that randomly samples RGB-D images (RGB and HHA) from our segmentation training dataset.

See convnet-training

/usr/local/cudnn/vXX) because different software packages may require different versions.LIB_DIR=lib$([[ $(uname) == "Linux" ]] && echo 64)

CUDNN_LIB_DIR=/usr/local/cudnn/v5/$LIB_DIR

echo LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CUDNN_LIB_DIR >> ~/.profile && ~/.profile

tar zxvf cudnn*.tgz

sudo cp cuda/$LIB_DIR/* $CUDNN_LIB_DIR/

sudo cp cuda/include/* /usr/local/cudnn/v5/include/convnet-training/models/train_shelf_color.jsonmodels/weights/ and run bash script ./download_weights.sh to download VGG pre-trained weights on ImageNet (see Marvin for more pre-trained weights)convnet-training/ and run in terminal ./compile.sh to compile Marvin../marvin train models/rgb-fcn/train_shelf_color.json models/weights/vgg16_imagenet_half.marvin to train a segmentation model on RGB-D data with objects in the shelf (for objects in the tote, use network architecture models/rgb-fcn/train_shelf_color.json).Code used to perform the experiments in our paper; tests the full vision system on the 'Shelf & Tote' benchmark dataset.

See evaluation

apc-vision-toolbox/data/benchmark (e.g. apc-vision-toolbox/data/benchmark/office, `apc-vision-toolbox/data/benchmark/warehouse', etc.)evaluation/getError.m, change the variable benchmarkPath to point to the filepath of your benchmark dataset directoryevaluation/predictions.mat. To compute the accuracy of these predictions against the ground truth labels of the 'Shelf & Tote' benchmark dataset, run evaluation/getError.mAn online WebGL-based tool for annotating ground truth 6D object poses on RGB-D data. Follows an implementation of RGB-D Annotator with small changes. Here's a download link to our exact copy of the annotator.