For the latest benchmark results, please see: https://github.com/AI-performance/embedded-ai.bench/releases

One-click compilation: pull the framework code and compile the library;

One-click conversion: pull the original model, compile the conversion tool, and convert the model;

One-click speed measurement: pull the framework models and measure the speed. The framework models are stored in separate repositories, and the speed test pulls them automatically.

    # tnn
    cd ./tnn
    ./build_tnn_android.sh # follow & read `./tnn/build_tnn_android.sh` if build failed
    cd -

    # mnn
    cd ./mnn
    ./build_mnn_android.sh # follow & read `./mnn/build_mnn_android.sh` if build failed
    cd -

    # ncnn
    cd ./ncnn
    ./build_ncnn_android.sh # follow & read `./ncnn/build_ncnn_android.sh` if build failed
    cd -

    # tflite
    cd ./tflite
    ./build_tflite_android.sh # follow & read `./tflite/build_tflite_android.sh` if build failed
    cd -

    # bench
    python bench.py

    # if execution is okay:
    # ===> edit ./core/global_config.py
    # ===> edit value of `GPU_REPEATS=1000`, `CPU_REPEATS=100`, `WARMUP=20`, `ENABLE_MULTI_THREADS_BENCH=True`
    # ===> ./clean_bench_result.sh
    # ===> python bench.py

    # see benchmark result below
    # ./tnn/*.csv
    # ./mnn/*.csv
    # ./ncnn/*.csv
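To illustrate what the `WARMUP` and `CPU_REPEATS` settings generally mean in a benchmark, here is a minimal sketch of a warm-up-then-measure loop. It is not the project's implementation: the `measure` function and the stand-in workload are assumptions made for illustration, and only the two setting names and their values come from the steps above.

```python
import time

# Values mirror the settings named above; in the project they live in
# ./core/global_config.py. The timing loop itself is an illustrative assumption.
WARMUP = 20
CPU_REPEATS = 100


def measure(run_once, warmup=WARMUP, repeats=CPU_REPEATS):
    """Time `run_once` and return (min, max, avg) latency in milliseconds."""
    # Warm-up runs are executed but not recorded.
    for _ in range(warmup):
        run_once()

    samples_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        samples_ms.append((time.perf_counter() - start) * 1000.0)

    return min(samples_ms), max(samples_ms), sum(samples_ms) / len(samples_ms)


if __name__ == "__main__":
    # Stand-in workload; a real run would invoke a model's inference instead.
    lo, hi, avg = measure(lambda: sum(range(10_000)))
    print(f"min={lo:.3f} ms  max={hi:.3f} ms  avg={avg:.3f} ms")
```

Discarding the warm-up runs keeps one-time costs such as cache fills and lazy initialization out of the reported numbers, and a larger repeat count reduces the influence of individual outliers on the average.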

Currently supported models are limited, see: tnn-models, mnn-models, ncnn-models, tflite-models.
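The benchmark writes its results as CSV files under each framework directory (`./tnn/*.csv`, `./mnn/*.csv`, `./ncnn/*.csv`). As a rough sketch of how those files could be gathered for inspection, the hypothetical helper below reads every CSV it finds. The function name `collect_results` is an assumption, and because the column layout is not described here, rows are kept as raw lists.

```python
import csv
import glob
import os


def collect_results(root=".", frameworks=("tnn", "mnn", "ncnn")):
    """Return {framework: [row, ...]} for every CSV under root/<framework>/."""
    results = {}
    for name in frameworks:
        rows = []
        for path in sorted(glob.glob(os.path.join(root, name, "*.csv"))):
            with open(path, newline="") as f:
                # Rows stay as raw lists because the column layout is not
                # documented here.
                rows.extend(csv.reader(f))
        results[name] = rows
    return results


if __name__ == "__main__":
    for name, rows in collect_results().items():
        print(f"{name}: {len(rows)} rows")
```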

Benchmark result demos: mnn, ncnn, tnn, tflite.

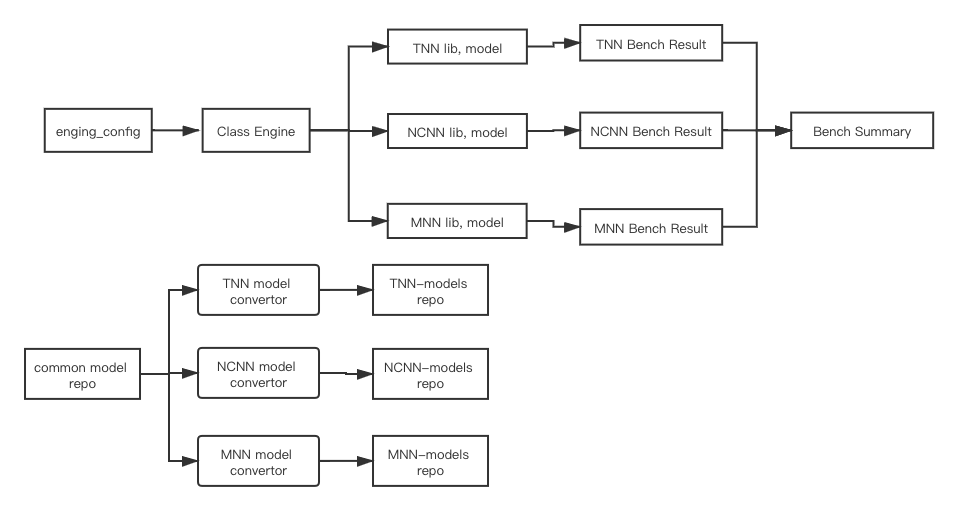

The following figure describes the architecture of this project:

class Engine: creates an instance of each framework from `engine_config`, loads models, compiles the engine library (TODO: to be integrated into the Python script), and runs the benchmark;

BenchSum (TODO): summarizes the benchmark results of each Engine instance;

common model repo (TODO): a repository that stores public original models, such as classic Caffe and TensorFlow models (MobileNetV1/V2, etc.);

An independent model repository for each engine, such as tnn-models. It contains the tnn models converted from the common model repository, the one-click build script for the model converter (TODO), the one-click model conversion script (TODO), and a script that refreshes the model versions in the README with one click;

Each engine's independent model repository is updated independently and regularly (TODO);

During execution, each Engine instance pulls models from its own independent model repository to prepare for the benchmark.
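The relationship between the components described above can be sketched roughly as follows. This is a simplified illustration, not the project's code: the config keys (`name`, `model_repo`), the method names, and the record fields are all hypothetical, and the model pull and the measurement are replaced by placeholders.

```python
class Engine:
    """One benchmarked framework, configured by a plain dict (hypothetical keys)."""

    def __init__(self, engine_config):
        self.name = engine_config["name"]
        self.model_repo = engine_config["model_repo"]
        self.models = []

    def load_models(self):
        # Placeholder: a real implementation would pull from self.model_repo.
        self.models = ["example-model"]
        return self.models

    def run_bench(self):
        # Placeholder: returns one empty record per model instead of measuring.
        return [{"engine": self.name, "model": m, "avg_ms": None} for m in self.models]


class BenchSum:
    """Collects the records produced by several Engine instances."""

    def __init__(self):
        self.records = []

    def add(self, engine):
        self.records.extend(engine.run_bench())

    def by_engine(self):
        grouped = {}
        for rec in self.records:
            grouped.setdefault(rec["engine"], []).append(rec)
        return grouped


if __name__ == "__main__":
    summary = BenchSum()
    for cfg in ({"name": "tnn", "model_repo": "tnn-models"},
                {"name": "mnn", "model_repo": "mnn-models"}):
        engine = Engine(cfg)
        engine.load_models()
        summary.add(engine)
    print(summary.by_engine())
```

Keeping each engine behind the same small interface is what would let a summarizer such as BenchSum treat all frameworks uniformly.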

Parties other than the AI-Performance open source organization are prohibited from publicly publishing benchmark results based on this project. Any such public publication will be considered an infringement, and AI-Performance has the right to pursue legal liability.

The AI-Performance open source organization takes neutrality, fairness, impartiality, and openness as its organizational principles and is committed to creating and formulating benchmark standards in the AI field.

If adb cannot find the phone, the usual cause is that "Developer Mode" is not turned on. If it is confirmed to be on and the phone still cannot be found, trying the following steps in order usually solves the problem:

Change the USB connection setting from "Charging Only" to "Transfer Files";

Switch to a different USB port (the cause may be voltage);

Add the vendor ID to `~/.android/adb_usb.ini`, then run `adb kill-server` followed by `adb start-server`;

Restart the phone;

Restart the computer;

Replace the data cable (this has been the cause before);

On Huawei phones, the HiSuite virtual CD drive can occupy adb. Eject the drive;

Flash the phone.
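When working through the steps above, it helps to check after each one whether adb now sees the phone. The sketch below wraps `adb devices` and parses its output into a dictionary. The function names are made up for illustration, and the parser assumes the usual output format: a `List of devices attached` header followed by one `serial<TAB>state` line per device.

```python
import subprocess


def parse_adb_devices(output):
    """Parse `adb devices` output into {serial: state}."""
    devices = {}
    for line in output.splitlines():
        line = line.strip()
        # Skip blanks, the header, and adb daemon start-up messages.
        if not line or line.startswith("List of devices") or line.startswith("*"):
            continue
        parts = line.split()
        if len(parts) >= 2:
            devices[parts[0]] = parts[1]
    return devices


def list_devices():
    """Run `adb devices` and parse the result; requires adb on PATH."""
    out = subprocess.run(
        ["adb", "devices"], capture_output=True, text=True, check=True
    )
    return parse_adb_devices(out.stdout)
```

An empty result from `list_devices()` means adb still sees nothing. A state of `device` means the phone is ready, while `unauthorized` typically means the USB debugging prompt on the phone has not been accepted yet.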

Before submitting code for the first time, run the following commands to install the hook. Once it is installed, every `git commit` automatically runs the checks configured in `.pre-commit-config.yaml`. For example, the current check is a format check for Python code.

    # The first execution of the hook may be slow
    pre-commit install
    # If it cannot be found, you need to install pre-commit first
    pip install pre-commit
    # If you want to uninstall, execute
    pre-commit uninstall

If you cannot find python3.8, you can install miniconda3 and use the following commands:

    # Automatically install miniconda3 and write it to the current user's environment variables
    .github/workflows/pre-commit-job.sh
    # Before submitting the code, create an environment, named dev_env_py here as an example. If prompted, choose y
    conda create -n dev_env_py python=3.8
    # Activate the newly created environment
    conda activate dev_env_py
    # Reinstall pre-commit
    pre-commit install

If CI hangs, check the GitHub Actions log to see whether the cause is a timeout, for example while running `git clone` on a repository. In that case, you can use `Re-run jobs`.

.github/workflows/unit-test-job.sh