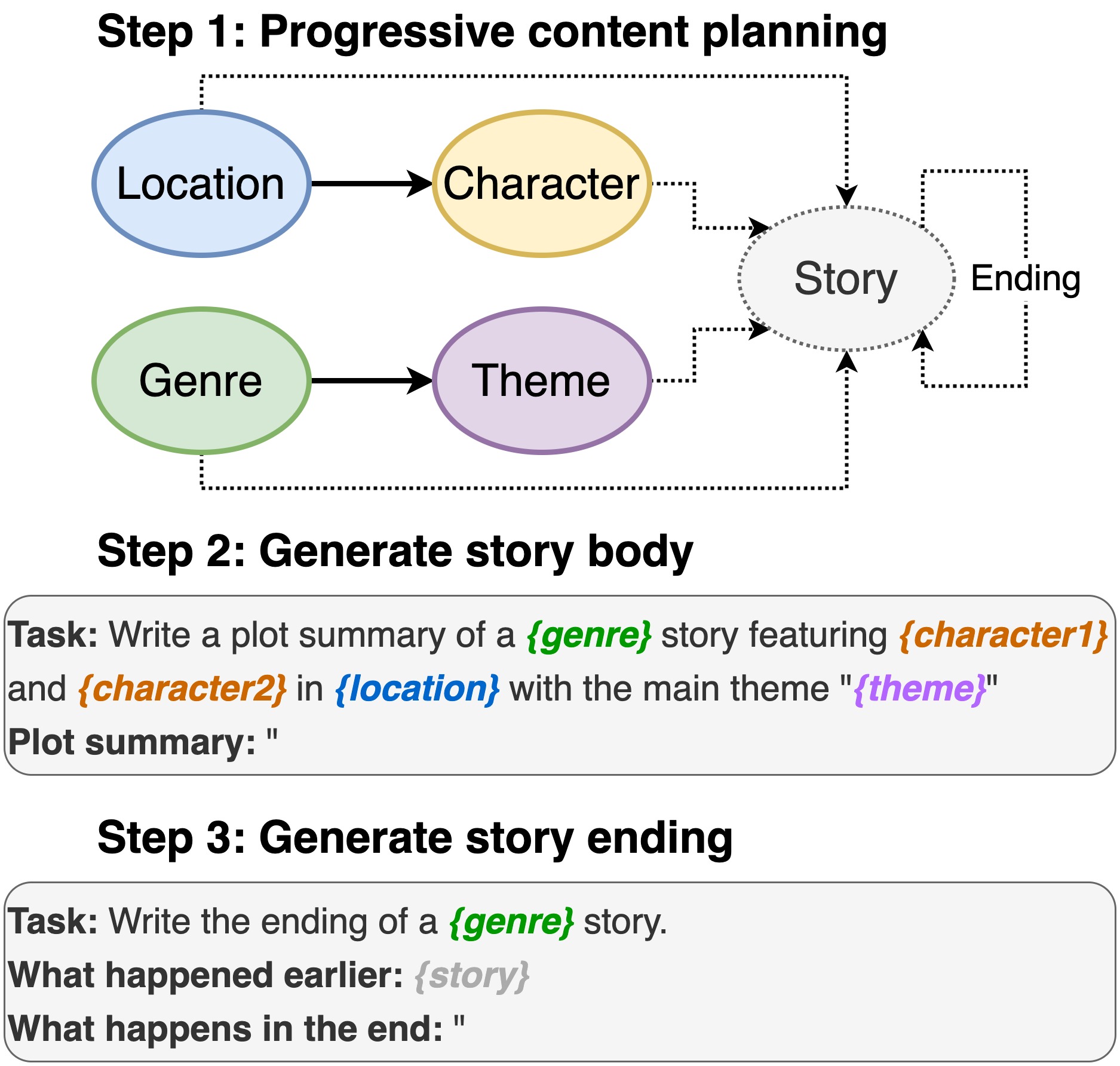

This repository contains the code for Plot Writing From Pre-Trained Language Models, to appear in INLG 2022. The paper introduces a method that first prompts a pre-trained language model (PLM) to compose a content plan. We then generate the story’s body and ending conditioned on the content plan. Finally, we take a generate-and-rank approach, using additional PLMs to rank the generated (story, ending) pairs.
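The generate-and-rank step can be pictured with a minimal sketch. Nothing below is the repository's API: `rank_candidates`, `length_score` and `mentions_location_score` are hypothetical names, and the two scorers are toy placeholders standing in for the PLM-based rankers described in the paper.

```python
from typing import Callable, List, Tuple

Candidate = Tuple[str, str]  # (story body, ending)


def rank_candidates(
    candidates: List[Candidate],
    scorers: List[Callable[[str, str], float]],
) -> List[Tuple[float, Candidate]]:
    """Sum every scorer's score for each (story, ending) pair, best first."""
    scored = []
    for body, ending in candidates:
        total = sum(scorer(body, ending) for scorer in scorers)
        scored.append((total, (body, ending)))
    # Sort on the score only, so ties keep their generation order.
    return sorted(scored, key=lambda item: item[0], reverse=True)


# Toy scorers (assumptions for illustration, not the paper's rankers).
def length_score(body: str, ending: str) -> float:
    return float(len(body.split()) + len(ending.split()))


def mentions_location_score(body: str, ending: str) -> float:
    # Rewards stories that mention a hypothetical planned location.
    return 1.0 if "lighthouse" in body.lower() else 0.0


candidates = [
    ("Mara walked to the market.", "She went home."),
    ("Mara climbed the old lighthouse at dusk.", "The lamp finally lit."),
]
ranked = rank_candidates(candidates, [length_score, mentions_location_score])
best_score, (best_body, best_ending) = ranked[0]
print(best_body, best_ending)
```

Summing independent scorers keeps each ranking signal separate, so a scorer can be swapped or reweighted without touching the generation code.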

This repo relies heavily on DINO. Since we made some minor changes, we include the complete code for ease of use.

The content plan elements include location, cast, genre and theme.

    sh run_plot_static_gpu.sh

The content plan elements are generated once and stored. When generating the stories, the system samples from the offline-generated plot elements.
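As a rough illustration of sampling from stored plot elements, the sketch below draws one value per element type. The `PLOT_ELEMENTS` dictionary, its contents, and `sample_content_plan` are assumptions made for this example; the repository's actual storage format and sampling code may differ.

```python
import random
from typing import Dict, List

# Hypothetical stand-in for the stored, offline-generated plot elements.
PLOT_ELEMENTS: Dict[str, List[str]] = {
    "location": ["a fishing village", "an orbital station"],
    "cast": ["a retired detective", "twin sisters"],
    "genre": ["mystery", "science fiction"],
    "theme": ["forgiveness", "ambition"],
}


def sample_content_plan(
    elements: Dict[str, List[str]], rng: random.Random
) -> Dict[str, str]:
    """Pick one stored option for each element type."""
    return {name: rng.choice(options) for name, options in elements.items()}


rng = random.Random(0)  # fixed seed so repeated runs give the same plan
plan = sample_content_plan(PLOT_ELEMENTS, rng)
print(plan)
```

Passing an explicit `random.Random` instance keeps the sampling reproducible without affecting the global random state.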

    sh run_plot_dynamic_gpu_single.sh
    sh run_plot_dynamic_gpu_batch.sh

To run without a GPU, add `--no_cuda` to all the commands that call `dino.py`.

Requires Python 3. Tested on Python 3.6 and 3.8.

    pip3 install -r requirements.txt

Then download the required NLTK data from Python:

    import nltk
    nltk.download('punkt')
    nltk.download('stopwords')

If you make use of the code in this repository, please cite the following paper:

    @inproceedings{jin-le-2022-plot,
        title = "Plot Writing From Pre-Trained Language Models",
        author = "Jin, Yiping and Kadam, Vishakha and Wanvarie, Dittaya",
        booktitle = "Proceedings of the 15th International Natural Language Generation conference",
        year = "2022",
        address = "Maine, USA",
        publisher = "Association for Computational Linguistics"
    }

If you use DINO for other tasks, please also cite the following paper:

    @article{schick2020generating,
        title={Generating Datasets with Pretrained Language Models},
        author={Timo Schick and Hinrich Schütze},
        journal={Computing Research Repository},
        volume={arXiv:2104.07540},
        url={https://arxiv.org/abs/2104.07540},
        year={2021}
    }