【2024.06.13】 Added support for the MiniCPM-Llama3-V-2_5 model. Set the environment variables MODEL_NAME=minicpm-v PROMPT_NAME=minicpm-v DTYPE=bfloat16

【2024.06.12】 Added support for the GLM-4V model. Set the environment variables MODEL_NAME=glm-4v PROMPT_NAME=glm-4v DTYPE=bfloat16; see glm4v for test examples.

【2024.06.08】 Added support for the Qwen2 model. Set the environment variables MODEL_NAME=qwen2 PROMPT_NAME=qwen2

【2024.06.05】 Added support for the GLM4 model. Set the environment variables MODEL_NAME=chatglm4 PROMPT_NAME=chatglm4

【2024.04.18】 Added support for the CodeQwen model; see the SQL Q&A demo.

【2024.04.16】 Added support for rerank (reordering) models; see the usage instructions.

【Qwen1.5】 Set the environment variables MODEL_NAME=qwen2 PROMPT_NAME=qwen2

For more news and history please go here
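As a rough illustration of the settings listed in the news entries, the sketch below collects them in a dictionary and renders them as `KEY=VALUE` lines. The dictionary and the `render_env` helper are hypothetical and not part of this project; how the variables are actually supplied (for example through shell exports or a configuration file) is not specified here.

```python
# Values copied from the news entries above; the dictionary itself is illustrative.
MODEL_SETTINGS = {
    "MiniCPM-Llama3-V-2_5": {
        "MODEL_NAME": "minicpm-v",
        "PROMPT_NAME": "minicpm-v",
        "DTYPE": "bfloat16",
    },
    "GLM-4V": {"MODEL_NAME": "glm-4v", "PROMPT_NAME": "glm-4v", "DTYPE": "bfloat16"},
    "Qwen2": {"MODEL_NAME": "qwen2", "PROMPT_NAME": "qwen2"},
    "GLM4": {"MODEL_NAME": "chatglm4", "PROMPT_NAME": "chatglm4"},
}


def render_env(model: str) -> str:
    """Return KEY=VALUE lines for one model (hypothetical helper)."""
    settings = MODEL_SETTINGS[model]
    return "\n".join(f"{key}={value}" for key, value in settings.items())


print(render_env("GLM-4V"))
```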

Main content of this project

This project implements a unified backend interface for inference with open-source large language models. Its responses are consistent with OpenAI's, and it has the following features:

Calls various open-source models through an OpenAI ChatGPT-style API

Supports streaming responses to achieve a typewriter effect

Implements text embedding models to support document knowledge Q&A

Supports the various features of LangChain, a development tool for large language models

By simply modifying environment variables, an open-source model can serve as a drop-in replacement for ChatGPT, providing backend support for a variety of applications

Supports loading self-trained LoRA models

⚡ Supports vLLM inference acceleration and concurrent request handling
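To make the streaming feature more concrete, here is a minimal sketch of how a client could pull text fragments out of an OpenAI-style streaming response. It assumes the backend emits the same server-sent event format as OpenAI (`data: {...}` lines ending with `data: [DONE]`), which the text above implies but does not spell out. The `iter_stream_text` helper and the sample lines are made up for illustration.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent event lines."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


# Hand-written sample lines standing in for a real HTTP response body.
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]

for fragment in iter_stream_text(sample):
    print(fragment, end="", flush=True)
print()
```

Printing each fragment as it arrives, without a trailing newline, is what produces the typewriter effect.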

| Section | Description |
|---|---|
| Supported models | Open-source models supported by this project, with brief information |
| Startup method | Environment configuration and startup commands for launching models |
| ⚡ vLLM startup method | Environment configuration and startup commands for launching models with vLLM |
| Call method | How to call the model after it has started |
| ❓ FAQ | Answers to frequently asked questions |

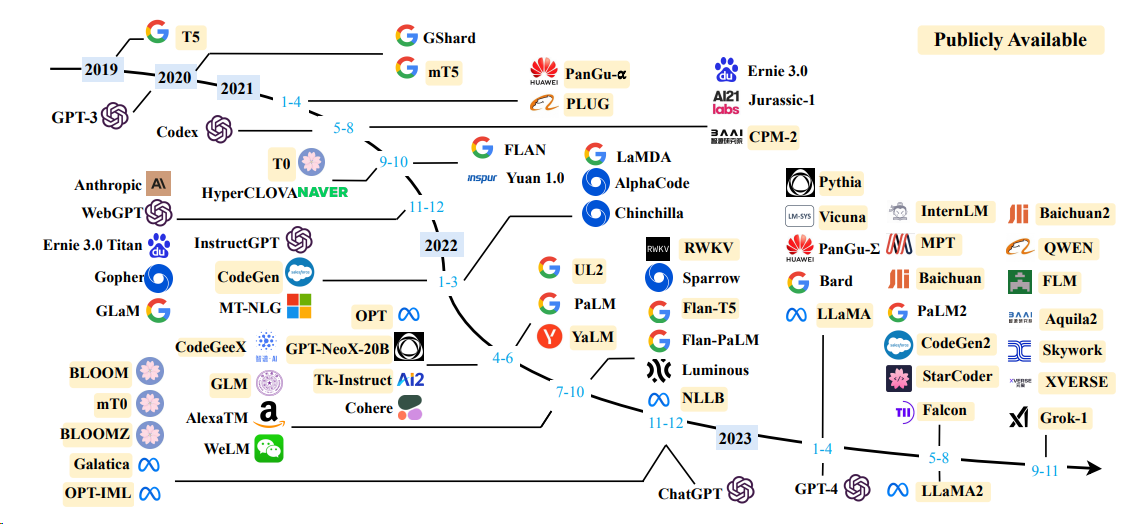

Language Model

| Model | Parameter sizes |
|---|---|
| Baichuan | 7B/13B |
| ChatGLM | 6B |
| DeepSeek | 7B/16B/67B/236B |
| InternLM | 7B/20B |
| LLaMA | 7B/13B/33B/65B |
| LLaMA-2 | 7B/13B/70B |
| LLaMA-3 | 8B/70B |
| Qwen | 1.8B/7B/14B/72B |
| Qwen1.5 | 0.5B/1.8B/4B/7B/14B/32B/72B/110B |
| Qwen2 | 0.5B/1.5B/7B/57B/72B |
| Yi (1/1.5) | 6B/9B/34B |

For details, please refer to vLLM startup method and transformers startup method.

Embedding Model

| Model | Dimension | Weight link |
|---|---|---|
| bge-large-zh | 1024 | bge-large-zh |
| m3e-large | 1024 | moka-ai/m3e-large |
| text2vec-large-chinese | 1024 | text2vec-large-chinese |
| bce-embedding-base_v1 (recommended) | 768 | bce-embedding-base_v1 |
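For document Q&A, embedding vectors like these are commonly compared with cosine similarity. The sketch below ranks documents against a query that way. Cosine similarity is an assumption about typical usage rather than something this project prescribes, and the toy 3-dimensional vectors stand in for the 768- or 1024-dimensional vectors the models above produce.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors of equal dimension."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def rank_documents(
    query_vec: Sequence[float], doc_vecs: Sequence[Sequence[float]]
) -> List[Tuple[int, float]]:
    """Return (document index, score) pairs, best match first."""
    scores = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(doc_vecs)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy vectors; real ones would come from the embeddings endpoint.
query = [1.0, 0.0, 0.0]
docs = [[0.0, 1.0, 0.0], [2.0, 0.0, 0.0], [1.0, 1.0, 0.0]]
print([index for index, _ in rank_documents(query, docs)])  # [1, 2, 0]
```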

OPENAI_API_KEY: Any string will do

OPENAI_API_BASE: The address of the running backend, for example http://192.168.0.xx:80/v1
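A client script might read these two variables along the following lines. This is only a sketch: the `load_client_settings` helper is hypothetical, and the `"EMPTY"` fallback for the key simply mirrors the placeholder used in the call examples in this document.

```python
import os
from typing import Mapping, Optional


def load_client_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Build client keyword arguments from environment variables (hypothetical helper)."""
    env = os.environ if env is None else env
    base_url = env.get("OPENAI_API_BASE")
    if not base_url:
        raise KeyError("OPENAI_API_BASE is not set")
    # Any string is accepted as the key, so fall back to a placeholder.
    return {"api_key": env.get("OPENAI_API_KEY", "EMPTY"), "base_url": base_url}


# A plain dict stands in for the process environment in this example.
settings = load_client_settings({"OPENAI_API_BASE": "http://192.168.0.xx:80/v1"})
print(settings)
```

The resulting dictionary uses the same `api_key` and `base_url` argument names that the `OpenAI` client accepts, so it could be passed as `OpenAI(**settings)`.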

cd streamlit-demo

pip install -r requirements.txt

streamlit run streamlit_app.py

from openai import OpenAI

client = OpenAI(
    api_key="EMPTY",
    base_url="http://192.168.20.59:7891/v1/",
)

# Chat completion API
chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "Hello",
        }
    ],
    model="gpt-3.5-turbo",
)
print(chat_completion)
# Hello! I am the AI assistant ChatGLM3-6B. Nice to meet you; feel free to ask me any questions.

# Streaming variant:
# stream = client.chat.completions.create(
#     messages=[
#         {
#             "role": "user",
#             "content": "What should I do if I catch a cold?",
#         }
#     ],
#     model="gpt-3.5-turbo",
#     stream=True,
# )
# for part in stream:
#     print(part.choices[0].delta.content or "", end="", flush=True)

from openai import OpenAI

client = OpenAI(
    api_key="EMPTY",
    base_url="http://192.168.20.59:7891/v1/",
)

# Completion API
completion = client.completions.create(
    model="gpt-3.5-turbo",
    prompt="Hello",
)
print(completion)
# Hello! I am the AI assistant ChatGLM-6B. Nice to meet you; feel free to ask me any questions.

from openai import OpenAI

client = OpenAI (

api_key = "EMPTY" ,

base_url = "http://192.168.20.59:7891/v1/" ,

)

# compute the embedding of the text

embedding = client . embeddings . create (

input = "你好" ,

model = "text-embedding-ada-002"

)

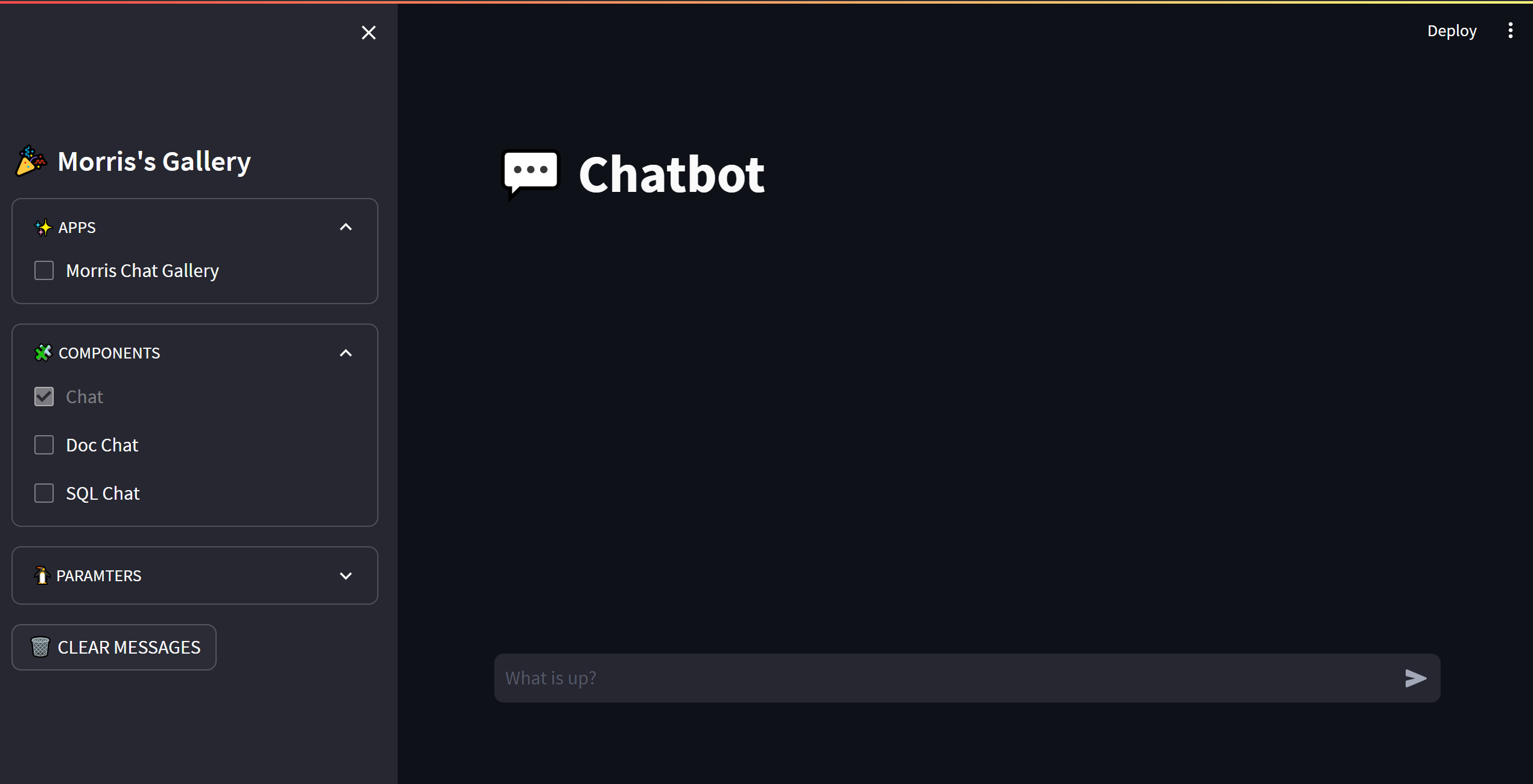

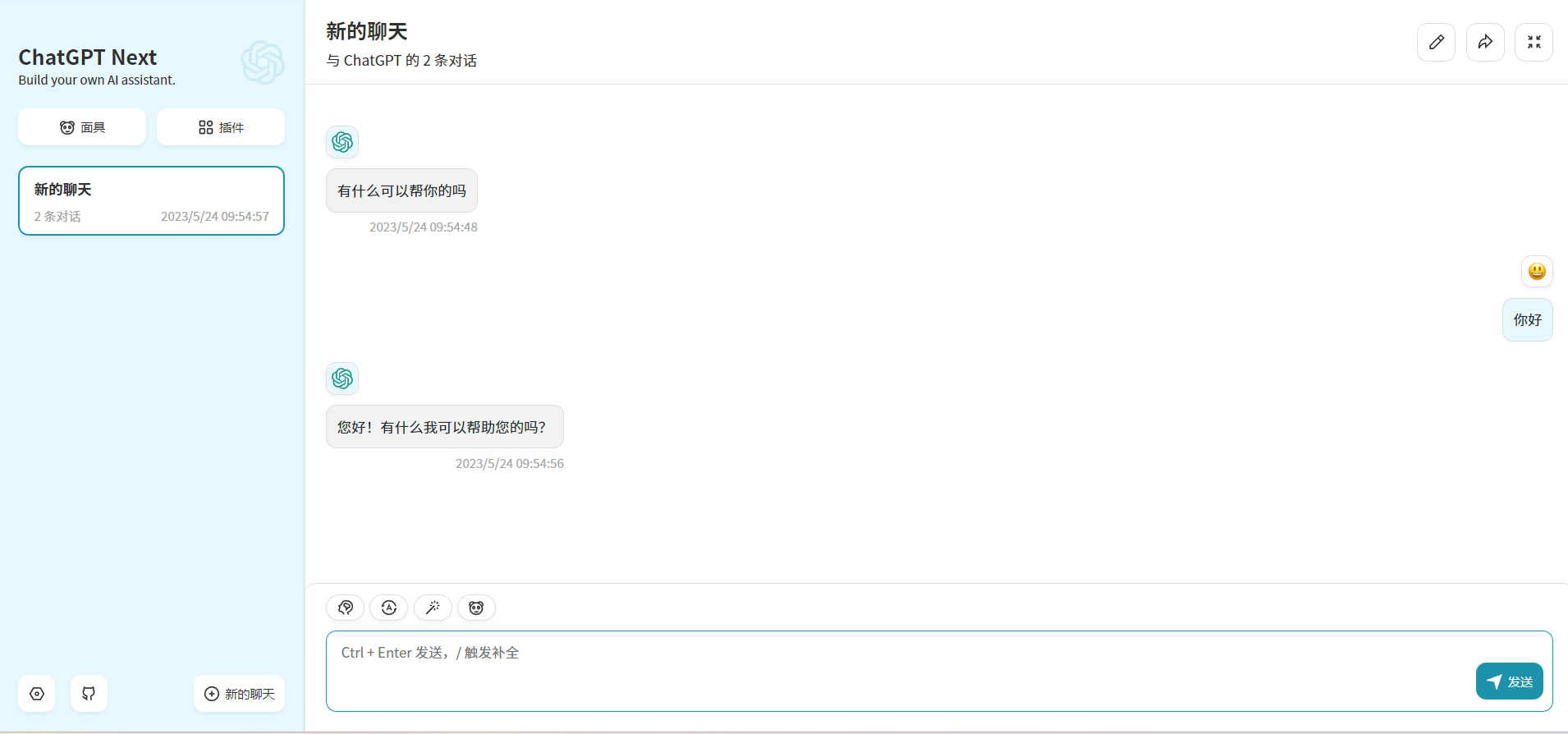

print ( embedding ) By modifying OPENAI_API_BASE environment variable, most chatgpt applications and front-end projects can be seamlessly connected!

docker run -d -p 3000:3000 \
    -e OPENAI_API_KEY="sk-xxxx" \
    -e BASE_URL="http://192.168.0.xx:80" \
    yidadaa/chatgpt-next-web

# Add the following environment variables to the api and worker services in docker-compose.yml
OPENAI_API_BASE: http://192.168.0.xx:80/v1
DISABLE_PROVIDER_CONFIG_VALIDATION: 'true'

This project is licensed under the Apache 2.0 license; see the LICENSE file for more information.

ChatGLM: An Open Bilingual Dialogue Language Model

BLOOM: A 176B-Parameter Open-Access Multilingual Language Model

LLaMA: Open and Efficient Foundation Language Models

Efficient and Effective Text Encoding for Chinese LLaMA and Alpaca

Phoenix: Democratizing ChatGPT across Languages

MOSS: An open-sourced plugin-augmented conversational language model

FastChat: An open platform for training, serving, and evaluating large language model based chatbots

LangChain: Building applications with LLMs through composability

ChuanhuChatgpt