Open Interface

Full Autopilot for All Computers Using LLMs


- Self-drives computers by sending user requests to an LLM backend (GPT-4V, etc.) to figure out the required steps.

- Automatically executes the steps by simulating keyboard and mouse input.

- Course-corrects by sending the LLM a current screenshot of the computer as needed.
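Taken together, the three steps above form a loop: ask, act, look again. The following is a minimal Python sketch of that loop, not Open Interface's actual code. The callables `take_screenshot`, `ask_llm`, and `execute_step`, the `LLMReply` shape, and the `max_rounds` cap are all hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LLMReply:
    """Hypothetical shape of one LLM response: steps to run and a done flag."""
    steps: list[str]
    done: bool


def run_request(
    goal: str,
    take_screenshot: Callable[[], bytes],
    ask_llm: Callable[[str, bytes, list[str]], LLMReply],
    execute_step: Callable[[str], None],
    max_rounds: int = 10,
) -> list[str]:
    """Drive the computer toward `goal`, re-checking the screen each round.

    Returns every step that was executed, in order.
    """
    history: list[str] = []
    for _ in range(max_rounds):
        # 1. Send the goal, a fresh screenshot and the steps done so far.
        reply = ask_llm(goal, take_screenshot(), history)
        # 2. Execute the proposed steps (in the app: simulated keyboard/mouse input).
        for step in reply.steps:
            execute_step(step)
            history.append(step)
        # 3. Stop once the LLM reports the goal is reached; otherwise loop
        #    again so it can course-correct from the next screenshot.
        if reply.done:
            break
    return history
```

The `max_rounds` cap is a design choice for the sketch: it keeps a model that never reports success from looping forever.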

Self-Driving Software for All Your Computers

Demo

"Make me a meal plan in Google Docs"

More Demos

Install

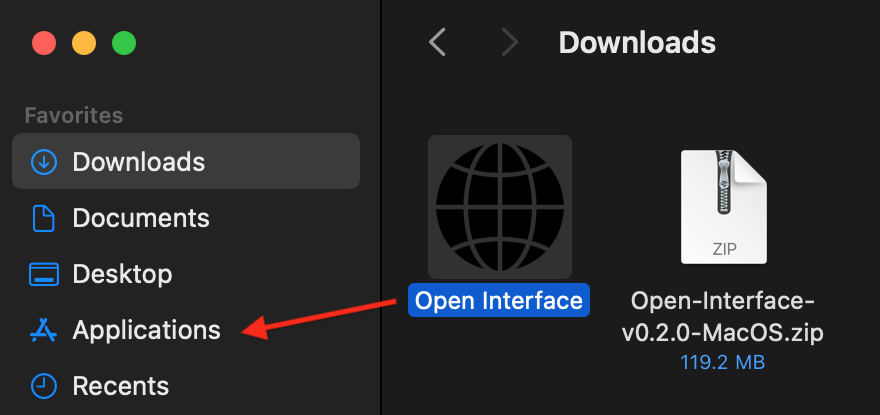

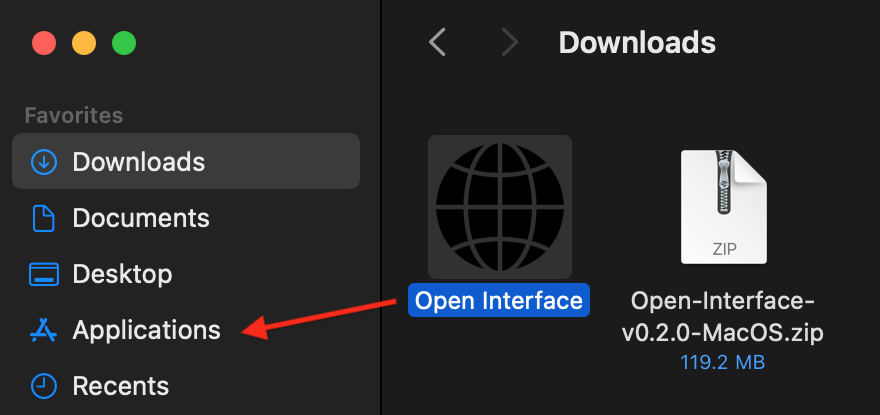

MacOS

- Download the MacOS binary from the latest release.

- Unzip the file and move Open Interface to the Applications folder.

Apple Silicon M-Series Macs

-

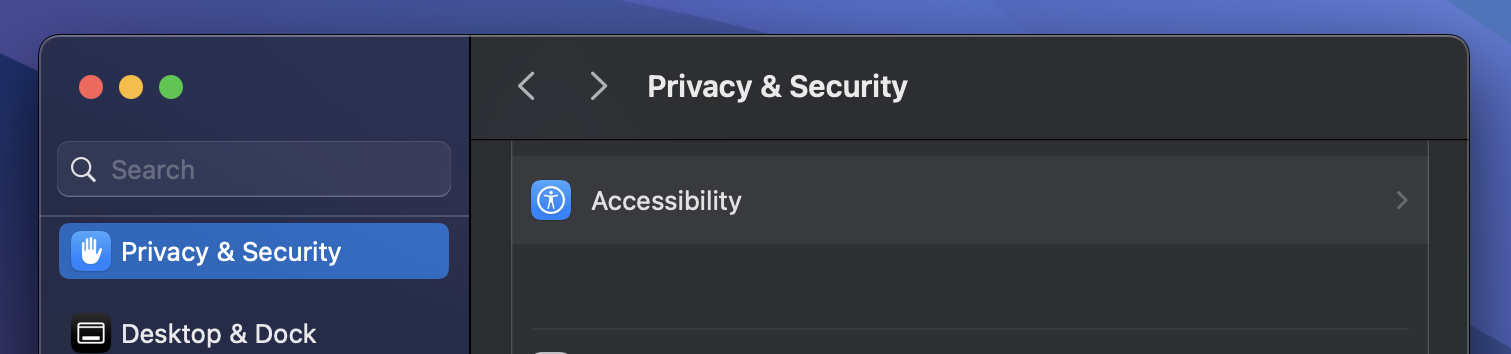

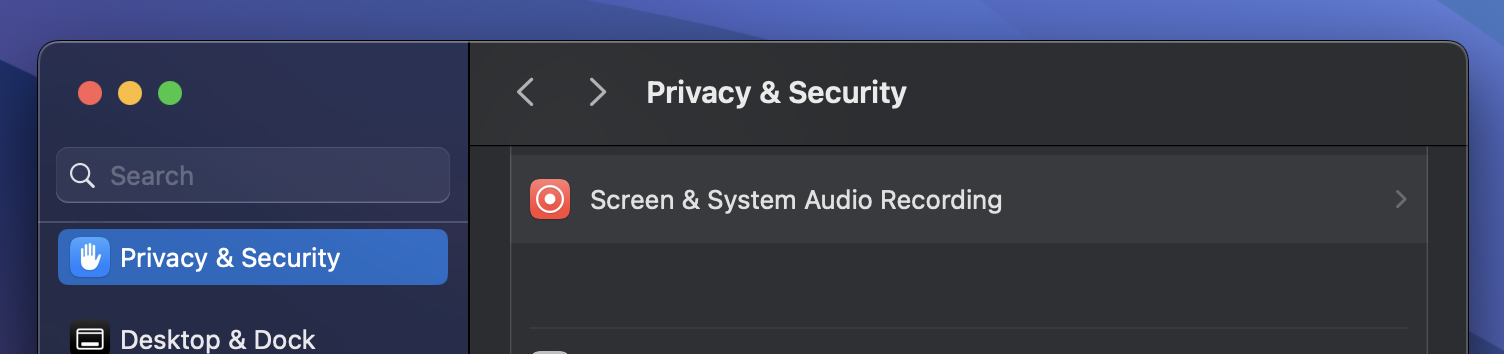

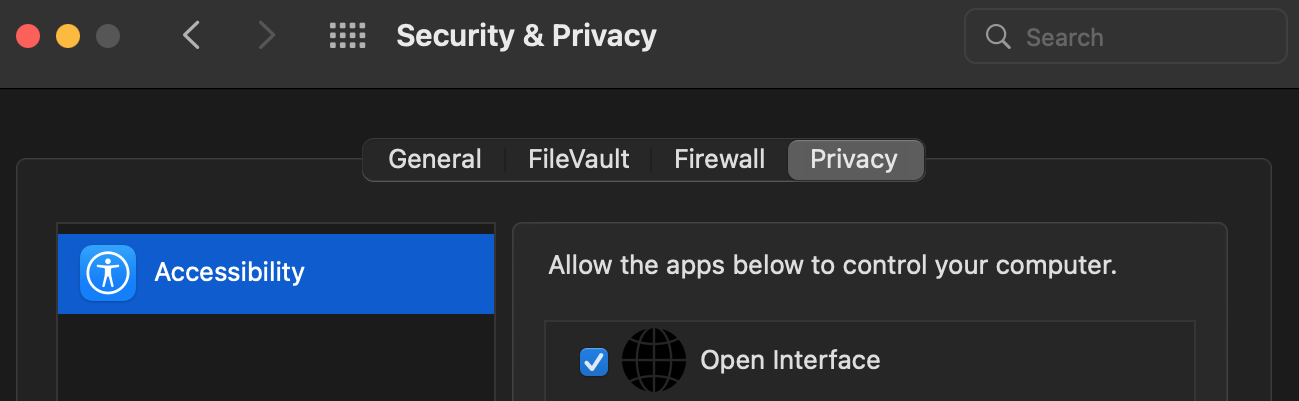

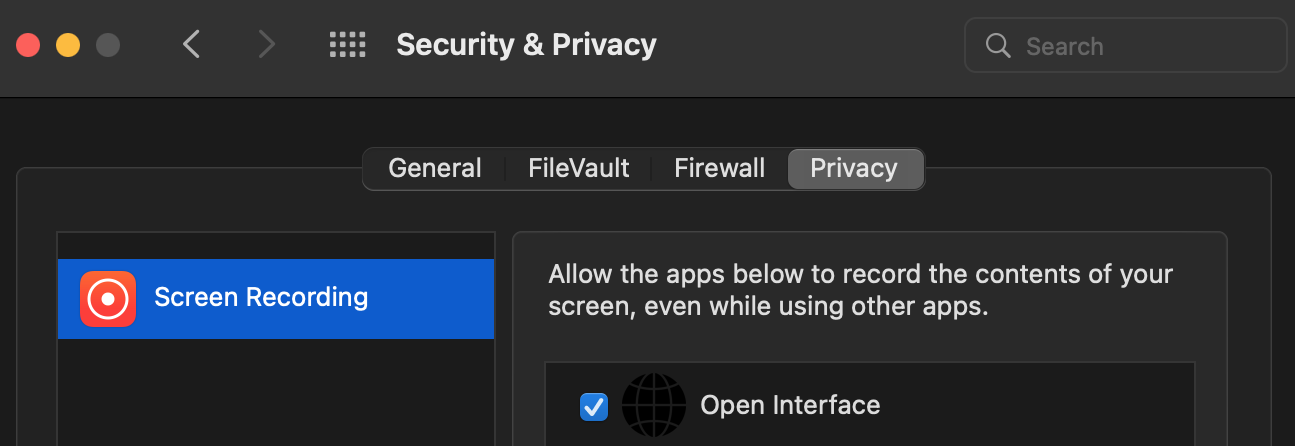

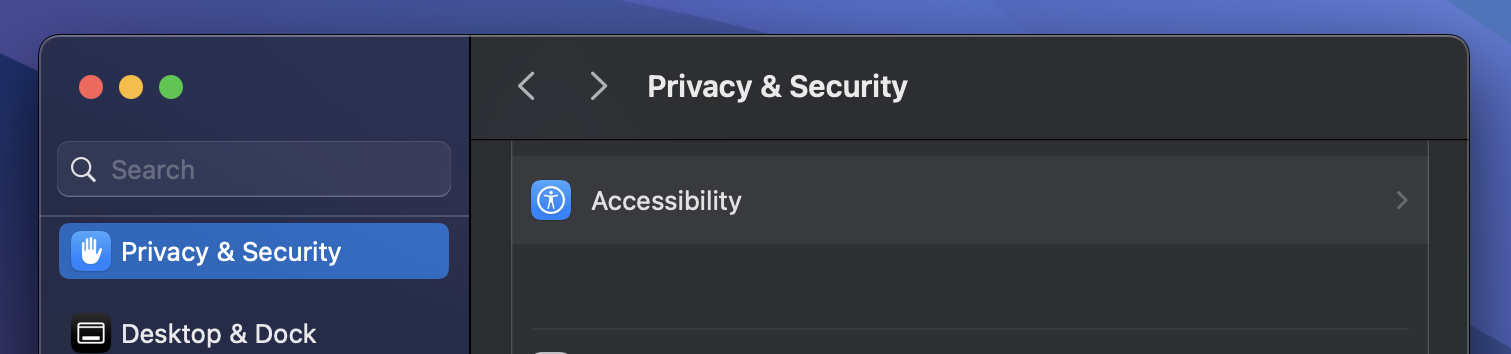

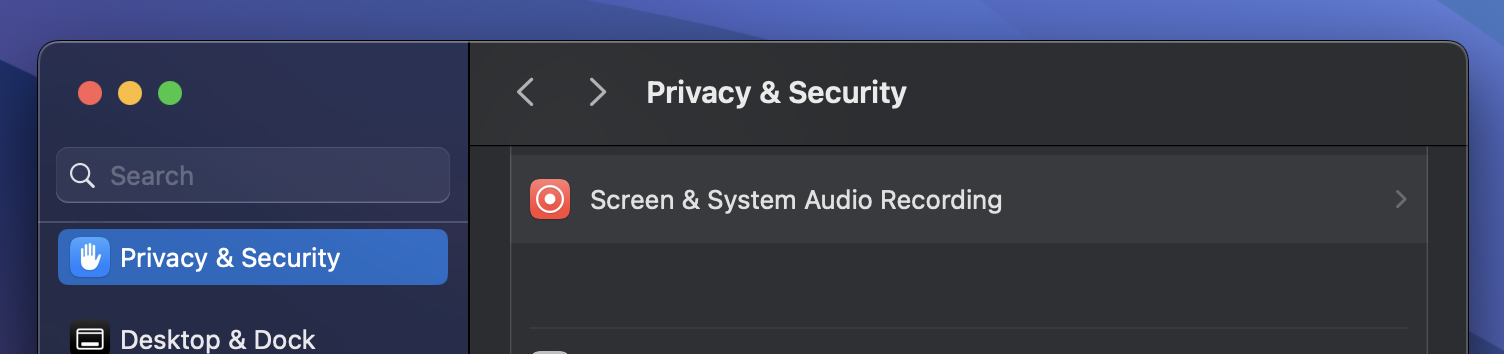

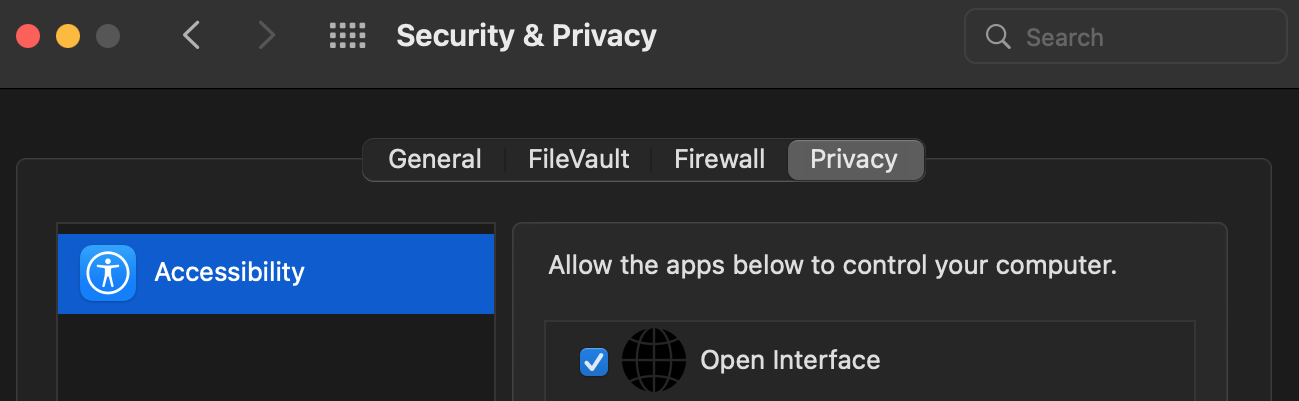

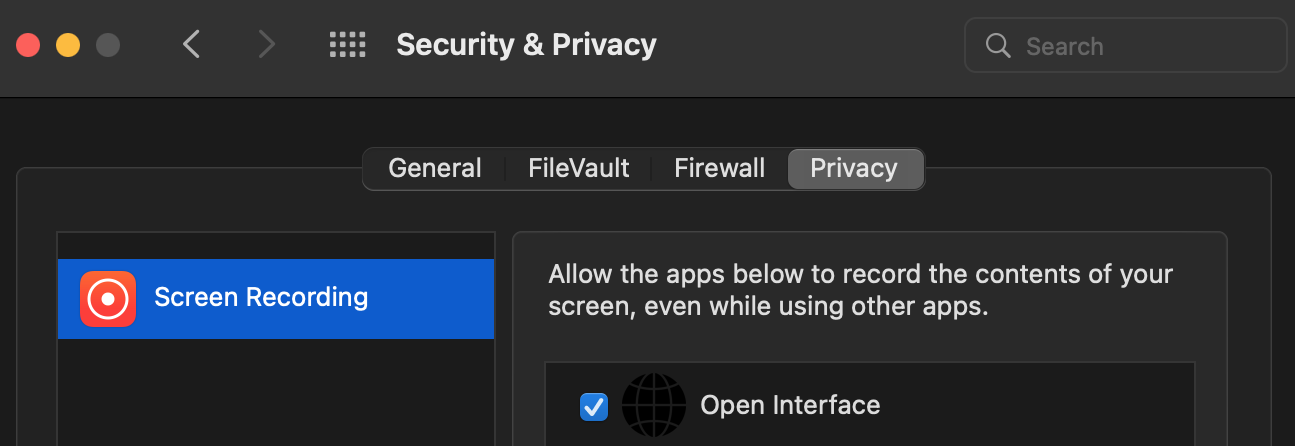

Open Interface will ask you for Accessibility access to operate your keyboard and mouse for you, and Screen Recording access to take screenshots to assess its progress.

- If it doesn't, manually add these permissions via System Settings -> Privacy and Security.

Intel Macs

- Launch the app from the Applications folder.

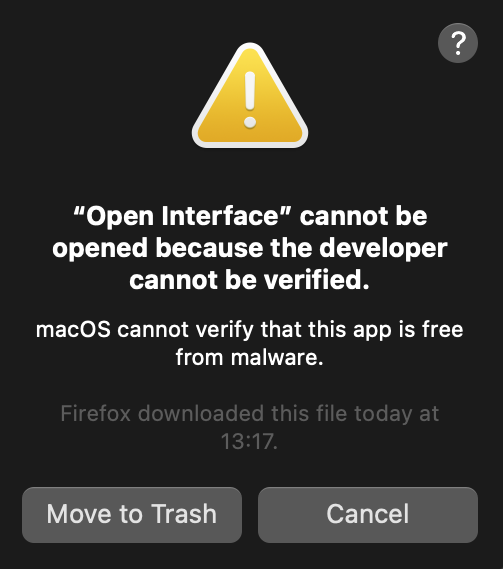

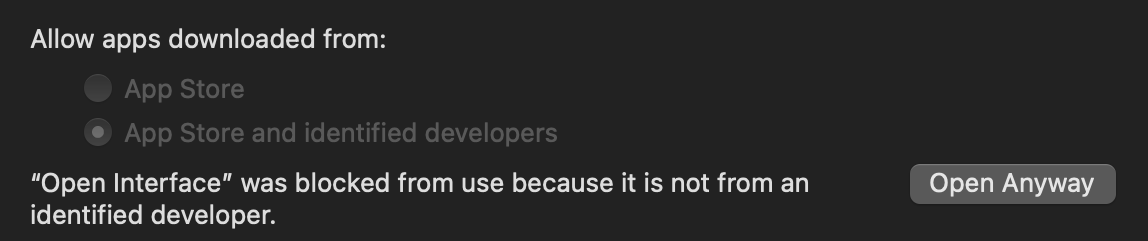

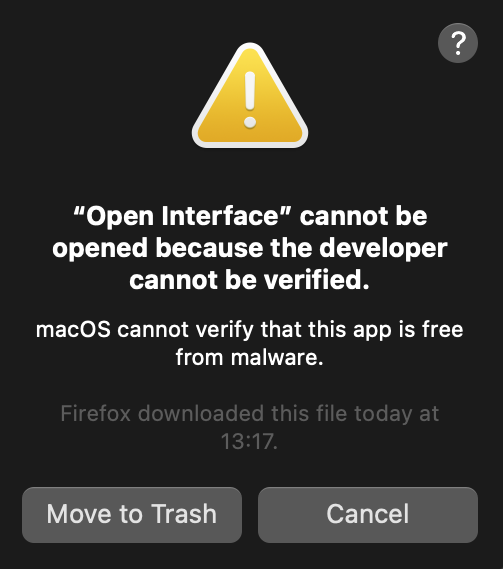

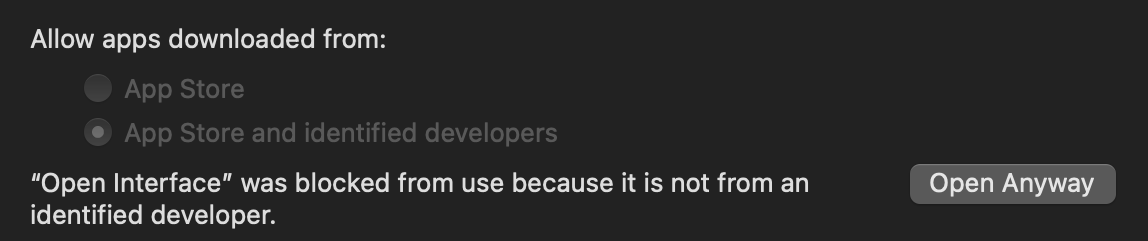

You might face the standard Mac "Open Interface cannot be opened" error.

In that case, press "Cancel".

Then go to System Preferences -> Security and Privacy -> Open Anyway.

- Open Interface will also need Accessibility access to operate your keyboard and mouse for you, and Screen Recording access to take screenshots to assess its progress.

- Lastly, check out the Setup section to connect Open Interface to LLMs (OpenAI GPT-4V).

Linux

- The Linux binary has so far been tested only on Ubuntu 20.04.

- Download the Linux zip file from the latest release.

- Extract the executable and run it from the Terminal (escape or quote the space in the file name):

./Open\ Interface

- Check out the Setup section to connect Open Interface to LLMs (OpenAI GPT-4V).

Windows

- The Windows binary has been tested on Windows 10.

- Download the Windows zip file from the latest release.

- Unzip the folder, move the .exe to the desired location, and double-click it to open. Voilà.

- Check out the Setup section to connect Open Interface to LLMs (OpenAI GPT-4V).

Setup

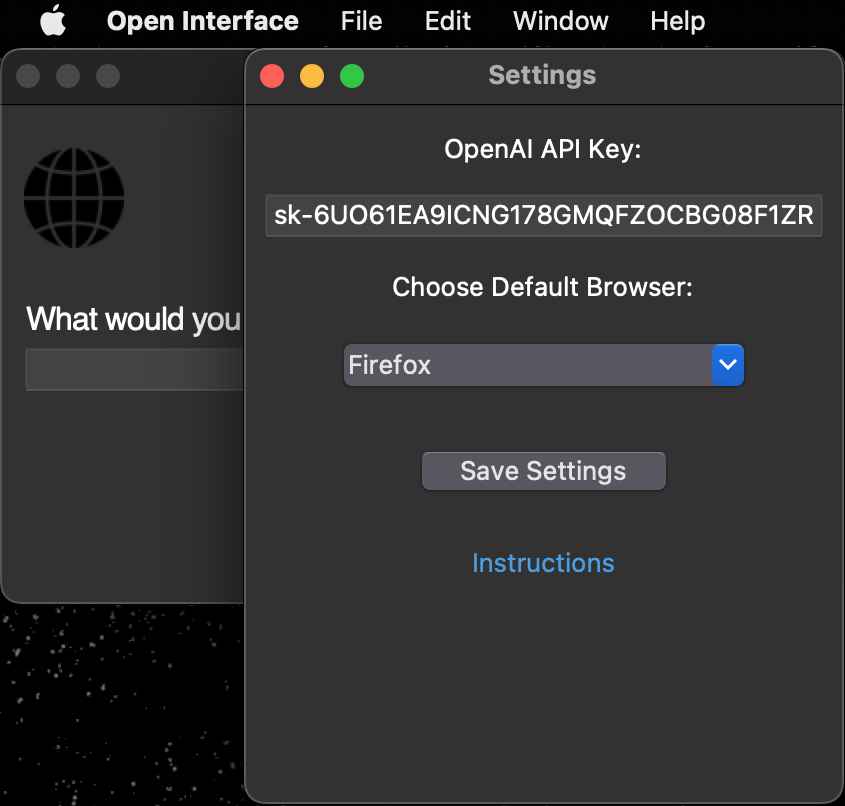

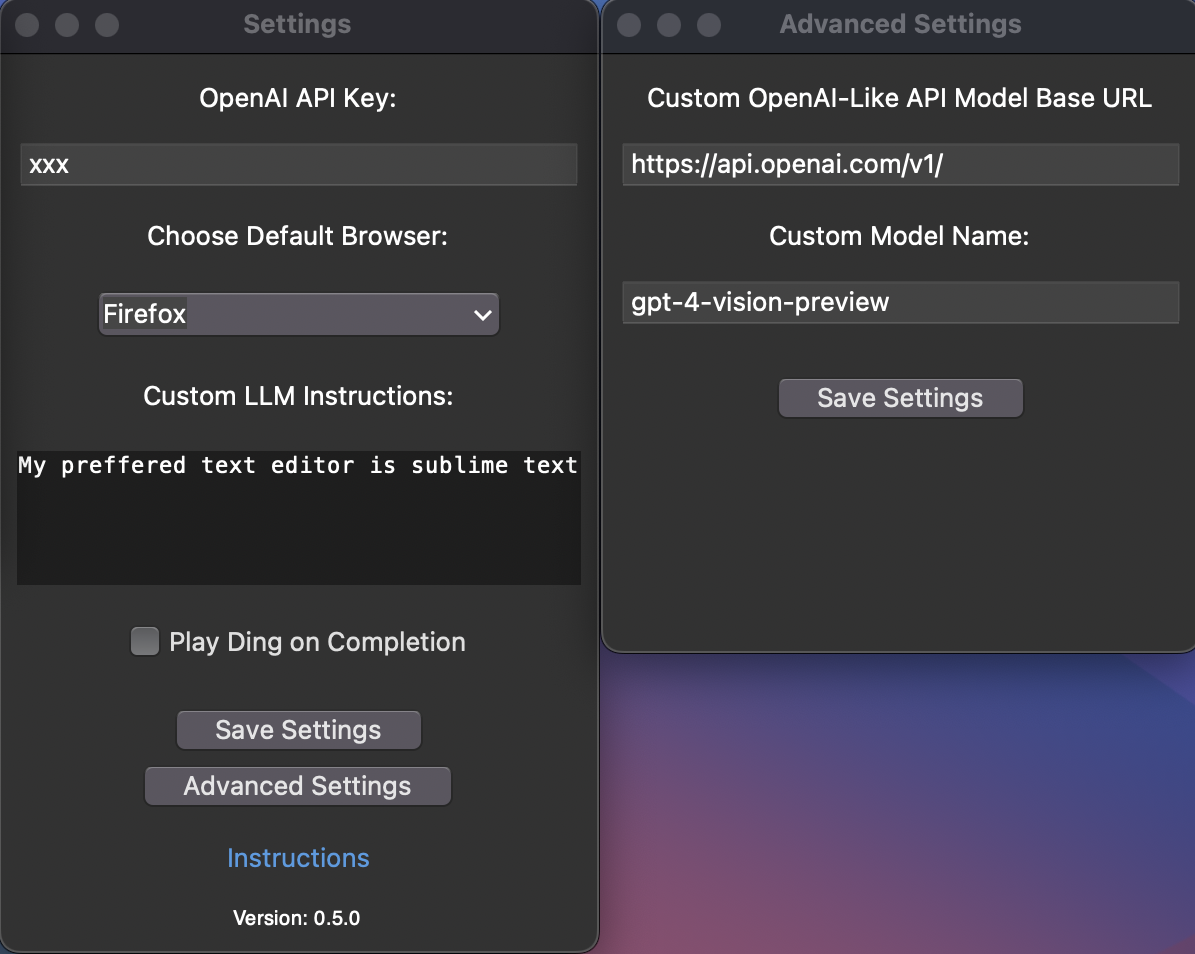

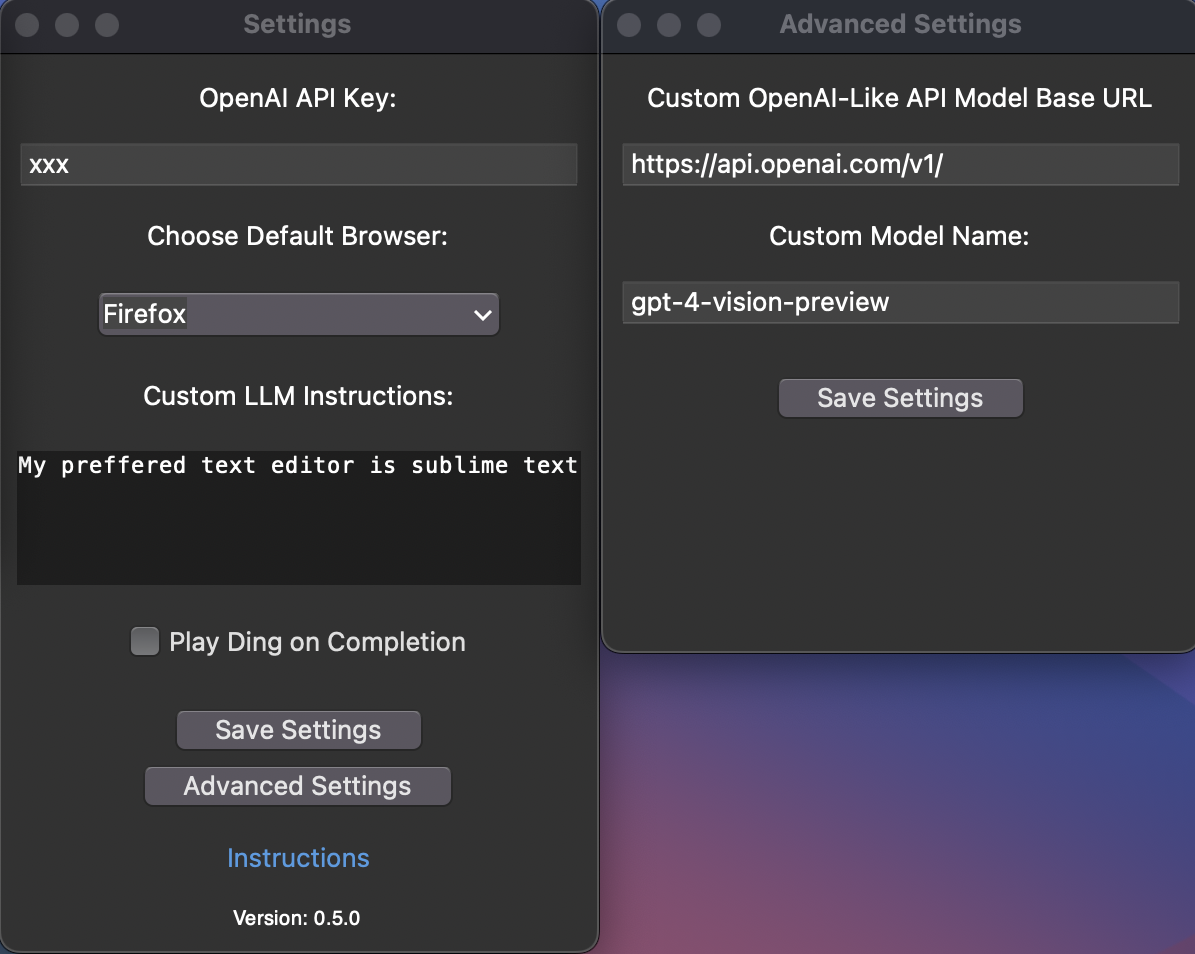

Set up the OpenAI API key

Optional: Set Up a Custom LLM

- Open Interface can use other LLMs that expose an OpenAI-style API (such as LLaVA) as a backend; configure them in the Advanced Settings window.

- Enter the custom base URL and model name in the Advanced Settings window, and the API key (if your backend needs one) in the Settings window.

- If your LLM does not support an OpenAI-style API, you can use a library like this one to convert it to one.

- You will need to restart the app after these changes.
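For reference, here is roughly what an "OpenAI-style" vision request to a custom backend looks like, written with only the Python standard library. This is a sketch under assumptions: it presumes the backend serves the OpenAI `/chat/completions` endpoint under the configured base URL and accepts images as base64 `image_url` data URLs. The helper `build_chat_request` and the example URL and model name are hypothetical, and Open Interface's own request code may differ.

```python
import base64
import json
import urllib.request


def build_chat_request(base_url: str, model: str, api_key: str,
                       goal: str, screenshot_png: bytes) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    The screenshot is attached as a base64 PNG data URL inside an
    `image_url` content part, next to the text of the user's goal.
    """
    image_b64 = base64.b64encode(screenshot_png).decode("ascii")
    payload = {
        "model": model,  # the model name entered in Advanced Settings
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": goal},
                {"type": "image_url",
                 "image_url": {"url": f"data:image/png;base64,{image_b64}"}},
            ],
        }],
    }
    return urllib.request.Request(
        # The custom base URL from Advanced Settings, e.g. ".../v1".
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # API key from Settings
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, pass the result to `urllib.request.urlopen`, e.g. `urllib.request.urlopen(build_chat_request("http://localhost:8000/v1", "llava", key, goal, png))`; the URL and model name there are placeholders for whatever your backend exposes.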

Stuff It’s Bad At (For Now)

- Accurate spatial reasoning, and hence clicking buttons precisely.

- Keeping track of itself in tabular contexts like Excel and Google Sheets, for the same reasons.

- Navigating complex, GUI-rich applications like Counter-Strike, Spotify, GarageBand, etc., due to their heavy reliance on cursor actions.

Future

(with better models trained on video walkthroughs like YouTube tutorials)

- "Create a couple of bass samples for me in GarageBand for my latest project."

- "Read this design document for a new feature, edit the code on GitHub, and submit it for review."

- "Find my friends' music taste from Spotify and create a party playlist for tonight's event."

- "Take the pictures from my Tahoe trip and make a White Lotus-style montage in iMovie."

Notes

- Cost: $0.05 to $0.20 per user request.

(This is expected to drop considerably once GPT-4V supports an assistant/stateful mode.)

- You can interrupt the app at any time by pressing the Stop button, or by dragging your cursor to any corner of the screen.

- With multiple monitors, Open Interface can only see your primary display. If the cursor or focus is on a secondary screen, it may keep retrying the same actions because it cannot see its progress (this happens especially on MacOS when launching Spotlight).
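The screen-corner interrupt boils down to a simple geometric check on the cursor position, repeated while the app is running. Below is a minimal sketch assuming a hypothetical `in_screen_corner` helper that receives the cursor position and the primary display's size in pixels. PyAutoGUI ships a comparable built-in fail-safe (`pyautogui.FAILSAFE`), but whether Open Interface relies on it is not stated here.

```python
def in_screen_corner(x: int, y: int, width: int, height: int, margin: int = 0) -> bool:
    """Return True if (x, y) lies within `margin` pixels of any screen corner.

    Coordinates are 0-based, so the bottom-right pixel is (width - 1, height - 1).
    """
    near_left = x <= margin
    near_right = x >= width - 1 - margin
    near_top = y <= margin
    near_bottom = y >= height - 1 - margin
    # A corner needs BOTH a horizontal edge and a vertical edge.
    return (near_left or near_right) and (near_top or near_bottom)


def should_interrupt(stop_pressed: bool, cursor: tuple[int, int],
                     screen: tuple[int, int]) -> bool:
    """Hypothetical per-step check: Stop button OR cursor parked in a corner."""
    return stop_pressed or in_screen_corner(*cursor, *screen)
```

Touching a single edge (for example, the left side of the screen halfway down) does not count; only the four corners do, which makes accidental interrupts less likely.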

System Diagram

+----------------------------------------------------+
| App                                                |
|                                                    |
|    +-------+                                       |
|    |  GUI  |                                       |
|    +-------+                                       |
|        ^                                           |
|        |                                           |
|        v                                           |
|  +-----------+  (Screenshot + Goal)  +-----------+ |
|  |           | --------------------> |           | |
|  |   Core    |                       |    LLM    | |
|  |           | <-------------------- |  (GPT-4V) | |
|  +-----------+    (Instructions)     +-----------+ |
|        |                                           |
|        v                                           |
| +-------------+                                    |
| | Interpreter |                                    |
| +-------------+                                    |
|        |                                           |
|        v                                           |
| +-------------+                                    |
| |  Executer   |                                    |
| +-------------+                                    |
+----------------------------------------------------+
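To make the Interpreter and Executer boxes concrete, here is a minimal Python sketch of the hand-off between them. It assumes a hypothetical JSON instruction format (`{"steps": [{"function": ..., "parameters": {...}}], "done": ...}`); the real prompt format and function names used by Open Interface may differ. The handlers below only log what they would do, where a real build would call a keyboard/mouse automation library.

```python
import json
from typing import Any, Callable

Step = tuple[str, dict[str, Any]]


def interpret(raw_reply: str) -> tuple[list[Step], bool]:
    """Interpreter: turn the LLM's raw JSON text into (function, parameters) pairs.

    Assumes the hypothetical reply format described above.
    """
    data = json.loads(raw_reply)
    steps = [(s["function"], s.get("parameters", {})) for s in data.get("steps", [])]
    return steps, bool(data.get("done", False))


class Executer:
    """Executer: dispatch each step to a whitelisted handler."""

    def __init__(self) -> None:
        self.log: list[str] = []
        # Hypothetical action names; these handlers only record the action.
        self.handlers: dict[str, Callable[..., None]] = {
            "click": lambda x, y: self.log.append(f"click {x},{y}"),
            "write": lambda text: self.log.append(f"write {text}"),
            "press": lambda keys: self.log.append("press " + "+".join(keys)),
        }

    def run(self, steps: list[Step]) -> None:
        for name, params in steps:
            handler = self.handlers.get(name)
            if handler is None:
                # Reject anything outside the whitelist instead of eval'ing it.
                raise ValueError(f"unsupported function: {name}")
            handler(**params)
```

Dispatching through an explicit table, rather than executing whatever text the model returns, keeps the LLM limited to a known set of input actions.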


Links

- Check out more of my projects at AmberSah.dev.

- Other demos and a press kit can be found in MEDIA.md.