⚡Chat with a GitHub Repo Using Claude's 200k Context Window Instead of RAG!⚡

Take advantage of Claude's 200k context window! Put all the examples and code into the context!

Sometimes we need a copilot rather than an agent!

Having trouble memorizing all the APIs in LlamaIndex or LangChain?

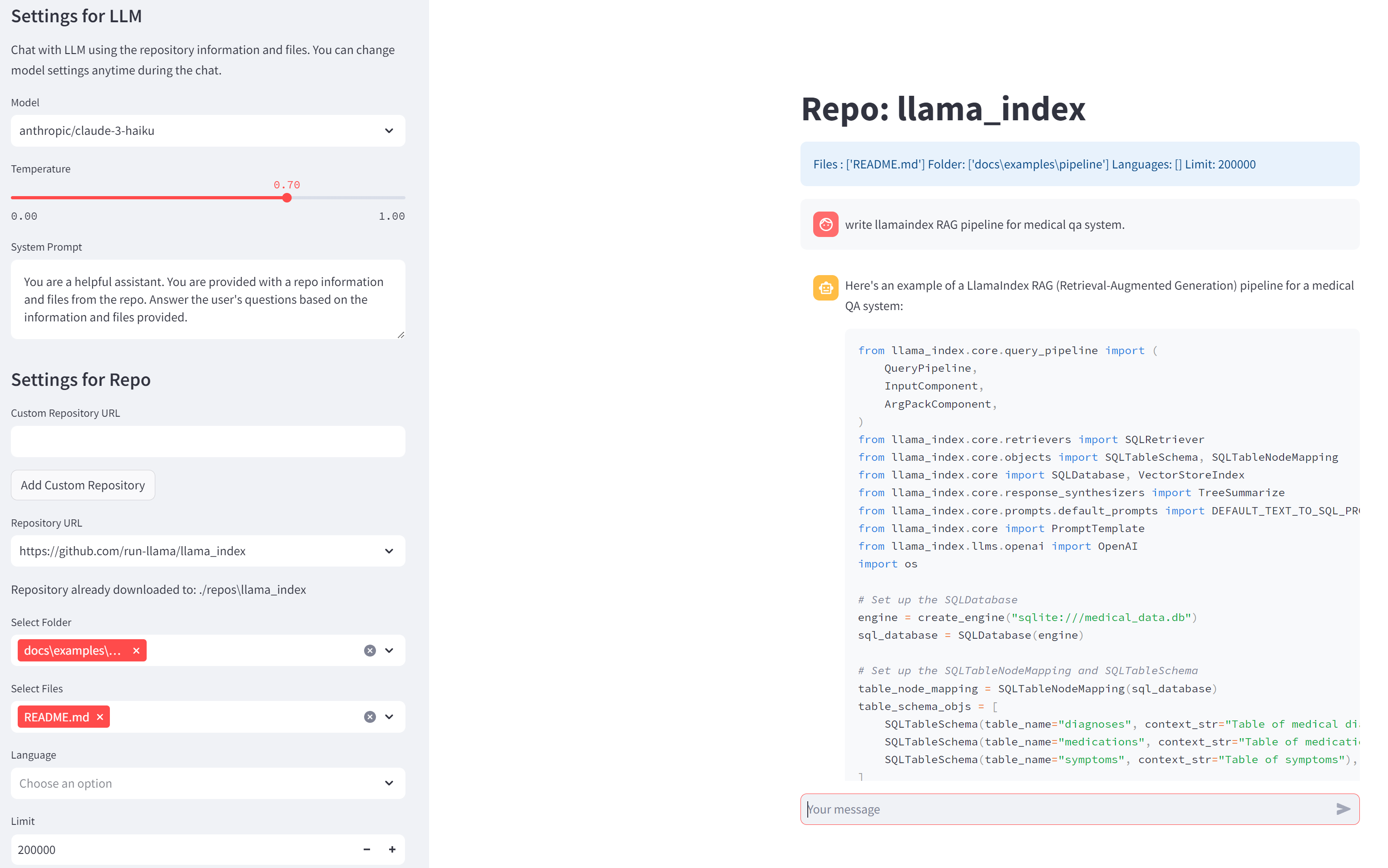

No worries: just include the component examples and documentation from the repo, and let Claude Opus, one of the strongest models available, use its 200k-token context window to write your agent for you!

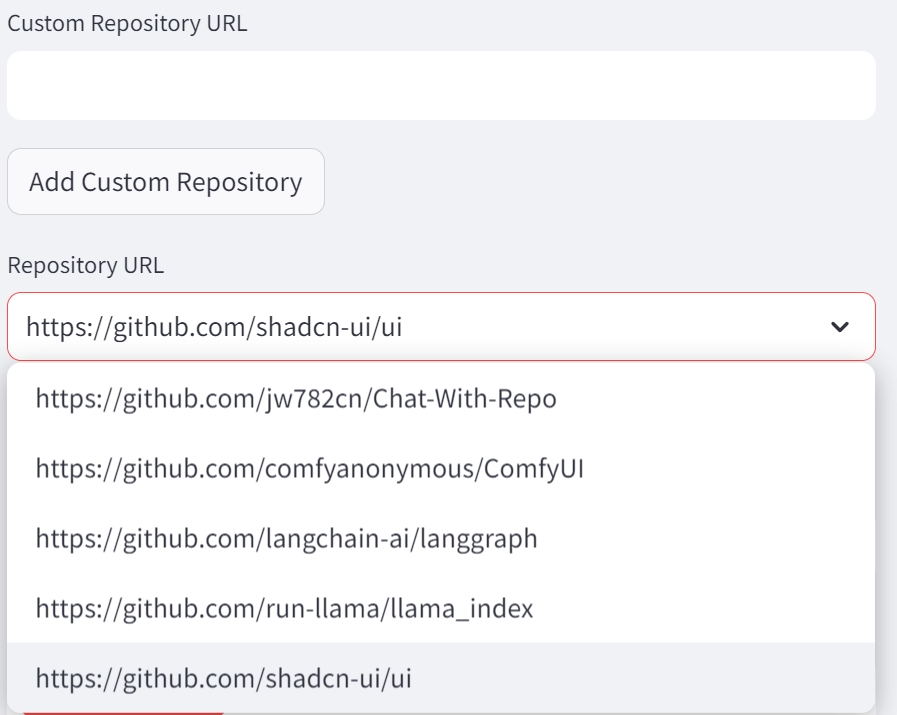

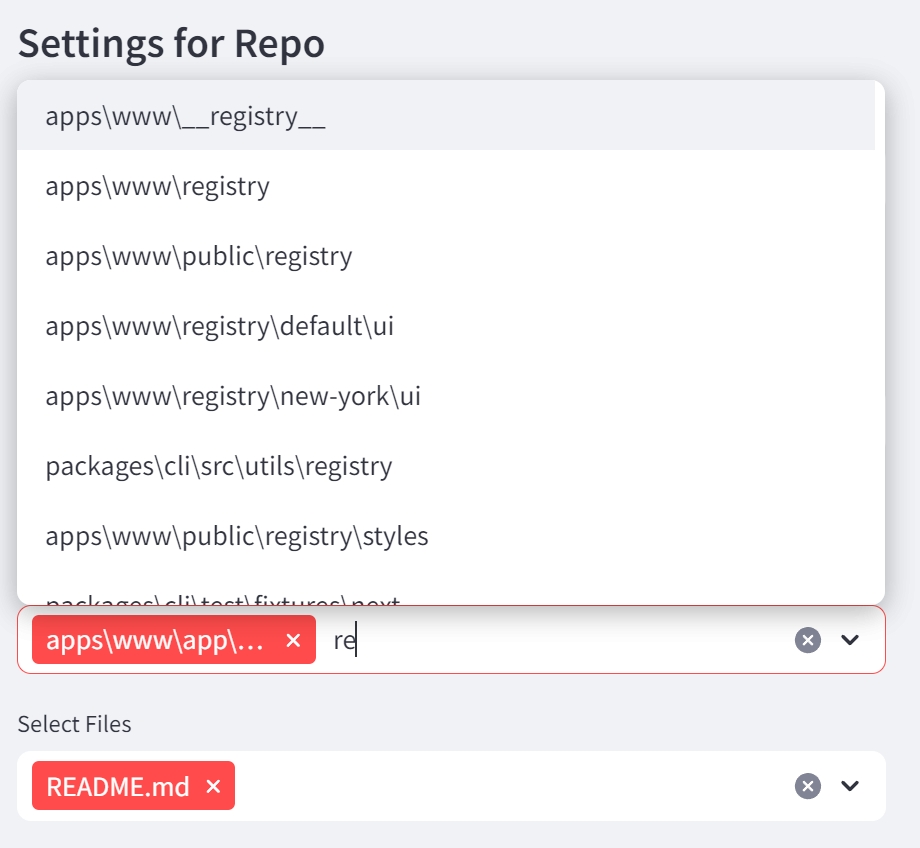

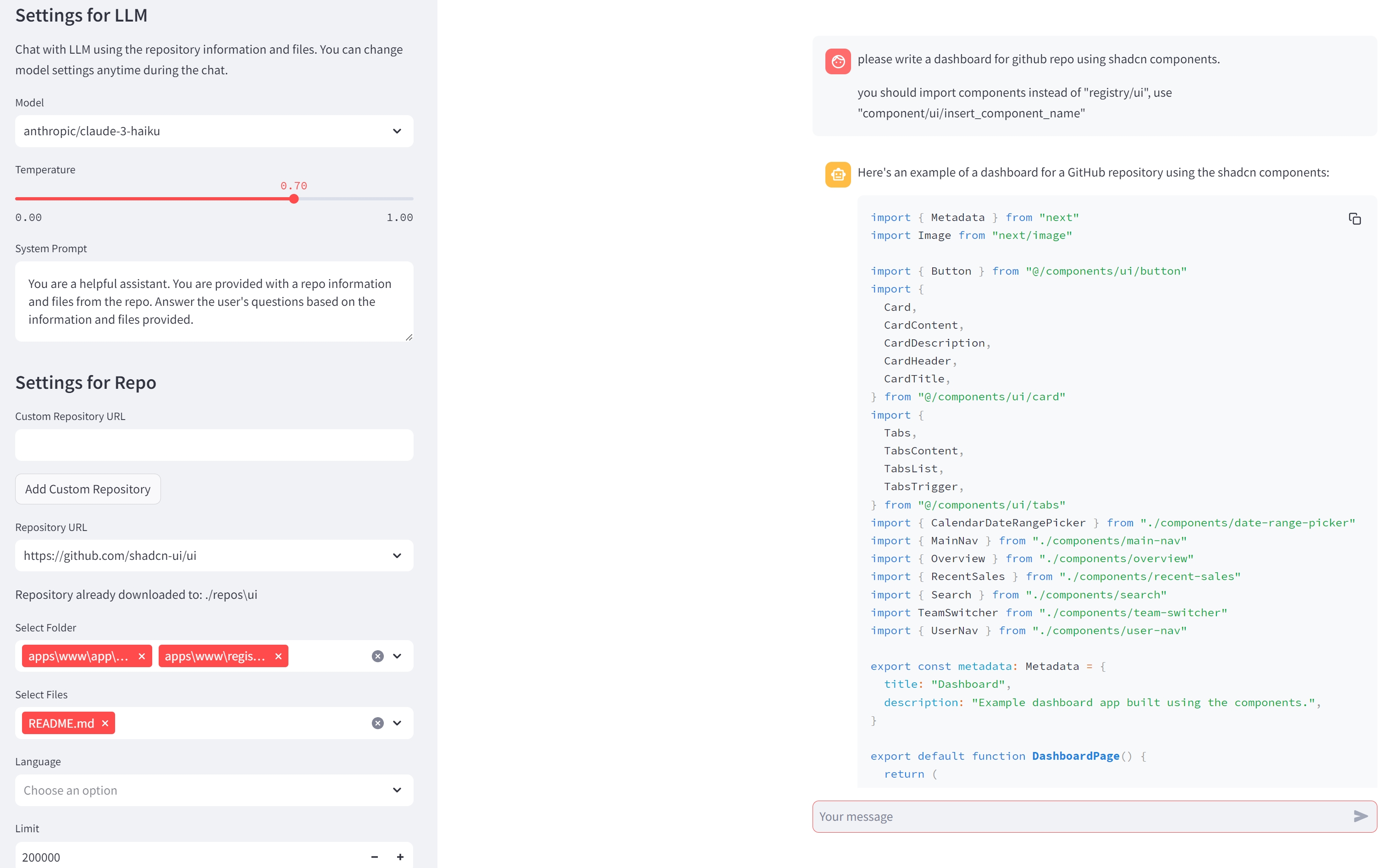

Download or clone your repo from GitHub, then just select the files you'd like. I've got you covered on constructing the prompt.
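One way to construct such a prompt is to concatenate the selected files, labeling each with its path so the model knows where every snippet came from. Here is a minimal sketch, assuming plain-text files and an XML-style `<file path=...>` wrapper; `build_prompt` and its template are hypothetical and not RepoChat-200k's actual prompt.

```python
from pathlib import Path


def build_prompt(repo_dir: str, selected_files: list[str], question: str) -> str:
    """Concatenate selected repo files into one long-context prompt.

    Hypothetical helper: the file wrapper and instructions are
    illustrative, not RepoChat-200k's actual prompt template.
    """
    root = Path(repo_dir)
    sections = []
    for rel_path in selected_files:
        # Read each file as text, ignoring bytes that aren't valid UTF-8.
        text = (root / rel_path).read_text(encoding="utf-8", errors="ignore")
        # Label every file with its relative path so the model can cite it.
        sections.append(f'<file path="{rel_path}">\n{text}\n</file>')
    context = "\n\n".join(sections)
    return (
        "Use the following repository files as reference.\n\n"
        f"{context}\n\n"
        f"Question: {question}"
    )
```

Keeping the files in the order you selected them, with the question last, puts your actual request right after all the reference material.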

I've seen many Chat-with-Repo projects, and they all share the same pain point:

Which files does this query need?

They use embedding search over a code database, but most of the time I already know which documents I'm referring to. So make your own choices each time you code.

Coding a frontend? Just select the components and examples.

Coding agents? Just select the LangGraph Jupyter notebooks.

Coding RAG? Just select the LlamaIndex Jupyter notebooks.

Select a LlamaIndex pipeline example to write a RAG graph.

Select the examples and the component definitions.
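Raw `.ipynb` files are JSON and can carry large cell outputs that eat into the token budget. A minimal sketch of flattening a notebook down to its cell sources, assuming nbformat 4 JSON (a top-level `cells` list whose `source` is a string or a list of lines); `notebook_to_text` and the `# %%` cell markers are hypothetical choices, not necessarily what the app does.

```python
import json


def notebook_to_text(ipynb_json: str) -> str:
    """Flatten a Jupyter notebook into plain text, keeping only cell sources.

    Hypothetical helper: dropping outputs (plots, logs) is an assumption
    about what is worth sending to the model.
    """
    nb = json.loads(ipynb_json)
    parts = []
    for cell in nb.get("cells", []):
        # nbformat 4 stores "source" as a list of lines or a single string.
        source = cell.get("source", "")
        if isinstance(source, list):
            source = "".join(source)
        # Mark each cell so the model can tell prose from code.
        if cell.get("cell_type") == "code":
            parts.append(f"# %%\n{source}")
        else:
            parts.append(f"# %% [markdown]\n{source}")
    return "\n\n".join(parts)
```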

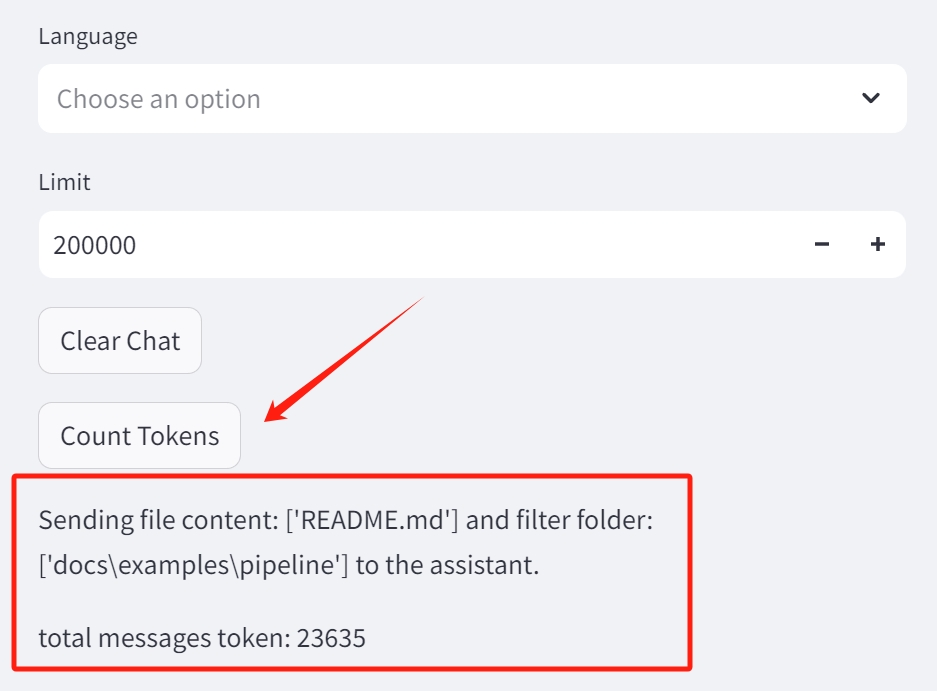

Use COUNT TOKENS in the sidebar to see how many tokens you will send!
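If you want a ballpark figure before sending, a common rule of thumb is roughly 4 characters per token for English text. The sketch below uses that heuristic to check a prompt against the 200k window while reserving room for the answer; the ratio, the 4,000-token reserve, and the function names are assumptions, and Claude's actual tokenizer will give different counts.

```python
CONTEXT_WINDOW = 200_000  # Claude's context window, in tokens
CHARS_PER_TOKEN = 4       # rough heuristic (an assumption), not Claude's tokenizer


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count."""
    # Ceiling division so any non-empty text counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_answer: int = 4_000) -> bool:
    """Check that the prompt still leaves room for the model's reply."""
    return estimate_tokens(prompt) + reserved_for_answer <= CONTEXT_WINDOW
```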

Currently only OpenRouter is supported. I'm planning to add more providers and refactor someday.
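OpenRouter exposes an OpenAI-compatible chat completions endpoint, so a request is just an authenticated JSON POST. A stdlib-only sketch, assuming the key comes from `OPENROUTER_API_KEY`; the model id `anthropic/claude-3-opus` is an example to check against OpenRouter's model list, and `build_openrouter_request` and `ask` are hypothetical helpers rather than the app's code.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_openrouter_request(api_key: str, prompt: str,
                             model: str = "anthropic/claude-3-opus") -> urllib.request.Request:
    """Build (but don't send) an OpenAI-style chat completion request."""
    body = {
        "model": model,  # example model id; an assumption
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    # Long-context prompts can take a while, so allow a generous timeout.
    with urllib.request.urlopen(build_openrouter_request(api_key, prompt), timeout=600) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

For example, `ask(os.environ["OPENROUTER_API_KEY"], prompt)` returns the model's answer as a string.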

Environment setup: run `pip install -r requirements.txt` to set up the environment.

Create a .env file: create a `.env` file in the root directory of the project and add your OpenRouter API key (recommended):

`OPENROUTER_API_KEY=your_openrouter_api_key_here`

I recommend OpenRouter because it offers models from many providers, including Anthropic and OpenAI, through a single API key!
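Python apps typically load this file with python-dotenv's `load_dotenv()`. The stdlib sketch below shows roughly what that does, assuming simple unquoted `KEY=VALUE` lines; `load_env_file` and `get_api_key` are hypothetical helpers, and like `load_dotenv()` by default, the sketch does not overwrite variables already set in the environment.

```python
import os


def load_env_file(path: str = ".env") -> None:
    """Minimal stand-in for python-dotenv's load_dotenv(): read KEY=VALUE lines.

    Simplified: no quoting, escaping, or variable expansion.
    """
    try:
        with open(path, encoding="utf-8") as f:
            lines = f.readlines()
    except FileNotFoundError:
        return  # a missing .env file is not an error
    for line in lines:
        line = line.strip()
        # Skip blank lines, comments, and lines without "=".
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Keep any value already present in the real environment.
        os.environ.setdefault(key.strip(), value.strip())


def get_api_key(name: str = "OPENROUTER_API_KEY") -> str:
    """Return the named key, failing loudly if it was never configured."""
    key = os.environ.get(name)
    if not key:
        raise RuntimeError(f"{name} is not set; add it to your .env file")
    return key
```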

If you want to use OpenAI GPT models, add your OpenAI API key as well:

`OPENAI_API_KEY=your_openai_api_key_here`

Run the `app.py` script using Streamlit:

`streamlit run app.py`

If you encounter issues with a repo, you can always delete its directory in `./repos` and download it again.

The application's behavior can be customized through configuration options, which can be adjusted in the sidebar of the Streamlit application.

If you'd like to contribute to the RepoChat-200k project, please feel free to submit issues or pull requests on the GitHub repository.

This project is licensed under the MIT License.