An out-of-the-box AI intelligent assistant API


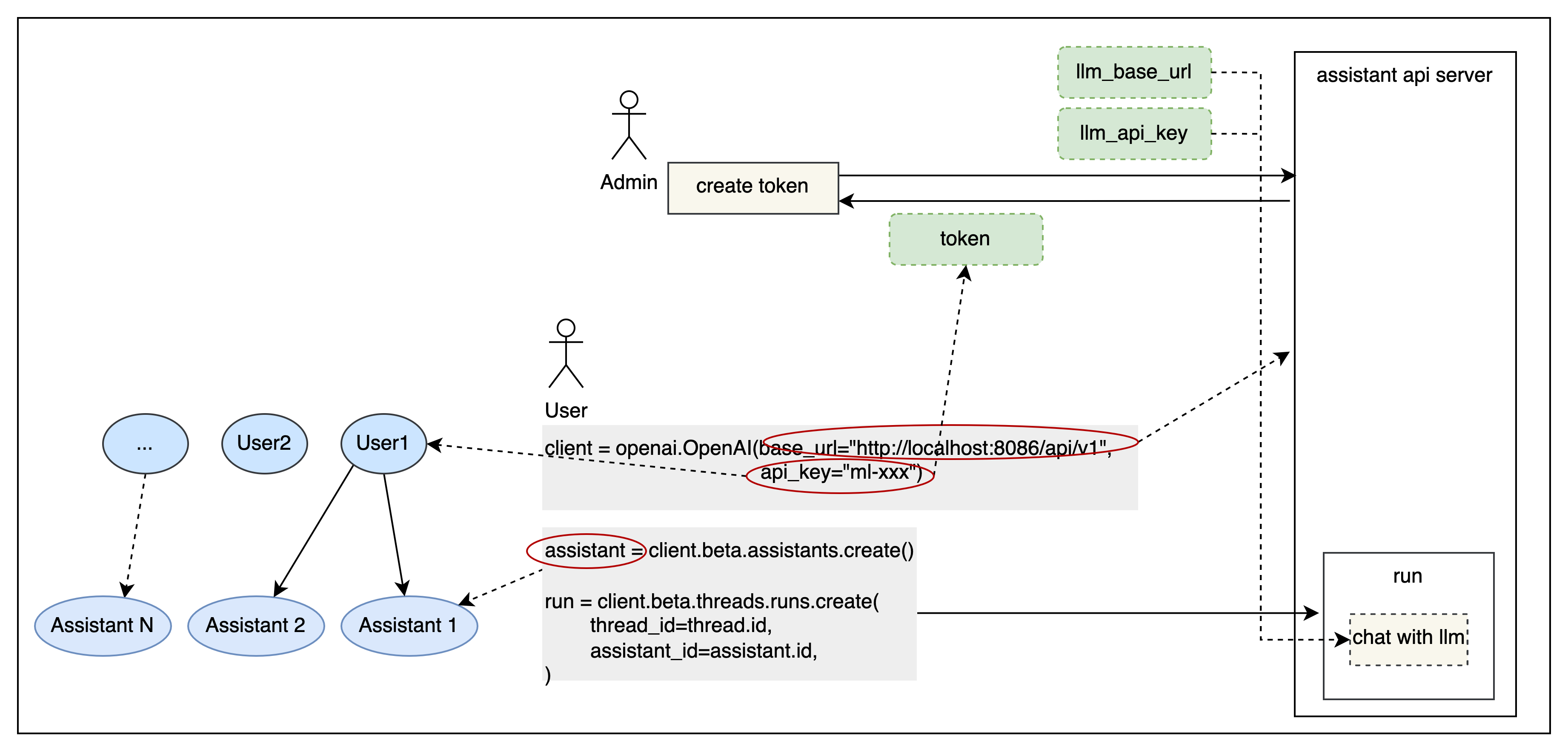

Open Assistant API is an open-source, self-hosted AI assistant API that is compatible with the official OpenAI interface. It can be used directly with the official OpenAI client to build LLM applications.

It supports One API, enabling integration with a wider range of commercial and private models.

It supports the R2R RAG engine.

Below is an example that uses the official OpenAI Python library, openai:

```python
import openai

client = openai.OpenAI(
    base_url="http://127.0.0.1:8086/api/v1",
    api_key="xxx",  # placeholder; use your user token when APP_AUTH_ENABLE is on
)

assistant = client.beta.assistants.create(
    name="demo",
    instructions="You are a helpful assistant.",
    model="gpt-4-1106-preview",
)
```

Comparison with the OpenAI Assistant API:

| Feature | Open Assistant API | OpenAI Assistant API |
|---|---|---|
| Ecosystem Strategy | Open Source | Closed Source |
| RAG Engine | Supported (R2R) | Supported |
| Internet Search | Supported | Not Supported |
| Custom Functions | Supported | Supported |
| Built-in Tools | Extendable | Not Extendable |
| Code Interpreter | Under Development | Supported |
| Multimodal | Supported | Supported |
| LLM Support | Supports more LLMs | GPT only |
| Message Streaming Output | Supported | Supported |
| Local Deployment | Supported | Not Supported |
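The snippet above only creates an assistant. A typical Assistants API round trip continues with a thread, a user message, and a run that is polled until it stops. The sketch below assumes the server implements the standard OpenAI beta threads, messages and runs endpoints; the helper name ask_assistant, the poll interval and the timeout are hypothetical choices, not part of this project.

```python
import time
from typing import Any

# Run statuses at which polling stops (as defined by the OpenAI Assistants API).
STOP_STATUSES = {"completed", "failed", "cancelled", "expired", "incomplete", "requires_action"}


def ask_assistant(client: Any, assistant_id: str, question: str,
                  poll_interval: float = 1.0, timeout: float = 120.0) -> str:
    """Hypothetical helper: ask one question in a new thread and return the reply text."""
    # A thread holds the conversation; add the user's message to it.
    thread = client.beta.threads.create()
    client.beta.threads.messages.create(thread_id=thread.id, role="user", content=question)

    # Start a run so the assistant processes the thread.
    run = client.beta.threads.runs.create(thread_id=thread.id, assistant_id=assistant_id)

    # Poll until the run reaches a stop status, or give up after `timeout` seconds.
    deadline = time.monotonic() + timeout
    while run.status not in STOP_STATUSES:
        if time.monotonic() > deadline:
            raise TimeoutError(f"run {run.id} still {run.status!r} after {timeout}s")
        time.sleep(poll_interval)
        run = client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)

    if run.status != "completed":
        raise RuntimeError(f"run {run.id} ended with status {run.status!r}")

    # Messages are listed newest first by default; return the latest assistant reply.
    for message in client.beta.threads.messages.list(thread_id=thread.id).data:
        if message.role == "assistant":
            return "".join(part.text.value for part in message.content if part.type == "text")
    return ""
```

With the client and assistant from the example above, print(ask_assistant(client, assistant.id, "Hello!")) should print the reply. Recent versions of the openai package also offer client.beta.threads.runs.create_and_poll, which wraps the polling loop for you.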

The easiest way to start the Open Assistant API is with the provided docker-compose.yml file. Make sure Docker and Docker Compose are installed on your machine first.

In the project root directory, open docker-compose.yml and fill in the OpenAI API key and, optionally, the Bing search key:

```
# OpenAI API key (a One API key also works)
OPENAI_API_KEY=<openai_api_key>

# Bing search key (optional)
BING_SUBSCRIPTION_KEY=<bing_subscription_key>
```

We recommend configuring the R2R RAG engine in place of the default RAG implementation for better RAG capabilities. You can learn about and set up R2R through the R2R GitHub repository.
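The RAG backend is selected by FILE_SERVICE_MODULE, whose value is a dotted Python path to a service class, such as app.services.file.impl.r2r_file.R2RFileService. We have not confirmed how the server loads this value; a common pattern, and the one assumed in this sketch, is importlib.import_module followed by getattr. It shows why the value must be an importable module path ending in a class name (load_class is a hypothetical helper).

```python
import importlib
from typing import Any


def load_class(dotted_path: str) -> Any:
    """Resolve 'package.module.ClassName' to the class object (assumed loading pattern)."""
    # Split at the last dot: everything before is the module, the rest is the class name.
    module_path, _, class_name = dotted_path.rpartition(".")
    if not module_path:
        raise ValueError(f"expected 'module.ClassName', got {dotted_path!r}")
    module = importlib.import_module(module_path)
    try:
        return getattr(module, class_name)
    except AttributeError as exc:
        raise ImportError(f"{module_path!r} has no attribute {class_name!r}") from exc
```

A typo in either the module path or the class name then fails at startup with a clear ImportError, rather than later when the first file is uploaded.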

```
# RAG config
# FILE_SERVICE_MODULE=app.services.file.impl.oss_file.OSSFileService
FILE_SERVICE_MODULE=app.services.file.impl.r2r_file.R2RFileService
R2R_BASE_URL=http://<r2r_api_address>
R2R_USERNAME=<r2r_username>
R2R_PASSWORD=<r2r_password>
```

Then start the services:

```sh
docker compose up -d
```

API base URL: http://127.0.0.1:8086/api/v1

API documentation: http://127.0.0.1:8086/docs
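After docker compose up -d, the containers may need a moment before the API answers. A minimal readiness check using only the standard library could poll the documentation URL until the server responds; wait_until_ready and its timeout values are hypothetical, and treating any non-5xx response as "up" is an assumption of this sketch.

```python
import time
import urllib.error
import urllib.request


def wait_until_ready(url: str, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll url until the server answers with a non-5xx status or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True  # the request succeeded, so the server is up
        except urllib.error.HTTPError as err:
            if err.code < 500:
                return True  # e.g. 401/404 still proves the server is answering
        except OSError:
            pass  # connection refused, reset or timed out: not ready yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, wait_until_ready("http://127.0.0.1:8086/docs") returns True once the server is reachable, and False if it is still down after a minute.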

This example creates and runs an AI assistant using the official OpenAI client library. For other usage, such as streaming output and tools (web_search, retrieval, function), see the corresponding code in the examples directory.
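The examples directory is the reference for streaming. As a rough sketch, assuming the server emits the same thread.message.delta events as OpenAI when a run is created with stream=True, the text deltas of a streamed run can be collected like this (collect_streamed_text and on_text are hypothetical names):

```python
from typing import Any, Callable, Iterable, Optional


def collect_streamed_text(events: Iterable[Any],
                          on_text: Optional[Callable[[str], None]] = None) -> str:
    """Join the text deltas of a streamed run; optionally forward each chunk as it arrives."""
    chunks = []
    for event in events:
        # Only message deltas carry incremental text; skip run and step lifecycle events.
        if event.event != "thread.message.delta":
            continue
        for part in event.data.delta.content or []:
            if part.type == "text" and part.text is not None and part.text.value:
                chunks.append(part.text.value)
                if on_text is not None:
                    on_text(part.text.value)
    return "".join(chunks)
```

With the openai package, the events would come from client.beta.threads.runs.create(thread_id=thread.id, assistant_id=assistant.id, stream=True), and passing on_text=lambda t: print(t, end="", flush=True) prints the reply as it is generated.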

Before running, install the Python openai library with pip install openai:

```sh
pip install openai
export PYTHONPATH=$(pwd)
python examples/run_assistant.py
```

Simple token-based user isolation is provided to meet SaaS deployment requirements. Enable it by setting APP_AUTH_ENABLE.

Include Authorization: Bearer *** in the request header for authentication. The admin token is configured via APP_AUTH_ADMIN_TOKEN and defaults to "admin".

Based on the OpenAPI/Swagger specification, various tools can be integrated into the assistant, extending its ability to connect with the outside world.
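With token authentication enabled through APP_AUTH_ENABLE, every request must carry the Bearer header. The official OpenAI client already sends its api_key as Authorization: Bearer <api_key>, so passing the user token as api_key should be enough there. For raw HTTP calls, a minimal standard-library sketch follows; authed_request is a hypothetical helper, and the assistants path assumes the server mirrors OpenAI's list-assistants endpoint.

```python
import urllib.request

# Default base URL from the docker-compose setup.
BASE_URL = "http://127.0.0.1:8086/api/v1"


def authed_request(path: str, token: str, base_url: str = BASE_URL,
                   method: str = "GET") -> urllib.request.Request:
    """Build (without sending) a request that carries the token as a Bearer credential."""
    url = f"{base_url.rstrip('/')}/{path.lstrip('/')}"
    request = urllib.request.Request(url, method=method)
    request.add_header("Authorization", f"Bearer {token}")
    return request
```

Sending it is then a matter of urllib.request.urlopen(authed_request("assistants", token)).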

Join the Slack channel to see new releases, discuss issues, and participate in community interactions.

Join the Discord channel to interact with other community members.

Join the WeChat group:

We mainly referred to and relied on the following projects:

Please read our contribution document to learn how to contribute.

This repository is licensed under the MIT License. For more information, see the LICENSE file.