Pico MLX Server is the easiest way to get started with Apple's MLX AI framework

Pico MLX Server provides a GUI for MLX Server. MLX Server provides an API for local MLX models that conforms to the OpenAI API, so you can use most existing OpenAI chat clients with Pico MLX Server.

See MLX Community on HuggingFace

To install Pico MLX Server, build the source using Xcode, or download the notarized executable directly from GitHub.

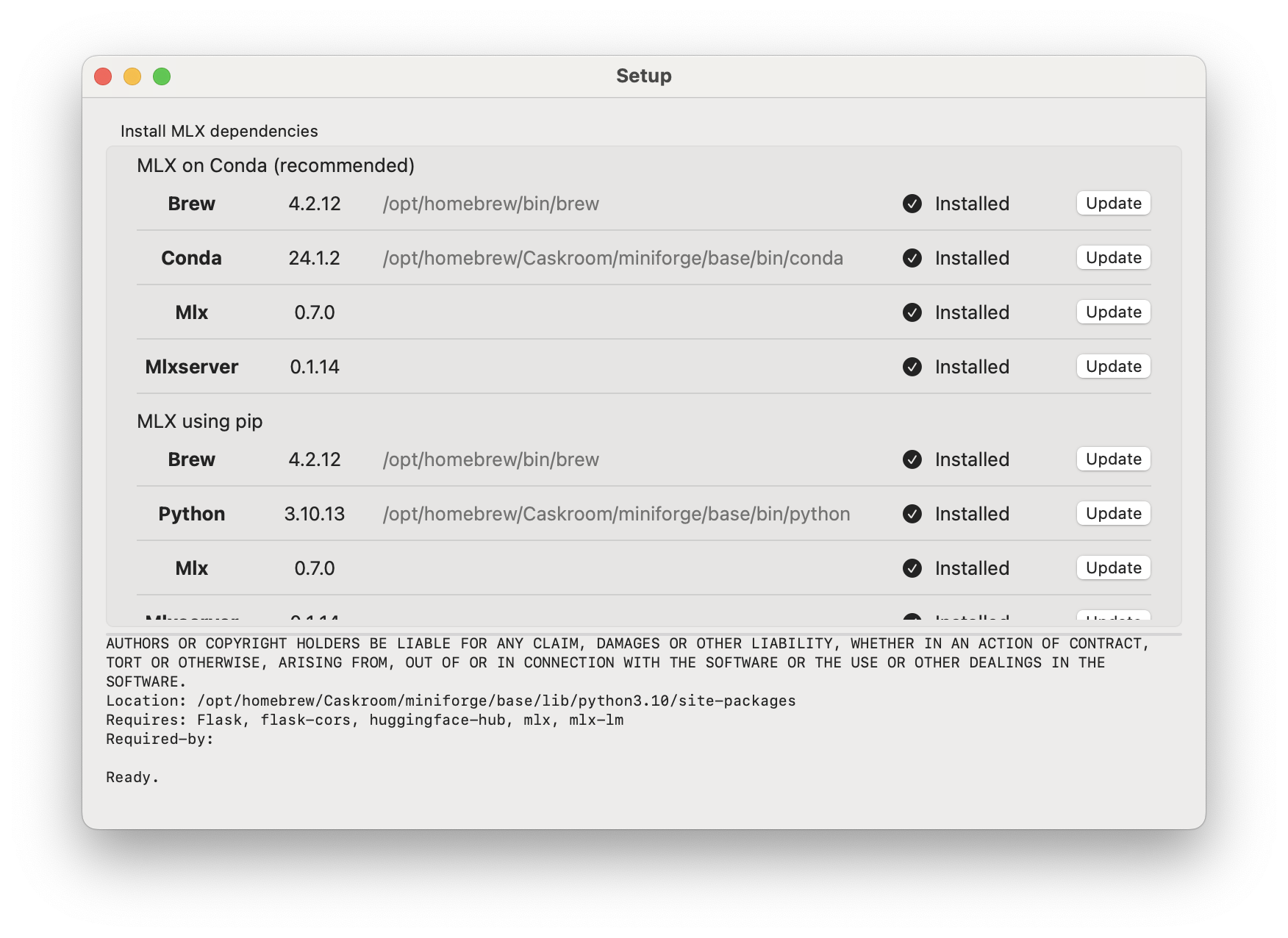

To set up Pico MLX Server, open the app and

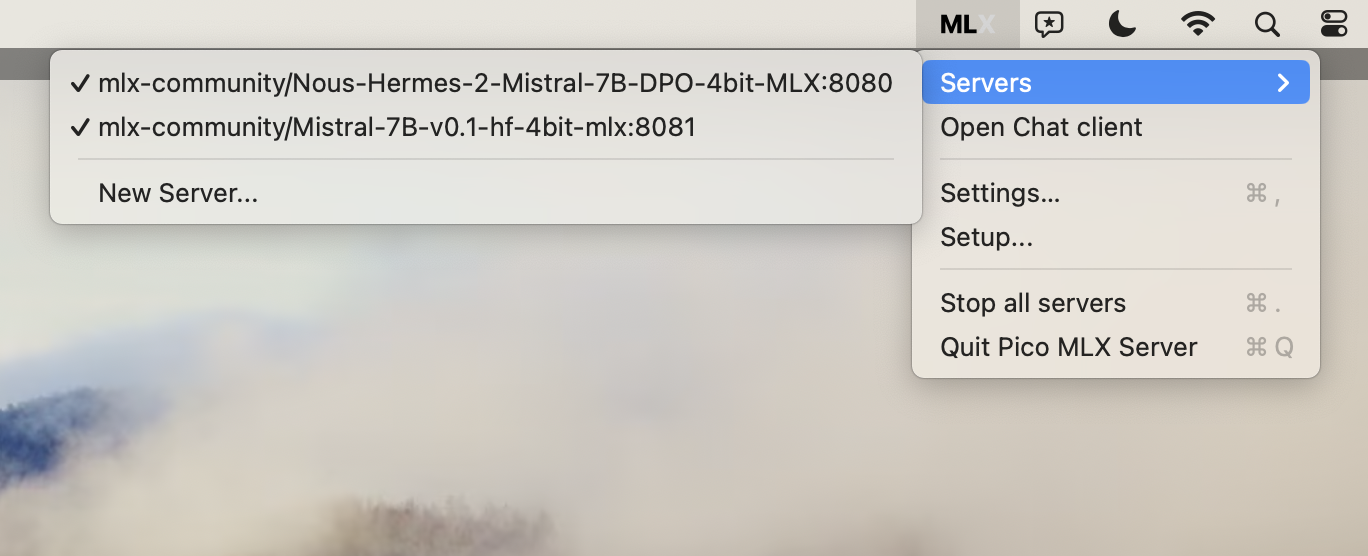

MLX -> Setup...

Settings

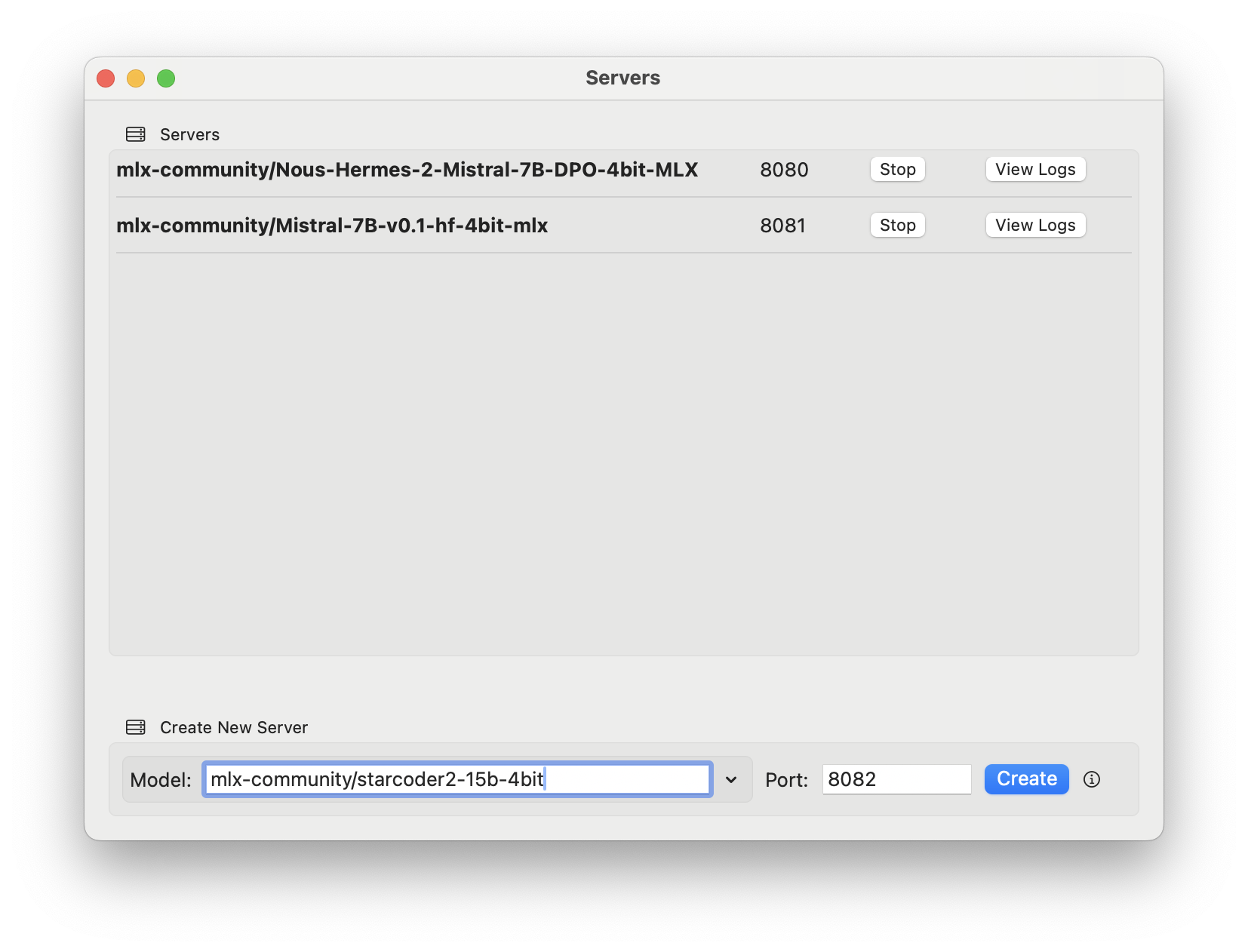

MLX -> Servers -> New Server...

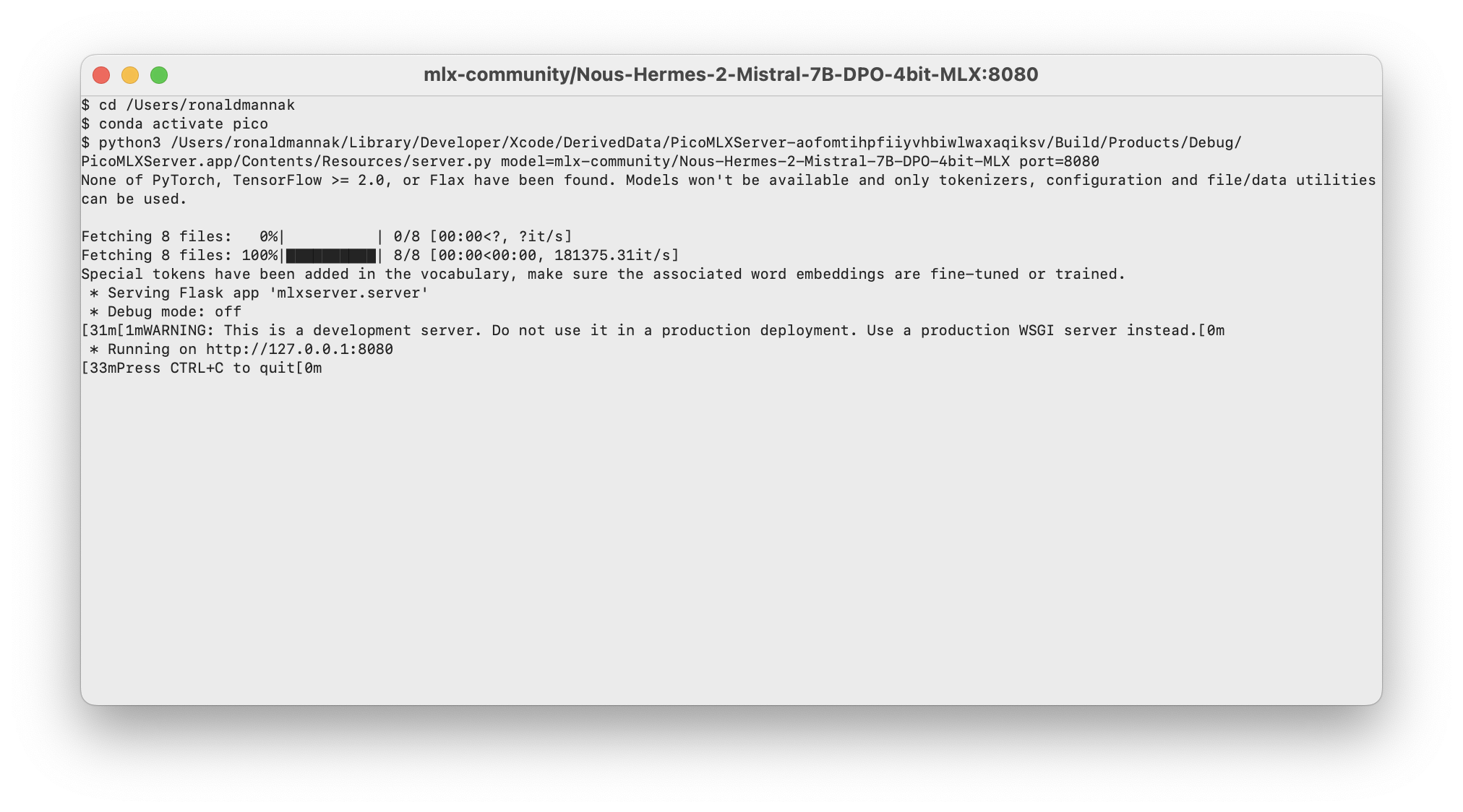

Click Create to create the default server mlx-community/Nous-Hermes-2-Mistral-7B-DPO-4bit-MLX on port 8080.

To use a different model, click the v button or type in a model manually from the MLX Community on HuggingFace (make sure to use the mlx-community/ prefix).

Click the View Logs button to open a window with the server's real-time logs.
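Because each server listens on a local TCP port (8080 in the default setup), it can be useful to check whether that port is already taken before creating a server. The following is a minimal sketch using only Python's standard library; the helper name `port_in_use` is hypothetical and not part of Pico MLX Server or MLX Server.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # 8080 is the default port used in this README; adjust as needed.
    print("port 8080 in use:", port_in_use(8080))
```

This only reports whether something is listening on the port; it does not identify the process that owns it.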

Point any OpenAI API compatible AI assistant to http://127.0.0.1:8080 (or any other port you used in Pico MLX Server). (Instructions for Pico AI Assistant coming soon)
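As a rough illustration of what pointing a client at the local server involves, the sketch below builds a request against OpenAI's POST /v1/completions endpoint, which this README names as the supported API. It uses only Python's standard library. The function names are hypothetical, and the request fields (`model`, `prompt`, `max_tokens`) are taken from OpenAI's completions API; whether MLX Server honors every field is an assumption.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # use the port you chose in Pico MLX Server
MODEL = "mlx-community/Nous-Hermes-2-Mistral-7B-DPO-4bit-MLX"  # default model from this README


def build_completion_request(prompt: str, max_tokens: int = 100,
                             base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST /v1/completions request."""
    # Field names follow OpenAI's completions API (assumed to be accepted).
    payload = {"model": MODEL, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=f"{base_url}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str) -> dict:
    """Send the request to a running server and return the parsed JSON body."""
    with urllib.request.urlopen(build_completion_request(prompt), timeout=60) as resp:
        return json.load(resp)
```

Calling `complete("write me a poem about the ocean")` requires a server that is already running. If the response follows OpenAI's completion format, the generated text would be found under `choices[0]["text"]`, but that has not been verified here.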

Curl:

curl -X GET 'http://127.0.0.1:8080/generate?prompt=write%20me%20a%20poem%20about%20the%20ocean&stream=true'
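Percent-encoding a prompt by hand is easy to get wrong, so it may be simpler to let a library build the query string. The sketch below constructs a /generate URL of the same shape as the curl command above, using Python's standard library. The helper name `build_generate_url` is hypothetical; the `prompt` and `stream` parameters are the ones shown in the curl example.

```python
from urllib.parse import quote, urlencode


def build_generate_url(prompt: str, stream: bool = True,
                       base_url: str = "http://127.0.0.1:8080") -> str:
    """Return a /generate URL with the prompt percent-encoded."""
    query = urlencode(
        {"prompt": prompt, "stream": "true" if stream else "false"},
        quote_via=quote,  # encode spaces as %20 rather than '+'
    )
    return f"{base_url}/generate?{query}"


if __name__ == "__main__":
    print(build_generate_url("write me a poem about the ocean"))
```

The resulting string can be passed to curl or to any HTTP client.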

Pico MLX Server uses OpenAI's POST /v1/completions API. See https://platform.openai.com/docs/api-reference/completions/create for more information.

Use lsof -i:8080 in the terminal to find the PID of the running server.

Known issue: in the New Server window and the Servers menu, the state of servers isn't updated.

Pico MLX Server is part of a bundle of open source Swift tools for AI engineers. Looking for a server-side Swift OpenAI proxy to protect your OpenAI keys? Check out Swift OpenAI Proxy.

Pico MLX Server, Swift OpenAI Proxy, and Pico AI Assistant were created by Ronald Mannak with help from Ray Fernando.

MLX Server was created by Mustafa Aljadery & Siddharth Sharma

Code used from MLX Swift Chat and Swift Chat