Это репо для нашего опроса TMLR, объединяющего перспективы НЛП и разработки программного обеспечения: опрос по языковым моделям для кода - всесторонний обзор исследований LLM для кода. Работы в каждой категории упорядочены хронологически. Если у вас есть базовое понимание машинного обучения, но новичок в NLP, мы также предоставляем список рекомендуемых показаний в разделе 9.

[2024/11/28] Показанные статьи:

Оптимизация предпочтений для рассуждений с псевдо обратной связью из Технологического университета Наняна.

ScribeAgent: к специализированным веб-агентам с использованием данных рабочего процесса в масштабе производства от Scribe.

Программирование, управляемое планированием: большой рабочий процесс программирования с большим языком из Мельбурнского университета.

Цель перевода кода на уровне репозитория нацелен на ржавчину из Университета Sun Yat-Sen.

Использование предыдущего опыта: расширяемая вспомогательная база знаний для текста до SQL от Университета науки и техники Китая.

Codexembed: универсальное семейство модели встраиваемого для многолигенового и многозадачного кода поиска из исследований Salesforce AI.

PROSEC: Укрепляющий код LLM с упреждающим выравниванием безопасности в Университете Пердью.

[2024/10/22] Мы собрали 70 документов с сентября и октября 2024 года в одной статье WeChat.

[2024/09/06] Наш опрос был принят для публикации с помощью транзакций по исследованиям машинного обучения (TMLR).

[2024/09/14] Мы собрали 57 документов с августа 2024 года (в том числе 48, представленных в ACL 2024) в одной статье WeChat.

Если вы обнаружите, что документ отсутствует в этом репозитории, неуместно в категории или не имея ссылки на информацию в журнале/конференции, пожалуйста, не стесняйтесь создавать проблему. Если вы обнаружите, что это репо является полезным, пожалуйста, процитируйте наш опрос:

@article{zhang2024unifying,

title={Unifying the Perspectives of {NLP} and Software Engineering: A Survey on Language Models for Code},

author={Ziyin Zhang and Chaoyu Chen and Bingchang Liu and Cong Liao and Zi Gong and Hang Yu and Jianguo Li and Rui Wang},

journal={Transactions on Machine Learning Research},

issn={2835-8856},

year={2024},

url={https://openreview.net/forum?id=hkNnGqZnpa},

note={}

}

Опросы

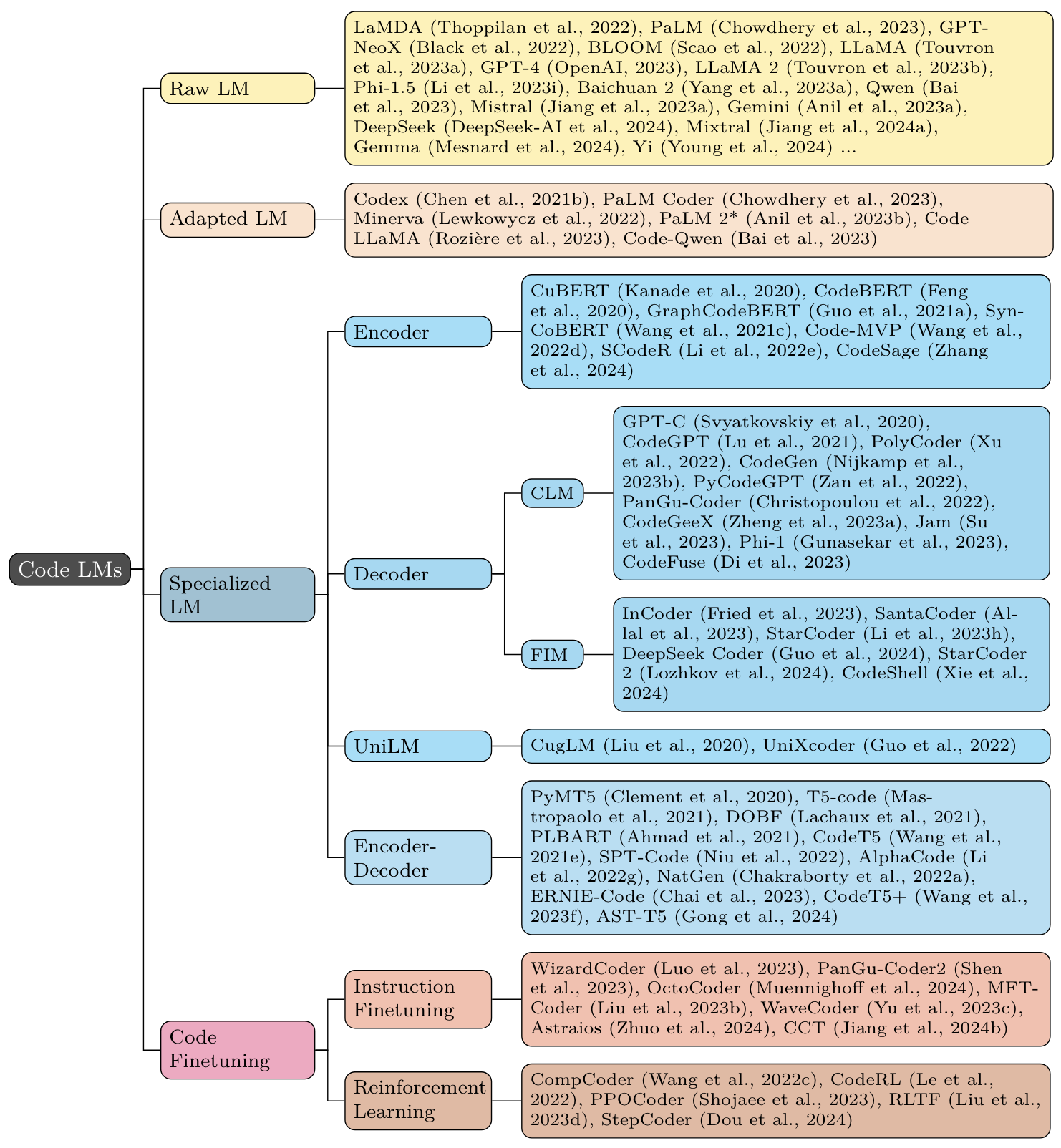

Модели

2.1 Base LLM и стратегии предварительной подготовки

2.2 Существующий LLM адаптирован к коду

2.3 Общая предварительная подготовка кода

2.4 (инструкция) тонкая настройка кода

2.5 Подкрепление обучения на коде

Когда кодирование встречается

3.1 Кодирование для рассуждения

3.2 Моделирование кода

3.3 Кодовые агенты

3.4 Интерактивное кодирование

3.5 Фронтальная навигация

Код LLM для языков с низким ресурсом, низким уровнем и доменом

Методы/модели для нижестоящих задач

Программирование

Тестирование и развертывание

DevOps

Требование

Анализ кода, сгенерированного AI,

Человеческое взаимодействие

Наборы данных

8.1 Предварительная подготовка

8.2 тесты

Рекомендуемые показания

Цитирование

Звездная история

Присоединяйтесь к нам

Мы перечисляем несколько недавних опросов по аналогичным темам. Хотя они все о языковых моделях для кода, 1-2 фокусируются на стороне NLP; 3-6 Фокус на стороне SE; 7-11 выпускаются после нашего.

«Модели крупных языков встречаются с NL2Code: опрос» [2022-12] [ACL 2023] [Paper]

«Опрос о предварительно проведенных языковых моделях для интеллекта нейронного кода» [2022-12] [Paper]

«Эмпирическое сравнение предварительно обученных моделей исходного кода» [2023-02] [ICSE 2023] [Paper]

«Большие языковые модели для разработки программного обеспечения: систематический обзор литературы» [2023-08] [Paper]

«На пути к пониманию больших языковых моделей в задачах разработки программного обеспечения» [2023-08] [Paper]

«Подводные камни в языковых моделях для интеллекта кода: таксономия и опрос» [2023-10] [Paper]

«Опрос о крупных языковых моделях для разработки программного обеспечения» [2023-12] [Paper]

«Глубокое обучение для интеллекта кода: опрос, эталонный и инструментарий» [2023-12] [Paper]

«Обследование интеллекта нейронного кодекса: парадигмы, достижения и за его пределами» [2024-03] [Paper]

«Задачи, которые люди приглашают: таксономия LLM-нижних задач в подходах к проверке и фальсификации программного обеспечения» [2024-04] [Paper]

«Автоматическое программирование: модели больших языков и за пределами» [2024-05] [Paper]

«Программное обеспечение и модели фундамента: понимание отраслевых блогов с использованием жюри моделей фундамента» [2024-10] [Paper]

«Глубокое обучение разработке программного обеспечения: прогресс, проблемы и возможности» [2024-10] [Paper]

Эти LLMS не специально обучены для кода, но продемонстрировали различную возможность кодирования.

Ламда : «Ламда: Языковые модели для диалоговых приложений» [2022-01] [Paper]

Palm : «Палм: моделирование языка масштабирования с помощью путей» [2022-04] [JMLR] [Paper]

GPT-neox : «GPT-NEOX-20B: модель авторегрессии с открытым исходным кодом» [2022-04]

Bloom : «Bloom: модель многоязычного языка с открытым доступом 176B-параметра» [2022-11] [Paper] [Модель]

Лама : «Лама: открытые и эффективные языковые модели фундамента» [2023-02] [Paper]

GPT-4 : «Технический отчет GPT-4» [2023-03] [Paper]

Llama 2 : «Llama 2: Open Foundation и тонкие модели чата» [2023-07] [Paper] [Repo]

PHI-1.5 : «Учебники-это все, что вам нужно II: PHI-1.5 Технический отчет» [2023-09] [Paper] [Модель]

Baichuan 2 : «Baichuan 2: открытые крупномасштабные языковые модели» [2023-09] [Paper] [Repo]

QWEN : «Технический отчет QWEN» [2023-09] [Paper] [Repo]

Мишстраль : «Мисстраль 7b» [2023-10] [Paper] [Repo]

Близнецы : «Близнецы: семейство высокоэффективных мультимодальных моделей» [2023-12] [Paper]

Phi-2 : «Phi-2: удивительная сила малых языковых моделей» [2023-12] [блог]

Yayi2 : «Yayi 2: многоязычные модели с открытым исходным кодом» [2023-12] [Paper] [Repo]

DeepSeek : «Deepseek LLM: масштабирование языковых моделей с открытым исходным кодом с долгомрождением» [2024-01] [Paper] [Repo]

Mixtral : «Миктрал экспертов» [2024-01] [Paper] [Блог]

DeepSeekmoe : «Deepseekmoe: к окончательной экспертной специализации в смеси языковых моделей» [2024-01] [Paper] [Repo]

Orion : «Orion-14b: многоязычные большие языковые модели с открытым исходным кодом» [2024-01] [Paper] [Repo]

OLMO : «Olmo: ускорение науки о языковых моделях» [2024-02] [Paper] [Repo]

Джемма : «Джемма: открытые модели на основе исследований и технологий Близнецов» [2024-02] [Paper] [Блог]

Claude 3 : «Модельная семейство Claude 3: Opus, Sonnet, Haiku» [2024-03] [Paper] [Блог]

YI : "Yi: Open Foundation Models By 01.ai" [2024-03] [Paper] [Repo]

Поро : «Поро 34b и благословение многоязычной» [2024-04] [Paper] [Модель]

Jetmoe : «Jetmoe: достижение производительности Llama2 с 0,1 млн долларов» [2024-04] [Paper] [Repo]

Llama 3 : «стадо ламы 3 моделей» [2024-04] [блог] [Repo] [Paper]

Reka Core : «Reka Core, Flash и Edge: серия мощных мультимодальных языковых моделей» [2024-04] [Paper]

PHI-3 : «Технический отчет PHI-3: высоко способная языковая модель локально на вашем телефоне» [2024-04] [Paper]

Openelm : «Openelm: эффективная семейство моделей языка с рамками обучения и вывода с открытым исходным кодом» [2024-04] [Paper] [Repo]

Tele-Flm : «Технический отчет Tele-FLM» [2024-04] [Paper] [Модель]

DeepSeek-V2 : «DeepSeek-V2: сильная, экономичная и эффективная модель языковой смеси экспертов» [2024-05] [Paper] [Repo]

Гекко : «Гекко: модель генеративного языка для английского, кода и корейского» [2024-05] [Paper] [Модель]

MAP-neo : «MAP-neo: очень способный и прозрачный двуязычный серия моделей большого языка» [2024-05] [Paper] [Repo]

Skywork-Moe : «Skywork-Moe: глубокое погружение в методы обучения для языковых моделей смеси» [2024-06] [Paper]

Xmodel-LM : «Технический отчет Xmodel-LM» [2024-06] [Paper]

GEB : «GEB-1.3B: открытая легкая модель большой языка» [2024-06] [Paper]

Заяц : «Заяц: Priors, ключ к эффективности модели малого языка» [2024-06] [Paper]

DCLM : «DataComp-LM: в поисках следующего поколения обучающих наборов для языковых моделей» [2024-06] [Paper]

Nemotron-4 : «Nemotron-4 340b технический отчет» [2024-06] [Paper]

Чатглм : «Чатглм: семейство больших языковых моделей от GLM-130B до GLM-4 Все инструменты» [2024-06] [Paper]

ЮЛАН : «Юлан: большая языковая модель с открытым исходным кодом» [2024-06] [Paper]

Gemma 2 : «Gemma 2: улучшение моделей открытого языка при практическом размере» [2024-06] [Paper]

H2O-Danube3 : «Технический отчет H2O-Danube3» [2024-07] [Paper]

QWEN2 : «Технический отчет QWEN2» [2024-07] [Paper]

Аллам : «Аллам: Большие языковые модели для арабского и английского языка» [2024-07] [Paper]

SEALLMS 3 : «SEALLMS 3: Open Foundation и чат многоязычных больших языковых моделей для языков Юго-Восточной Азии» [2024-07] [Paper]

AFM : «Языковые модели Apple Intelligence Foundation» [2024-07] [Paper]

«Кодировать или не кодировать? Изучение влияния кода на предварительном обучении» [2024-08] [Paper]

Olmoe : «Olmoe: Open Mix-Of Experts Language Models» [2024-09] [Paper]

«Как предварительная подготовка кода влияет на выполнение задачи языковой модели?» [2024-09] [бумага]

Eurollm : «Eurollm: многоязычные языковые модели для Европы» [2024-09] [Paper]

«Какой язык программирования и какие функции на стадии предварительного обучения влияют на производительность логического вывода вниз по течению?» [2024-10] [бумага]

GPT-4O : «Системная карта GPT-4O» [2024-10] [Paper]

Hunyuan-Large : «Hunyuan-Large: модель MOE с открытым исходным кодом с 52 миллиардами активированных параметров Tencent» [2024-11] [Paper]

Кристалл : «Кристалл: освещающие способности LLM на языке и коде» [2024-11] [Paper]

Xmodel-1.5 : «Xmodel-1.5: многоязычный LLM в масштабе 1B» [2024-11] [Paper]

Эти модели представляют собой LLMS общего назначения, которые далее предварительно предварительно предоставлены данными, связанными с кодом.

Кодекс (GPT-3): «Оценка моделей крупных языков, обученных коду» [2021-07] [Paper]

Palm Coder (Palm): «Палм: моделирование языка масштабирования с путями» [2022-04] [JMLR] [Paper]

Minerva (Palm): «Решение количественных проблем рассуждений с языковыми моделями» [2022-06] [Paper]

Пальма 2 * (пальма 2): «Палм 2 Технический отчет» [2023-05] [Paper]

Код Llama (Llama 2): «Code Llama: Open Foundation Models для кода» [2023-08] [Paper] [Repo]

Лемур (лама 2): «Лемур: гармонизирующий естественный язык и код для языковых агентов» [2023-10] [ICLR 2024 Spotlight] [Paper]

BTX (Llama 2): «Mranch-Train-Mix: Mixing Expert LLMS в смесь Experts LLM» [2024-03] [Paper]

Хироп : «Хироп: экстраполяция длины для моделей кода с использованием иерархической позиции» [2024-03] [ACL 2024] [Paper]

«Освоение текста, кода и математику одновременно путем слияния высокоспециализированных языковых моделей» [2024-03] [Paper]

Codegemma : «CodeGemma: Open Code Models на основе Gemma» [2024-04] [Paper] [Модель]

DeepSeek-Coder-V2 : «DeepSeek-Coder-V2: нарушение барьер моделей с закрытым исходным кодом в интеллекте кода» [2024-06] [Paper]

«Обещание и опасность моделей генерации совместного кода: баланс эффективности и запоминания» [2024-09] [Paper]

QWEN2.5-CODER : «Технический отчет QWEN2,5-CODER» [2024-09] [Paper]

Lingma swe-gpt : «Lingma swe-gpt: открытая ориентированная на языковая модель разработки для автоматизированного улучшения программного обеспечения» [2024-11] [Paper]

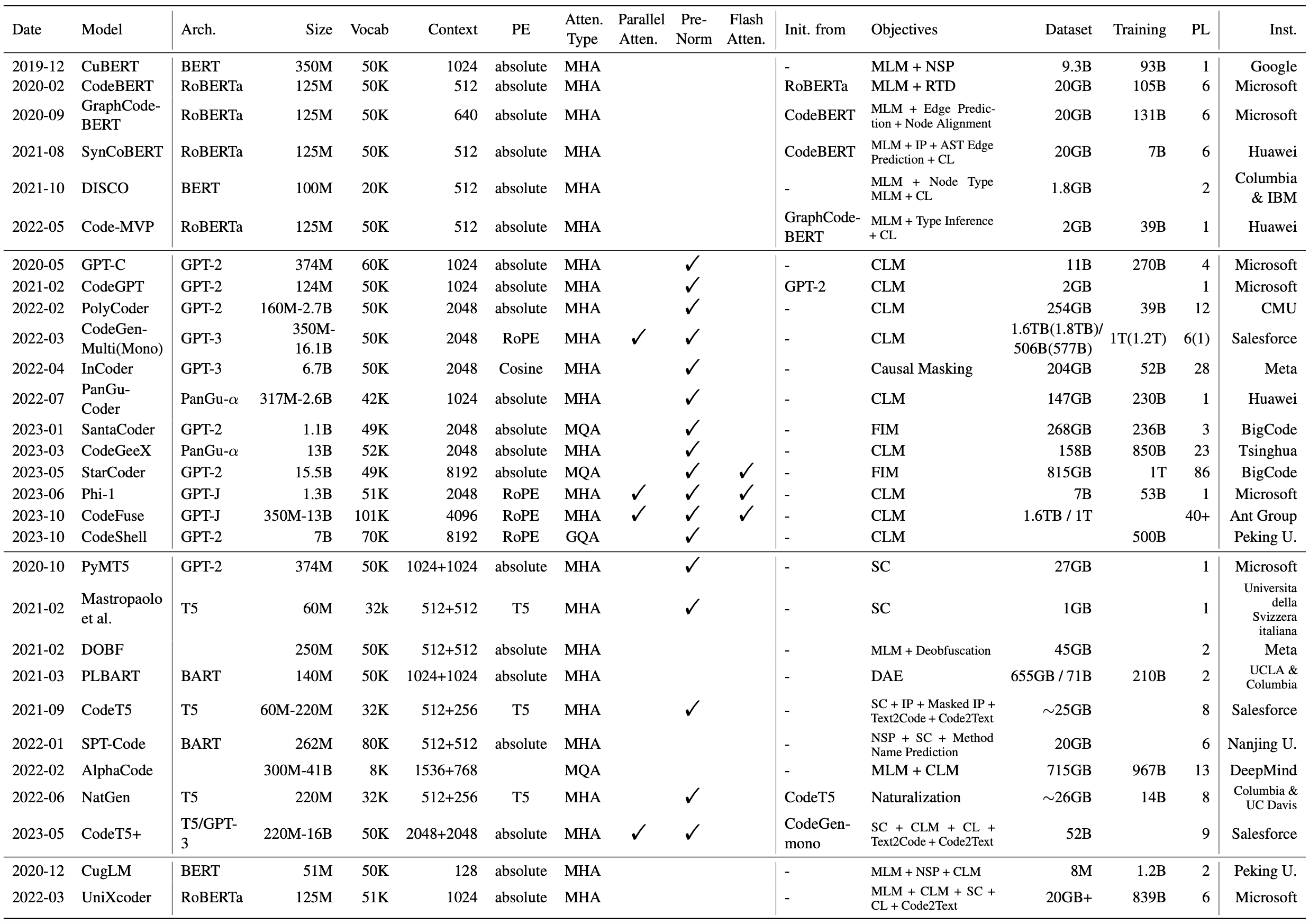

Эти модели представляют собой трансформаторные кодеры, декодеры и декодеры энкодеров, предоставленные с нуля с использованием существующих целей для общего языкового моделирования.

Cubert (MLM + NSP): «Изучение и оценка контекстуального встраивания исходного кода» [2019-12] [ICML 2020] [Paper] [Repo]

Codebert (MLM + RTD): «Codebert: предварительно обученная модель для программирования и естественных языков» [2020-02] [EMNLP 2020 выводы] [Paper] [Repo]

GraphCodebert (MLM + DFG Edge Production + выравнивание узлов DFG): «GraphCodebert: представления кода до обучения с помощью потока данных» [2020-09] [ICLR 2021] [Paper] [Repo]

Syncobert (MLM + идентификатор прогнозирование + AST Edge прогнозирование + контрастное обучение): «Syncobert: Многомодальное контрастное предварительное обучение, управляемое синтаксисом, для представления кода» [2021-08] [Paper]

Disco (MLM + тип узла MLM + контрастное обучение): «На пути к обучению (DIS) -SImility исходного кода из программных контрастов» [2021-10] [ACL 2022] [Paper]

Code-MVP (MLM + тип вывод + контрастное обучение): «Code-MVP: обучение для представления исходного кода из нескольких представлений с контрастным предварительным тренировком» [2022-05] [NAACL 2022 Технический трек] [Paper]

Codesage (MLM + Deobfuscation + Contrastive Learning): «Обучение представления кода в масштабе» [2024-02] [ICLR 2024] [Paper]

Колсберт (MLM): «Законы масштабирования модели понимания кода» [2024-02] [Paper]

GPT-C (CLM): «Компостит Intellicode: генерация кода с использованием трансформатора» [2020-05] [ESEC/FSE 2020] [Paper]

CodeGpt (CLM): «CodexGlue: набор данных контрольного управления для понимания и генерации кода» [2021-02] [Наборы и контрольные показатели Neurips 2021] [Paper] [Repo]

CodeParrot (CLM) [2021-12] [блог]

Polycoder (CLM): «Систематическая оценка крупных языковых моделей кода» [2022-02] [DL4C@ICLR 2022] [Paper] [Repo]

CodeGen (CLM): «CodeGen: открытая большая языковая модель для кода с синтезом программы с несколькими разворотами» [2022-03] [ICLR 2023] [Paper] [Repo]

Incoder (причинно-следственная маскировка): «Инкодер: генеративная модель для кода заполнения и синтеза» [2022-04] [ICLR 2023] [Paper] [Repo]

Pycodegpt (CLM): «Cert: постоянное предварительное обучение по эскизам для генерации кода, ориентированной на библиотеку» [2022-06] [IJCAI-Ecai 2022] [Paper] [Repo]

Pangu-Coder (CLM): «Pangu-Coder: Синтез программы с языком функционального уровня» [2022-07] [Paper]

Santacoder (FIM): "Santacoder: не дотягитесь за звездами!" [2023-01] [Paper] [Модель]

Codegeex (CLM): «CodeGeex: предварительно обученная модель для генерации кода с многоязычными оценками на HumaneVal-X» [2023-03] [Paper] [Repo]

StarCoder (FIM): «StarCoder: Да пребудет источник!» [2023-05] [Paper] [Модель]

PHI-1 (CLM): «Учебники-это все, что вам нужно» [2023-06] [Paper] [Модель]

CodeFuse (CLM): «CodeFuse-13B: предварительно предварительно проведенный многоязычный код большой язык» [2023-10] [Paper] [Модель]

DeepSeek Coder (CLM+FIM): «DeepSeek-Coder: Когда модель большого языка соответствует программированию-рост интеллекта кода» [2024-01] [Paper] [Repo]

StarCoder2 (CLM+FIM): «StarCoder 2 и стек V2: следующее поколение» [2024-02] [Paper] [Repo]

CodeShell (CLM+FIM): «Технический отчет CodeShell» [2024-03] [Paper] [Repo]

CodeQwen1.5 [2024-04] [блог]

Гранит : «Модели гранитного кода: семейство моделей открытых фундаментов для интеллекта кода» [2024-05] [Paper] «Масштабирование моделей гранита кода до 128K контекст» [2024-07] [Paper]

NT-Java : «Узкий трансформатор: Java-LM на основе StarCoder для настольного компьютера» [2024-07] [Paper]

Arctic-Snowcoder : «Arctic-Snowcoder: демистификация высококачественных данных в предварительной подготовке кода» [2024-09] [Paper]

AIXCODER : «AIXCODER-7B: легкая и эффективная большая языковая модель для завершения кода» [2024-10] [Paper]

Opencoder : «Opencoder: открытая кулинарная книга для кода высшего уровня на большие языковые модели» [2024-11] [Paper]

PYMT5 (CRORUPTION): «PYMT5: Многомодовая трансляция естественного языка и кода Python с трансформаторами» [2020-10] [EMNLP 2020] [Paper]

Mastropaolo et al. (MLM + Deobfuscation): «DOBF: A Deobfuscation предварительно тренировочный объектив для языков программирования» [2021-02] [ICSE 2021] [Paper] [Repo]

DOBF (SPAN CORRUPTION): «Изучение использования трансформатора передачи текста в текст для поддержки задач, связанных с кодом» [2021-02] [Neurips 2021] [Paper] [Repo]

Plbart (DAE): «Объединенное предварительное обучение для понимания программы и поколения» [2021-03] [NAACL 2021] [Paper] [Repo]

CODET5 (SPAN CORRUPTION + TAGING IDENIFIER + Прогнозирование идентификатора маскированного идентификатора + TEXT2CODE + CODE2TEXT): «CODET5: Унифицированный предварительно обученный предварительно обученные модели энкодера для понимания и генерации кода» [2021-09] [EMNLP 2021] [Paper] [Repo]

SPT-Code (Span Corruption + NSP + Прогнозирование имени метода): «SPT-Code: предварительное обучение последовательности в последовательности для представлений исходного кода обучения» [2022-01] [ICSE 2022 Технический трек] [Paper]

Альфакод (MLM + CLM): «Генерация кода на уровне конкуренции с альфакодом» [2022-02] [Science] [Paper] [Блог]

Natgen (Code Naturalization): «Natgen: генеративная предварительная тренировка путем« натурализации »исходного кода» [2022-06] [ESEC/FSE 2022] [Paper] [Repo]

Ernie-Code (Span Corruption + Pivot Translation LM): «Эрни-код: за пределами английского ориентированного межсового предварительного подготовки для языков программирования» [2022-12] [ACL23 (выводы)] [Paper] [Repo]

CODET5 + (CROMRUPTION + CLM + Текстовый код контрастирующий обучение + трансляция текстового кода): «Codet5 +: Open Code Большой языковые модели для понимания кода и генерации» [2023-05] [EMNLP 2023] [Paper] [Repo]

AST-T5 (SPAN CORRUPTION): «AST-T5: Предварительная подготовка кода и понимание» [2024-01] [ICML 2024] [Paper] [Paper]

CUGLM (MLM + NSP + CLM): «Многозадачная модель обучения на основе обучения для завершения кода» [2020-12] [ASE 2020] [Paper]

UnixCoder (MLM + NSP + CLM + SPAN CORRUPTION + Contrastive Learning + Code2text): «UnixCoder: унифицированное межмодальное предварительное обучение для представления кода» [2022-03] [ACL 2022] [Paper] [Repo]

Эти модели применяют методы тонкой настройки инструкции для повышения возможностей кода LLMS.

WizardCoder (StarCoder + Evol-Instruct): «WizardCoder: расширение возможностей кода большие языковые модели с помощью evol-instruct» [2023-06] [ICLR 2024] [Paper] [Repo]

Pangu-Coder 2 (StarCoder + Evol-Instruct + RRTF): «Pangu-Coder2: Увеличение больших языковых моделей для кода с ранжированным отзывом» [2023-07] [Paper]

Octocoder (StarCoder) / OctoGeex (CodeGeex2): «Octopack: код настройки инструкций большие языковые модели» [2023-08] [ICLR 2024 Spotlight] [Paper] [Repo]

«На этом этап обучения справляется с кодом данных, которые помогают LLMS рассуждать» [2023-09] [ICLR 2024 Spotlight] [Paper]

Инструктатор : «Инструктор: настройка инструкции большие языковые модели для редактирования кода» [Paper] [Repo]

MFTCoder : «MFTCoder: повышение кода LLM с многозадажной тонкой настройкой» [2023-11] [KDD 2024] [Paper] [Repo]

«Очистка кода с помощью LLM для точных генераторов кода обучения» [2023-11] [ICLR 2024] [Paper]

Magicoder : «Magicoder: расширение возможностей кода с помощью OSS-Instruct» [2023-12] [ICML 2024] [Paper]

WaveCoder : «WaveCoder: широко распространенное и универсальное улучшение для кода большие языковые модели путем настройки инструкций» [2023-12] [ACL 2024] [Paper]

Astraios : «Astraios: Параметр-экономичный код настройки инструкций большие языковые модели» [2024-01] [Paper]

Dolphcoder : «Dolphcoder: эхополирующий код большие языковые модели с разнообразными и многообъясняющими настройками инструкций» [2024-02] [ACL 2024] [Paper]

SafeCoder : «Настройка инструкции для получения защищенного кода» [2024-02] [ICML 2024] [Paper]

«Код нуждается в комментариях: улучшение кода LLM с увеличением комментариев» [ACL 2024 выводы] [Paper]

CCT : «Настройка сравнения кода для моделей Code на больших языках» [2024-03] [Paper]

SAT : «Структурно-аналитическая настройка для моделей, предварительно обученных кодом» [2024-04] [Paper]

Codefort : «Codefort: надежное обучение для моделей генерации кодов» [2024-04] [Paper]

Xft : «XFT: разблокировка мощности настройки инструкций кода, просто слияя Upcycled Mix-Of-Experts» [2024-04] [ACL 2024] [Paper] [Repo]

Анайв-инстакция : «Автокондер: улучшение кода с большой языковой моделью с помощью aiev-instruct» [2024-05] [Paper]

Alchemistcoder : «Alchemistcoder: гармонизирующая и выявляя возможность кода путем настройки задним числом на данные с несколькими источниками» [2024-05] [Paper]

«От символических задач до генерации кода: диверсификация дает лучшие исполнители задач» [2024-05] [Paper]

«Раскрытие влияния инструкции по кодированию данных настройки на большие языковые модели» [2024-05] [Paper]

Слива : «Слива: предпочтение обучению плюс тестовые примеры дают лучшие модели языка кода» [2024-06] [Paper]

MCODER : «MCEVAL: массовая многоязычная оценка кода» [2024-06] [Paper]

«Разблокируйте корреляцию между контролируемой точной настройкой и обучением подкрепления в коде обучения на больших языковых моделях» [2024-06] [Paper]

Код-оптимиза : «Кода-оптимизация: данные самогенерированных предпочтений для правильности и эффективности» [2024-06] [Paper]

Unicoder : «Unicoder: Code Code большой языковой модель через универсальный код» [2024-06] [ACL 2024] [Paper]

«Благодарность-это душа остроумия: обрезка длинных файлов для генерации кода» [2024-06] [Paper]

«Код меньше, выровняйте больше: эффективная тонкая настройка LLM для генерации кода с обрезкой данных» [2024-07] [Paper]

Inversecoder : «Inversecoder: выпущение мощности LLMS, настроенных на инструкции, с обратной установкой» [2024-07] [Paper]

«Обучение учебным программам для малых моделей языка кода» [2024-07] [Paper]

Генетический инстактр : «Генетический инструкт: масштабирование синтетической генерации инструкций кодирования для моделей крупных языков» [2024-07] [Paper]

DataScope : «Синтез набора данных API-управляемых с большими моделями Conetune крупных кодов» [2024-08] [Paper]

** xcoder **: «Как выполняет ваш код LLM?

Галла : «Галла: график выравнивал большие языковые модели для улучшения понимания исходного кода» [2024-09] [Paper]

Hexacoder : «Hexacoder: Gesect Code Generation с помощью синтетического обучения Oracle с помощью Oracle» [2024-09] [Paper]

AMR-EVOL : «AMR-EVOL: Эволюция адаптивного модульного ответа вызывает лучшую дистилляцию знаний для крупных языковых моделей в генерации кода» [2024-10] [Paper]

Lintseq : «Модели языка обучения на последовательностях синтетических редактирования улучшают синтез кода» [2024-10] [Paper]

COBA : «COBA: Balancer с конвергенцией для многозадачного создания больших языковых моделей» [2024-10] [EMNLP 2024] [Paper]

Coursorcore : «Cursorcore: Помощь в программировании через что-нибудь по сравнению с чем-либо» [2024-10] [Paper]

SelfCodeAlign : «SelfCodeAlign: самоопределение для генерации кода» [2024-10] [Paper]

«Освоение ремесла синтеза данных для коделлм» [2024-10] [Paper]

Коделутра : «Коделутра: повышение генерации кода LLM посредством уточнения под предпочтениями» [2024-11] [Paper]

DSTC : «DSTC: Прямое обучение предпочтениям с самостоятельными тестами и кодом для улучшения кода LMS» [2024-11] [Paper]

Compcoder : «Генерация с компилятором с обратной связью компилятора» [2022-03] [ACL 2022] [Paper]

Coderl : «Coderl: Mastering Generation с помощью предварительно подготовленных моделей и обучения глубоким подкреплением» [2022-07] [Neurips 2022] [Paper] [Repo]

PPOCODER : «Генерация кода на основе выполнения с использованием глубокого обучения подкреплению» [2023-01] [TMLR 2023] [Paper] [Repo]

RLTF : «RLTF: Увеличение подкрепления от обратной связи единичных испытаний» [2023-07] [Paper] [Repo]

B-Coder : «B-Coder: Обучение на основе глубокого армирования на основе ценностей для синтеза программы» [2023-10] [ICLR 2024] [Paper]

Ircoco : «Ircoco: немедленное обучение глубоким подкреплению, управляемое вознаграждениями для завершения кода» [2024-01] [FSE 2024] [Paper]

Отчетный кодер : «Отчетник: улучшить генерацию кода с помощью обучения подкреплению от обратной связи компилятора» [2024-02] [ACL 2024] [Paper]

RLPF & DPA : «LLMS, выдвинутые на производительность для генерации быстрого кода» [2024-04] [Paper]

«Измерение запоминания в RLHF для завершения кода» [2024-06] [Paper]

«Применение RLAIF для генерации кода с API-USAGE в легких LLMS» [2024-06] [Paper]

RLCODER : «RLCODER: обучение подкрепления для завершения кода на уровне хранилища» [2024-07] [Paper]

PF-PPO : «Фильтрация политики в RLHF для Fine-Tune LLM для генерации кода» [2024-09] [Paper]

Кофе-гим : «Кофейный гим: среда для оценки и улучшения обратной связи естественного языка по ошибочному коду» [2024-09] [Paper]

RLEF : «RLEF: код заземления LLMS в обратной связи с подкреплением» [2024-10] [Paper]

Codepmp : «Codepmp: масштабируемая модель предпочтения предварительно подготовка для рассуждения о большой языке» [2024-10] [Paper]

CodedPo : «CodedPo: выравнивающие кодовые модели с самого сгенерированным и проверенным исходным кодом» [2024-10] [Paper]

«Оптимизация политики под контролем процесса для генерации кода» [2024-10] [Paper]

«Выравнивание коделлм с прямой оптимизацией предпочтений» [2024-10] [Paper]

Сокол : «Сокол: адаптивная адаптивная/кратковременная память, управляемая обратной связью» [2024-10] [Paper]

PFPO : «Оптимизация предпочтений для рассуждения с псевдо обратной связью» [2024-11] [Paper]

PAL : «PAL: модели языка с программой» [2022-11] [ICML 2023] [Paper] [Repo]

Горник : «Программа мыслей, подсказывающая: раскрытие вычислений от рассуждений по численным задачам» [2022-11] [TMLR 2023] [Paper] [Repo]

PAD : «PAD: Программная дистилляция может научить мелких моделей, рассуждать лучше, чем цепь той настройки» [2023-05] [NAACL 2024] [Paper]

CSV : «Решение сложных задач по математическим словам с использованием интерпретатора кода GPT-4 с самоверовым на основе кода» [2023-08] [ICLR 2024] [Paper]

MathCoder : «MathCoder: бесшовная интеграция кода в LLMS для улучшенных математических рассуждений» [2023-10] [ICLR 2024] [Paper]

COC : «Цепочка кода: рассуждения с эмулятором кода с моделью языка» [2023-12] [ICML 2024] [Paper]

Марио : «Марио: математическая рассуждения с выводом интерпретатора кода-воспроизводимый трубопровод» [2024-01] [ACL 2024 выводы] [Paper]

Regal : «Regal: программы рефакторинга для обнаружения обобщаемых абстракций» [2024-01] [ICML 2024] [Paper]

«Действия исполняемого кода вызывают лучшие агенты LLM» [2024-02] [ICML 2024] [Paper]

Hpropro : «Изучение гибридного ответа на вопрос с помощью программного подсказки» [2024-02] [ACL 2024] [Paper]

Xstreet : «Выявление лучших многоязычных структурированных рассуждений из LLMS до кода» [2024-03] [ACL 2024] [Paper]

Flowmind : «Flowmind: автоматическое генерация рабочих процессов с LLMS» [2024-03] [Paper]

Think и Execute : «Языковые модели как компиляторы: моделирование выполнения псевдокода улучшает алгоритмические рассуждения в языковых моделях» [2024-04] [Paper]

Ядро : «Ядро: LLM как интерпретатор для программирования естественного языка, программирования псевдокода и программирования потока агентов ИИ» [2024-05] [Paper]

Mumath-код : «Mumath-код: комбинирование больших языковых моделей с использованием инструментов с многоперспективным увеличением данных для математических рассуждений» [2024-05] [Paper]

Cogex : «Learning to рассуждать с помощью генерации, эмуляции и поиска программ» [2024-05] [Paper]

«Арифметические рассуждения с LLM: генерация и перестановка пролог» [2024-05] [Paper]

"Могут ли LLMS разум в дикой природе с программами?" [2024-06] [бумага]

Дотамат : «Дотамат: разложение мышления с помощью кодовой помощи и самокоррекции для математических рассуждений» [2024-07] [Paper]

Cibench : «Cibench: оценка ваших LLM с помощью плагина интерпретатора кода» [2024-07] [Paper]

Pybench : «Pybench: оценка агента LLM на различных реальных задачах кодирования» [2024-07] [Paper]

Adacoder : «Adacoder: адаптивное приглашение сжатие для программного визуального ответа» [2024-07] [Paper]

Pyramidcoder : «Pyramid Coder: иерархический код генератор для композиционного визуального ответа» [2024-07] [Paper]

CodeGraph : «CodeGraph: улучшение рассуждений с графом LLM с кодом» [2024-08] [Paper]

SIAM : «Сиам: самосовершенствование кода математические рассуждения о крупных языковых моделях» [2024-08] [Paper]

Codeplan : «Codeplan: разблокировка потенциала рассуждения в крупных моделях Langauge путем масштабирования планирования формы кода» [2024-09] [Paper]

Горник : «Доказательство мышления: синтез нейросимболической программы допускает надежные и интерпретируемые рассуждения» [2024-09] [Paper]

Метамат : «Метамат: интеграция естественного языка и кода для улучшенных математических рассуждений в моделях крупных языков» [2024-09] [Paper]

«Babelbench: Omni Clandmark для кодового анализа мультимодальных и мультиструктурированных данных» [2024-10] [Paper]

Codesteer : «Управление большими языковыми моделями между выполнением кода и текстовыми рассуждениями» [2024-10] [Paper]

MathCoder2 : «MathCoder2: лучшие математические рассуждения от продолжающейся предварительной подготовки по математическому коду, трансляционному модели» [2024-10] [Paper]

LLMFP : «Планирование чего-либо с строгостью: планирование общего назначения с нулевым выстрелом с формализованным программированием на основе LLM» [2024-10] [Paper]

Докажите : «Не все голоса считаются программами как проверки улучшают самосогласованность языковых моделей для математических рассуждений» [2024-10] [Paper]

Докажите : «Доверьтесь, но проверьте: программная оценка VLM в дикой природе» [2024-10] [Paper]

Геокодер : «Геокодер: решение задач геометрии путем генерации модульного кода через модели на языке зрения» [2024-10] [Paper]

DeaseAgain : «Разрешение: Использование извлекаемых символических программ для оценки математических рассуждений» [2024-10] [Paper]

GFP : «Подсказка заполнения пробелов усиливает математические рассуждения с помощью кода» [2024-11] [Paper]

Utmath : «Utmath: оценка математики с помощью единичного теста посредством мысли о рассуждениях о кодировании» [2024-11] [Paper]

Кокоп : «Кокоп: Улучшение текстовой классификации с помощью LLM с помощью подсказки завершения кода» [2024-11] [Paper]

Планирование Replan : «Интерактивное и выразительное планирование кода с большими языковыми моделями» [2024-11] [Paper]

«Проблемы моделирования кода для больших языковых моделей» [2024-01] [Paper]

«Codemind: основа для бросания в борьбу с большими языковыми моделями для рассуждения кода» [2024-02] [Paper]

«Выполнение алгоритмов, описываемых естественным языком с большими языковыми моделями: исследование» [2024-02] [Paper]

«Могут ли языковые модели притворяться решателями? Моделирование логического кода с LLMS» [2024-03] [Paper]

«Оценка крупных языковых моделей с поведением времени выполнения программы» [2024-03] [Paper]

«Далее: обучение крупным языковым моделям для разума об выполнении кода» [2024-04] [ICML 2024] [Paper]

«SelfPico: самостоятельное выполнение частичного кода с LLMS» [2024-07] [Paper]

«Большие языковые модели как исполнители кода: исследовательское исследование» [2024-10] [Paper]

«VisualCoder: направление больших языковых моделей в выполнении кода с помощью мелкозернистой мультимодальной цепочки мыслей» [2024-10] [Paper]

Самооборота : «Генерация кода самостоятельной работы через CHATGPT» [2023-04] [Paper]

Чатдев : «Коммуникативные агенты для разработки программного обеспечения» [2023-07] [Paper] [Repo]

METAGPT : «Метагпт: метапрограммирование для многоагентной совместной системы» [2023-08] [Paper] [Repo]

CODECAUN : «Кодахня: к генерации модульного кода через цепочку самостоятельных разложений с репрезентативными подмодулами» [2023-10] [ICLR 2024] [Paper]

Codeagent : «Кодекс: улучшение генерации кода с помощью интегрированных инструментов систем агентов для реальных задач кодирования в реальном мире» [2024-01] [ACL 2024] [Paper]

Conline : «Conline: Комплексное генерация и утонченность кода с помощью онлайн-поиска и испытания правильности» [2024-03] [Paper]

LCG : «Когда генерация кодов на основе LLM соответствует процессу разработки программного обеспечения» [2024-03] [Paper]

RepairAgent : «RepairAgent: автономный агент на основе LLM для ремонта программы» [2024-03] [Paper]

MAGIS :: «Magis: LLM на основе многоагентной структуры для решения выпуска GitHub» [2024-03] [Paper]

SOA : «Самоорганизованные агенты: многоагентная структура LLM в направлении Ultra крупномасштабной генерации и оптимизации кода» [2024-04] [Paper]

Autocoderover : «Autocoderover: Автономное улучшение программы» [2024-04] [Paper]

Swe-Agent : «Swe-Agent: Agent-Computer Interfaces включает автоматизированную разработку программного обеспечения» [2024-05] [Paper]

MapCoder : «MapCoder: генерация многоагентных кодов для решения конкурентных проблем» [2024-05] [ACL 2024] [Paper]

«Борьба с огнем с огнем: сколько мы можем доверять CHATGPT по задачам, связанным с исходным кодом?» [2024-05] [бумага]

FunCoder : "Divide-and-Conquer Meets Consensus: Unleashing the Power of Functions in Code Generation" [2024-05] [paper]

CTC : "Multi-Agent Software Development through Cross-Team Collaboration" [2024-06] [paper]

MASAI : "MASAI: Modular Architecture for Software-engineering AI Agents" [2024-06] [paper]

AgileCoder : "AgileCoder: Dynamic Collaborative Agents for Software Development based on Agile Methodology" [2024-06] [paper]

CodeNav : "CodeNav: Beyond tool-use to using real-world codebases with LLM agents" [2024-06] [paper]

INDICT : "INDICT: Code Generation with Internal Dialogues of Critiques for Both Security and Helpfulness" [2024-06] [paper]

AppWorld : "AppWorld: A Controllable World of Apps and People for Benchmarking Interactive Coding Agents" [2024-07] [paper]

CortexCompile : "CortexCompile: Harnessing Cortical-Inspired Architectures for Enhanced Multi-Agent NLP Code Synthesis" [2024-08] [paper]

Survey : "Large Language Model-Based Agents for Software Engineering: A Survey" [2024-09] [paper]

AutoSafeCoder : "AutoSafeCoder: A Multi-Agent Framework for Securing LLM Code Generation through Static Analysis and Fuzz Testing" [2024-09] [paper]

SuperCoder2.0 : "SuperCoder2.0: Technical Report on Exploring the feasibility of LLMs as Autonomous Programmer" [2024-09] [paper]

Survey : "Agents in Software Engineering: Survey, Landscape, and Vision" [2024-09] [paper]

MOSS : "MOSS: Enabling Code-Driven Evolution and Context Management for AI Agents" [2024-09] [paper]

HyperAgent : "HyperAgent: Generalist Software Engineering Agents to Solve Coding Tasks at Scale" [2024-09] [paper]

"Compositional Hardness of Code in Large Language Models -- A Probabilistic Perspective" [2024-09] [paper]

RGD : "RGD: Multi-LLM Based Agent Debugger via Refinement and Generation Guidance" [2024-10] [paper]

AutoML-Agent : "AutoML-Agent: A Multi-Agent LLM Framework for Full-Pipeline AutoML" [2024-10] [paper]

Seeker : "Seeker: Enhancing Exception Handling in Code with LLM-based Multi-Agent Approach" [2024-10] [paper]

REDO : "REDO: Execution-Free Runtime Error Detection for COding Agents" [2024-10] [paper]

"Evaluating Software Development Agents: Patch Patterns, Code Quality, and Issue Complexity in Real-World GitHub Scenarios" [2024-10] [paper]

EvoMAC : "Self-Evolving Multi-Agent Collaboration Networks for Software Development" [2024-10] [paper]

VisionCoder : "VisionCoder: Empowering Multi-Agent Auto-Programming for Image Processing with Hybrid LLMs" [2024-10] [paper]

AutoKaggle : "AutoKaggle: A Multi-Agent Framework for Autonomous Data Science Competitions" [2024-10] [paper]

Watson : "Watson: A Cognitive Observability Framework for the Reasoning of Foundation Model-Powered Agents" [2024-11] [paper]

CodeTree : "CodeTree: Agent-guided Tree Search for Code Generation with Large Language Models" [2024-11] [paper]

EvoCoder : "LLMs as Continuous Learners: Improving the Reproduction of Defective Code in Software Issues" [2024-11] [paper]

"Interactive Program Synthesis" [2017-03] [paper]

"Question selection for interactive program synthesis" [2020-06] [PLDI 2020] [paper]

"Interactive Code Generation via Test-Driven User-Intent Formalization" [2022-08] [paper]

"Improving Code Generation by Training with Natural Language Feedback" [2023-03] [TMLR] [paper]

"Self-Refine: Iterative Refinement with Self-Feedback" [2023-03] [NeurIPS 2023] [paper]

"Teaching Large Language Models to Self-Debug" [2023-04] [paper]

"Self-Edit: Fault-Aware Code Editor for Code Generation" [2023-05] [ACL 2023] [paper]

"LeTI: Learning to Generate from Textual Interactions" [2023-05] [paper]

"Is Self-Repair a Silver Bullet for Code Generation?" [2023-06] [ICLR 2024] [paper]

"InterCode: Standardizing and Benchmarking Interactive Coding with Execution Feedback" [2023-06] [NeurIPS 2023] [paper]

"INTERVENOR: Prompting the Coding Ability of Large Language Models with the Interactive Chain of Repair" [2023-11] [ACL 2024 Findings] [paper]

"OpenCodeInterpreter: Integrating Code Generation with Execution and Refinement" [2024-02] [ACL 2024 Findings] [paper]

"Iterative Refinement of Project-Level Code Context for Precise Code Generation with Compiler Feedback" [2024-03] [ACL 2024 Findings] [paper]

"CYCLE: Learning to Self-Refine the Code Generation" [2024-03] [paper]

"LLM-based Test-driven Interactive Code Generation: User Study and Empirical Evaluation" [2024-04] [paper]

"SOAP: Enhancing Efficiency of Generated Code via Self-Optimization" [2024-05] [paper]

"Code Repair with LLMs gives an Exploration-Exploitation Tradeoff" [2024-05] [paper]

"ReflectionCoder: Learning from Reflection Sequence for Enhanced One-off Code Generation" [2024-05] [paper]

"Training LLMs to Better Self-Debug and Explain Code" [2024-05] [paper]

"Requirements are All You Need: From Requirements to Code with LLMs" [2024-06] [paper]

"I Need Help! Evaluating LLM's Ability to Ask for Users' Support: A Case Study on Text-to-SQL Generation" [2024-07] [paper]

"An Empirical Study on Self-correcting Large Language Models for Data Science Code Generation" [2024-08] [paper]

"RethinkMCTS: Refining Erroneous Thoughts in Monte Carlo Tree Search for Code Generation" [2024-09] [paper]

"From Code to Correctness: Closing the Last Mile of Code Generation with Hierarchical Debugging" [2024-10] [paper] [repo]

"What Makes Large Language Models Reason in (Multi-Turn) Code Generation?" [2024-10] [paper]

"The First Prompt Counts the Most! An Evaluation of Large Language Models on Iterative Example-based Code Generation" [2024-11] [paper]

"Planning-Driven Programming: A Large Language Model Programming Workflow" [2024-11] [paper]

"ConAIR:Consistency-Augmented Iterative Interaction Framework to Enhance the Reliability of Code Generation" [2024-11] [paper]

"MarkupLM: Pre-training of Text and Markup Language for Visually-rich Document Understanding" [2021-10] [ACL 2022] [paper]

"WebKE: Knowledge Extraction from Semi-structured Web with Pre-trained Markup Language Model" [2021-10] [CIKM 2021] [paper]

"WebGPT: Browser-assisted question-answering with human feedback" [2021-12] [paper]

"CM3: A Causal Masked Multimodal Model of the Internet" [2022-01] [paper]

"DOM-LM: Learning Generalizable Representations for HTML Documents" [2022-01] [paper]

"WebFormer: The Web-page Transformer for Structure Information Extraction" [2022-02] [WWW 2022] [paper]

"A Dataset for Interactive Vision-Language Navigation with Unknown Command Feasibility" [2022-02] [ECCV 2022] [paper]

"WebShop: Towards Scalable Real-World Web Interaction with Grounded Language Agents" [2022-07] [NeurIPS 2022] [paper]

"Pix2Struct: Screenshot Parsing as Pretraining for Visual Language Understanding" [2022-10] [ICML 2023] [paper]

"Understanding HTML with Large Language Models" [2022-10] [EMNLP 2023 findings] [paper]

"WebUI: A Dataset for Enhancing Visual UI Understanding with Web Semantics" [2023-01] [CHI 2023] [paper]

"Mind2Web: Towards a Generalist Agent for the Web" [2023-06] [NeurIPS 2023] [paper]

"A Real-World WebAgent with Planning, Long Context Understanding, and Program Synthesis", [2023-07] [ICLR 2024] [paper]

"WebArena: A Realistic Web Environment for Building Autonomous Agents" [2023-07] [paper]

"CogAgent: A Visual Language Model for GUI Agents" [2023-12] [paper]

"GPT-4V(ision) is a Generalist Web Agent, if Grounded" [2024-01] [paper]

"WebVoyager: Building an End-to-End Web Agent with Large Multimodal Models" [2024-01] [paper]

"WebLINX: Real-World Website Navigation with Multi-Turn Dialogue" [2024-02] [paper]

"OmniACT: A Dataset and Benchmark for Enabling Multimodal Generalist Autonomous Agents for Desktop and Web" [2024-02] [paper]

"AutoWebGLM: Bootstrap And Reinforce A Large Language Model-based Web Navigating Agent" [2024-04] [paper]

"WILBUR: Adaptive In-Context Learning for Robust and Accurate Web Agents" [2024-04] [paper]

"AutoCrawler: A Progressive Understanding Web Agent for Web Crawler Generation" [2024-04] [paper]

"GUICourse: From General Vision Language Models to Versatile GUI Agents" [2024-06] [paper]

"NaviQAte: Functionality-Guided Web Application Navigation" [2024-09] [paper]

"MobileVLM: A Vision-Language Model for Better Intra- and Inter-UI Understanding" [2024-09] [paper]

"Multimodal Auto Validation For Self-Refinement in Web Agents" [2024-10] [paper]

"Navigating the Digital World as Humans Do: Universal Visual Grounding for GUI Agents" [2024-10] [paper]

"Web Agents with World Models: Learning and Leveraging Environment Dynamics in Web Navigation" [2024-10] [paper]

"Harnessing Webpage UIs for Text-Rich Visual Understanding" [2024-10] [paper]

"AgentOccam: A Simple Yet Strong Baseline for LLM-Based Web Agents" [2024-10] [paper]

"Beyond Browsing: API-Based Web Agents" [2024-10] [paper]

"Large Language Models Empowered Personalized Web Agents" [2024-10] [paper]

"AdvWeb: Controllable Black-box Attacks on VLM-powered Web Agents" [2024-10] [paper]

"Auto-Intent: Automated Intent Discovery and Self-Exploration for Large Language Model Web Agents" [2024-10] [paper]

"OS-ATLAS: A Foundation Action Model for Generalist GUI Agents" [2024-10] [paper]

"From Context to Action: Analysis of the Impact of State Representation and Context on the Generalization of Multi-Turn Web Navigation Agents" [2024-10] [paper]

"AutoGLM: Autonomous Foundation Agents for GUIs" [2024-10] [paper]

"WebRL: Training LLM Web Agents via Self-Evolving Online Curriculum Reinforcement Learning" [2024-11] [paper]

"The Dawn of GUI Agent: A Preliminary Case Study with Claude 3.5 Computer Use" [2024-11] [paper]

"ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data" [2024-11] [paper]

"ShowUI: One Vision-Language-Action Model for GUI Visual Agent" [2024-11] [paper]

[ Ruby ] "On the Transferability of Pre-trained Language Models for Low-Resource Programming Languages" [2022-04] [ICPC 2022] [paper]

[ Verilog ] "Benchmarking Large Language Models for Automated Verilog RTL Code Generation" [2022-12] [DATE 2023] [paper]

[ OCL ] "On Codex Prompt Engineering for OCL Generation: An Empirical Study" [2023-03] [MSR 2023] [paper]

[ Ansible-YAML ] "Automated Code generation for Information Technology Tasks in YAML through Large Language Models" [2023-05] [DAC 2023] [paper]

[ Hansl ] "The potential of LLMs for coding with low-resource and domain-specific programming languages" [2023-07] [paper]

[ Verilog ] "VeriGen: A Large Language Model for Verilog Code Generation" [2023-07] [paper]

[ Verilog ] "RTLLM: An Open-Source Benchmark for Design RTL Generation with Large Language Model" [2023-08] [paper]

[ Racket, OCaml, Lua, R, Julia ] "Knowledge Transfer from High-Resource to Low-Resource Programming Languages for Code LLMs" [2023-08] [paper]

[ Verilog ] "VerilogEval: Evaluating Large Language Models for Verilog Code Generation" [2023-09] [ICCAD 2023] [paper]

[ Verilog ] "RTLFixer: Automatically Fixing RTL Syntax Errors with Large Language Models" [2023-11] [paper]

[ Verilog ] "Advanced Large Language Model (LLM)-Driven Verilog Development: Enhancing Power, Performance, and Area Optimization in Code Synthesis" [2023-12] [paper]

[ Verilog ] "RTLCoder: Outperforming GPT-3.5 in Design RTL Generation with Our Open-Source Dataset and Lightweight Solution" [2023-12] [paper]

[ Verilog ] "BetterV: Controlled Verilog Generation with Discriminative Guidance" [2024-02] [ICML 2024] [paper]

[ R ] "Empirical Studies of Parameter Efficient Methods for Large Language Models of Code and Knowledge Transfer to R" [2024-03] [paper]

[ Haskell ] "Investigating the Performance of Language Models for Completing Code in Functional Programming Languages: a Haskell Case Study" [2024-03] [paper]

[ Verilog ] "A Multi-Expert Large Language Model Architecture for Verilog Code Generation" [2024-04] [paper]

[ Verilog ] "CreativEval: Evaluating Creativity of LLM-Based Hardware Code Generation" [2024-04] [paper]

[ Alloy ] "An Empirical Evaluation of Pre-trained Large Language Models for Repairing Declarative Formal Specifications" [2024-04] [paper]

[ Verilog ] "Evaluating LLMs for Hardware Design and Test" [2024-04] [paper]

[ Kotlin, Swift, and Rust ] "Software Vulnerability Prediction in Low-Resource Languages: An Empirical Study of CodeBERT and ChatGPT" [2024-04] [paper]

[ Verilog ] "MEIC: Re-thinking RTL Debug Automation using LLMs" [2024-05] [paper]

[ Bash ] "Tackling Execution-Based Evaluation for NL2Bash" [2024-05] [paper]

[ Fortran, Julia, Matlab, R, Rust ] "Evaluating AI-generated code for C++, Fortran, Go, Java, Julia, Matlab, Python, R, and Rust" [2024-05] [paper]

[ OpenAPI ] "Optimizing Large Language Models for OpenAPI Code Completion" [2024-05] [paper]

[ Kotlin ] "Kotlin ML Pack: Technical Report" [2024-05] [paper]

[ Verilog ] "VerilogReader: LLM-Aided Hardware Test Generation" [2024-06] [paper]

"Benchmarking Generative Models on Computational Thinking Tests in Elementary Visual Programming" [2024-06] [paper]

[ Logo ] "Program Synthesis Benchmark for Visual Programming in XLogoOnline Environment" [2024-06] [paper]

[ Ansible YAML, Bash ] "DocCGen: Document-based Controlled Code Generation" [2024-06] [paper]

[ Qiskit ] "Qiskit HumanEval: An Evaluation Benchmark For Quantum Code Generative Models" [2024-06] [paper]

[ Perl, Golang, Swift ] "DistiLRR: Transferring Code Repair for Low-Resource Programming Languages" [2024-06] [paper]

[ Verilog ] "AssertionBench: A Benchmark to Evaluate Large-Language Models for Assertion Generation" [2024-06] [paper]

"A Comparative Study of DSL Code Generation: Fine-Tuning vs. Optimized Retrieval Augmentation" [2024-07] [paper]

[ Json, XLM, YAML ] "ConCodeEval: Evaluating Large Language Models for Code Constraints in Domain-Specific Languages" [2024-07] [paper]

[ Verilog ] "AutoBench: Automatic Testbench Generation and Evaluation Using LLMs for HDL Design" [2024-07] [paper]

[ Verilog ] "CodeV: Empowering LLMs for Verilog Generation through Multi-Level Summarization" [2024-07] [paper]

[ Verilog ] "ITERTL: An Iterative Framework for Fine-tuning LLMs for RTL Code Generation" [2024-07] [paper]

[ Verilog ] "OriGen:Enhancing RTL Code Generation with Code-to-Code Augmentation and Self-Reflection" [2024-07] [paper]

[ Verilog ] "Large Language Model for Verilog Generation with Golden Code Feedback" [2024-07] [paper]

[ Verilog ] "AutoVCoder: A Systematic Framework for Automated Verilog Code Generation using LLMs" [2024-07] [paper]

[ RPA ] "Plan with Code: Comparing approaches for robust NL to DSL generation" [2024-08] [paper]

[ Verilog ] "VerilogCoder: Autonomous Verilog Coding Agents with Graph-based Planning and Abstract Syntax Tree (AST)-based Waveform Tracing Tool" [2024-08] [paper]

[ Verilog ] "Revisiting VerilogEval: Newer LLMs, In-Context Learning, and Specification-to-RTL Tasks" [2024-08] [paper]

[ MaxMSP, Web Audio ] "Benchmarking LLM Code Generation for Audio Programming with Visual Dataflow Languages" [2024-09] [paper]

[ Verilog ] "RTLRewriter: Methodologies for Large Models aided RTL Code Optimization" [2024-09] [paper]

[ Verilog ] "CraftRTL: High-quality Synthetic Data Generation for Verilog Code Models with Correct-by-Construction Non-Textual Representations and Targeted Code Repair" [2024-09] [paper]

[ Bash ] "ScriptSmith: A Unified LLM Framework for Enhancing IT Operations via Automated Bash Script Generation, Assessment, and Refinement" [2024-09] [paper]

[ Survey ] "Survey on Code Generation for Low resource and Domain Specific Programming Languages" [2024-10] [paper]

[ R ] "Do Current Language Models Support Code Intelligence for R Programming Language?" [2024-10] [paper]

"Can Large Language Models Generate Geospatial Code?" [2024-10] [paper]

[ PLC ] "Agents4PLC: Automating Closed-loop PLC Code Generation and Verification in Industrial Control Systems using LLM-based Agents" [2024-10] [paper]

[ Lua ] "Evaluating Quantized Large Language Models for Code Generation on Low-Resource Language Benchmarks" [2024-10] [paper]

"Improving Parallel Program Performance Through DSL-Driven Code Generation with LLM Optimizers" [2024-10] [paper]

"GeoCode-GPT: A Large Language Model for Geospatial Code Generation Tasks" [2024-10] [paper]

[ R, D, Racket, Bash ]: "Bridge-Coder: Unlocking LLMs' Potential to Overcome Language Gaps in Low-Resource Code" [2024-10] [paper]

[ SPICE ]: "SPICEPilot: Navigating SPICE Code Generation and Simulation with AI Guidance" [2024-10] [paper]

[ IEC 61131-3 ST ]: "Training LLMs for Generating IEC 61131-3 Structured Text with Online Feedback" [2024-10] [paper]

[ Verilog ] "MetRex: A Benchmark for Verilog Code Metric Reasoning Using LLMs" [2024-11] [paper]

[ Verilog ] "CorrectBench: Automatic Testbench Generation with Functional Self-Correction using LLMs for HDL Design" [2024-11] [paper]

[ MUMPS, ALC ] "Leveraging LLMs for Legacy Code Modernization: Challenges and Opportunities for LLM-Generated Documentation" [2024-11] [paper]

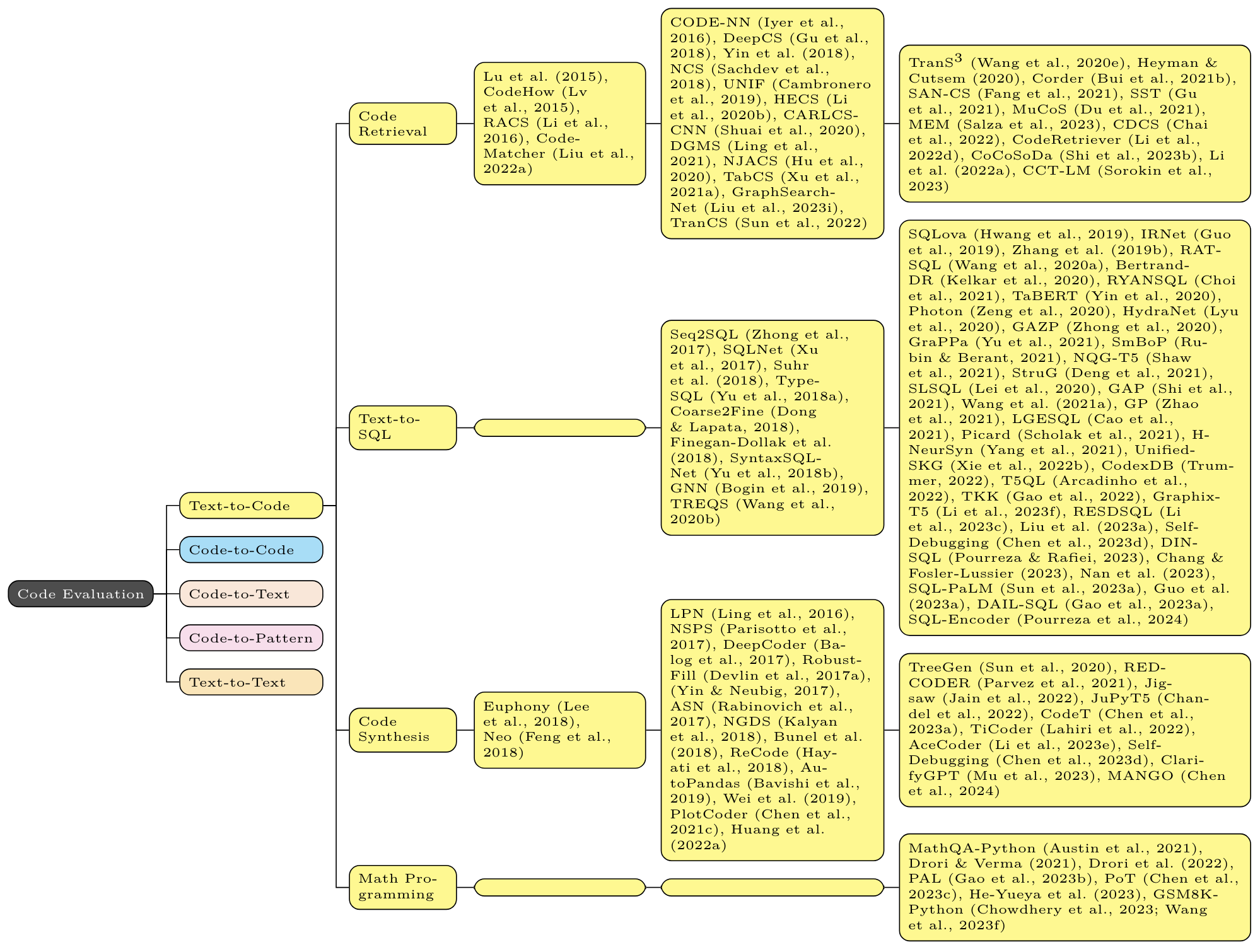

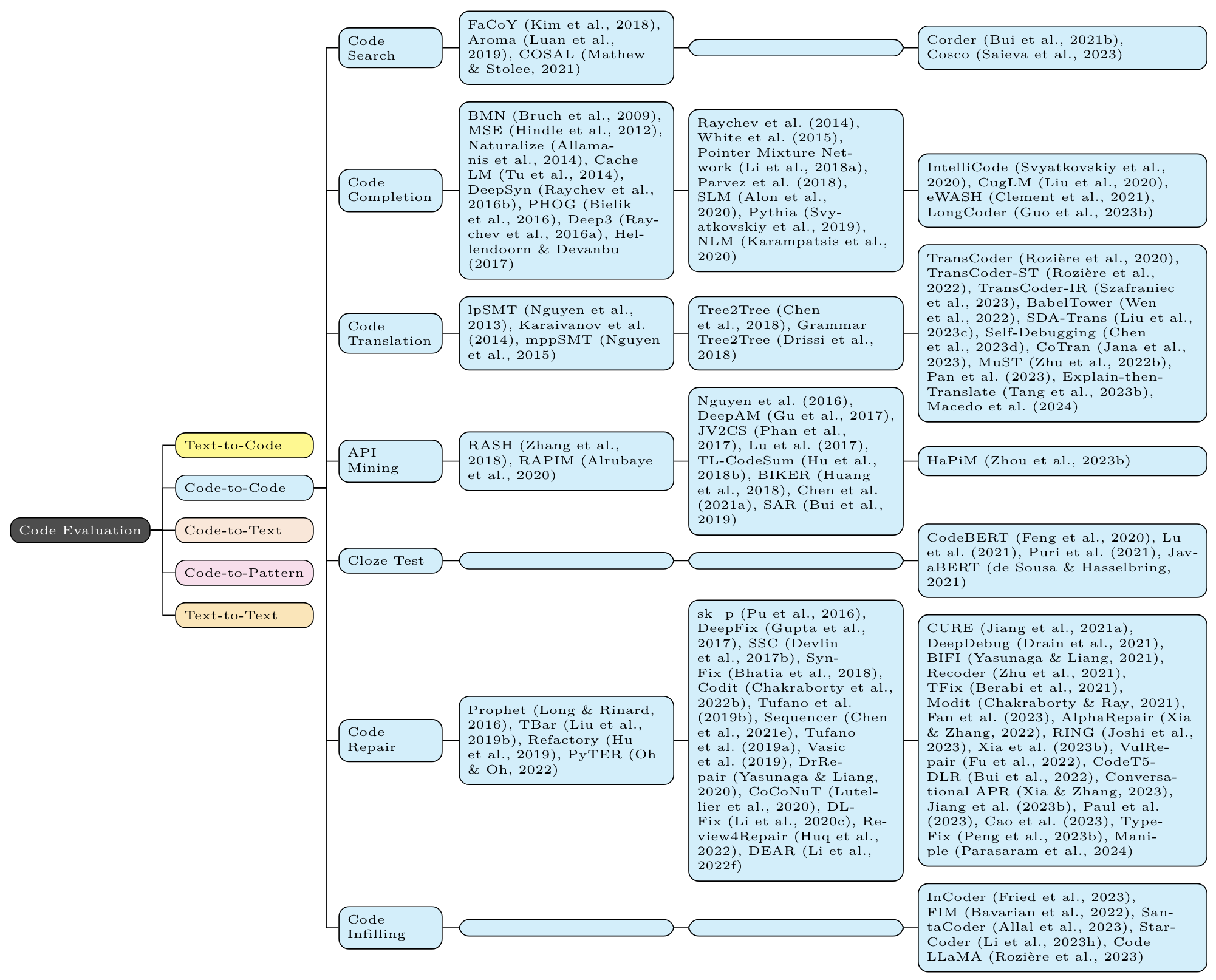

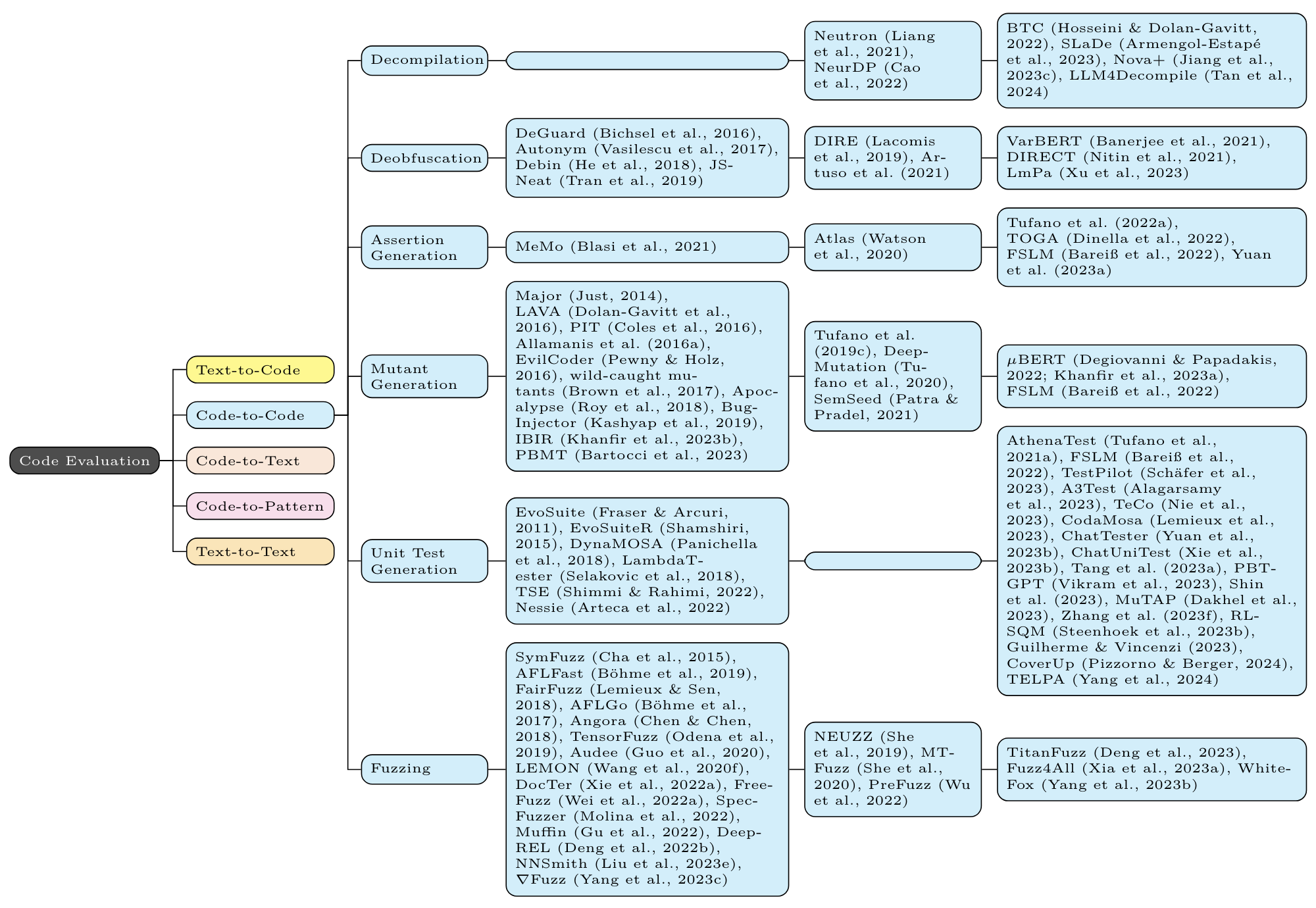

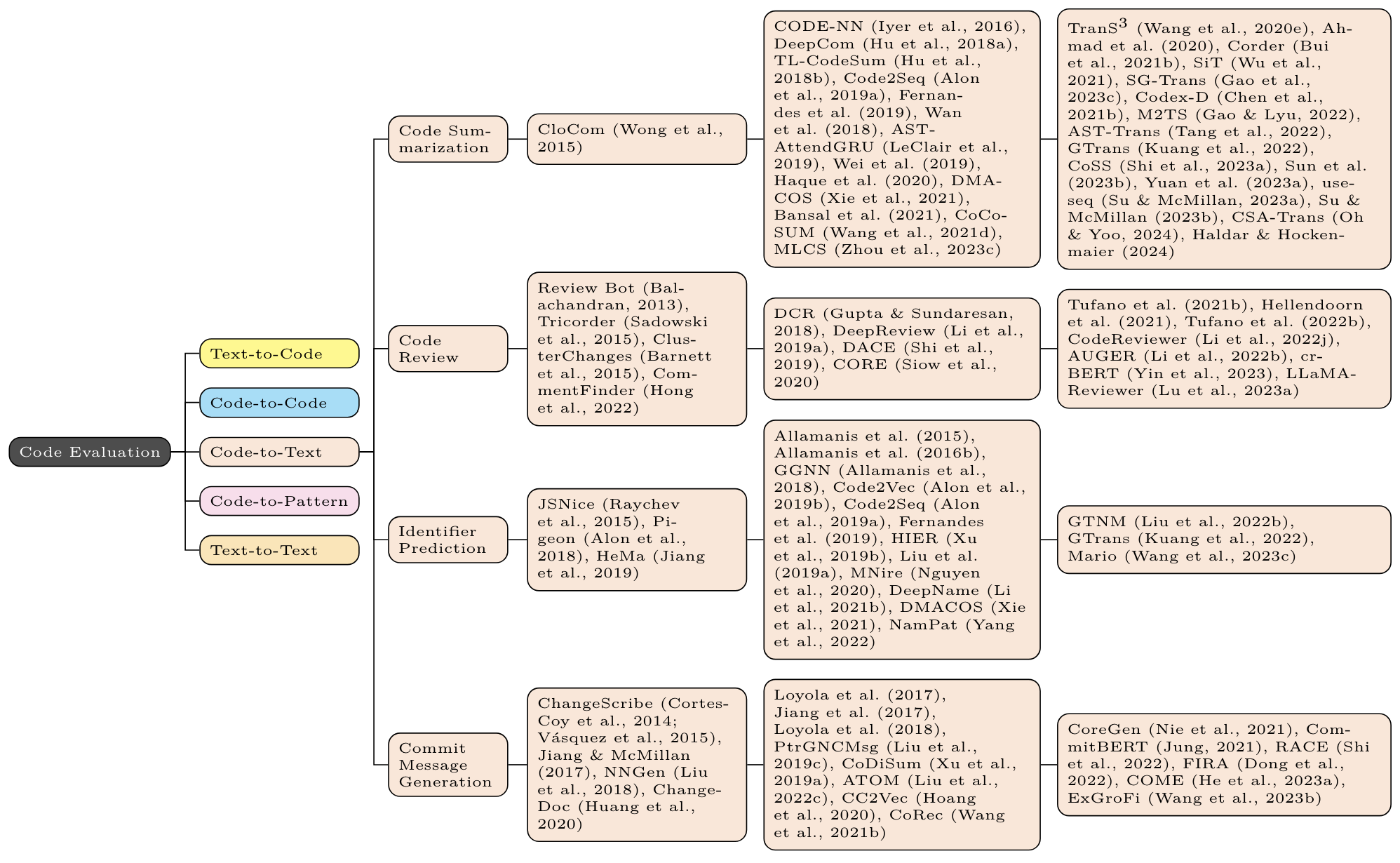

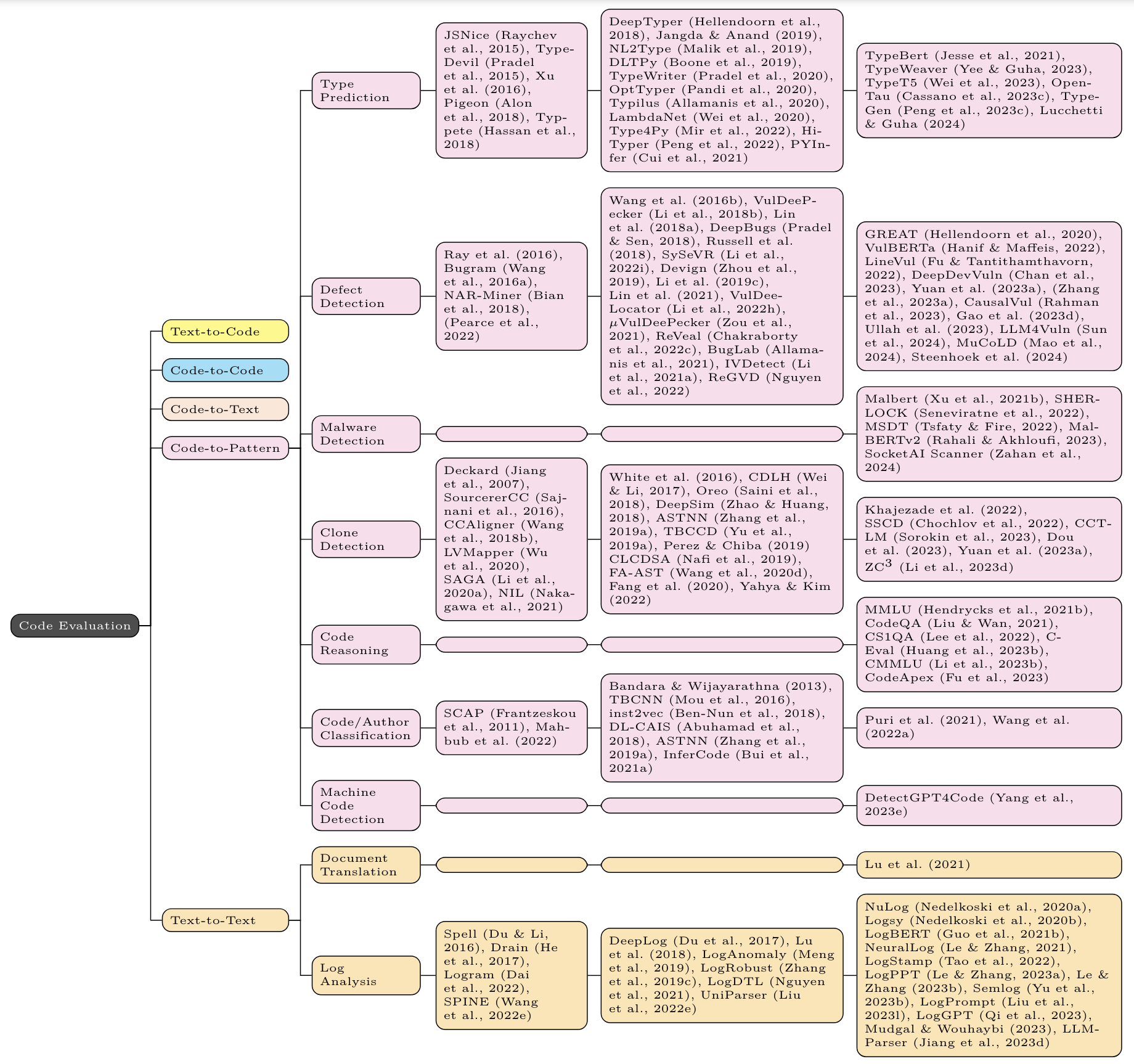

For each task, the first column contains non-neural methods (eg n-gram, TF-IDF, and (occasionally) static program analysis); the second column contains non-Transformer neural methods (eg LSTM, CNN, GNN); the third column contains Transformer based methods (eg BERT, GPT, T5).

"Enhancing Large Language Models in Coding Through Multi-Perspective Self-Consistency" [2023-09] [ACL 2024] [paper]

"Self-Infilling Code Generation" [2023-11] [ICML 2024] [paper]

"JumpCoder: Go Beyond Autoregressive Coder via Online Modification" [2024-01] [ACL 2024] [paper]

"Unsupervised Evaluation of Code LLMs with Round-Trip Correctness" [2024-02] [ICML 2024] [paper]

"The Larger the Better? Improved LLM Code-Generation via Budget Reallocation" [2024-03] [paper]

"Quantifying Contamination in Evaluating Code Generation Capabilities of Language Models" [2024-03] [ACL 2024] [paper]

"Comments as Natural Logic Pivots: Improve Code Generation via Comment Perspective" [2024-04] [ACL 2024 Findings] [paper]

"Distilling Algorithmic Reasoning from LLMs via Explaining Solution Programs" [2024-04] [paper]

"Quality Assessment of Prompts Used in Code Generation" [2024-04] [paper]

"Assessing GPT-4-Vision's Capabilities in UML-Based Code Generation" [2024-04] [paper]

"Large Language Models Synergize with Automated Machine Learning" [2024-05] [paper]

"Model Cascading for Code: Reducing Inference Costs with Model Cascading for LLM Based Code Generation" [2024-05] [paper]

"A Survey on Large Language Models for Code Generation" [2024-06] [paper]

"Is Programming by Example solved by LLMs?" [2024-06] [paper]

"Benchmarks and Metrics for Evaluations of Code Generation: A Critical Review" [2024-06] [paper]

"MPCODER: Multi-user Personalized Code Generator with Explicit and Implicit Style Representation Learning" [2024-06] [ACL 2024] [paper]

"Revisiting the Impact of Pursuing Modularity for Code Generation" [2024-07] [paper]

"Evaluating Long Range Dependency Handling in Code Generation Models using Multi-Step Key Retrieval" [2024-07] [paper]

"When to Stop? Towards Efficient Code Generation in LLMs with Excess Token Prevention" [2024-07] [paper]

"Assessing Programming Task Difficulty for Efficient Evaluation of Large Language Models" [2024-07] [paper]

"ArchCode: Incorporating Software Requirements in Code Generation with Large Language Models" [2024-08] [ACL 2024] [paper]

"Fine-tuning Language Models for Joint Rewriting and Completion of Code with Potential Bugs" [2024-08] [ACL 2024 Findings] [paper]

"Selective Prompt Anchoring for Code Generation" [2024-08] [paper]

"Bridging the Language Gap: Enhancing Multilingual Prompt-Based Code Generation in LLMs via Zero-Shot Cross-Lingual Transfer" [2024-08] [paper]

"Optimizing Large Language Model Hyperparameters for Code Generation" [2024-08] [paper]

"EPiC: Cost-effective Search-based Prompt Engineering of LLMs for Code Generation" [2024-08] [paper]

"CodeRefine: A Pipeline for Enhancing LLM-Generated Code Implementations of Research Papers" [2024-08] [paper]

"No Man is an Island: Towards Fully Automatic Programming by Code Search, Code Generation and Program Repair" [2024-09] [paper]

"Planning In Natural Language Improves LLM Search For Code Generation" [2024-09] [paper]

"Multi-Programming Language Ensemble for Code Generation in Large Language Model" [2024-09] [paper]

"A Pair Programming Framework for Code Generation via Multi-Plan Exploration and Feedback-Driven Refinement" [2024-09] [paper]

"USCD: Improving Code Generation of LLMs by Uncertainty-Aware Selective Contrastive Decoding" [2024-09] [paper]

"Eliciting Instruction-tuned Code Language Models' Capabilities to Utilize Auxiliary Function for Code Generation" [2024-09] [paper]

"Selection of Prompt Engineering Techniques for Code Generation through Predicting Code Complexity" [2024-09] [paper]

"Horizon-Length Prediction: Advancing Fill-in-the-Middle Capabilities for Code Generation with Lookahead Planning" [2024-10] [paper]

"Showing LLM-Generated Code Selectively Based on Confidence of LLMs" [2024-10] [paper]

"AutoFeedback: An LLM-based Framework for Efficient and Accurate API Request Generation" [2024-10] [paper]

"Enhancing LLM Agents for Code Generation with Possibility and Pass-rate Prioritized Experience Replay" [2024-10] [paper]

"From Solitary Directives to Interactive Encouragement! LLM Secure Code Generation by Natural Language Prompting" [2024-10] [paper]

"Self-Explained Keywords Empower Large Language Models for Code Generation" [2024-10] [paper]

"Context-Augmented Code Generation Using Programming Knowledge Graphs" [2024-10] [paper]

"In-Context Code-Text Learning for Bimodal Software Engineering" [2024-10] [paper]

"Combining LLM Code Generation with Formal Specifications and Reactive Program Synthesis" [2024-10] [paper]

"Less is More: DocString Compression in Code Generation" [2024-10] [paper]

"Multi-Programming Language Sandbox for LLMs" [2024-10] [paper]

"Personality-Guided Code Generation Using Large Language Models" [2024-10] [paper]

"Do Advanced Language Models Eliminate the Need for Prompt Engineering in Software Engineering?" [2024-11] [paper]

"Scattered Forest Search: Smarter Code Space Exploration with LLMs" [2024-11] [paper]

"Anchor Attention, Small Cache: Code Generation with Large Language Models" [2024-11] [paper]

"ROCODE: Integrating Backtracking Mechanism and Program Analysis in Large Language Models for Code Generation" [2024-11] [paper]

"SRA-MCTS: Self-driven Reasoning Aurmentation with Monte Carlo Tree Search for Enhanced Code Generation" [2024-11] [paper]

"CodeGRAG: Extracting Composed Syntax Graphs for Retrieval Augmented Cross-Lingual Code Generation" [2024-05] [paper]

"Prompt-based Code Completion via Multi-Retrieval Augmented Generation" [2024-05] [paper]

"A Lightweight Framework for Adaptive Retrieval In Code Completion With Critique Model" [2024-06] [papaer]

"Preference-Guided Refactored Tuning for Retrieval Augmented Code Generation" [2024-09] [paper]

"Building A Coding Assistant via the Retrieval-Augmented Language Model" [2024-10] [paper]

"DroidCoder: Enhanced Android Code Completion with Context-Enriched Retrieval-Augmented Generation" [2024-10] [ASE 2024] [paper]

"Assessing the Answerability of Queries in Retrieval-Augmented Code Generation" [2024-11] [paper]

"Fault-Aware Neural Code Rankers" [2022-06] [NeurIPS 2022] [paper]

"Functional Overlap Reranking for Neural Code Generation" [2023-10] [ACL 2024 Findings] [paper]

"Top Pass: Improve Code Generation by Pass@k-Maximized Code Ranking" [2024-08] [paper]

"DOCE: Finding the Sweet Spot for Execution-Based Code Generation" [2024-08] [paper]

"Sifting through the Chaff: On Utilizing Execution Feedback for Ranking the Generated Code Candidates" [2024-08] [paper]

"B4: Towards Optimal Assessment of Plausible Code Solutions with Plausible Tests" [2024-09] [paper]

"Learning Code Preference via Synthetic Evolution" [2024-10] [paper]

"Tree-to-tree Neural Networks for Program Translation" [2018-02] [NeurIPS 2018] [paper]

"Program Language Translation Using a Grammar-Driven Tree-to-Tree Model" [2018-07] [paper]

"Unsupervised Translation of Programming Languages" [2020-06] [NeurIPS 2020] [paper]

"Leveraging Automated Unit Tests for Unsupervised Code Translation" [2021-10] [ICLR 2022] paper]

"Code Translation with Compiler Representations" [2022-06] [ICLR 2023] [paper]

"Multilingual Code Snippets Training for Program Translation" [2022-06] [AAAI 2022] [paper]

"BabelTower: Learning to Auto-parallelized Program Translation" [2022-07] [ICML 2022] [paper]

"Syntax and Domain Aware Model for Unsupervised Program Translation" [2023-02] [ICSE 2023] [paper]

"CoTran: An LLM-based Code Translator using Reinforcement Learning with Feedback from Compiler and Symbolic Execution" [2023-06] [paper]

"Lost in Translation: A Study of Bugs Introduced by Large Language Models while Translating Code" [2023-08] [ICSE 2024] [paper]

"On the Evaluation of Neural Code Translation: Taxonomy and Benchmark", 2023-08, ASE 2023, [paper]

"Program Translation via Code Distillation" [2023-10] [EMNLP 2023] [paper]

"Explain-then-Translate: An Analysis on Improving Program Translation with Self-generated Explanations" [2023-11] [EMNLP 2023 Findings] [paper]

"Exploring the Impact of the Output Format on the Evaluation of Large Language Models for Code Translation" [2024-03] [paper]

"Exploring and Unleashing the Power of Large Language Models in Automated Code Translation" [2024-04] [paper]

"VERT: Verified Equivalent Rust Transpilation with Few-Shot Learning" [2024-04] [paper]

"Towards Translating Real-World Code with LLMs: A Study of Translating to Rust" [2024-05] [paper]

"An interpretable error correction method for enhancing code-to-code translation" [2024-05] [ICLR 2024] [paper]

"LASSI: An LLM-based Automated Self-Correcting Pipeline for Translating Parallel Scientific Codes" [2024-06] [paper]

"Rectifier: Code Translation with Corrector via LLMs" [2024-07] [paper]

"Enhancing Code Translation in Language Models with Few-Shot Learning via Retrieval-Augmented Generation" [2024-07] [paper]

"A Joint Learning Model with Variational Interaction for Multilingual Program Translation" [2024-08] [paper]

"Automatic Library Migration Using Large Language Models: First Results" [2024-08] [paper]

"Context-aware Code Segmentation for C-to-Rust Translation using Large Language Models" [2024-09] [paper]

"TRANSAGENT: An LLM-Based Multi-Agent System for Code Translation" [2024-10] [paper]

"Unraveling the Potential of Large Language Models in Code Translation: How Far Are We?" [2024-10] [paper]

"CodeRosetta: Pushing the Boundaries of Unsupervised Code Translation for Parallel Programming" [2024-10] [paper]

"A test-free semantic mistakes localization framework in Neural Code Translation" [2024-10] [paper]

"Repository-Level Compositional Code Translation and Validation" [2024-10] [paper]

"Leveraging Large Language Models for Code Translation and Software Development in Scientific Computing" [2024-10] [paper]

"InterTrans: Leveraging Transitive Intermediate Translations to Enhance LLM-based Code Translation" [2024-11] [paper]

"Translating C To Rust: Lessons from a User Study" [2024-11] [paper]

"A Transformer-based Approach for Source Code Summarization" [2020-05] [ACL 2020] [paper]

"Code Summarization with Structure-induced Transformer" [2020-12] [ACL 2021 Findings] [paper]

"Code Structure Guided Transformer for Source Code Summarization" [2021-04] [ACM TSEM] [paper]

"M2TS: Multi-Scale Multi-Modal Approach Based on Transformer for Source Code Summarization" [2022-03] [ICPC 2022] [paper]

"AST-trans: code summarization with efficient tree-structured attention" [2022-05] [ICSE 2022] [paper]

"CoSS: Leveraging Statement Semantics for Code Summarization" [2023-03] [IEEE TSE] [paper]

"Automatic Code Summarization via ChatGPT: How Far Are We?" [2023-05] [paper]

"Semantic Similarity Loss for Neural Source Code Summarization" [2023-08] [paper]

"Distilled GPT for Source Code Summarization" [2023-08] [ASE] [paper]

"CSA-Trans: Code Structure Aware Transformer for AST" [2024-04] [paper]

"Analyzing the Performance of Large Language Models on Code Summarization" [2024-04] [paper]

"Enhancing Trust in LLM-Generated Code Summaries with Calibrated Confidence Scores" [2024-04] [paper]

"DocuMint: Docstring Generation for Python using Small Language Models" [2024-05] [paper] [repo]

"Natural Is The Best: Model-Agnostic Code Simplification for Pre-trained Large Language Models" [2024-05] [paper]

"Large Language Models for Code Summarization" [2024-05] [paper]

"Exploring the Efficacy of Large Language Models (GPT-4) in Binary Reverse Engineering" [2024-06] [paper]

"Identifying Inaccurate Descriptions in LLM-generated Code Comments via Test Execution" [2024-06] [paper]

"MALSIGHT: Exploring Malicious Source Code and Benign Pseudocode for Iterative Binary Malware Summarization" [2024-06] [paper]

"ESALE: Enhancing Code-Summary Alignment Learning for Source Code Summarization" [2024-07] [paper]

"Source Code Summarization in the Era of Large Language Models" [2024-07] [paper]

"Natural Language Outlines for Code: Literate Programming in the LLM Era" [2024-08] [paper]

"Context-aware Code Summary Generation" [2024-08] [paper]

"AUTOGENICS: Automated Generation of Context-Aware Inline Comments for Code Snippets on Programming Q&A Sites Using LLM" [2024-08] [paper]

"LLMs as Evaluators: A Novel Approach to Evaluate Bug Report Summarization" [2024-09] [paper]

"Evaluating the Quality of Code Comments Generated by Large Language Models for Novice Programmers" [2024-09] [paper]

"Generating Equivalent Representations of Code By A Self-Reflection Approach" [2024-10] [paper]

"A review of automatic source code summarization" [2024-10] [Empirical Software Engineering] [paper]

"DeepDebug: Fixing Python Bugs Using Stack Traces, Backtranslation, and Code Skeletons" [2021-05] [paper]

"Break-It-Fix-It: Unsupervised Learning for Program Repair" [2021-06] [ICML 2021] [paper]

"TFix: Learning to Fix Coding Errors with a Text-to-Text Transformer" [2021-07] [ICML 2021] [paper]

"Automated Repair of Programs from Large Language Models" [2022-05] [ICSE 2023] [paper]

"Less Training, More Repairing Please: Revisiting Automated Program Repair via Zero-shot Learning" [2022-07] [ESEC/FSE 2022] [paper]

"Repair Is Nearly Generation: Multilingual Program Repair with LLMs" [2022-08] [AAAI 2023] [paper]

"Practical Program Repair in the Era of Large Pre-trained Language Models" [2022-10] [paper]

"VulRepair: a T5-based automated software vulnerability repair" [2022-11] [ESEC/FSE 2022] [paper]

"Conversational Automated Program Repair" [2023-01] [paper]

"Impact of Code Language Models on Automated Program Repair" [2023-02] [ICSE 2023] [paper]

"InferFix: End-to-End Program Repair with LLMs" [2023-03] [ESEC/FSE 2023] [paper]

"Enhancing Automated Program Repair through Fine-tuning and Prompt Engineering" [2023-04] [paper]

"A study on Prompt Design, Advantages and Limitations of ChatGPT for Deep Learning Program Repair" [2023-04] [paper]

"Domain Knowledge Matters: Improving Prompts with Fix Templates for Repairing Python Type Errors" [2023-06] [ICSE 2024] [paper]

"RepairLLaMA: Efficient Representations and Fine-Tuned Adapters for Program Repair" [2023-12] [paper]

"The Fact Selection Problem in LLM-Based Program Repair" [2024-04] [paper]

"Aligning LLMs for FL-free Program Repair" [2024-04] [paper]

"A Deep Dive into Large Language Models for Automated Bug Localization and Repair" [2024-04] [paper]

"Multi-Objective Fine-Tuning for Enhanced Program Repair with LLMs" [2024-04] [paper]

"How Far Can We Go with Practical Function-Level Program Repair?" [2024-04] [paper]

"Revisiting Unnaturalness for Automated Program Repair in the Era of Large Language Models" [2024-04] [paper]

"A Unified Debugging Approach via LLM-Based Multi-Agent Synergy" [2024-04] [paper]

"A Systematic Literature Review on Large Language Models for Automated Program Repair" [2024-05] [paper]

"NAVRepair: Node-type Aware C/C++ Code Vulnerability Repair" [2024-05] [paper]

"Automated Program Repair: Emerging trends pose and expose problems for benchmarks" [2024-05] [paper]

"Automated Repair of AI Code with Large Language Models and Formal Verification" [2024-05] [paper]

"A Case Study of LLM for Automated Vulnerability Repair: Assessing Impact of Reasoning and Patch Validation Feedback" [2024-05] [paper]

"CREF: An LLM-based Conversational Software Repair Framework for Programming Tutors" [2024-06] [paper]

"Towards Practical and Useful Automated Program Repair for Debugging" [2024-07] [paper]

"ThinkRepair: Self-Directed Automated Program Repair" [2024-07] [paper]

"MergeRepair: An Exploratory Study on Merging Task-Specific Adapters in Code LLMs for Automated Program Repair" [2024-08] [paper]

"RePair: Automated Program Repair with Process-based Feedback" [2024-08] [ACL 2024 Findings] [paper]

"Enhancing LLM-Based Automated Program Repair with Design Rationales" [2024-08] [paper]

"Automated Software Vulnerability Patching using Large Language Models" [2024-08] [paper]

"Enhancing Source Code Security with LLMs: Demystifying The Challenges and Generating Reliable Repairs" [2024-09] [paper]

"MarsCode Agent: AI-native Automated Bug Fixing" [2024-09] [paper]

"Co-Learning: Code Learning for Multi-Agent Reinforcement Collaborative Framework with Conversational Natural Language Interfaces" [2024-09] [paper]

"Debugging with Open-Source Large Language Models: An Evaluation" [2024-09] [paper]

"VulnLLMEval: A Framework for Evaluating Large Language Models in Software Vulnerability Detection and Patching" [2024-09] [paper]

"ContractTinker: LLM-Empowered Vulnerability Repair for Real-World Smart Contracts" [2024-09] [paper]

"Can GPT-O1 Kill All Bugs? An Evaluation of GPT-Family LLMs on QuixBugs" [2024-09] [paper]

"Exploring and Lifting the Robustness of LLM-powered Automated Program Repair with Metamorphic Testing" [2024-10] [paper]

"LecPrompt: A Prompt-based Approach for Logical Error Correction with CodeBERT" [2024-10] [paper]

"Semantic-guided Search for Efficient Program Repair with Large Language Models" [2024-10] [paper]

"A Comprehensive Survey of AI-Driven Advancements and Techniques in Automated Program Repair and Code Generation" [2024-11] [paper]

"Self-Supervised Contrastive Learning for Code Retrieval and Summarization via Semantic-Preserving Transformations" [2020-09] [SIGIR 2021] [paper]

"REINFOREST: Reinforcing Semantic Code Similarity for Cross-Lingual Code Search Models" [2023-05] [paper]

"Rewriting the Code: A Simple Method for Large Language Model Augmented Code Search" [2024-01] [ACL 2024] [paper]

"Revisiting Code Similarity Evaluation with Abstract Syntax Tree Edit Distance" [2024-04] [ACL 2024 short] [paper]

"Is Next Token Prediction Sufficient for GPT? Exploration on Code Logic Comprehension" [2024-04] [paper]

"Refining Joint Text and Source Code Embeddings for Retrieval Task with Parameter-Efficient Fine-Tuning" [2024-05] [paper]

"Typhon: Automatic Recommendation of Relevant Code Cells in Jupyter Notebooks" [2024-05] [paper]

"Toward Exploring the Code Understanding Capabilities of Pre-trained Code Generation Models" [2024-06] [paper]

"Aligning Programming Language and Natural Language: Exploring Design Choices in Multi-Modal Transformer-Based Embedding for Bug Localization" [2024-06] [paper]

"Assessing the Code Clone Detection Capability of Large Language Models" [2024-07] [paper]

"CodeCSE: A Simple Multilingual Model for Code and Comment Sentence Embeddings" [2024-07] [paper]

"Large Language Models for cross-language code clone detection" [2024-08] [paper]

"Coding-PTMs: How to Find Optimal Code Pre-trained Models for Code Embedding in Vulnerability Detection?" [2024-08] [paper]

"You Augment Me: Exploring ChatGPT-based Data Augmentation for Semantic Code Search" [2024-08] [paper]

"Improving Source Code Similarity Detection Through GraphCodeBERT and Integration of Additional Features" [2024-08] [paper]

"LLM Agents Improve Semantic Code Search" [2024-08] [paper]

"zsLLMCode: An Effective Approach for Functional Code Embedding via LLM with Zero-Shot Learning" [2024-09] [paper]

"Exploring Demonstration Retrievers in RAG for Coding Tasks: Yeas and Nays!" [2024-10] [paper]

"Instructive Code Retriever: Learn from Large Language Model's Feedback for Code Intelligence Tasks" [2024-10] [paper]

"Binary Code Similarity Detection via Graph Contrastive Learning on Intermediate Representations" [2024-10] [paper]

"Are Decoder-Only Large Language Models the Silver Bullet for Code Search?" [2024-10] [paper]

"CodeXEmbed: A Generalist Embedding Model Family for Multiligual and Multi-task Code Retrieval" [2024-11] [paper]

"CodeSAM: Source Code Representation Learning by Infusing Self-Attention with Multi-Code-View Graphs" [2024-11] [paper]

"EnStack: An Ensemble Stacking Framework of Large Language Models for Enhanced Vulnerability Detection in Source Code" [2024-11] [paper]

"Isotropy Matters: Soft-ZCA Whitening of Embeddings for Semantic Code Search" [2024-11] [paper]

"An Empirical Study on the Code Refactoring Capability of Large Language Models" [2024-11] [paper]

"Automated Update of Android Deprecated API Usages with Large Language Models" [2024-11] [paper]

"An Empirical Study on the Potential of LLMs in Automated Software Refactoring" [2024-11] [paper]

"CODECLEANER: Elevating Standards with A Robust Data Contamination Mitigation Toolkit" [2024-11] [paper]

"Instruct or Interact? Exploring and Eliciting LLMs' Capability in Code Snippet Adaptation Through Prompt Engineering" [2024-11] [paper]

"Learning type annotation: is big data enough?" [2021-08] [ESEC/FSE 2021] [paper]

"Do Machine Learning Models Produce TypeScript Types That Type Check?" [2023-02] [ECOOP 2023] [paper]

"TypeT5: Seq2seq Type Inference using Static Analysis" [2023-03] [ICLR 2023] [paper]

"Type Prediction With Program Decomposition and Fill-in-the-Type Training" [2023-05] [paper]

"Generative Type Inference for Python" [2023-07] [ASE 2023] [paper]

"Activation Steering for Robust Type Prediction in CodeLLMs" [2024-04] [paper]

"An Empirical Study of Large Language Models for Type and Call Graph Analysis" [2024-10] [paper]

"Repository-Level Prompt Generation for Large Language Models of Code" [2022-06] [ICML 2023] [paper]

"CoCoMIC: Code Completion By Jointly Modeling In-file and Cross-file Context" [2022-12] [paper]

"RepoCoder: Repository-Level Code Completion Through Iterative Retrieval and Generation" [2023-03] [EMNLP 2023] [paper]

"Coeditor: Leveraging Repo-level Diffs for Code Auto-editing" [2023-05] [ICLR 2024 Spotlight] [paper]

"RepoBench: Benchmarking Repository-Level Code Auto-Completion Systems" [2023-06] [ICLR 2024] [paper]

"Guiding Language Models of Code with Global Context using Monitors" [2023-06] [paper]

"RepoFusion: Training Code Models to Understand Your Repository" [2023-06] [paper]

"CodePlan: Repository-level Coding using LLMs and Planning" [2023-09] [paper]

"SWE-bench: Can Language Models Resolve Real-World GitHub Issues?" [2023-10] [ICLR 2024] [paper]

"CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-File Code Completion" [2023-10] [NeurIPS 2023] [paper]

"A^3-CodGen: A Repository-Level Code Generation Framework for Code Reuse with Local-Aware, Global-Aware, and Third-Party-Library-Aware" [2023-12] [paper]

"Teaching Code LLMs to Use Autocompletion Tools in Repository-Level Code Generation" [2024-01] [paper]

"RepoHyper: Better Context Retrieval Is All You Need for Repository-Level Code Completion" [2024-03] [paper]

"Repoformer: Selective Retrieval for Repository-Level Code Completion" [2024-03] [ICML 2024] [paper]

"CodeS: Natural Language to Code Repository via Multi-Layer Sketch" [2024-03] [paper]

"Class-Level Code Generation from Natural Language Using Iterative, Tool-Enhanced Reasoning over Repository" [2024-04] [paper]

"Contextual API Completion for Unseen Repositories Using LLMs" [2024-05] [paper]

"Dataflow-Guided Retrieval Augmentation for Repository-Level Code Completion" [2024-05][ACL 2024] [paper]

"How to Understand Whole Software Repository?" [2024-06] [paper]

"R2C2-Coder: Enhancing and Benchmarking Real-world Repository-level Code Completion Abilities of Code Large Language Models" [2024-06] [paper]

"CodeR: Issue Resolving with Multi-Agent and Task Graphs" [2024-06] [paper]

"Enhancing Repository-Level Code Generation with Integrated Contextual Information" [2024-06] [paper]

"On The Importance of Reasoning for Context Retrieval in Repository-Level Code Editing" [2024-06] [paper]

"GraphCoder: Enhancing Repository-Level Code Completion via Code Context Graph-based Retrieval and Language Model" [2024-06] [ASE 2024] [paper]

"STALL+: Boosting LLM-based Repository-level Code Completion with Static Analysis" [2024-06] [paper]

"Hierarchical Context Pruning: Optimizing Real-World Code Completion with Repository-Level Pretrained Code LLMs" [2024-06] [paper]

"Agentless: Demystifying LLM-based Software Engineering Agents" [2024-07] [paper]

"RLCoder: Reinforcement Learning for Repository-Level Code Completion" [2024-07] [paper]

"CoEdPilot: Recommending Code Edits with Learned Prior Edit Relevance, Project-wise Awareness, and Interactive Nature" [2024-08] [paper] [repo]

"RAMBO: Enhancing RAG-based Repository-Level Method Body Completion" [2024-09] [paper]

"Exploring the Potential of Conversational Test Suite Based Program Repair on SWE-bench" [2024-10] [paper]

"RepoGraph: Enhancing AI Software Engineering with Repository-level Code Graph" [2024-10] [paper]

"See-Saw Generative Mechanism for Scalable Recursive Code Generation with Generative AI" [2024-11] [paper]

"Seeking the user interface", 2014-09, ASE 2014, [paper]

"pix2code: Generating Code from a Graphical User Interface Screenshot", 2017-05, EICS 2018, [paper]

"Machine Learning-Based Prototyping of Graphical User Interfaces for Mobile Apps", 2018-02, TSE 2020, [paper]

"Automatic HTML Code Generation from Mock-Up Images Using Machine Learning Techniques", 2019-04, EBBT 2019, [paper]

"Sketch2code: Generating a website from a paper mockup", 2019-05, [paper]

"HTLM: Hyper-Text Pre-Training and Prompting of Language Models", 2021-07, ICLR 2022, [paper]

"Learning UI-to-Code Reverse Generator Using Visual Critic Without Rendering", 2023-05, [paper]

"Design2Code: How Far Are We From Automating Front-End Engineering?" [2024-03] [paper]

"Unlocking the conversion of Web Screenshots into HTML Code with the WebSight Dataset" [2024-03] [paper]

"VISION2UI: A Real-World Dataset with Layout for Code Generation from UI Designs" [2024-04] [paper]

"LogoMotion: Visually Grounded Code Generation for Content-Aware Animation" [2024-05] [paper]

"PosterLLaVa: Constructing a Unified Multi-modal Layout Generator with LLM" [2024-06] [paper]

"UICoder: Finetuning Large Language Models to Generate User Interface Code through Automated Feedback" [2024-06] [paper]

"On AI-Inspired UI-Design" [2024-06] [paper]

"Identifying User Goals from UI Trajectories" [2024-06] [paper]

"Automatically Generating UI Code from Screenshot: A Divide-and-Conquer-Based Approach" [2024-06] [paper]

"Web2Code: A Large-scale Webpage-to-Code Dataset and Evaluation Framework for Multimodal LLMs" [2024-06] [paper]

"Vision-driven Automated Mobile GUI Testing via Multimodal Large Language Model" [2024-07] [paper]

"AUITestAgent: Automatic Requirements Oriented GUI Function Testing" [2024-07] [paper]

"LLM-based Abstraction and Concretization for GUI Test Migration" [2024-09] [paper]

"Enabling Cost-Effective UI Automation Testing with Retrieval-Based LLMs: A Case Study in WeChat" [2024-09] [paper]

"Self-Elicitation of Requirements with Automated GUI Prototyping" [2024-09] [paper]

"Infering Alt-text For UI Icons With Large Language Models During App Development" [2024-09] [paper]