Node.js has become a standard tool for building highly concurrent network services. Why has it become so popular? This article starts from the basic concepts of processes, threads, coroutines, and I/O models, and then gives a comprehensive introduction to Node.js and its concurrency model.

We generally call a running instance of a program a process. It is the basic unit by which the operating system allocates resources and schedules work, and it generally includes the following parts:

Process table: each process occupies one process table entry (also called a process control block). This entry holds important process state such as the program counter, stack pointer, memory allocation, status of open files, and scheduling information, so that after the process has been suspended, the operating system can correctly resume it.

A process has the following characteristics:

Note that if a program is run twice, even if the operating system lets the two runs share code (i.e., only one copy of the code is in memory), this does not change the fact that the two running instances are two different processes.
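The following minimal sketch (assuming a Node.js runtime; `runTwice` is a hypothetical helper, not part of any API) makes this concrete: it launches the same program, the current Node.js binary evaluating a one-line script that prints its own process ID, twice in a row. Both runs load the same executable, yet each reports its own PID because the operating system treats them as two separate processes.

```javascript
const { spawnSync } = require('node:child_process');

// Hypothetical helper: run the same program twice and collect the PID
// that each run reports about itself.
function runTwice() {
  const pids = [];
  for (let i = 0; i < 2; i++) {
    const result = spawnSync(process.execPath, ['-e', 'console.log(process.pid)'], {
      encoding: 'utf8',
    });
    pids.push(Number(result.stdout.trim()));
  }
  return pids;
}

console.log(runTwice()); // two different process IDs
```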

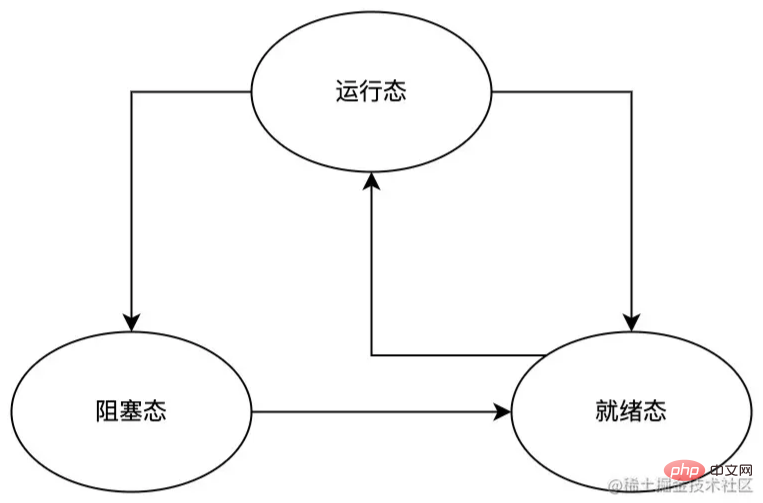

While a process executes, it switches between the following states for various reasons, such as interrupts and CPU scheduling:

From the state transition diagram above, we can see that a running process can switch to either the ready state or the blocked state, but only a process in the ready state can switch directly to the running state. This is because:
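The transition rules above can be sketched as a tiny state machine. This is an illustrative model only: `TRANSITIONS`, `canTransition`, and `Process` are hypothetical names, and a real kernel tracks far more state in the process control block.

```javascript
// Allowed transitions in the three-state process model described above.
const TRANSITIONS = {
  ready: ['running'],            // the scheduler dispatches the process
  running: ['ready', 'blocked'], // descheduled, or waiting for an event such as I/O
  blocked: ['ready'],            // the awaited event has occurred
};

function canTransition(from, to) {
  return (TRANSITIONS[from] ?? []).includes(to);
}

class Process {
  constructor(pid) {
    this.pid = pid;
    this.state = 'ready'; // in this model a new process starts out ready
  }

  moveTo(next) {
    if (!canTransition(this.state, next)) {
      throw new Error(`illegal transition: ${this.state} -> ${next}`);
    }
    this.state = next;
  }
}

const p = new Process(1);
p.moveTo('running');
p.moveTo('blocked');
p.moveTo('ready'); // blocked -> running is not allowed, so go through ready
p.moveTo('running');
```

In this model, calling `moveTo('running')` on a blocked process throws, mirroring the rule that a blocked process must first become ready before the scheduler can run it.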

Sometimes, we need to use threads to solve the following problems:

Regarding threads, we need to know the following points:

Now that we understand the basic characteristics of threads, let’s talk about several common thread types.

Kernel-mode threads are threads directly supported by the operating system. Their main features are as follows:

User-mode threads are threads built entirely in user space. Their main characteristics are as follows:

A lightweight process (LWP) is a user thread built on and supported by the kernel. Its main features are as follows:

User space can use kernel threads only through lightweight processes (LWPs), so an LWP can be regarded as a bridge between user-mode threads and kernel threads. Therefore, lightweight processes can exist only on systems that support kernel threads.

Most operations on lightweight processes (LWPs) require a system call initiated from user space, and such system calls are relatively expensive (they require switching between user mode and kernel mode);

Each lightweight process (LWP) must be associated with a specific kernel thread; therefore:

LWPs can access all of the address space and system resources shared within their own process.
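Node.js can illustrate the shared-address-space point with its `worker_threads` module, where each `Worker` runs on a separate OS thread inside the same process. The sketch below is an illustration rather than an LWP implementation: workers do not share the ordinary JavaScript heap, so the shared memory here is an explicit `SharedArrayBuffer`, and `runWorkers` is a hypothetical helper. Each thread increments the same counter with `Atomics.add`, and the main thread sees every update.

```javascript
const { Worker } = require('node:worker_threads');

// Hypothetical helper: start `count` worker threads that each add 1 to a
// shared counter `increments` times, then resolve with the final value.
function runWorkers(count, increments) {
  // One 32-bit integer in memory shared by every thread of this process.
  const counter = new Int32Array(new SharedArrayBuffer(4));
  const workerSource = `
    const { workerData } = require('node:worker_threads');
    const shared = new Int32Array(workerData.buffer);
    for (let i = 0; i < workerData.increments; i++) {
      Atomics.add(shared, 0, 1); // atomic update, safe across threads
    }
  `;
  const finished = [];
  for (let i = 0; i < count; i++) {
    const worker = new Worker(workerSource, {
      eval: true,
      workerData: { buffer: counter.buffer, increments },
    });
    finished.push(new Promise((resolve, reject) => {
      worker.on('error', reject);
      worker.on('exit', resolve);
    }));
  }
  // Once every thread has exited, read what they all wrote.
  return Promise.all(finished).then(() => Atomics.load(counter, 0));
}

runWorkers(4, 10000).then((total) => console.log(total)); // 40000
```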

Above, we briefly introduced the common thread types (kernel-mode threads, user-mode threads, and lightweight processes). Each has its own scope of application, and in practice they can be combined as needed, for example in the common one-to-one, many-to-one, and many-to-many models. Due to space limitations, this article will not go into these models; interested readers can study them on their own.
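As an illustration of the many-to-one idea, here is a minimal sketch in which several "user-mode threads", written as generator functions, are multiplexed by a scheduler that runs entirely in user space on a single kernel thread (the JavaScript main thread). `task` and `runRoundRobin` are hypothetical names, and the scheduling is cooperative: a task runs until it voluntarily yields, and the kernel never sees these tasks at all.

```javascript
// A "user-mode thread": does one step of work per turn, then yields.
function* task(name, steps, trace) {
  for (let i = 1; i <= steps; i++) {
    trace.push(`${name}${i}`);
    yield; // voluntarily hand the CPU back to the user-space scheduler
  }
}

// User-space round-robin scheduler: all tasks share one kernel thread.
function runRoundRobin(tasks) {
  const queue = [...tasks];
  while (queue.length > 0) {
    const current = queue.shift();
    const { done } = current.next(); // run the task until its next yield
    if (!done) queue.push(current);  // unfinished tasks go to the back
  }
}

const trace = [];
runRoundRobin([task('A', 2, trace), task('B', 3, trace)]);
console.log(trace); // ['A1', 'B1', 'A2', 'B2', 'B3']
```

Because the kernel only sees one thread, a task that never yields would starve all the others, which is a well-known drawback of purely user-mode threading.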

A coroutine, also called a fiber, is a program execution mechanism built on top of threads that lets developers manage execution scheduling, state maintenance, and other behaviors themselves. Its main features are:

In JavaScript, the commonly used async/await is an implementation of coroutines, as the following example shows:

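```javascript
// Synchronous version: getUserById and user.updateName are assumed to be blocking helpers.
function updateUserName(id, name) {
  const user = getUserById(id);
  user.updateName(name);
  return true;
}

// Asynchronous version: the same helpers are assumed to return Promises.
async function updateUserNameAsync(id, name) {
  const user = await getUserById(id);
  await user.updateName(name);
  return true;
}
```

In the example above, the logical execution sequence inside both updateUserName and updateUserNameAsync is: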

First, call getUserById and assign its return value to the variable user; then call the updateName method of user; finally, return true to the caller.

The main difference between the two lies in how execution state is controlled at run time:

For updateUserName, the steps are executed in order, following the logical sequence above.

For updateUserNameAsync, the steps are also executed in that order, but whenever it reaches an await, updateUserNameAsync is suspended and its current state is saved at the suspension point. Only after the operation following await has completed is updateUserNameAsync woken up again; the state saved before suspension is restored, and execution continues with the next step.

From this analysis we can venture a guess: coroutines are not meant to solve the concurrency problems that processes and threads address, but the problems that arise when handling asynchronous tasks (such as file operations and network requests). Before async/await, asynchronous tasks could only be handled with callback functions, which easily led to "callback hell" and messy code that is hard to maintain. Coroutines let us write asynchronous code in a synchronous style.
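To observe the suspension and resumption described above, the following sketch supplies hypothetical stand-ins for `getUserById` and `updateName` (a timer simulates a slow lookup; neither function comes from a real library) and records the order in which things happen. The caller keeps running while `updateUserNameAsync` is suspended at its first `await`, and the function later resumes with its local state intact.

```javascript
// Hypothetical async data layer standing in for getUserById / updateName.
function getUserById(id) {
  return new Promise((resolve) => {
    setTimeout(() => resolve({
      id,
      name: 'old',
      updateName(name) {
        this.name = name;
        return Promise.resolve();
      },
    }), 10); // simulate a slow lookup
  });
}

async function updateUserNameAsync(id, name, trace) {
  trace.push('start');
  const user = await getUserById(id); // suspended here; local state is kept
  trace.push(`resumed with user ${user.id}`);
  await user.updateName(name);
  return true;
}

const trace = [];
const pending = updateUserNameAsync(1, 'new', trace);
trace.push('caller continues'); // runs while updateUserNameAsync is suspended
pending.then((ok) => console.log(ok, trace));
```

The recorded trace shows `'start'`, then `'caller continues'`, and only then `'resumed with user 1'`.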

Keep in mind that the core capability of a coroutine is to suspend a piece of code, preserve its state at the suspension point, resume it from that point at some later time, and continue executing the code that follows.
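JavaScript generators expose this capability directly, which makes them a convenient way to see it in isolation. In the minimal sketch below (`runningTotal` is a hypothetical example), `yield` suspends the function and hands a value out, `next(value)` resumes it exactly where it stopped, and the local variable `total` survives every suspension.

```javascript
function* runningTotal() {
  let total = 0; // local state that survives every suspension
  while (true) {
    const n = yield total; // suspend here; resume with the value passed to next()
    total += n;
  }
}

const acc = runningTotal();
console.log(acc.next().value);  // 0 (runs up to the first yield)
console.log(acc.next(5).value); // 5
console.log(acc.next(3).value); // 8
```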

A complete I/O operation needs to go through the following stages:

First, the user thread sends an I/O operation request to the kernel through a system call; then the kernel processes the I/O request (in a preparation stage and an actual execution stage) and returns the result to the user thread.

We can roughly divide I/O operations into four types: blocking I/O, non-blocking I/O, synchronous I/O, and asynchronous I/O. Before discussing these types, let's first get familiar with the following two pairs of concepts (assume here that service A calls service B):

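Blocking / non-blocking:

If A, after calling B, waits for B to return a result before continuing, the call is a blocking call; if A does not wait and continues executing, it is a non-blocking call.

Synchronous / asynchronous:

If B returns the result to A only after finishing its work, B is synchronous; if B returns immediately after receiving A's call and, once its work is completed, notifies A of the result through a callback, B is asynchronous.

Many people confuse blocking/non-blocking with synchronous/asynchronous, so special attention is needed:

Blocking/non-blocking describes the caller of a service; synchronous/asynchronous describes the callee.

With these two pairs of concepts in mind, let's look at the specific I/O models.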
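A small sketch may help keep the two pairs of concepts apart. `serviceA`, `serviceBSync`, and `serviceBAsync` are hypothetical names: the synchronous version of B hands back its result only when its work is done, so A has to wait for it; the asynchronous version returns at once and reports the result later through a callback, so A can keep working instead of waiting (a non-blocking call on A's side).

```javascript
// Hypothetical service B, synchronous flavor: the result comes back only
// after B has finished its work.
function serviceBSync(x) {
  return x * 2;
}

// Hypothetical service B, asynchronous flavor: returns immediately and
// notifies the caller through a callback once the work is done.
function serviceBAsync(x, callback) {
  setTimeout(() => callback(x * 2), 0);
}

// Hypothetical service A, recording the order of events in `trace`.
function serviceA(trace) {
  // A must wait here: the synchronous callee gives A nothing until it is done.
  trace.push(`sync result ${serviceBSync(1)}`);
  return new Promise((resolve) => {
    serviceBAsync(2, (result) => {
      trace.push(`async result ${result}`);
      resolve(trace);
    });
    // Non-blocking call: A carries on without waiting for B's result.
    trace.push('A keeps working');
  });
}

serviceA([]).then((trace) => console.log(trace));
```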

Blocking I/O is defined as follows: after the user process (thread) initiates an I/O system call, it is blocked immediately until the entire I/O operation has been processed and the result returned to it. Only then is the user process (thread) released from the blocked state and able to continue with subsequent operations.

Blocking I/O has the following characteristics:

While waiting for the I/O operation, the user process (thread) cannot perform any other operation. Because a single I/O request can block the user process (thread), responding to I/O requests promptly requires allocating one process (thread) per request, which consumes a great deal of resources; and for long-lived connections, whose process (thread) resources cannot be released for a long time, new incoming requests will run into a serious performance bottleneck.

Non-blocking I/O is defined as follows:

After the user process (thread) initiates an I/O system call, if the I/O operation is not ready, the call returns an error immediately; instead of waiting, the user process (thread) polls to check whether the I/O operation is ready. Once the I/O operation is ready, the actual I/O operation blocks the user process (thread) until the result is returned to it.

Non-blocking I/O has the following characteristics:

Because the readiness of the I/O operation must be polled continually (usually in a while loop), this model occupies the CPU and consumes CPU resources. Before the I/O operation is ready, the user process (thread) is not blocked; once it is ready, the subsequent actual I/O operation does block the user process (thread).

As for synchronous and asynchronous I/O: after the user process (thread) initiates an I/O system call, if the call causes the user process (thread) to be blocked, the I/O is synchronous I/O; otherwise it is asynchronous I/O.
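Returning to the polling loop of non-blocking I/O, it can be simulated in a few lines. This is a sketch under loud assumptions: there is no real device or system call here, `createFakeDevice` is a hypothetical stand-in whose `tryRead()` returns `null` (playing the role of a "not ready" error) until it has been polled a given number of times, and `pollingRead` is the busy-waiting caller.

```javascript
// Hypothetical non-blocking "device": tryRead() returns null (standing in for
// a "not ready" error) until it has been polled `readyAfterPolls` times.
function createFakeDevice(readyAfterPolls, payload) {
  let polls = 0;
  return {
    tryRead() {
      polls += 1;
      return polls >= readyAfterPolls ? payload : null;
    },
  };
}

// Busy-waiting caller: keeps asking until the data is ready, counting attempts.
function pollingRead(device) {
  let attempts = 0;
  let data = null;
  while (data === null) {
    attempts += 1;
    data = device.tryRead(); // returns at once, ready or not
  }
  return { data, attempts };
}

console.log(pollingRead(createFakeDevice(5, 'ok'))); // { data: 'ok', attempts: 5 }
```

Every extra attempt is CPU time spent doing nothing useful, which is exactly the cost of this model.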

Whether an I/O operation is synchronous or asynchronous is determined by the communication mechanism between the user process (thread) and the I/O operation.

In the synchronous case, the user process (thread) and the I/O interact through a kernel buffer: the kernel writes the result of the I/O operation into the buffer and then copies the data from the buffer to the user process (thread). This blocks the user process (thread) until the I/O operation completes. In the asynchronous case, the interaction between the user process (thread) and the I/O is handled directly by the kernel: the kernel copies the result of the I/O operation directly to the user process (thread), without blocking it.

Node.js uses a single-threaded, event-driven asynchronous I/O model. Personally, I think the reasons for choosing this model are:

The workloads Node.js targets are mostly I/O-intensive; and managing multi-threaded resources reasonably and efficiently while ensuring high concurrency is more complicated than managing a single thread.

In short, for simplicity and efficiency, Node.js adopts a single-threaded, event-driven asynchronous I/O model, implemented by the EventLoop on the main thread together with auxiliary Worker threads:
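This division of labor between the main thread and the helper threads can be observed from JavaScript. In the sketch below (`demoEventLoopOrder` is a hypothetical helper, and the temporary directory prefix is an arbitrary choice), `fs.readFile` hands the read off to libuv, whose thread pool performs file system operations, while the main thread carries on; the callback runs only later, when the EventLoop picks up the completed result.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Hypothetical helper: record whether synchronous code or the readFile
// callback runs first.
function demoEventLoopOrder() {
  const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'event-loop-demo-'));
  const file = path.join(dir, 'data.txt');
  fs.writeFileSync(file, 'hello');
  const order = [];
  return new Promise((resolve, reject) => {
    // The read is handed to libuv; this call returns immediately.
    fs.readFile(file, 'utf8', (err, data) => {
      fs.rmSync(dir, { recursive: true, force: true }); // clean up
      if (err) return reject(err);
      order.push(`callback: ${data}`);
      resolve(order);
    });
    // The main thread keeps running JavaScript in the meantime.
    order.push('sync code after readFile');
  });
}

demoEventLoopOrder().then((order) => console.log(order));
```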

Note that Node.js is not suited to CPU-intensive tasks (those requiring heavy computation). This is because the EventLoop and JavaScript code (code other than asynchronous event tasks) run on the same thread, the main thread, so if either of them runs for too long, the main thread is blocked. If an application contains many long-running tasks, the server's throughput drops, and the server may even stop responding.
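The effect is easy to reproduce. In this minimal sketch (`measureTimerDelay` is a hypothetical helper), a timer is scheduled with a 0 ms delay and the main thread then spins in a CPU-bound loop; the timer callback cannot run until the loop finishes, so the measured delay is at least as long as the busy period.

```javascript
// Hypothetical helper: schedule a 0 ms timer, keep the main thread busy for
// `busyMs` milliseconds, and report how late the timer actually fired.
function measureTimerDelay(busyMs) {
  return new Promise((resolve) => {
    const start = Date.now();
    setTimeout(() => resolve(Date.now() - start), 0);
    // CPU-bound work on the main thread: the EventLoop cannot run any
    // callbacks until this loop returns.
    while (Date.now() - start < busyMs) {
      // spin
    }
  });
}

measureTimerDelay(200).then((delay) => console.log(`timer fired after ${delay} ms`));
```

In practice, CPU-heavy work is usually moved off the main thread (for example into `worker_threads`) so that the EventLoop stays responsive.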

Node.js is a technology that front-end developers have to deal with now and will keep dealing with in the future, yet most front-end developers have only a superficial understanding of it. To help readers better understand the Node.js concurrency model, this article first introduced processes, threads, and coroutines, then the different I/O models, and finally gave a brief introduction to the concurrency model of Node.js. Although not much space was devoted to the Node.js concurrency model itself, the author believes it can never be separated from these fundamental principles: mastering the basics first and then studying the design and implementation of Node.js in depth will yield twice the result with half the effort.

Finally, if you find any mistakes in this article, please point them out. Happy coding, every day!