Node was originally created to build high-performance web servers. As a server-side JavaScript runtime, it is event-driven, uses asynchronous I/O, and runs JavaScript on a single thread. Its asynchronous programming model, built on the event loop, lets Node handle high concurrency and greatly improves server performance. At the same time, because it keeps JavaScript's single-threaded nature, Node does not have to deal with multi-threading problems such as state synchronization and deadlock, and it incurs no thread context-switching overhead. These characteristics give Node an inherent advantage in performance and concurrency, and many fast, scalable network application platforms can be built on it.

This article will go deep into the underlying implementation and execution mechanism of Node's asynchronous and event loop. I hope it will be helpful to you.

Why does Node use asynchronous as its core programming model?

As mentioned before, Node was originally created to build high-performance web servers. Suppose a business scenario involves several groups of unrelated tasks. There are two mainstream ways to complete them:

Single-threaded serial execution.

Parallel execution with multiple threads.

Single-threaded serial execution is a synchronous programming model. It matches the way programmers think sequentially and makes code easier to write, but because it performs I/O synchronously, it can handle only one I/O operation at a time. A single slow request makes the server slow to respond to everyone else, so this model is unsuitable for high-concurrency scenarios. Moreover, because I/O blocks, the CPU sits waiting for each I/O operation to finish and cannot do anything else, so its processing power is not fully used, which ultimately leads to low efficiency.

The multi-threaded programming model, on the other hand, can effectively improve CPU utilization on multi-core CPUs, but it gives developers headaches with problems such as state synchronization and deadlock.

Single-threaded serial execution and multi-threaded parallel execution each have their advantages, but the former falls short on performance and the latter on ease of development.

Response time tells the same story. If a client requests two resources at the same time, the synchronous approach takes the sum of the two response times, whereas the asynchronous approach takes only the longer of the two, a clear performance advantage. As applications grow more complex, this scenario becomes responding to n requests at once, and the advantage of asynchrony over synchrony becomes even more pronounced.
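The latency arithmetic can be illustrated with a small sketch that simulates two resources with setTimeout(). The helper fetchResource() and the delays of 100 ms and 150 ms are hypothetical stand-ins for real I/O; awaiting the requests one after another models the synchronous, serial approach, while Promise.all() models issuing both asynchronously.

```javascript
// Hypothetical stand-in for an I/O request that takes `ms` milliseconds.
function fetchResource(name, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(name), ms));
}

// Serial: the second request starts only after the first finishes,
// so the total latency is roughly 100 + 150 = 250 ms.
async function sequential() {
  const start = Date.now();
  await fetchResource('a', 100);
  await fetchResource('b', 150);
  return Date.now() - start;
}

// Concurrent: both requests are in flight at once,
// so the total latency is roughly max(100, 150) = 150 ms.
async function concurrent() {
  const start = Date.now();
  await Promise.all([fetchResource('a', 100), fetchResource('b', 150)]);
  return Date.now() - start;
}

sequential().then((seqMs) =>
  concurrent().then((concMs) => console.log({ seqMs, concMs })));
```

Exact timings vary slightly from run to run, but the serial version should take about 250 ms and the concurrent one about 150 ms.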

To sum up, Node's answer is: use a single thread to avoid multi-threading problems such as deadlock and state synchronization, and use asynchronous I/O to keep that single thread from blocking so the CPU is better utilized. This is why Node adopts asynchrony as its core programming model.

In addition, to make up for a single thread's inability to use multiple CPU cores, Node provides child processes (the child_process module), similar in spirit to Web Workers in the browser, so that the CPU can be used efficiently through worker processes.
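As a hedged sketch of the worker-process idea, the snippet below uses the real child_process.execFile() API to run a CPU-bound sum in several separate Node processes, roughly one per core. The helper sumInWorkers() and the RANGE_START/RANGE_END environment variables are hypothetical names chosen for this example, not part of any Node API.

```javascript
const { execFile } = require('node:child_process');
const os = require('node:os');

// Source each child process runs: sum the integers in [RANGE_START, RANGE_END).
const workerSource = `
  const start = Number(process.env.RANGE_START);
  const end = Number(process.env.RANGE_END);
  let sum = 0;
  for (let i = start; i < end; i++) sum += i;
  process.stdout.write(String(sum));
`;

// Split 0..n-1 into `workers` chunks and sum each chunk in its own process.
function sumInWorkers(n, workers = Math.max(1, os.cpus().length)) {
  const chunk = Math.ceil(n / workers);
  const jobs = [];
  for (let start = 0; start < n; start += chunk) {
    const end = Math.min(n, start + chunk);
    jobs.push(new Promise((resolve, reject) => {
      const env = { ...process.env, RANGE_START: String(start), RANGE_END: String(end) };
      execFile(process.execPath, ['-e', workerSource], { env }, (err, stdout) =>
        (err ? reject(err) : resolve(Number(stdout))));
    }));
  }
  // The parent's event loop stays free while the children compute.
  return Promise.all(jobs).then((parts) => parts.reduce((a, b) => a + b, 0));
}

sumInWorkers(1_000_000).then((total) => console.log(total)); // 499999500000
```

Starting a process is far more expensive than the arithmetic here, so this only pays off for genuinely heavy work; the point is that each child runs on its own core.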

Having covered why Node uses asynchrony, how is asynchrony implemented?

There are two kinds of asynchronous operations we commonly use: I/O-related operations such as file I/O and network I/O, and operations unrelated to I/O such as setTimeout and setInterval. The asynchrony discussed here refers to I/O-related operations, that is, asynchronous I/O.

Asynchronous I/O aims to keep I/O calls from blocking the execution of subsequent code, so that the time otherwise spent waiting for I/O can be given to other work. Achieving this requires non-blocking I/O.

Blocking I/O means that after the CPU initiates an I/O call, it blocks until the I/O completes. With that in mind, non-blocking I/O is easy to understand: the CPU returns immediately after initiating the I/O call instead of blocking and waiting, and can handle other work before the I/O completes. Non-blocking I/O therefore performs better than blocking I/O.
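Node exposes both styles for file reads, so the difference can be sketched with the real fs.readFileSync() and fs.readFile() APIs. The demo file name blocking-demo.txt in the system temp directory is an arbitrary choice for this example. The recorded order shows that the code after readFile() runs before the read completes.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Create a small file to read (arbitrary demo file in the temp directory).
const file = path.join(os.tmpdir(), 'blocking-demo.txt');
fs.writeFileSync(file, 'hello');

const order = [];

// Blocking: readFileSync() does not return until the data has been read.
const text = fs.readFileSync(file, 'utf8');
order.push(`sync read: ${text}`);

// Non-blocking: readFile() returns at once; the callback runs when the read completes.
fs.readFile(file, 'utf8', (err, data) => {
  if (err) throw err;
  order.push(`async read: ${data}`);
  console.log(order); // order: sync read, after readFile() call, async read
});

// This line runs before the asynchronous read has finished.
order.push('after readFile() call');
```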

So, since non-blocking I/O is used and the CPU can return immediately after initiating the I/O call, how does it know that the I/O is completed? The answer is polling.

To learn the status of an I/O call promptly, the CPU repeatedly calls the I/O operation to check whether it has completed. This technique of repeatedly checking whether an operation has finished is called polling.

Polling makes the CPU perform status checks over and over, which wastes CPU resources. The polling interval is also hard to tune. If it is too long, the completion of the I/O operation is not noticed promptly, which indirectly slows the application's response; if it is too short, the CPU spends more time polling, which lowers the utilization of CPU resources.
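A minimal sketch of polling, assuming a simulated I/O operation whose completion is signalled by a flag; startFakeIo() and pollUntilDone() are hypothetical names. Counting the status checks shows both costs described above: with a short interval most checks are wasted, and with a long interval the completion is noticed late.

```javascript
// Simulated I/O: marks the operation done after `ms` milliseconds (hypothetical stand-in).
function startFakeIo(ms) {
  const op = { done: false, data: null };
  setTimeout(() => { op.done = true; op.data = 'payload'; }, ms);
  return op;
}

// Check op.done every `intervalMs` until it is true, counting the checks.
function pollUntilDone(op, intervalMs) {
  return new Promise((resolve) => {
    let checks = 0;
    const timer = setInterval(() => {
      checks++; // every check before completion is wasted work
      if (op.done) {
        clearInterval(timer);
        resolve({ data: op.data, checks });
      }
    }, intervalMs);
  });
}

// Short interval: many wasted checks. Long interval: completion noticed up to 40 ms late.
pollUntilDone(startFakeIo(50), 5).then((r) => console.log('5 ms interval:', r.checks, 'checks'));
pollUntilDone(startFakeIo(50), 40).then((r) => console.log('40 ms interval:', r.checks, 'checks'));
```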

So although polling satisfies the requirement that non-blocking I/O not block subsequent code, from the application's point of view it is still a form of synchronization: the application must still wait for the I/O to complete and spends much of its time waiting.

The ideal asynchronous I/O is one where the application initiates a non-blocking call and, without polling the status of the I/O, moves straight on to the next task; when the I/O completes, the data is delivered to the application through a signal or a callback.

How to implement this asynchronous I/O? The answer is thread pool.

Although this article keeps saying that Node is single-threaded, "single-threaded" here means that JavaScript code runs on a single thread. Work that has nothing to do with the main business logic, such as I/O operations, can run on other threads. This does not affect or block the main thread; on the contrary, it makes the main thread more efficient and makes asynchronous I/O possible.

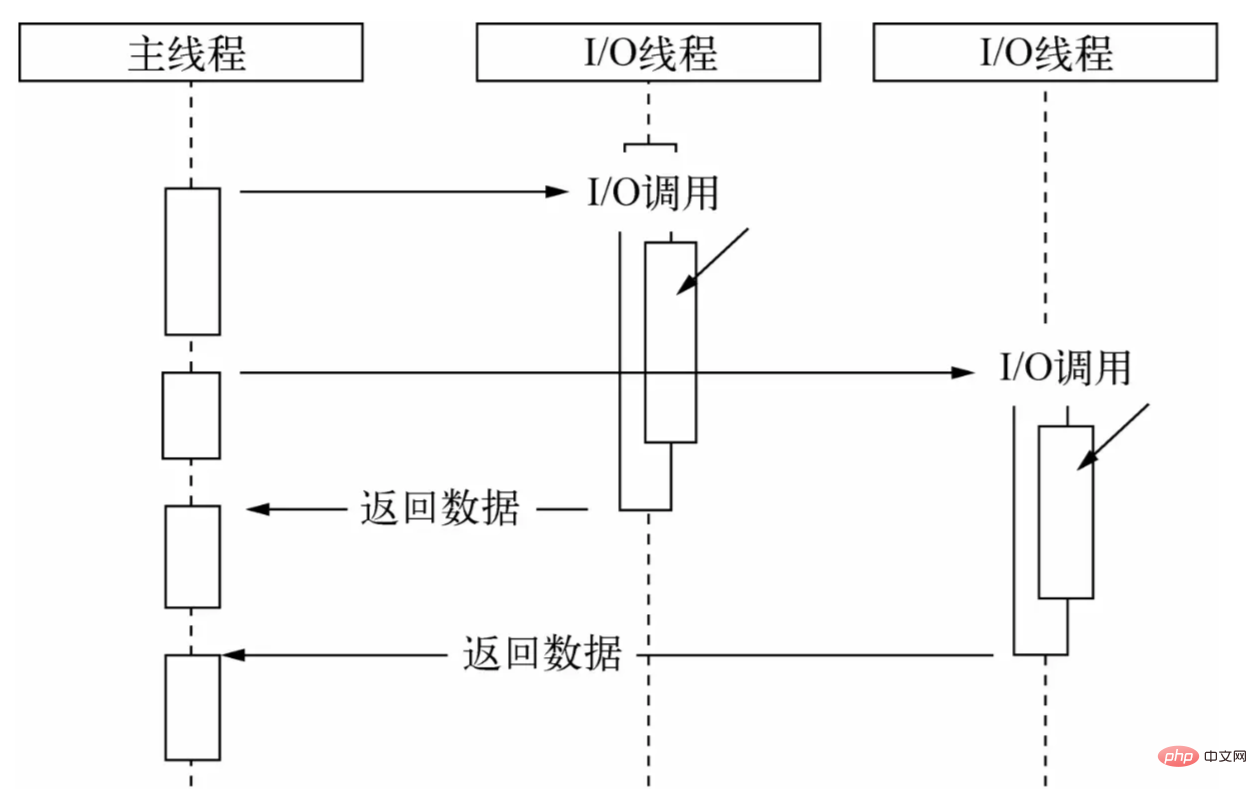

With a thread pool, the main thread only issues I/O calls, while other threads perform blocking I/O, or non-blocking I/O plus polling, to fetch the data. Inter-thread communication then passes the retrieved data back to the main thread, which makes asynchronous I/O easy to implement.

The main thread makes the I/O call; the thread pool performs the I/O operation, obtains the data, and passes it back to the main thread through inter-thread communication, completing the I/O call. The main thread then uses the callback function to expose the data to the user, who uses it to carry out the business logic. This is the complete asynchronous I/O flow in Node. Users do not need to worry about the tedious low-level details; they only call the asynchronous APIs Node provides and pass in a callback that handles the business logic, as shown below:

const fs = require("fs") ;

fs.readFile('example.js', (data) => {

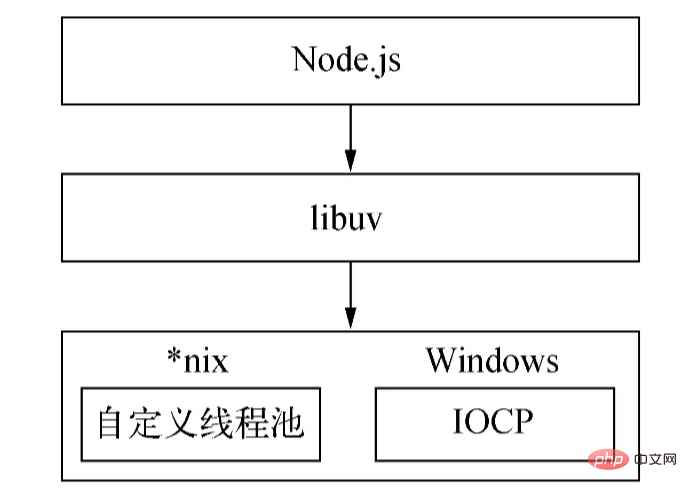

// Process business logic}); The asynchronous underlying implementation mechanism of Nodejs is different on different platforms: under Windows, IOCP is mainly used to send I/O calls to the system kernel and obtain completed I/O operations from the kernel. Equipped with an event loop to complete the asynchronous I/O process; this process is implemented through epoll under Linux; through kqueue under FreeBSD, and through Event ports under Solaris. The thread pool is directly provided by the kernel (IOCP) under Windows, while the *nix series is implemented by libuv itself.

Because the Windows and *nix platforms differ, Node uses libuv as an abstraction layer that handles all platform-compatibility decisions, keeping the upper-level Node code independent of the lower-level custom thread pool and IOCP. At build time, Node checks the platform and selectively compiles the source files in either the unix directory or the win directory into the target program.

That is how Node implements asynchrony.

(The size of the thread pool can be set through the environment variable UV_THREADPOOL_SIZE. The default value is 4, and users can adjust it to suit their workload.)
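As a hedged illustration of work running in the libuv thread pool, the snippet below uses crypto.pbkdf2(), which Node's documentation lists among the APIs that use the thread pool. The iteration count of 200000 is an arbitrary value chosen so that the hash takes a noticeable amount of time. While the hash is computed on a pool thread, the main thread stays free, so the setImmediate() callback runs first. To try a different pool size, set the variable when launching the process, for example UV_THREADPOOL_SIZE=8 node app.js (app.js being a hypothetical file name).

```javascript
const crypto = require('node:crypto');

const events = [];
const start = Date.now();

// pbkdf2() does its CPU-heavy work on a libuv thread-pool thread.
crypto.pbkdf2('secret', 'salt', 200000, 64, 'sha512', (err) => {
  if (err) throw err;
  events.push(`pbkdf2 done after ${Date.now() - start} ms`);
  console.log(events);
});

// The main thread is not blocked, so this callback runs before pbkdf2 finishes.
setImmediate(() => events.push('main thread still responsive'));
```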

That raises the next question: once the main thread receives the data passed back by the thread pool, how and when is the callback function called? The answer is the event loop.

Since Node uses callback functions to process I/O data, it must decide when and how to call them. Real applications usually involve many asynchronous I/O calls of many kinds, and arranging their callbacks sensibly so that they run in an orderly way is a hard problem. Besides asynchronous I/O, there are also non-I/O asynchronous calls such as timers. These APIs are time-sensitive and correspondingly have higher priority. How should callbacks with different priorities be scheduled?

Therefore, there must be a scheduling mechanism to coordinate asynchronous tasks of different priorities and types to ensure that these tasks run in an orderly manner on the main thread. Like browsers, Node has chosen the event loop to do this heavy lifting.

Node divides tasks into seven categories by type and priority: Timers, Pending, Idle, Prepare, Poll, Check, and Close. Each category has a first-in, first-out queue that stores tasks and their callbacks (timers are stored in a min-heap instead). Based on these seven categories, Node divides the execution of the event loop into the following seven phases:

In the timers phase, which handles callbacks scheduled by setTimeout() and setInterval(), the event loop examines the data structure (a min-heap) that stores the timers, compares the current time with each timer's expiration time, and determines whether the timer has expired. If it has, the timer's callback is taken out and executed.
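The timer lookup described above can be sketched as a binary min-heap keyed by expiration time. This is a simplified model for illustration, not Node's actual timer code (Node's JavaScript layer groups timers by duration, and libuv keeps its own heap); TimerHeap and runExpiredTimers() are hypothetical names. Because the earliest timer is always at the root, the timers phase can stop as soon as the root has not yet expired.

```javascript
// A simplified model of timer storage: a binary min-heap ordered by expiry time.
class TimerHeap {
  constructor() { this.items = []; }

  push(timer) {
    const a = this.items;
    a.push(timer);
    let i = a.length - 1;
    // Sift up until the parent expires no later than this timer.
    while (i > 0) {
      const p = (i - 1) >> 1;
      if (a[p].expiry <= a[i].expiry) break;
      [a[p], a[i]] = [a[i], a[p]];
      i = p;
    }
  }

  pop() {
    const a = this.items;
    const top = a[0];
    const last = a.pop();
    if (a.length > 0) {
      a[0] = last;
      let i = 0;
      // Sift down: swap with the earlier-expiring child until the heap order holds.
      for (;;) {
        const l = 2 * i + 1;
        const r = l + 1;
        let m = i;
        if (l < a.length && a[l].expiry < a[m].expiry) m = l;
        if (r < a.length && a[r].expiry < a[m].expiry) m = r;
        if (m === i) break;
        [a[m], a[i]] = [a[i], a[m]];
        i = m;
      }
    }
    return top;
  }

  peek() { return this.items[0]; }
}

// Timers phase: run every timer whose expiry time has been reached.
function runExpiredTimers(heap, now) {
  while (heap.peek() && heap.peek().expiry <= now) {
    heap.pop().callback();
  }
}

const heap = new TimerHeap();
const fired = [];
heap.push({ expiry: 30, callback: () => fired.push('t30') });
heap.push({ expiry: 10, callback: () => fired.push('t10') });
heap.push({ expiry: 20, callback: () => fired.push('t20') });
runExpiredTimers(heap, 25);
console.log(fired); // [ 't10', 't20' ]
```

Timers with equal expiration times are not guaranteed to come out in insertion order in this sketch; a real implementation would add a tie-breaking sequence number.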

The pending callbacks phase executes callbacks for errors such as network and I/O exceptions; some errors reported by *nix systems are handled here. In addition, some I/O callbacks that would normally run in the poll phase of the previous iteration are deferred to this phase.

The idle and prepare phases are used only internally by the event loop.

The poll phase retrieves new I/O events and executes I/O-related callbacks (almost all callbacks except close callbacks, callbacks scheduled by timers, and setImmediate()); Node will block here when appropriate.

The poll phase is the most important phase of the event loop. Callbacks for network I/O and file I/O are mainly processed here. This phase has two main functions:

Calculating how long it should block and poll for I/O.

Processing the callbacks in the I/O (poll) queue.

When the event loop enters the poll phase and no timer is set:

If the poll queue is not empty, the event loop iterates through its callbacks, executing them synchronously until the queue is empty or a system-dependent maximum number of callbacks has been executed.

If the polling queue is empty, one of two other things will happen:

If setImmediate() callbacks have been scheduled, the poll phase ends immediately and the event loop moves on to the check phase to execute them.

If no setImmediate() callbacks have been scheduled, the event loop stays in this phase, waiting for callbacks to be added to the queue and executing them immediately, until the computed timeout expires. It waits here because Node mainly handles I/O, and staying in the poll phase lets it respond to I/O more promptly.

Once the poll queue is empty, the event loop checks for timers that have reached their time threshold. If one or more timers reach the time threshold, the event loop will return to the timers phase to execute the callbacks for these timers.
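The decision the poll phase makes about how long to block can be sketched as a small function. It mirrors the rules described above under simplifying assumptions: computePollTimeout() and its inputs are hypothetical, -1 follows the epoll_wait convention for "block until an event arrives", and libuv's real logic also considers idle handles, pending callbacks and whether the loop is still alive.

```javascript
// Returns how long (ms) the poll phase may block waiting for I/O.
// -1 means "block until an I/O event arrives".
function computePollTimeout({ pendingImmediates, timerExpiries, now }) {
  // setImmediate() callbacks are waiting: do not block, move on to the check phase.
  if (pendingImmediates > 0) return 0;
  // No timers scheduled: wait for I/O indefinitely.
  if (timerExpiries.length === 0) return -1;
  // Otherwise block only until the earliest timer is due.
  const nearest = Math.min(...timerExpiries);
  return Math.max(0, nearest - now);
}

console.log(computePollTimeout({ pendingImmediates: 1, timerExpiries: [50], now: 0 })); // 0
console.log(computePollTimeout({ pendingImmediates: 0, timerExpiries: [], now: 0 })); // -1
console.log(computePollTimeout({ pendingImmediates: 0, timerExpiries: [50, 20], now: 5 })); // 15
```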

The check phase executes setImmediate() callbacks in order.

The close callbacks phase executes callbacks that release resources, such as socket.on('close', ...). Delaying this phase has little impact, so it has the lowest priority.

When the Node process starts, it will initialize the event loop, execute the user's input code, make corresponding asynchronous API calls, timer scheduling, etc., and then start to enter the event loop:

```
   ┌───────────────────────────┐
┌─>│           timers          │
│  └─────────────┬─────────────┘
│  ┌─────────────┴─────────────┐
│  │     pending callbacks     │
│  └─────────────┬─────────────┘
│  ┌─────────────┴─────────────┐
│  │       idle, prepare       │
│  └─────────────┬─────────────┘      ┌───────────────┐
│  ┌─────────────┴─────────────┐      │   incoming:   │
│  │           poll            │<─────┤  connections, │
│  └─────────────┬─────────────┘      │   data, etc.  │
│  ┌─────────────┴─────────────┐      └───────────────┘
│  │           check           │
│  └─────────────┬─────────────┘
│  ┌─────────────┴─────────────┐
└──┤      close callbacks      │
   └───────────────────────────┘
```

Each iteration of the event loop (often called a tick) goes through the seven phases in the priority order shown above. In each phase, only a limited number of callbacks from the queue are executed rather than all of them, so that the current phase cannot run too long and prevent the next phase from executing.
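The per-phase limit can be illustrated with a toy model of the loop. ToyLoop is a hypothetical class, and the limit of two callbacks per phase is an arbitrary value chosen to make the effect visible; libuv's phases are not implemented this way. With three poll callbacks queued, the third is deferred to the next iteration so that the check phase still gets its turn.

```javascript
// A toy model of the event loop (not libuv's actual code).
// Each phase has a FIFO queue; at most `limit` callbacks run per phase per tick.
const PHASES = ['timers', 'pending', 'idle', 'prepare', 'poll', 'check', 'close'];

class ToyLoop {
  constructor(limit = 2) {
    this.limit = limit;
    this.queues = Object.fromEntries(PHASES.map((p) => [p, []]));
  }

  enqueue(phase, callback) {
    this.queues[phase].push(callback);
  }

  hasWork() {
    return PHASES.some((p) => this.queues[p].length > 0);
  }

  // One tick: visit every phase in order, running up to `limit` callbacks in each.
  tick() {
    for (const phase of PHASES) {
      const queue = this.queues[phase];
      for (let n = 0; n < this.limit && queue.length > 0; n++) {
        queue.shift()();
      }
    }
  }

  run() {
    while (this.hasWork()) this.tick();
  }
}

const log = [];
const loop = new ToyLoop(2);
for (let i = 1; i <= 3; i++) loop.enqueue('poll', () => log.push(`poll${i}`));
loop.enqueue('check', () => log.push('check1'));
loop.enqueue('timers', () => log.push('timer1'));
loop.run();
console.log(log); // order: timer1, poll1, poll2, check1, poll3
```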

OK, the above is the basic execution flow of the event loop. Now let's look at another question.

For the following scenario:

```javascript
const net = require('net');

const server = net.createServer(() => {}).listen(8080);

server.on('listening', () => {});
```

Suppose listen() emitted the listening event synchronously, as soon as port 8080 was successfully bound. At that moment the listening callback has not been attached yet, because server.on('listening', ...) only runs on the next line, so the callback we pass in would never be executed.

Next, consider another situation. During development we often have low-priority tasks such as handling errors or cleaning up unneeded resources. Running this logic synchronously hurts the efficiency of the current task; deferring it asynchronously, for example by passing it as a callback to setImmediate(), makes its execution time unpredictable and not timely enough. How should such logic be handled?

To address these issues, Node borrowed from the browser and implemented a microtask mechanism. In Node, besides the callbacks passed to new Promise().then(), which are wrapped as microtasks, callbacks passed to process.nextTick() are also wrapped as microtasks, and the latter have a higher execution priority than the former.

With microtasks, what is the execution process of the event loop? In other words, when are microtasks executed?

In Node 11 and later, as soon as a single task in a phase finishes, the microtask queue is executed immediately until it is empty.

Before Node 11, microtasks were executed only after all the tasks of a phase had finished.

Therefore, with microtasks (in Node 11 and later), each iteration of the event loop first executes one task in the timers phase, then drains the process.nextTick() queue and the new Promise().then() queue in that order, and then continues with the next task in the timers phase or, once that phase is done, with a task in the pending phase, and so on.
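The difference between the two behaviors can be observed with a small experiment. The sketch schedules two zero-delay timers whose callbacks each queue microtasks; the expected order assumes Node 11 or later, where the microtask queues are drained after every individual timer callback. On older versions, both timeouts would typically be printed before any of the microtasks.

```javascript
const order = [];

setTimeout(() => {
  order.push('timeout1');
  Promise.resolve().then(() => order.push('promise1'));
  process.nextTick(() => order.push('nextTick1'));
}, 0);

setTimeout(() => {
  order.push('timeout2');
  Promise.resolve().then(() => order.push('promise2'));
}, 0);

// Log once both zero-delay timers and their microtasks have run.
setTimeout(() => console.log(order), 10);
// order (Node 11+): timeout1, nextTick1, promise1, timeout2, promise2
```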

Using process.nextTick(), Node solves the port-binding problem above: inside the listen() method, emitting the listening event is wrapped in a callback that is passed to process.nextTick(), as in the following simplified code:

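```javascript
const EventEmitter = require('events');

class Server extends EventEmitter {
  listen(port) {
    // Carry out the port-binding work here...

    // Wrap the emission of `listening` in a callback and defer it with
    // process.nextTick(), so handlers attached after listen() still fire.
    process.nextTick(() => this.emit('listening', port));
    return this;
  }
}

const server = new Server().listen(8080);
server.on('listening', (port) => console.log(`listening on ${port}`));
```

Once the current synchronous code has finished, the nextTick queue is processed, the listening event is emitted, and the handler attached with server.on() is called.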

Because asynchrony is inherently unpredictable and complex, even once we understand how the event loop works, Node's asynchronous APIs can still produce behavior that is unintuitive or unexpected.

For example, the execution order of the timers setTimeout() and setImmediate() varies depending on the context in which they are called. If both are called from the top-level context, the order depends on the performance of the process or machine.

Let's look at the following example:

```javascript
setTimeout(() => {
  console.log('timeout');
}, 0);

setImmediate(() => {
  console.log('immediate');
});
```

What does the above code print? Based on the description of the event loop so far, you might answer: since the timers phase runs before the check phase, the setTimeout() callback runs first, followed by the setImmediate() callback.

In fact, the output of this code is nondeterministic: either timeout or immediate may be printed first. Both timers are scheduled from the global context, and when the event loop starts and reaches the timers phase, more or less than 1 ms may have elapsed since setTimeout() was called, depending on the performance of the machine. Whether the setTimeout() callback runs in the first timers phase is therefore uncertain, which is why the output varies.

(When delay, the second argument of setTimeout(), is greater than 2147483647 or less than 1, it is set to 1.)

Let's look at the following code:

```javascript
const fs = require('fs');

fs.readFile(__filename, () => {
  setTimeout(() => {
    console.log('timeout');
  }, 0);

  setImmediate(() => {
    console.log('immediate');
  });
});
```

Here both timers are scheduled inside the readFile() callback, which runs in the poll phase. When the poll phase finishes, the event loop moves straight on to the check phase, so the setImmediate() callback always runs first; the setTimeout() callback has to wait for the timers phase of the next iteration. The output is therefore always: immediate timeout.

The above are things related to timers that you need to pay attention to when using Node. In addition, you also need to pay attention to the execution order of process.nextTick() , new Promise().then() and setImmediate() . Since this part is relatively simple, it has been mentioned before and will not be repeated.

In summary, starting from two questions, why asynchrony is needed and how it is implemented, this article has explained in detail how the Node event loop works and pointed out some related details that need attention. I hope it will be helpful to you.