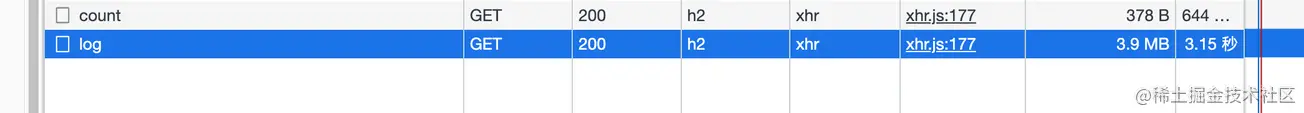

While checking my own application's logs, I noticed that the log page always took a few seconds to load (the interface is not paginated), so I opened the network panel to investigate.

Only then did I discover that the data returned by the interface was not compressed. I had assumed that, because the interface sits behind an Nginx reverse proxy, Nginx would handle this layer for me automatically (I will explore this later; in theory it is feasible).

The backend here is a Node service.

This article shares some background on HTTP data compression and how to put it into practice on the Node side.

In the rest of the article, "client" always refers to the browser.

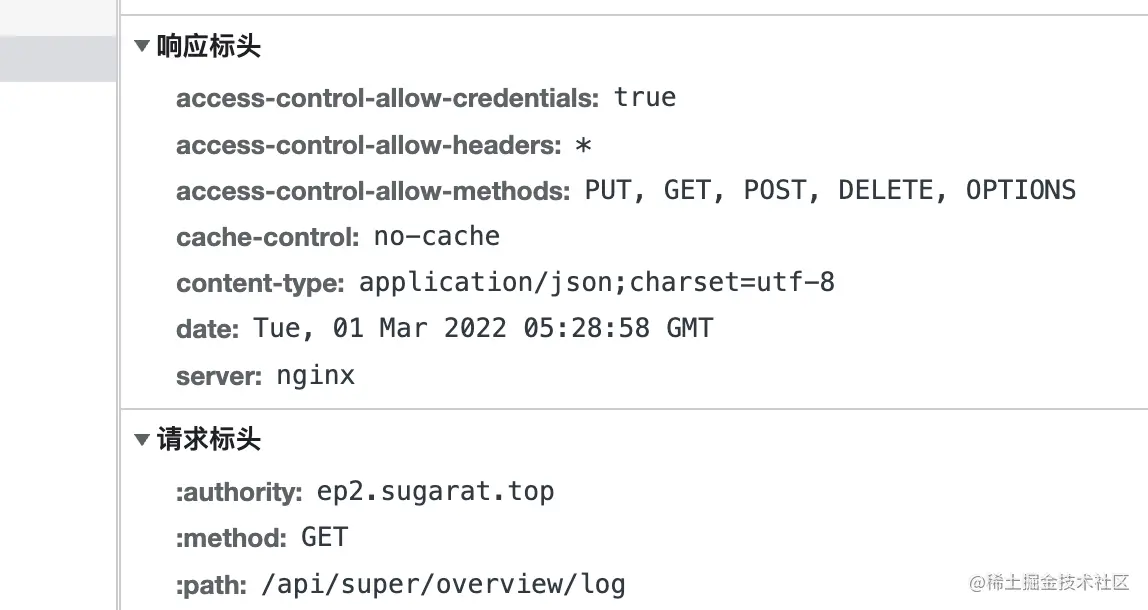

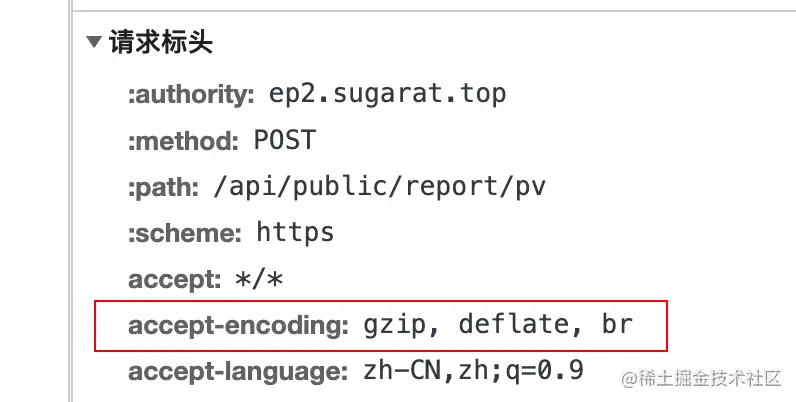

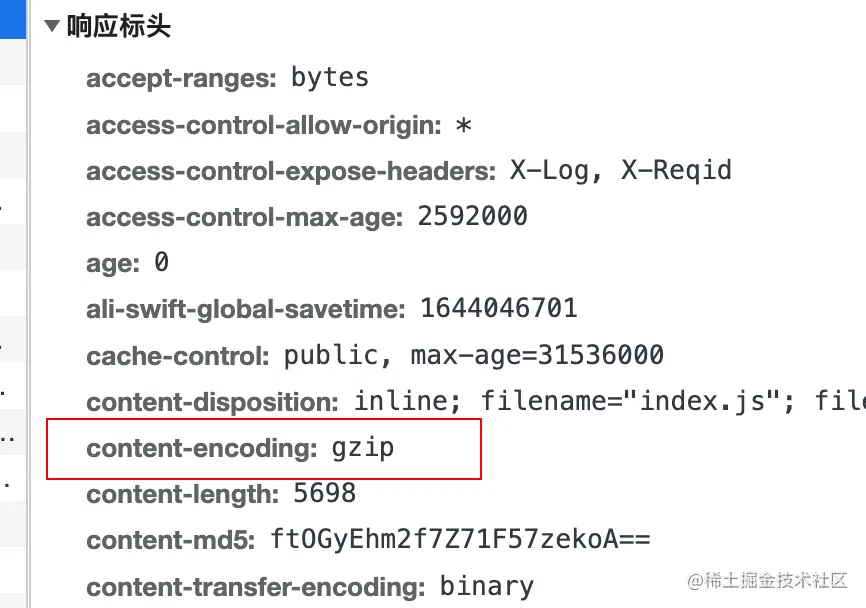

When the client sends a request to the server, it adds the accept-encoding field to the request headers. Its value lists the compressed content encodings the client supports.

After compressing the content, the server tells the client which encoding algorithm was actually used by adding content-encoding to the response headers.
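The negotiation described above can be sketched as a small function. This is a minimal illustration, not a complete implementation: `pickEncoding` and its `preferred` list are hypothetical names, and the sketch ignores the `*` wildcard that the header may also carry.

```javascript
// Hypothetical helper: choose the first server-preferred encoding
// that the client lists in its accept-encoding header.
function pickEncoding(acceptEncodingHeader, preferred = ['br', 'gzip', 'deflate']) {
  const accepted = String(acceptEncodingHeader || '')
    .split(',')
    .map((part) => {
      // Each entry looks like "gzip" or "gzip;q=0.8"
      const [name, ...params] = part.trim().toLowerCase().split(';')
      const qParam = params.map((p) => p.trim()).find((p) => p.startsWith('q='))
      const q = qParam ? parseFloat(qParam.slice(2)) : 1
      return { name: name.trim(), q }
    })
    // q=0 means "not acceptable", so those entries are dropped
    .filter((entry) => entry.name && entry.q > 0)
    .map((entry) => entry.name)

  return preferred.find((name) => accepted.includes(name)) || null
}

console.log(pickEncoding('gzip, deflate, br'))
console.log(pickEncoding('gzip;q=0, deflate'))
console.log(pickEncoding(undefined))
```

The value returned here is what the server would then place in content-encoding; `null` means the response should be sent uncompressed.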

deflate is a lossless data compression algorithm that uses both the LZ77 algorithm and Huffman coding.

gzip is a compression format based on DEFLATE.

br refers to Brotli. This data format aims to improve the compression ratio further: compared with deflate, it can increase the compression density of text by 20%, while its compression and decompression speed remain roughly unchanged.
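To get a feel for the difference between the three encodings, the sketch below compresses the same buffer with each of them using Node's synchronous zlib APIs. The sample text is made up for illustration, and the actual ratios depend heavily on the input and on the compression settings, so treat the numbers as indicative only.

```javascript
const zlib = require('zlib')

// Made-up sample: repetitive text, similar in spirit to log lines
const sample = Buffer.from('2024-01-01 INFO request handled in 12ms\n'.repeat(2000))

// Size in bytes before and after each encoding (default settings)
const sizes = {
  original: sample.length,
  deflate: zlib.deflateSync(sample).length,
  gzip: zlib.gzipSync(sample).length,
  br: zlib.brotliCompressSync(sample).length
}

console.table(sizes)
```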

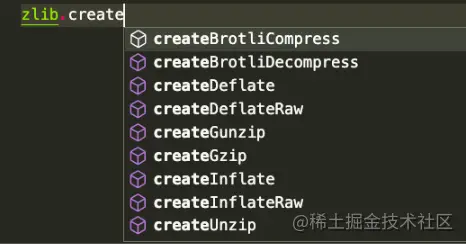

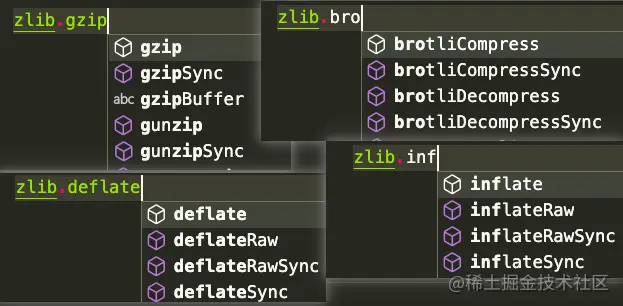

Node.js includes a zlib module, which provides compression functionality implemented with Gzip, Deflate/Inflate, and Brotli.

Here gzip is used as the example, and the different usages are listed by scenario. Deflate/Inflate and Brotli are used in the same way; only the API names differ.

There are two kinds of operations: stream-based operations and buffer-based operations.
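Since only the API names change between algorithms, a lookup table makes the correspondence explicit. The `codecs` object and its property names are purely illustrative; the zlib functions it points to are the real ones. Note that the gzip examples in this article decompress with unzip/createUnzip, which detects gzip or deflate input automatically, while the table lists the algorithm-specific counterparts.

```javascript
const zlib = require('zlib')

// Illustrative mapping: the same four roles, one row per algorithm
const codecs = {
  gzip: {
    createCompress: zlib.createGzip,
    createDecompress: zlib.createGunzip,
    compressSync: zlib.gzipSync,
    decompressSync: zlib.gunzipSync
  },
  deflate: {
    createCompress: zlib.createDeflate,
    createDecompress: zlib.createInflate,
    compressSync: zlib.deflateSync,
    decompressSync: zlib.inflateSync
  },
  br: {
    createCompress: zlib.createBrotliCompress,
    createDecompress: zlib.createBrotliDecompress,
    compressSync: zlib.brotliCompressSync,
    decompressSync: zlib.brotliDecompressSync
  }
}

// Round-trip the same input through every codec with the buffer-based APIs
const input = Buffer.from('same content, three codecs\n'.repeat(100))
const roundTrips = Object.entries(codecs).map(([name, codec]) => {
  const restored = codec.decompressSync(codec.compressSync(input))
  return [name, restored.equals(input)]
})

console.log(roundTrips)
```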

First, import the required modules and define the file paths used in the examples:

const zlib = require('zlib')
const fs = require('fs')
const stream = require('stream')

const testFile = 'tests/origin.log'
const targetFile = `${testFile}.gz`
const decodeFile = `${testFile}.un.gz`

To check the results, the du command is used here to compare the file sizes before compression, after compression, and after decompression:

# Run
du -ah tests

# Output
108K tests/origin.log.gz
2.2M tests/origin.log
2.2M tests/origin.log.un.gz
4.6M tests

Stream-based operations

The examples here use createGzip and createUnzip.

Note: zlib APIs, except those explicitly synchronous, use the Node.js internal thread pool and can be considered asynchronous.

Method 1: Use the pipe method on the stream instance directly to pass the data along.

// Compress
fs.createReadStream(testFile)
  .pipe(zlib.createGzip())
  .pipe(fs.createWriteStream(targetFile))

// Decompress (run after the compressed file has been fully written)
fs.createReadStream(targetFile)
  .pipe(zlib.createUnzip())
  .pipe(fs.createWriteStream(decodeFile))
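One thing to keep in mind with pipe is that it forwards data but not errors, so each stream in the chain needs its own error listener. The sketch below shows one way to wire that up; `gzipWithPipe` is a hypothetical helper, and the file names are placeholders chosen so that the source file does not exist.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')
const zlib = require('zlib')

// Hypothetical helper: gzip src into dest with pipe(), reporting the first error
function gzipWithPipe(src, dest, done) {
  const streams = [fs.createReadStream(src), zlib.createGzip(), fs.createWriteStream(dest)]
  let settled = false

  const finish = (err) => {
    if (settled) return
    settled = true
    // On failure, destroy every stream so no file handle is left open
    if (err) streams.forEach((s) => s.destroy())
    done(err || null)
  }

  // pipe() does not propagate errors, so listen on every stream
  streams.forEach((s) => s.on('error', finish))
  streams[2].on('finish', () => finish())

  streams[0].pipe(streams[1]).pipe(streams[2])
}

// Usage: a missing source file surfaces as an ENOENT error in the callback
const missing = path.join(os.tmpdir(), `pipe-demo-missing-${process.pid}.log`)
const outcome = new Promise((resolve) => {
  gzipWithPipe(missing, `${missing}.gz`, resolve)
})

outcome.then((err) => console.log(err && err.code))
```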

Method 2: Use pipeline from the stream module, which lets you handle errors or do other processing in the callback.

// Compress
stream.pipeline(
  fs.createReadStream(testFile),
  zlib.createGzip(),
  fs.createWriteStream(targetFile),
  (err) => {
    if (err) {
      console.error(err)
    }
  }
)

// Decompress (run after compression has finished)
stream.pipeline(
  fs.createReadStream(targetFile),
  zlib.createUnzip(),
  fs.createWriteStream(decodeFile),
  (err) => {
    if (err) {
      console.error(err)
    }
  }
)

Method 3: Promise-based pipeline

const { promisify } = require('util')
const pipeline = promisify(stream.pipeline)

// Compress
pipeline(fs.createReadStream(testFile), zlib.createGzip(), fs.createWriteStream(targetFile))
  .catch((err) => {
    console.error(err)
  })

// Decompress (run after compression has finished)
pipeline(fs.createReadStream(targetFile), zlib.createUnzip(), fs.createWriteStream(decodeFile))
  .catch((err) => {
    console.error(err)
  })

Buffer-based operations

These use the gzip and unzip APIs. Both come in synchronous and asynchronous forms.

The APIs are gzip, gzipSync, unzip, and unzipSync.

Method 1: Collect the readStream into a Buffer, then operate on it.

// Compress with the asynchronous API
const readStream = fs.createReadStream(testFile)
const buff = []
readStream.on('data', (chunk) => {
  buff.push(chunk)
})
readStream.on('end', () => {
  // The second argument is the callback (an options object is optional)
  zlib.gzip(Buffer.concat(buff), (err, resBuff) => {
    if (err) {
      console.error(err)
      process.exit(1)
    }
    fs.writeFileSync(targetFile, resBuff)
  })
})

The same thing with the synchronous API:

// Compress with the synchronous API
const readStream = fs.createReadStream(testFile)
const buff = []
readStream.on('data', (chunk) => {
  buff.push(chunk)
})
readStream.on('end', () => {
  fs.writeFileSync(targetFile, zlib.gzipSync(Buffer.concat(buff)))
})

Method 2: Read the file directly with readFileSync.

// Compress
const readBuffer = fs.readFileSync(testFile)
const encodeBuffer = zlib.gzipSync(readBuffer)
fs.writeFileSync(targetFile, encodeBuffer)

// Decompress: read the .gz file, unzip it, and write to the decode path
const gzBuffer = fs.readFileSync(targetFile)
const decodeBuffer = zlib.unzipSync(gzBuffer)
fs.writeFileSync(decodeFile, decodeBuffer)
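The synchronous calls block the event loop while they run, which matters inside a server. As a rough sketch of the asynchronous counterpart, the callback-style zlib.gzip and zlib.unzip can be wrapped with util.promisify and combined with fs.promises. The helper names `gzipFile` and `unzipFile` and the temporary file are assumptions made for this illustration.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')
const zlib = require('zlib')
const { promisify } = require('util')

const gzip = promisify(zlib.gzip)
const unzip = promisify(zlib.unzip)

// Hypothetical helper: compress src into dest without blocking the event loop
async function gzipFile(src, dest) {
  const input = await fs.promises.readFile(src)
  await fs.promises.writeFile(dest, await gzip(input))
}

// Hypothetical helper: decompress src into dest
async function unzipFile(src, dest) {
  const input = await fs.promises.readFile(src)
  await fs.promises.writeFile(dest, await unzip(input))
}

// Demo on a throwaway file in the OS temp directory
async function demo() {
  const dir = await fs.promises.mkdtemp(path.join(os.tmpdir(), 'gzip-buffer-'))
  const source = path.join(dir, 'origin.log')
  await fs.promises.writeFile(source, 'line of log text\n'.repeat(500))

  await gzipFile(source, `${source}.gz`)
  await unzipFile(`${source}.gz`, `${source}.un.gz`)

  const before = await fs.promises.readFile(source)
  const after = await fs.promises.readFile(`${source}.un.gz`)
  return before.equals(after)
}

const roundTripOk = demo()
roundTripOk.then((ok) => console.log('round trip ok:', ok))
```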

Besides compressing files, sometimes the content being transmitted needs to be compressed or decompressed directly.

Here, compressing text content is used as the example.

// Test data
const testData = fs.readFileSync(testFile, { encoding: 'utf-8' })

Stream operations

Here we only need to handle the conversion string => buffer => stream.

string => buffer

const buffer = Buffer.from(testData)

buffer => stream

const transformStream = new stream.PassThrough()
// end() writes the buffer and signals that no more data will follow;
// without it the gzip stream downstream would never finish
transformStream.end(buffer)

// or (an alternative to the PassThrough version above)
const transformStream = new stream.Duplex({ read() {} }) // no-op read: data is pushed manually
transformStream.push(Buffer.from(testData))
transformStream.push(null)

Here the stream is written to a file as an example. It could equally be written to another stream, such as an HTTP response (covered separately later).

transformStream
  .pipe(zlib.createGzip())
  .pipe(fs.createWriteStream(targetFile))

Buffer operations

This also uses Buffer.from to convert the string into a buffer:

const buffer = Buffer.from(testData)

Then call the synchronous API directly. The result is the compressed content:

const result = zlib.gzipSync(buffer)

The result can be written to a file, and in an HTTP server it can also be returned directly as the response body:

fs.writeFileSync(targetFile, result)
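The examples in this section only compress. As a complement, here is a small sketch that compresses a string through a stream and then decompresses it back, using Readable.from (available in newer Node versions) as another way to go from buffer to stream. `runThrough` is a hypothetical helper and `sampleText` stands in for testData.

```javascript
const zlib = require('zlib')
const { Readable } = require('stream')

// Hypothetical helper: run a buffer through a zlib transform and collect the output
async function runThrough(buffer, transform) {
  const chunks = []
  // Wrapping the buffer in an array makes it arrive as a single chunk
  for await (const chunk of Readable.from([buffer]).pipe(transform)) {
    chunks.push(chunk)
  }
  return Buffer.concat(chunks)
}

const sampleText = 'Test data 123'.repeat(1000) // stand-in for testData

// string => buffer => stream => gzip, then back again
const restoredText = runThrough(Buffer.from(sampleText), zlib.createGzip())
  .then((compressed) => runThrough(compressed, zlib.createGunzip()))
  .then((restored) => restored.toString('utf-8'))

restoredText.then((text) => console.log('restored matches:', text === sampleText))
```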

Here we use Node's built-in http module directly to create a simple server for the demonstration.

The approach is similar in other Node web frameworks, and there are usually ready-made plugins that enable compression with very little setup.

const http = require('http')
const { PassThrough, pipeline } = require('stream')
const zlib = require('zlib')

// Test data
const testTxt = 'Test data 123'.repeat(1000)

const app = http.createServer((req, res) => {
  const { url } = req
  // Read the compression algorithms the client supports
  // (empty list when the header is missing or nothing matches)
  const acceptEncoding = (req.headers['accept-encoding'] || '').match(/(br|deflate|gzip)/g) || []
  // Default response content type
  res.setHeader('Content-Type', 'application/json; charset=utf-8')

  // Several example routes
  const routes = [
    ['/gzip', () => {
      if (acceptEncoding.includes('gzip')) {
        res.setHeader('content-encoding', 'gzip')
        // Use the synchronous API to compress the text content directly
        res.end(zlib.gzipSync(Buffer.from(testTxt)))
        return
      }
      res.end(testTxt)
    }],
    ['/deflate', () => {
      if (acceptEncoding.includes('deflate')) {
        res.setHeader('content-encoding', 'deflate')
        // Stream-based, single write
        const originStream = new PassThrough()
        originStream.write(Buffer.from(testTxt))
        originStream.pipe(zlib.createDeflate()).pipe(res)
        originStream.end()
        return
      }
      res.end(testTxt)
    }],
    ['/br', () => {
      if (acceptEncoding.includes('br')) {
        res.setHeader('content-encoding', 'br')
        res.setHeader('Content-Type', 'text/html; charset=utf-8')
        // Stream-based, multiple writes
        const originStream = new PassThrough()
        pipeline(originStream, zlib.createBrotliCompress(), res, (err) => {
          if (err) {
            console.error(err)
          }
        })
        originStream.write(Buffer.from('<h1>BrotliCompress</h1>'))
        originStream.write(Buffer.from('<h2>Test data</h2>'))
        originStream.write(Buffer.from(testTxt))
        originStream.end()
        return
      }
      res.end(testTxt)
    }]
  ]

  const route = routes.find(v => url.startsWith(v[0]))
  if (route) {
    route[1]()
    return
  }

  // Fallback: respond with a 404 page that lists the registered routes
  res.statusCode = 404
  res.setHeader('Content-Type', 'text/html; charset=utf-8')
  res.end(`<h1>404: ${url}</h1>
<h2>Registered routes</h2>
<ul>
${routes.map(r => `<li><a href="${r[0]}">${r[0]}</a></li>`).join('')}
</ul>
`)
})

app.listen(3000)
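Browsers decompress responses transparently, so it can help to check the behavior from a Node client where the steps are visible. The sketch below is self-contained and makes several assumptions: it starts its own stand-in server on a random local port (rather than the server above) that always answers with gzip, and `fetchDecoded` and `decoders` are hypothetical names. The client picks the decompression stream from the content-encoding response header.

```javascript
const http = require('http')
const zlib = require('zlib')

// Stand-in server (an assumption for this demo): always answers with gzip
const body = 'Test data 123'.repeat(1000)
const server = http.createServer((req, res) => {
  res.setHeader('content-encoding', 'gzip')
  res.end(zlib.gzipSync(Buffer.from(body)))
})

// Map content-encoding values to the matching zlib decompression stream
const decoders = {
  gzip: zlib.createGunzip,
  deflate: zlib.createInflate,
  br: zlib.createBrotliDecompress
}

// Hypothetical helper: request a URL and resolve with the decoded text
function fetchDecoded(url) {
  return new Promise((resolve, reject) => {
    const options = { agent: false, headers: { 'accept-encoding': 'gzip, deflate, br' } }
    const req = http.get(url, options, (res) => {
      const createDecoder = decoders[res.headers['content-encoding']]
      // Fall back to the raw response when no known encoding was applied
      const source = createDecoder ? res.pipe(createDecoder()) : res
      const chunks = []
      source.on('data', (chunk) => chunks.push(chunk))
      source.on('end', () => resolve(Buffer.concat(chunks).toString('utf-8')))
      source.on('error', reject)
    })
    req.on('error', reject)
  })
}

// Start the server on a random free port, fetch once, then shut down
const decoded = new Promise((resolve, reject) => {
  server.listen(0, '127.0.0.1', () => {
    const { port } = server.address()
    fetchDecoded(`http://127.0.0.1:${port}/gzip`)
      .then(resolve, reject)
      .finally(() => server.close())
  })
})

decoded.then((text) => console.log('decoded length:', text.length))
```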