Before we start: make sure you abide by local laws and regulations, and do not scrape data that you are not permitted to collect.

Here are some things you'll need for this tutorial:

Cheerio is a tool for parsing HTML and XML in Node.js. It is very popular on GitHub and has more than 23k stars.

It's fast, flexible, and easy to use. Since it implements a subset of jQuery, it's easy to get started with Cheerio if you're already familiar with jQuery.

The main difference between Cheerio and a web browser is that Cheerio does not produce a visual rendering, apply CSS, load external resources, or execute JavaScript. It just parses the markup and provides an API for manipulating the resulting data structure. According to the Cheerio documentation, this is also why it is very fast.

If you want to use Cheerio to scrape a web page, you first need to fetch the page's markup using a package like axios or node-fetch.

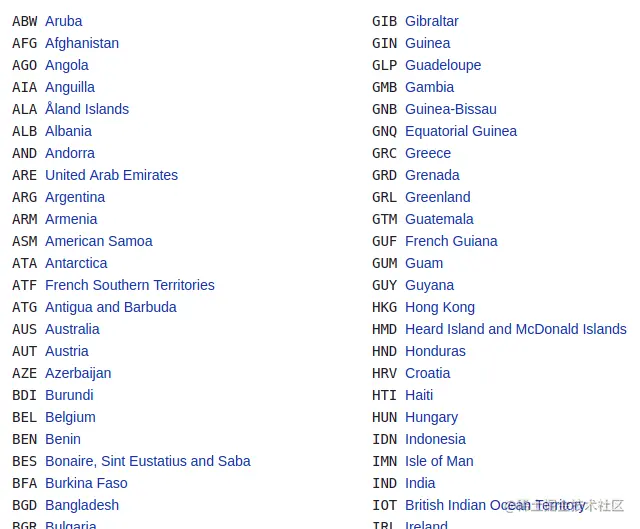

In this example, we will scrape the ISO 3166-1 alpha-3 codes for all countries and other jurisdictions listed on Wikipedia. The list is located under the "Current codes" section of the ISO 3166-1 alpha-3 page.

This is what the list of countries/jurisdictions and their corresponding codes looks like:

In this step, you will create a directory for your project by running the following command on the terminal. This command will create a directory named learn-cheerio. You can give it a different name if you wish.

mkdir learn-cheerio

After successfully running the above command, you should be able to see a folder named learn-cheerio.

In the next step, you will open the directory you just created in your favorite text editor and initialize the project.

In this step, you will navigate to the project directory in your terminal and initialize the project by running the following command.

npm init -y

A successful run of the above command will create a package.json file in the root of the project directory.

In the next step, you will install the project dependencies.

In this step, you will install the project dependencies by running the following command. This will take a few minutes, so please be patient.

npm i axios cheerio pretty

Successfully running the above command will register three dependencies in the package.json file under the dependencies field. The first dependency is axios, the second is cheerio, and the third is pretty.

axios is a very popular HTTP client that can run in Node and in browsers. We need it because cheerio is only a markup parser; it does not fetch pages itself.

For cheerio to parse the markup and extract the data you need, we use axios to fetch the markup from the website. If you prefer, you can use another HTTP client to fetch the markup. It doesn't have to be axios.

pretty is an npm package for beautifying markup so it's readable when printed on the terminal.

In the next section, you'll examine the markup from which the data will be scraped.

Before scraping data from a web page, it is important to understand the HTML structure of the page.

In this step, you examine the HTML structure of the web page from which you want to scrape data.

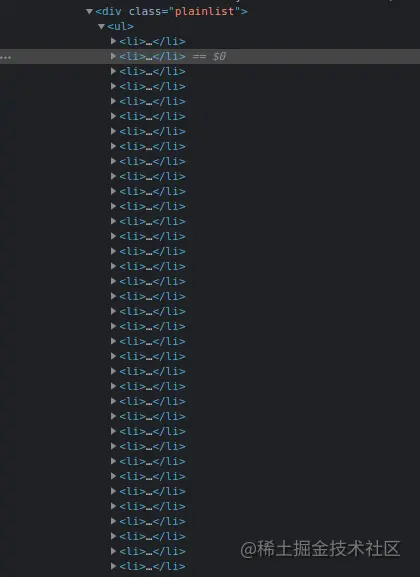

Navigate to the ISO 3166-1 alpha-3 codes page on Wikipedia. Under the "Current codes" section, there is a list of countries and their corresponding codes. You can open DevTools by pressing CTRL + SHIFT + I in Chrome or by right-clicking and selecting the "Inspect" option.

This is what the list looks like in Chrome DevTools:

In the next section, you'll write the code to scrape the page.

In this section, you will write the code to scrape the data that we are interested in. First run the following command which will create the app.js file.

touch app.js

Successfully running the above command will create an app.js file in the root of the project directory.

Like any other Node package, you must first require axios, cheerio, and pretty before you can use them. You can do this by adding the following code at the top of the app.js file you just created.

const axios = require("axios");

const cheerio = require("cheerio");

const pretty = require("pretty");

Before we write the code for scraping the data, we need to learn the basics of cheerio. We will parse the markup below and try to manipulate the resulting data structure. This will help us learn cheerio's syntax and its most commonly used methods.

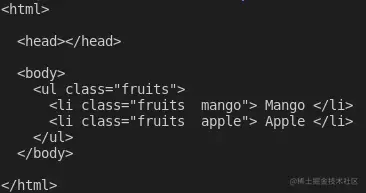

The markup below is a ul element that contains our li elements.

const markup = ` <ul class="fruits"> <li class="fruits__mango"> Mango </li> <li class="fruits__apple"> Apple </li> </ul> `;

Add the above variable declaration to the app.js file.

cheerio can load markup using the cheerio.load method. This method takes the markup as its first parameter. It also accepts two additional optional parameters. If you're interested, you can read more about them in the documentation.

Below, we pass the first and only required parameter and store the return value in the $ variable. We use this variable name because of cheerio's similarity to jQuery's $. You can use a different variable name if you wish.

Add the following code to your app.js file:

const $ = cheerio.load(markup);

console.log(pretty($.html()));

If you now execute the code in the app.js file by running the command node app.js on the terminal, you should be able to see the markup on the terminal. This is what I see on the terminal:

Cheerio supports most common CSS selectors, such as class , id and element selectors. In the code below, we select an element with class fruits__mango and then log the selected element to the console. Add the following code to your app.js file.

const mango = $(".fruits__mango");

console.log(mango.html()); // Mango

If you execute app.js using the node app.js command, the above line of code will log the text Mango on the terminal.

You can also select an element and get specific attributes such as class , id or all attributes and their corresponding values.

Add the following code to your app.js file:

const apple = $(".fruits__apple");

console.log(apple.attr("class")); // fruits__apple

The code above will log fruits__apple on the terminal. fruits__apple is the class of the selected element.

Cheerio provides the .each method to loop through multiple selected elements.

Below, we select all the li elements and loop through them using the .each method. We log the text content of each list item on the terminal.

Add the following code to your app.js file.

const listItems = $("li");

console.log(listItems.length); // 2

listItems.each(function (idx, el) {

console.log($(el).text());

});

// Mango

// Apple

The code above will log 2, which is the number of list items. After executing the code in app.js, the text Mango and Apple will be displayed on the terminal.

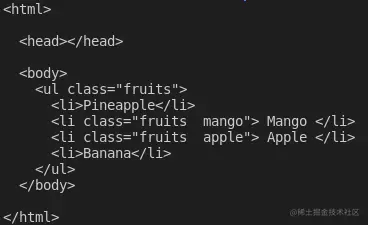

Cheerio provides methods to append or prepend elements to the markup.

The append method will append the element passed as parameter after the last child element of the selected element. On the other hand, prepend will add the passed element before the first child of the selected element.

Add the following code to your app.js file:

const ul = $("ul");

ul.append("<li>Banana</li>");

ul.prepend("<li>Pineapple</li>");

console.log(pretty($.html()));

After appending and prepending elements to the markup, this is what I see when I log $.html() on the terminal:

These are the basics of Cheerio to get you started with web scraping. To scrape the data from Wikipedia we described at the beginning of this article, copy and paste the following code into the app.js file:

// Loading the dependencies. We don't need pretty

// because we shall not log html to the terminal

const axios = require("axios");

const cheerio = require("cheerio");

const fs = require("fs");

// URL of the page we want to scrape

const url = "https://en.wikipedia.org/wiki/ISO_3166-1_alpha-3";

// Async function which scrapes the data

async function scrapeData() {

try {

// Fetch HTML of the page we want to scrape

const { data } = await axios.get(url);

// Load HTML we fetched in the previous line

const $ = cheerio.load(data);

// Select all the list items in plainlist class

const listItems = $(".plainlist ul li");

// Stores data for all countries

const countries = [];

// Use .each method to loop through the li we selected

listItems.each((idx, el) => {

// Object holding data for each country/jurisdiction

const country = { name: "", iso3: "" };

// Select the text content of a and span elements

// Store the textcontent in the above object

country.name = $(el).children("a").text();

country.iso3 = $(el).children("span").text();

// Populate countries array with country data

countries.push(country);

});

// Logs countries array to the console

console.dir(countries);

// Write countries array in countries.json file

fs.writeFile("countries.json", JSON.stringify(countries, null, 2), (err) => {

if (err) {

console.error(err);

return;

}

console.log("Successfully written data to file");

});

} catch (err) {

console.error(err);

}

}

// Invoke the above function

scrapeData();

By reading the code, do you understand what is happening? If not, I'll go into detail now. I've also commented each line of code to help you understand.

In the above code, we require all the dependencies at the top of the app.js file and then declare the scrapeData function. Inside the function, we fetch the HTML of the page we want to scrape using axios and then load it into cheerio.

The list of countries and their corresponding iso3 codes is nested in a div element with the class plainlist . The li elements are selected and we then loop through them using the .each method. Data for each country is scraped and stored in an array.

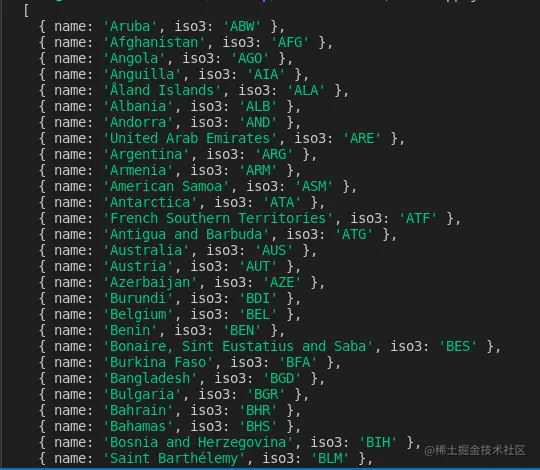

After running the above code using the node app.js command, the captured data is written to the countries.json file and printed on the terminal. This is part of what I see on the terminal:

Thank you for reading this article! We've covered the basics of using cheerio. If you want to go deeper and fully understand how it works, you can head to the Cheerio documentation.