Sam Altman's latest interview at the OpenAI London Developer Day has finally been released in full!

During the 40-minute interview, Altman discussed not only OpenAI's future model direction, agents, and the competitors he respects most (topics that had already leaked in pieces), but also scaling laws, the semiconductor supply chain, the cost of competing on foundation models, and what age group of employees to hire. He also answered more than ten rapid-fire questions.

When asked where he still falls short as CEO of OpenAI, Altman answered without hesitation: product!

Overall, my weakness is with the product.

Now the company needs me to have a stronger, clearer vision in this area.

Interestingly, if the 39-year-old Altman could go back to age 23 or 24, he would consider building something in an AI vertical.

For example, AI tutors that help people learn, AI lawyers, or AI CAD engineers.

The full text of this wonderful interview is attached below. Some paragraphs have been deleted without changing the original meaning.

At the end, there are 11 quick questions and answers.

Content quick overview

Interview with Altman at OpenAI London Developer Day

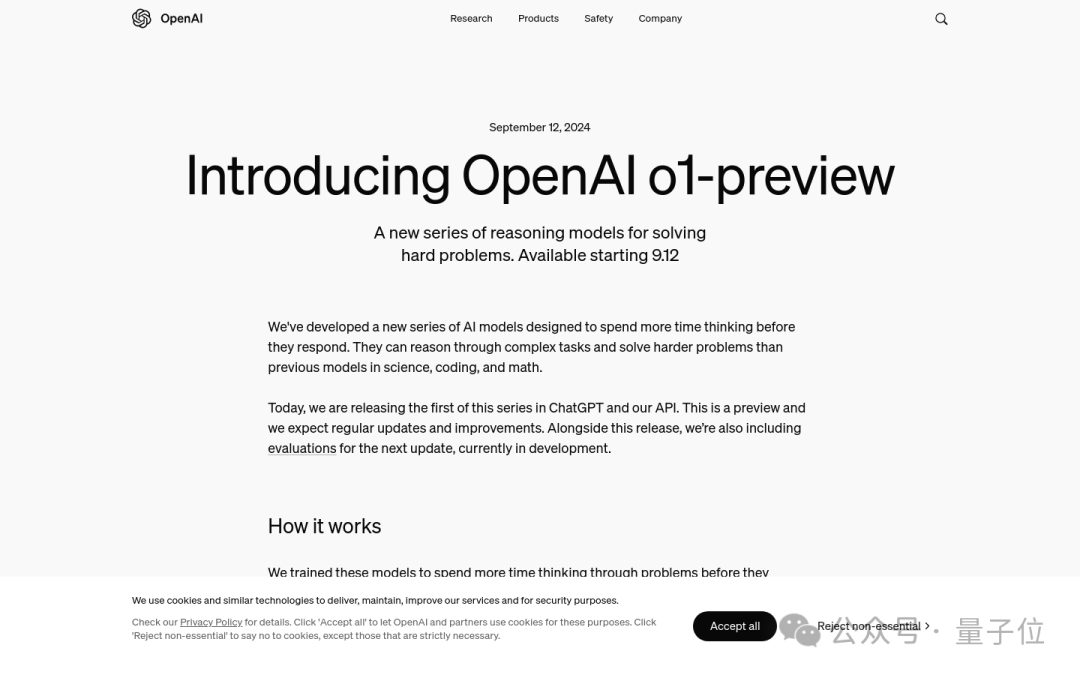

Q1: Looking ahead, will OpenAI release more models like o1, or should we expect models with more parameters?

A: Of course we hope to advance on all fronts, but reasoning models are particularly important to us. We hope to use reasoning to unlock many things we have been looking forward to for years.

For example, reasoning models can contribute to new science and help write difficult code, which I think can move the world forward to a large extent.

So you can look forward to rapid improvement in the o-series models, which will be of great significance.

Q2: Also on OpenAI's future plans: do you think OpenAI will develop no-code tools that let non-technical founders build and scale AI applications?

A: That day will definitely come.

Our first step is to make people who already know how to write code more productive; but in the end, we still hope to provide truly high-quality no-code tools.

There are some meaningful no-code tools out there already, but it will be a while before you can build an entire startup without code.

Q3: One founder asked: OpenAI clearly occupies a certain layer of the technology stack today. How far up the stack will it go? Is it a waste of time to spend a lot of effort tuning a RAG system if OpenAI will eventually cover that part of the application?

A: Our usual answer is that OpenAI will do its best, and we firmly believe the models we launch will keep getting better.

If you're building a company that makes a living by patching small shortcomings of the current models, then if OpenAI does its job right, those patches will matter much less in the future.

On the other hand, if your company becomes more successful because OpenAI's models keep getting better, you will be happy about that progress.

For example, if an oracle told you that OpenAI's o4 would be far more powerful than you imagine, you would be delighted.

By contrast, if you pick an area where o1-preview performs poorly, dig into it, and patch it until it barely works, you are effectively betting that our next-generation model will not be as good as we expect.

That is what I want to tell startups.

We believe OpenAI is on a very steep improvement trajectory, and the shortcomings of the current models will be fixed by later generations.

Q4: From a founder’s perspective, where is OpenAI likely to succeed (and not others)? I believe investors also want to understand this issue, and no one wants to lose money on investment.

A: Trillions of dollars of new market value will be created by using AI to build products and services that were previously considered impossible or impractical.

We hope to launch a model that is so great that users have nothing to worry about and just do what they want to do with it.

In the GPT-3.5 era, 95% of startups and people were betting that the models would not get better.

In fact, we had long foreseen that GPT-4 would handle what they were doing, and do it better, without the mistakes of the 3.5-era models. If all an entrepreneur or developer does is make up for a shortcoming of one generation of models, that shortcoming becomes increasingly insignificant.

Has everyone forgotten how bad the models were a few years ago? It really has been only a few years.

But opportunities seem to be everywhere, so patching an existing model looks like a good opportunity at first glance (compared with building, say, an AI tutor or an AI medical advisor).

That is why I said that, at the beginning, 95% of people believed the models would not improve any further, and only 5% bet that they would keep getting better.

But I think this is no longer the case.

Everyone now assumes that the pace of improvement from GPT-3.5 to GPT-4 will be the norm, but it is not. The good news is that our team is very diligent, and we know what to expect.

Q5: Masayoshi Son of SoftBank said that AI will create US$9 trillion in value every year, which will offset what he believes is an annual expenditure of US$9 trillion. What do you think when you see this statement?

A: In terms of order of magnitude, that is roughly right. AI will obviously require enormous capital outlay while also creating enormous value.

This happens with every big technological revolution, and AI is obviously one of them.

Next year will be a year when OpenAI will vigorously advance towards the next generation of systems.

We just talked about when no-code software agents will appear. I can't give an exact time, but imagine how much economic value it would create for the world if anyone could simply describe the software they want for an entire company.

Delivering the same value while being more widely available, more accessible, and cheaper would be very powerful.

There are other examples, like healthcare and education, which I mentioned earlier: two areas worth trillions of dollars to the world.

If AI can truly deliver these in a way that was not possible before, I think the specific number for the economic value is beside the point, let alone whether the figure is 9 trillion or 1 trillion.

But what is certain is that the value created by AI is truly incredible.

Q6: Open source is a very important approach. How do you see open source playing a role in the future of artificial intelligence? Is there any internal discussion at OpenAI about “should we open source all models or some of them”?

A: There is no doubt that open source models are very important in the ecosystem, and there are also very good open source models on the market.

But I think it also makes sense to offer good services and APIs; people will choose what works for them.

Q7: What do you think of the definition of Agent today? What is an Agent to you?

A: I haven't thought this through fully, but to me an agent is something I can give a long-running task to and that needs as little supervision as possible while carrying it out.

Q7': Do you think people's views on Agent are actually wrong?

A: Maybe we don’t all have an accurate understanding, but we all know some important indicators.

For example, when people talk about AI agents acting on their behalf, the example they often give is asking the agent to make a restaurant reservation. The agent may make a reservation online or call the restaurant to make a reservation.

Indeed, an agent can save you time on things like that. But I think what is really interesting is having the agent do things that humans don't or can't do.

For example, instead of calling one specific restaurant to make a reservation, the agent contacts 300 restaurants to find out which one is best for me. It would be even cooler if all of those calls were answered by agents too! Humans simply can't do this kind of thing massively in parallel.

Let's take a more interesting example.

The agent may be more like a very smart colleague. You work with it on a project that takes two days or two weeks. The agent can execute on its own and do a good job, check in with you when necessary, and in the end deliver an outstanding result.

Q7": Does this fundamentally change how SaaS is priced? SaaS is usually priced per user (per seat), but AI is now actually replacing labor. How do you see pricing evolving?

A: I can only guess, I really don’t know.

I can imagine a world where you could say, "I want 1, 10, or 100 GPUs working on my problems continuously," and it would be priced not per seat but by the amount of compute needed to keep working on those problems.

Q7"': Do we need to build specific models for agent use cases?

A: It certainly requires a lot of infrastructure, but I feel that the o1 model points the way to a model that can perform agent tasks well.

Q8: Everyone says that models are depreciating assets and that models are becoming commoditized. What is your response? When you factor in the ever-increasing capital intensity of training models, are we heading toward a situation where enormous sums are required and very few players can actually do it?

A: Models are indeed depreciating assets, but that doesn’t mean they aren’t worth the cost of training. Not to mention the positive compounding effect when you train a model, allowing you to better train the next model.

The actual revenue we get from the model justifies the investment - of course, not everyone can do this, and there are many people who are reinventing the wheel when it comes to training models.

If the model you train is lagging behind, or if you don’t have a sticky and valuable product, it may be difficult to get a return on investment.

OpenAI is very fortunate to have ChatGPT, with hundreds of millions of users using our models.

So even if the cost is high, we can spread this astronomical figure over a large number of users and dilute it.

Q9: How do OpenAI models continue to differentiate over time? What areas would you most like to focus on extending this differentiation?

A: Reasoning is our most important area of focus right now, and I think it will unlock the next giant leap in value creation.

We're also going to improve the models in many other respects: we're going to do multimodal work and add other features that we think matter for the way people want to use these models.

Q9': How do you view reasoning and multimodal work, including challenges and goals you want to achieve?

A: I hope it comes true. This obviously takes some effort to accomplish.

But humans in infancy and early childhood, before they become adept at language, can still perform fairly sophisticated visual reasoning.

Q9”: How will visual capabilities expand with o1’s new reasoning paradigm?

A: Without spoiling anything, you can expect rapid progress on image-based models.

Q10: How does OpenAI achieve breakthroughs in core reasoning? Is there a need to start pushing reinforcement learning as an approach or other new technologies besides Transformers?

A: These are two questions: how do we do it, and what comes after the Transformer.

First, how we do it is our secret.

One reason it is relatively easy to copy something that is known to work is that you already know it is possible. Once researchers have done something, others can replicate it even without knowing how it was done. You can see this in the replications of GPT-4 and o1.

The really hard thing, and what I'm most proud of about OpenAI, is that we repeatedly do something new and completely unproven.

Many institutions and organizations claim to have the ability to do such things, but in fact few actually do it, including outside the field of AI.

In a sense, I think this is one of the most important investments in human progress.

When I retire, I look forward to writing a book about what I've learned: how to build an organization and company culture that can do this, as opposed to one that simply copies the work of others.

I think the world needs more organizations like this, although their number will be limited by human ingenuity.

But the reality is that a lot of talent is wasted because the world is not good at building organizations like this. But I still wish there were more organizations like this.

Q11: How is talent wasted?

A: There are many talented people in the world, but because they work for bad companies or for some other reason, they are unable to reach their full potential.

One of the things that excites me most about AI is the hope that it will help everyone reach their full potential.

This goal is far from being achieved.

I'm sure that many people in the world would be excellent AI researchers if their life trajectories were slightly different.

Q12: In the past few years, you have had an incredible experience, (leading) incredible rapid growth. You mentioned that you will write a memoir when you retire, so when you look back on the past 10 years, what significant changes have been made in your leadership style?

A: To me, the most extraordinary thing about these past few years is the speed at which things have changed.

In a regular company, you have plenty of time to go from zero to $100 million in revenue, then from $100 million to $1 billion, and on to $10 billion. You don't have to do all of that in two years. We are definitely not a Silicon Valley startup in the traditional sense.

We had to get there very quickly, and there were a lot of things I should have spent more time learning (but didn't).

Q12': Is there something you don't know that you wish you had spent more time learning?

A: One thing I would name is getting the company to focus on how to grow the next 10x, rather than the next 10%.

How much effort does this require? How difficult is this?

If it's the next 10% growth, what worked before will still work. But for a company with $1 billion in revenue to grow to $10 billion in revenue, you can't simply repeat what you did before. It requires a lot of change.

In a world where people don't even have time to master the basics because growth is so fast, I seriously underestimated the effort it takes to sprint forward to the next step without losing sight of everything else we have to do.

Q13: Keith Rabois (Silicon Valley investor, former vice president at PayPal) said that you should hire very young people, under 30 years old, and that this is the secret to building a great company that Peter Thiel (co-founder of PayPal) taught him. I'm curious, what do you think of this view? How do you balance hiring young, energetic but inexperienced people against hiring those with more experience?

A: When we founded OpenAI, I was about 30 years old, not particularly young.

But so far, OpenAI seems to be doing pretty well.

Q13': What do you think about hiring people under 30, who are young, energetic, and ambitious but less experienced (and some of whom may be rich)?

A: The obvious answer is that you can succeed by hiring both types of people.

Our team recently hired a young person who does amazing work. I don't understand how people can be this good at such a young age, but that's how it is.

If you can find people like this, they bring amazing new perspectives, energy, and more.

On the other hand, when it comes to designing some of the most complex and expensive computer systems ever built, I'm not willing to bet on someone who's just starting out.

So you need both.

I think what you really want is an extremely high talent bar for people of any age. A strategy that says "I'll only hire younger people" or "I'll only hire older people" would be a mistake.

One of the things I appreciate most about Y Combinator is that "lack of experience does not mean lack of value."

There are some very promising people who can create tremendous value early in their careers, and it's a great thing that we should bet on those people.

Q15: Someone told me that sometimes Anthropic’s model is more suitable for coding tasks. Why is this? Do you think this assessment is fair? When should developers choose OpenAI instead of other model vendors?

A: Yes, Anthropic has a very impressive model for writing code.

I think developers will use several models most of the time, and as agents become more and more important, I don't know how that will evolve.

I think AI is going to be everywhere, and the way we're currently talking or thinking about it doesn't feel right. If I had to describe it clearly, we would move from talking about models to talking about systems, but that takes some time.

Q16: When we think about scaling models, for how many more model iterations do you think the scaling laws will hold? The general view was that they wouldn't last long, but they seem to have lasted longer than people expected.

A: I understand that the core of this question is "will the improvement in model capabilities be the same as it was in the past?"

I believe the answer is yes, and it will be for a long time to come.

Q16': Have you ever doubted this?

A: Absolutely.

Q16": Why?

A: We've encountered behavior that we didn't understand, like failed training runs, or various other things.

As we approach the end of one paradigm, we have to figure out the next one.

Q16"': Which of these was the hardest to get through?

A: When we were working on GPT-4, there was a very difficult problem that we really didn't know how to solve.

Although we eventually found a solution, for a long time we didn't know how to move the project forward.

Later, we turned to a direction we had been interested in for a long time, namely o1 and reasoning models, but before that we went down a long and winding research road.

Q17: Training runs can fail. Is it difficult to maintain morale? How do you maintain or boost morale?

A: As you know, many of our employees are very excited about building AGI, which is a very direct motivation.

No one expects the road to success to be easy and straight.

There is a good saying, which probably goes like this: "I never pray for God to be on my side, I always pray and hope that I will be on God's side."

In a way, betting on deep learning feels like being on the side of the angels, and although you'll encounter some big stumbling blocks along the way, you'll eventually find that it always seems to work out.

Therefore, having a deep belief in this is very beneficial to the team's morale.

Q18: Can I ask a really weird question? I heard a great quote recently, "The heaviest things in life are not iron or gold, but the decisions left unmade." Which unmade decision bothers you the most?

A: The decisions I make (and fail to make) vary every day, but none of them are “big” decisions.

There are big ones, like whether or not to bet on the next product, or whether to build our next computer one way or another. Those are high-stakes, one-way doors, and on those I procrastinate like everyone else.

But mostly, what's difficult is that every day something comes up where I have to say yes or no even though the call is 51:49. These things are inherently 51:49, and I don't think I can decide them better than others would, but I have to make the final decision.

Q18': When you have to make decisions on these 51:49 matters, who do you usually call?

A: No specific person.

I think it would be wrong to rely on one person for everything. The right approach for me is to have 15 or 20 people advising me, each of whom has good intuition or background in a particular area.

Instead of relying on one person for everything, you can call the best experts in the field.

Q19: Next I want to talk about the semiconductor supply chain. How concerned are you about the semiconductor supply chain?

A: I don’t know how to quantify this concern.

I am admittedly concerned about it. It is not my top concern, but it is in the top 10% of all my concerns.

We're in too much trouble to worry about it.

To some extent, I thought it would all work out, but right now it feels like there's a very complex system with each level operating independently. The same is true within OpenAI, and this is true within any team.

Take semiconductors as an example: you have to balance power availability, making good networking decisions, and getting enough chips in time, while also having the research ready to meet all of that, so that you are neither caught completely off guard nor left holding a system you cannot use.

“Supply chain” sounds too much like a pipeline, but the complexity of the entire ecosystem at each level is something I’ve never seen before in any industry.

Well, that's probably my biggest concern.

Q20: Many people compare this wave of AI to the Internet, especially in terms of excitement and enthusiasm. I think the amount of money being spent is quite different between the two. Larry Ellison (founder of Oracle) said it would cost $100 billion just to get to the starting line of the foundation model race. Do you agree with that? Is this reasonable?

A: No, I think it will cost less.

The cost to compete in the foundation model space will be less than $100 billion.

An interesting phenomenon is that everyone likes to use previous technological revolutions as examples to talk about new technologies. I think this is a bad habit, but I understand why people do it.

In my opinion, the analogies people use for AI are particularly bad. The Internet and AI are obviously very different.

You mentioned one thing about cost and whether you need to spend $100 billion to compete, but the characteristic of the Internet revolution is that it was really easy to start at that time, and for many companies, this wave is just a continuation of the Internet.

It's like someone makes these AI models and you can use them to build all kinds of great things; but if you're trying to build the AI itself, that's a completely different story.

Another example that people often use to compare AI is electricity. For many reasons, I don’t think that makes sense either.

My favorite analogy is the transistor.

It was a new physical discovery with incredible scaling properties that quickly spread everywhere.

We can now imagine things like Moore's Law, like a series of laws for artificial intelligence that tell us how quickly it will get better.

Everyone benefits from it, the entire tech industry benefits from it. There are a lot of transistors in products and services, but you don't really think of them as a transistor company.

It's a very complex, very expensive industrial process with a huge supply chain.

There has been huge long-term economic growth based on this very simple discovery of physics - even though most of the time you don't think about it.

You don't say "This is a transistor product", you just think "OK, this thing can process information for me".

You even ignore the existence of transistors as a matter of course.

Bonus: Quick Questions and Answers

Q1: If you were in your early 20s now, using our infrastructure today, what would you choose to do?

A: I would pick some AI-enabled vertical: an AI tutor, or the best AI lawyer, AI medical advisor, or anything similar that I could imagine.

Q2: If you were to write a book, what would you name it?

A: I don’t have a title ready yet, and I haven’t fully conceived the book except for some of what I want to write about.

But I think it will be about human potential.

Q3: In the field of AI, what is something that has not received enough attention, but that everyone should spend more time on?

A: Some kind of AI that can understand your entire life. I'd like to see a lot of different approaches to this problem.

It doesn't really have to be infinite context, but in some way you can have an AI agent that knows everything about you and has access to all your data.

Q4: What surprised you last month, Sam?

A: A research result I can't talk about, but it's shockingly good.

Q5: Which competitor do you respect the most? Why them?

A: I would say that I respect everyone in this field right now.

I think this whole field is filled with very talented, very hard-working people.

I'm not dodging the question; I really can point to super talented people doing super impressive work everywhere.

Q6: Tell me, what is your favorite OpenAI API?

A: I think the new Realtime API is great. We have a huge API business now with a lot of good stuff.

Q7: Who do you respect the most in the AI field today?

A: Let me salute the Cursor team.

There are a lot of people doing incredible work in AI, but in terms of using AI to deliver truly amazing experiences that create a ton of value, Cursor is putting the pieces together in a way that people hadn't thought of before, and I think that is pretty amazing.

This answer excludes people from OpenAI, otherwise I would have to name a long list.

Q8: How do you see the trade-off between latency and accuracy?

A: You need a dial between latency and accuracy. We're doing rapid-fire questions right now, so I'm not trying to answer instantly, but I'm also trying not to take too long.

In this case, what you want is (lower) latency. If you wanted to make new important discoveries in physics, you would be willing to wait a few more years.

The answer is that the user should control this trade-off.

Q9: I believe everyone has insecurities about their leadership. As the leader and CEO of OpenAI, which aspect of your leadership do you most want to improve?

A: It’s a long list… I’m trying to figure out which one is number one.

The thing that bothers me most this week is that I'm even more unsure about the details of our product strategy than I have been in the past.

I think the product in general is my weakness, and now the company needs me to have a stronger, clearer vision in this area.

We have a great product lead and a great product team, but I wish I was stronger in this area.

Q10: You hired Kevin Weil (to serve as CPO). I've known Kevin for many years and he's great. What makes you think Kevin is a world-class product leader?

A: “Discipline” is the first word that comes to mind.

It means focus: deciding what we say "no" to, speaking on behalf of the user about why we would or wouldn't do something, and being rigorous about not indulging in fantasies.

Q11: Sam, you've done a lot of interviews.

Finally, I want to talk about your five-year and ten-year outlook for OpenAI.

A: If we're right, we could easily start building systems within the next two years to help science advance.

Five years from now, OpenAI’s technological progress will be incredibly fast and can be described as crazy.

The second point is that society itself will actually change very little.

It's like this: if you had asked people five years ago whether a computer would pass the Turing test, they would all have shaken their heads.

And if you had told them an oracle said it would happen, they would have said, oh, that will bring crazy, amazing social change.

Now, we have indeed passed the Turing test, and society has not changed much.

Everything just whizzed by.

What I expect to keep happening is progress, scientific progress, moving forward, exceeding all expectations in a way that I think is good and healthy, while society doesn't change that much.