OmniParser, a screen content parsing tool recently released by Microsoft, this week topped the list of the most popular models on the open source artificial intelligence platform Hugging Face. According to Clem Delangue, co-founder and CEO of Hugging Face, it is the first parsing tool of its kind to reach the top of that list.

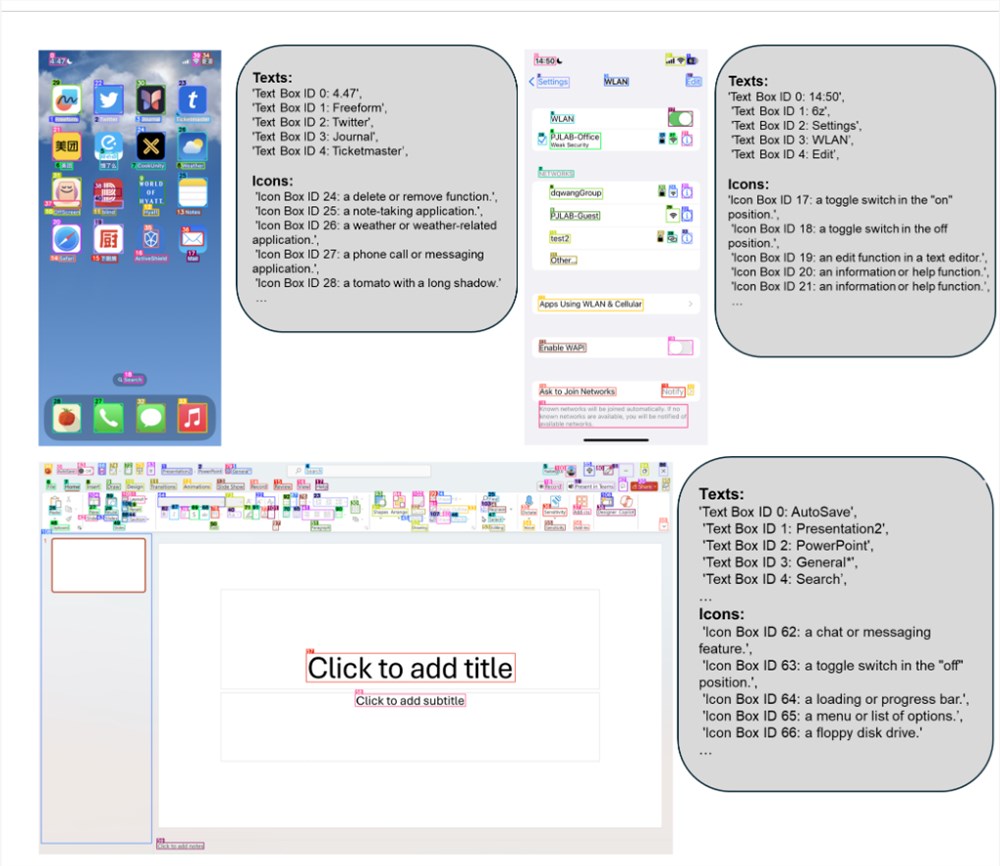

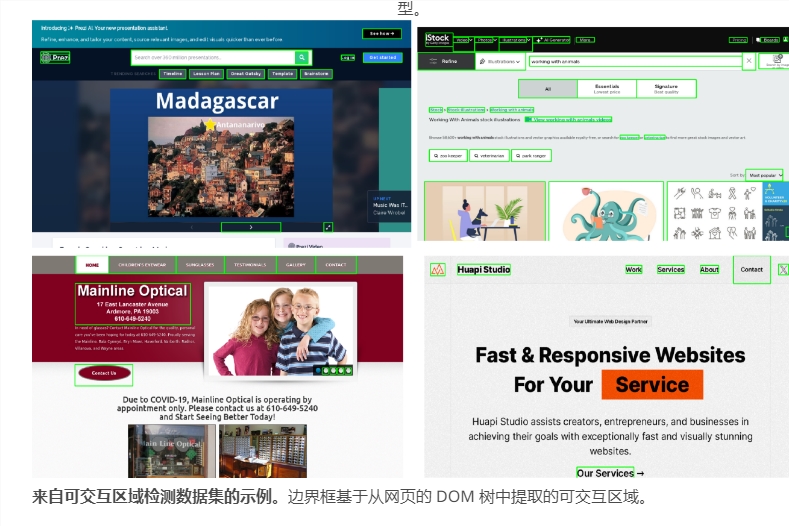

OmniParser converts screenshots into structured data so that other systems can better understand and process graphical user interfaces. The tool combines several models: YOLOv8 detects the positions of interactive elements, BLIP-2 describes what each element is for, and an optical character recognition (OCR) module extracts text. Together they produce a comprehensive analysis of the interface.
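The division of labor described above can be sketched roughly as follows. This is only an illustration, not OmniParser's actual code: `UIElement`, `parse_screen`, and the three callables `detect`, `describe`, and `read_text` are hypothetical names standing in for the detection, description, and OCR stages.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Bounding box as (x1, y1, x2, y2) in pixels; this layout is an assumption.
Box = Tuple[int, int, int, int]


@dataclass
class UIElement:
    """One entry in the structured output for a screenshot."""
    box: Box          # where the element is on screen
    description: str  # what the element is for
    text: str         # any text read from the element


def parse_screen(
    screenshot: object,
    detect: Callable[[object], List[Box]],
    describe: Callable[[object, Box], str],
    read_text: Callable[[object, Box], str],
) -> List[UIElement]:
    """Run the three hypothetical stages and merge their results per element."""
    elements = []
    for box in detect(screenshot):
        elements.append(
            UIElement(
                box=box,
                description=describe(screenshot, box),
                text=read_text(screenshot, box),
            )
        )
    return elements
```

Passing the stages in as plain functions keeps the sketch independent of any particular model library, so each stage could be swapped out without touching the merging logic.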

The open source tool is broadly compatible and works with many mainstream vision models. Ahmed Awadallah, Partner Research Manager at Microsoft, emphasized that open collaboration is crucial to advancing the technology, and that OmniParser puts this idea into practice.

Major technology companies are already staking out positions in screen interaction. Anthropic released a closed-source solution called Computer Use, and Apple launched Ferret-UI for mobile interfaces. By contrast, OmniParser stands out for its cross-platform versatility.

OmniParser still faces some technical challenges, however, such as recognizing repeated icons and positioning elements precisely where text overlaps. The open source community generally expects these problems to be resolved as more developers contribute improvements.
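To see why overlapping regions are hard to handle, consider a common general-purpose technique for spotting near-duplicate detections: comparing boxes by intersection over union (IoU). The sketch below is not taken from OmniParser; the function names, the box layout, and the `0.9` threshold are all assumptions chosen for illustration.

```python
from typing import List, Tuple

# Bounding box as (x1, y1, x2, y2); this layout is an assumption.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def drop_duplicates(boxes: List[Box], threshold: float = 0.9) -> List[Box]:
    """Keep a box only if it does not almost coincide with one already kept."""
    kept: List[Box] = []
    for box in boxes:
        if all(iou(box, k) < threshold for k in kept):
            kept.append(box)
    return kept
```

A high threshold removes only boxes that nearly coincide. Partially overlapping text and icons fall below it and are all kept, which is exactly the case where precise positioning remains difficult.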

OmniParser's rapid rise in popularity reflects developers' urgent need for general-purpose screen interaction tools and suggests that the field may be poised for rapid growth.

Project page: https://microsoft.github.io/OmniParser/