The editor of Downcodes has learned that Hugging Face has released SmolLM2, a series of compact language models that deliver strong performance while requiring far fewer computing resources than large models. This is good news for developers who want to deploy AI applications on resource-constrained devices. SmolLM2 is released under the Apache 2.0 license and comes in three parameter sizes, so it can be matched to a range of application scenarios.

Hugging Face today released SmolLM2, a new set of compact language models that achieve impressive performance while requiring far fewer computational resources than larger models. The new models are released under the Apache 2.0 license and come in three sizes (135M, 360M and 1.7B parameters), making them suitable for deployment on smartphones and other edge devices with limited processing power and memory.
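To give a rough sense of why these sizes matter for memory-limited devices, the sketch below estimates how much memory the weights alone would occupy at several numeric precisions. It is a back-of-the-envelope calculation, not a figure from Hugging Face: it assumes the nominal parameter counts quoted above (the exact counts may differ slightly), assumes every weight is stored at the same precision, and ignores activations, the KV cache and runtime overhead. The names `PARAM_COUNTS`, `BYTES_PER_PARAM`, `weight_memory_gib` and `footprint_table` are made up for this illustration.

```python
# Weights-only memory estimate for the three SmolLM2 sizes.
# Assumptions: nominal parameter counts from the article, uniform precision,
# no activations, KV cache, or runtime overhead included.

PARAM_COUNTS = {"135M": 135e6, "360M": 360e6, "1.7B": 1.7e9}

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Return the weight storage size in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


def footprint_table() -> dict:
    """Map each model size to its estimated footprint per precision, in GiB."""
    return {
        size: {
            precision: round(weight_memory_gib(count, nbytes), 2)
            for precision, nbytes in BYTES_PER_PARAM.items()
        }
        for size, count in PARAM_COUNTS.items()
    }


if __name__ == "__main__":
    for size, row in footprint_table().items():
        cells = ", ".join(f"{precision}: {gib:.2f} GiB" for precision, gib in row.items())
        print(f"SmolLM2-{size}: {cells}")
```

Under these assumptions, even the largest model needs only a few GiB for its weights at 16-bit precision, and the two smaller models stay well under 1 GiB, which is consistent with the claim that they can fit on phones and other edge hardware.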

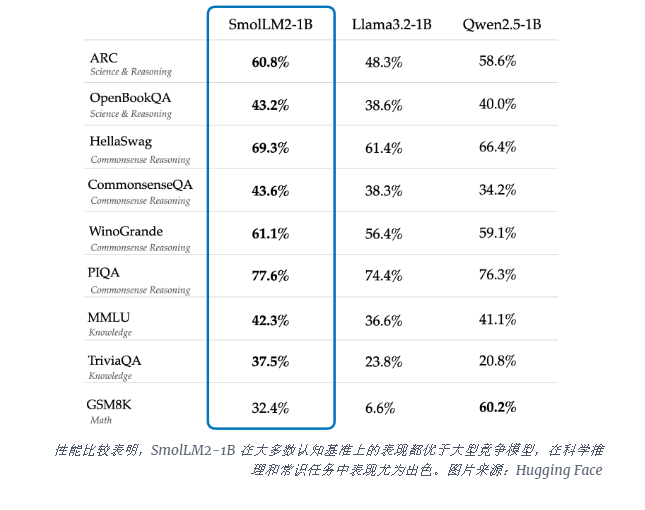

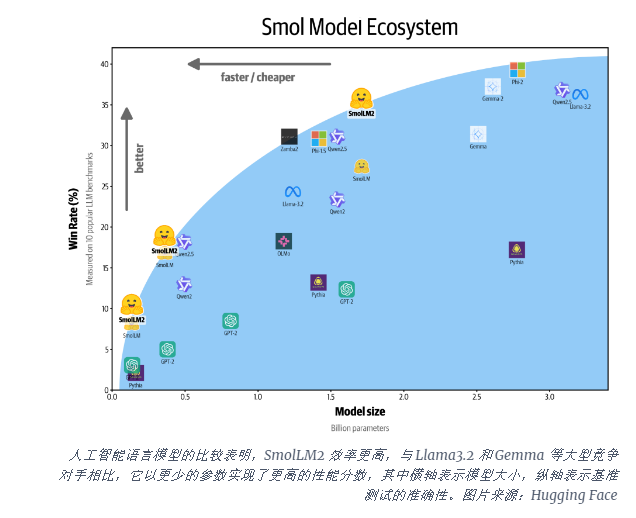

The 1.7B-parameter SmolLM2 model outperforms Meta's Llama 1B model on several key benchmarks, performing particularly well on scientific reasoning and common-sense tasks. It also outperforms larger competing models on most cognitive benchmarks. The models were trained on a diverse combination of datasets, including FineWeb-Edu and specialized math and coding datasets.

The release of SmolLM2 comes at a critical time, as the AI industry grapples with the computational demands of running large language models (LLMs). While companies like OpenAI and Anthropic continue to push the boundaries of model scale, there is growing recognition of the need for efficient, lightweight AI that can run locally on devices.

SmolLM2 takes a different approach, bringing powerful AI capabilities directly to personal devices and pointing to a future where advanced AI tools are available to more users and companies, not just tech giants with massive data centers. The models support a range of applications, including text rewriting, summarization and function calling, and are suited to scenarios where privacy, latency or connectivity constraints make cloud-based AI solutions impractical.

While these smaller models still have limitations, they are part of a broader trend toward more efficient AI. The release of SmolLM2 suggests that the future of artificial intelligence may belong not only to ever-larger models, but also to more efficient architectures that deliver strong performance with fewer resources.

The emergence of SmolLM2 opens up new possibilities for lightweight AI applications and suggests that AI technology will become more widely accessible and benefit more users. The editor of Downcodes expects more efficient AI models with low resource consumption to appear in the future and further advance the development of artificial intelligence.