The development of deep learning in the field of robot control is limited by the lack of large-scale data patterns. A research team from Tsinghua University recently made a breakthrough. Through an efficient data collection strategy, it collected enough data in just one afternoon, achieving a 90% success rate for the robot strategy in new environments and new objects. The editor of Downcodes will take you to understand the results of this research and the data scaling rules behind it.

The rapid development of deep learning is inseparable from large-scale data sets, models and calculations. In the fields of natural language processing and computer vision, researchers have discovered a power-law relationship between model performance and data size. However, the field of robotics, especially the field of robot control, has not yet established similar rules of scale.

A research team from Tsinghua University recently published a paper exploring the rules of data scaling in robot imitation learning, and proposed an efficient data collection strategy that collected enough data in just one afternoon, making the strategy Able to achieve approximately 90% success rate on new environments and new objects.

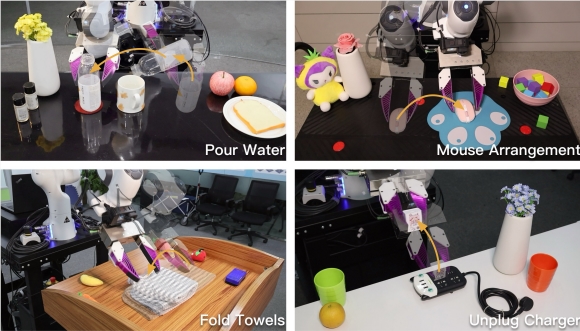

The researchers divided generalization ability into two dimensions: environment generalization and object generalization, and used a handheld gripper to collect human demonstration data on various environments and different objects, and modeled these data using a diffusion strategy. The researchers first focused on two tasks: pouring water and mouse placement. By analyzing how the performance of the strategy on new environments or new objects changes with the increase in the number of training environments or objects, they summarized the rules of data scaling.

Research results show:

The ability of a policy to generalize to new objects, new environments, or both, has a power-law relationship with the number of training objects, training environments, or training environment-object pairs, respectively.

Increasing the variety of environments and objects is more effective than increasing the number of demonstrations of each environment or object.

By collecting data in as many environments as possible (for example, 32 environments), with a unique operating object and 50 demonstrations in each environment, a strategy with strong generalization ability (success rate 90%) can be trained, so that It can run on new environments and new objects.

Based on these data scaling rules, the researchers proposed an efficient data collection strategy. They recommend collecting data in as many different environments as possible, using only one unique object in each environment. When the total number of environment-object pairs reaches 32, it is usually sufficient to train a policy that can operate in new environments and interact with previously unseen objects. For each environment-object pair, it is recommended to collect 50 demos.

To verify the general applicability of the data collection strategy, the researchers applied it to two new tasks: folding a towel and unplugging a charger. The results show that this strategy can also train strategies with strong generalization ability on these two new tasks.

This study shows that with a relatively modest investment of time and resources, it is possible to learn a single-task policy that can be deployed to any environment and object with zero-shot deployment. To further support researchers' efforts in this area, the Tsinghua team released their code, data, and models in the hope of inspiring further research in the field and ultimately realizing universal robots capable of solving complex, open-world problems.

Paper address: https://arxiv.org/pdf/2410.18647

This research provides valuable experience for the data scaling rules in the field of robot control, and efficient data collection strategies also provide new directions for future research. The Tsinghua University team’s open source code, data and models will further promote the development of this field and ultimately achieve more powerful general-purpose robots.